Evalml: Patching on AutoMLSearch tests failing

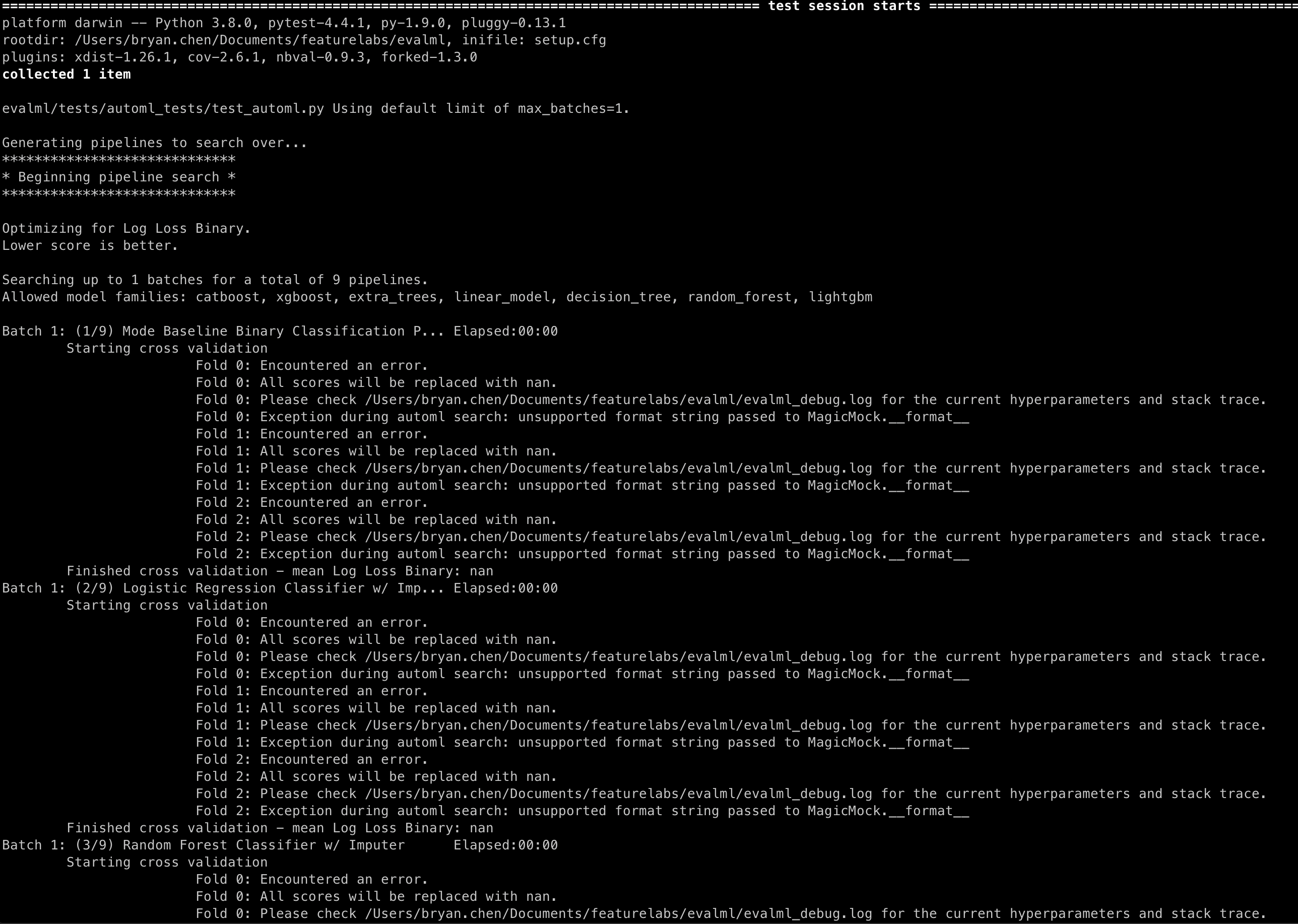

Patching pipelines .score() and .fit() methods are causing the following messages in AutoMLSearch tests:

Repro

checkout main

pytest -s evalml/tests/automl_tests/test_automl.py::test_automl_rerun

All 4 comments

@bchen1116 If you add a return_value to the score mock it should go away:

@patch('evalml.pipelines.BinaryClassificationPipeline.score', return_value={"Log Loss Binary": 0.2})

@freddyaboulton is this something we need to add to all of the .score() mocks? And would this be the best solution for this bug?

I guess I hesitate to call this a bug because it doesn't change the behavior of automl in such a way that it causes the test to pass when it should fail or vice versa.

The underlying issue is that if you don't provide a return_value, a MagicMock is returned. Eventually we go to log the score with an f-string:

logger.info(f"Best pipeline {self.objective.name}: {best_pipeline['score']:3f}")

Which uses the __format__ method for the MagicMock, which I guess is not defined.

Maybe if we change the way the logging is called, we can avoid the ugly log but I worry that would complicate the code for little benefit.

What do you think?

Ah I see, that makes sense. I was unsure where that message was coming from. I think the behavior is fine then, since it doesn't seem to be detrimental to the behavior of AutoML. Thanks for the help, i'll close this out!