Moby: New feature request: Selectively disable caching for specific RUN commands in Dockerfile

branching off the discussion from #1384 :

I understand -no-cache will disable caching for the entire Dockerfile. But would be useful if I can disable cache for a specific RUN command? For example updating repos or downloading a remote file .. etc. From my understanding that right now RUN apt-get update if cached wouldn't actually update the repo? This will cause the results to be different than from a VM?

If disable caching for specific commands in the Dockerfile is made possible, would the subsequent commands in the file then not use the cache? Or would they do something a bit more intelligent - e.g. use cache if the previous command produced same results (fs layer) when compared to a previous run?

All 245 comments

I think the way to combat this is to take the point in the Dockerfile you do want to be cached to and tag that as an image to use in your future Dockerfile's FROM, that can then be built with -no-cache without consequence, since the base image would not be rebuilt.

But wouldn't this limit interleaving cached and non-cached commands with ease ?

For e.g. lets say I want to update my repo and wget files from a server and perform bunch of steps in between - e.g. install software from the repo (that could have been updated) - perform operations on the downloaded file (that could have changed in the server) etc.

What would be ideal is for a way to specify to docker in the Dockerfile to run specific commands without cache every time and the only reuse previous image if there is no change (for e.g no update in repo).

Wouldn't this be useful to have ?

What about CACHE ON and CACHE OFF in the Dockerfile? Each instruction would affect subsequent commands.

Yeah, I'm using git clone commands in my Dockerfile, and if I want it to re-clone with updates, I need to, like, add a comment at the end of the line to trigger a rebuild from that line. I shouldn't need to create a whole new base container for this step.

Can a container ID be passed to 'docker build' as a "do not cache past this ID" instruction? Similar to the way in which 'docker build' will cache all steps up to a changed line in a Dockerfile?

I agree we need more powerful and fine-grained control over the build cache. Currently I'm not sure exactly how to expose this to the user.

I think this will become easier with the upcoming API extensions, specifically naming and introspection.

Would be a great feature. Currently I'm using silly things like RUN a=a some-command, then RUN a=b some-command to break the cache

Getting better control over the cache would make using docker from CI a lot happier.

@shykes

What about changing --no-cache from a bool to a string and have it take a regex for where in the docker we want to bust the cache?

docker build --no-cache "apt-get install" .

I agree and suggested this exact feature on IRC.

Except I think to preserve reverse compatibility we should create a new flag (say "--uncache") so we can keep --cached as a (deprecated) bool flag that resolves to "--uncache .*"

On Fri, Feb 7, 2014 at 9:17 AM, Michael Crosby [email protected]

wrote:

@shykes

What about changing--no-cachefrom a bool to a string and have it take a regex for where in the docker we want to bust the cache?

docker build --no-cache "apt-get install" .Reply to this email directly or view it on GitHub:

https://github.com/dotcloud/docker/issues/1996#issuecomment-34474686

What does everyone else think about this? Anyone up for implementing the feature?

I'm up for having a stab at implementing this today if nobody else has started?

I've started work on it - wanted to validate the approach looks good.

- The

noCachefield ofbuildfilebecomes a*regexp.Regexp.

- A

nilvalue there means whatutilizeCache = trueused to.

- A

- Passing a string to

docker build --no-cachenow sends a validate regex string to the server. - Just calling

--no-cacheresults in a default of.* - The regex is then used in a new method

buildfile.utilizeCache(cmd []string) boolto check commands that ignore cache

One thing: as far as I can see, the flag/mflag package doesn't support string flags without a value, so I'll need to do some extra fiddling to support both --no-cache and --no-cache some-regex

I really think this ought to be a separate new flag. The behavior and syntax of --no-cache is already well defined and used in many, many places by many different people. I'd vote for --break-cache or something similar, and have --no-cache do exactly what it does today (since that's very useful behavior that many people rely on and still want).

Anyways, IANTM (I am not the maintainer) so these are just my personal thoughts. :)

@tianon --no-cache is currently bool, so this simply extends the existing behaviour.

docker build --no-cache- same behaviour as before: ignores cachedocker build --no-cache someRegex- ignores anyRUNorADDcommands that matchsomeRegex

Right, that's all fine. The problem is that --no-cache is a bool, so the existing behavior is actually:

--no-cache=true- explicitly disable cache--no-cache=false- explicitly enable cache--no-cache- shorthand for--no-cache=true

I also think we'd be doing ourselves a disservice by making "true" and "false" special case regex strings to solve this, since that will create potentially surprising behavior for our users in the future. ("When I use --no-cache with a regex of either 'true' or 'false', it doesn't work like it's supposed to!")

+1 for @wagerlabs approach

@crosbymichael, @timruffles Wouldn't it be better if the author of the Dockerfile decides which build step should be cached and which should not? The person that creates the Dockerfile is not necessarily the same that builds the image. Moving the decision to the docker build command demands detailed knowledge from the person that just want to use a specific Dockerfile.

Consider a corporate environment where someone just want to rebuild an existing image hierarchy to update some dependencies. The existing Dockerfile tree may be created years ago by someone else.

+1 for @wagerlabs approach

+1 for @wagerlabs approach although it would be even nicer if there was a way to cache bust on a time interval too, e.g.

CACHE [interval | OFF]

RUN apt-get update

CACHE ON

I appreciate this might fly against the idea of containers being non deterministic, however it's exactly the sort of thing you want to do in a continuous deployment scenario where your pipeline has good automated testing.

As a workaround I'm currently generating cache busters in the script I use to run docker build and adding them in the dockerfile to force a cache bust

FROM ubuntu:13.10

ADD ./files/cachebusters/per-day /root/cachebuster

...

ADD ./files/cachebusters/per-build /root/cachebuster

RUN git clone [email protected]:cressie176/my-project.git /root/my-project

I'm looking to use containers for continuous integration and the ability to set timeouts on specific elements in the cache would be really valuable. Without this I cannot deploy. Forcing a full rebuild every time is much too slow.

My current plan to work around this is to dynamically inject commands such as RUN echo 2014-04-17-00:15:00 with the generated line rounded down to the last 15 minutes to invalidate cache elements when the rounded number jumps. ala every 15 minutes. This works for me because I have a script generating the dockerfile every time, but it won't work without that script.

+1 for the feature.

I also want to vote for this feature. The cache is annoying when building parts of a container from git repositories which updates only on the master branch.

:+1:

@hiroprotagonist Having a git pull in your ENTRYPOINT might help?

@amarnus I've solved it similar to the idea @tfoote had. I am running the build from a jenkins job and instead of running the docker build command directly the job starts a build skript wich generates the Dockerfile from a template and adds the line 'RUN echo currentsMillies' above the git commands. Thanks to sed and pipes this was a matter of minutes. Anyway, i still favor this feature as part of the Dockerfile itself.

Adding my +1 for @wagerlabs approach. Also having this issue with CI. I'm simply using a dynamic echo RUN statement for the time being, but I would love this feature.

+1 for CACHE ON/OFF. My use case is also CI automation.

+1, especially the ability to set a run commands cache interval like in @cressie176 's example

"For example updating repos or downloading a remote file"

+1

If it helps anyone, here's the piece of code I'm using in my Jenkins build:

echo "Using build $BUILD_NUMBER for docker cachebusting"

sed -i s/cachebust_[0-9]*/cachebust_"$BUILD_NUMBER"/g Dockerfile

+1 for CACHE ON/OFF

As a possible alternative to the CACHE ON/OFF approach, what about an extra keyword like "ALWAYS". The keyword would be used in combination with an existing command (e.g. "ALWAYS RUN" or "ALWAYS ADD"). By design, the "ALWAYS" keyword does not go to the cache to complete the adjacent command. However, it compares the result to the CACHE (implicitly the cache for other times the same line was executed), linking to the cached image if the result of the ALWAYS command is unchanged.

I believe the underlying need is to identify "non-idempotent instructions". The ALWAYS command does this very explicitly. My impression is that the CACHE ON/OFF approach could work equally well, but could aso require lots of switching over blocks of code (which may encourage users to block off more lines than really required).

I am also more for a prefix to commands, like ALWAYS or CACHE 1 WEEK ADD ...

So I was struggling with this issue for a while and I just wanted to share my work around incase its helpful while this gets sorted out. I really didn't want to add anything outside of the docker file to the build invocation or change the file every time. Anyway this is a silly example but it uses the add mechanism to bust the cache and doesn't require any file manipulations.

From ubuntu:14.04

RUN apt-get -yqq update

RUN apt-get -yqq install git

RUN git clone https://github.com/coreos/fleet

ADD http://www.random.org/strings/?num=10&len=8&digits=on&upperalpha=on&loweralpha=on&unique=on&format=plain&rnd=new uuid

RUN cd fleet && git pull

Obviously you can pick your own use case and network random gen. Anyway maybe it will help some people out idk.

Another +1 for @wagerlabs approach

Another +1 to the feature. Meanwhile using @cruisibesarescondev workaround.

one more +1 for the feature request. And thanks to @cruisibesarescondev for the workaround

Another +1 for the feature.

Cheers @cruisibesarescondev for the workaround.

I think the ALWAYS keyword is a good approach, especially as it has simple clear semantics. A slightly more complicated approach would be to add a minimum time, (useful in things like a buildfarm or continuous integration). For that I'd propose a syntax "EVERY XXX" where XXX is a timeout. And if it's been longer than XXX since the cache of that command was built it must rerun the command. And check if the output has changed. If no change reuse the cached result, noting the last updated time. This would mean that EVERY 0 would be the same as ALWAYS.

For a workaround at the moment I generate my Dockerfiles using empy templates in python and I embed the following snippets which works as above except that does not detect the same result in two successive runs, but does force a retrigger every XXX seconds. At the top:

@{

import time

def cache_buster(seconds):

ts = time.time()

return ts - ts % seconds

}@

Where I want to force a rerun:

RUN echo @(cache_buster(60))

Which looks like this in the Dockerfile

RUN echo 1407705360.0

As you can see it rounds to the nearest 60 so each time 60 seconds pass the next run will rerun all following commands.

+1 for ALWAYS syntax. +.5 for CACHE ON/CACHE OFF.

+1 for ALWAYS syntax.

Yes, ALWAYS syntax looks very intuitive.

I don't like CACHE ON/OFF because I think lines should be "self contained" and adding blocks to Dockerfiles would introduce a lot of "trouble" (like having to check "is this line covered by cache?" when merging...).

@kuon I think there are already a number of commands that affect subsequent instructions, e.g. USER and WORKDIR

Yeah, that's true, but I don't use them for the same reason. I always do RUN cd ... && or RUN su -c ...&&.

I'd prefer a block notation:

CACHE OFF {

RUN ...

}

This is more explicit and avoid having a CACHE OFF line inserted by mistake (it would trigger a syntax error).

I might be overthinking it, Dockerfiles are not actually run in production (just when building the image), so having the cache disabled when you build won't actually do much harm. But I also feel Dockerfiles are really limiting (having to chain all commands with a && in a single RUN to avoid creating a gazillion of images, not being able to use variables...).

Maybe this issue is the opportunity for a new Dockerfile format.

I'd like to come back on what I just said. I read what @shykes said in another issue https://github.com/docker/docker/pull/2266 and I also agree with him (Dockerfile need to stay a really simple assembly like language).

I said I'd like variable or things like that, but that can be covered by some other language, but in this case, each line in a Dockerfile should be self contained, eg:

NOIMAGE ALWAYS RUN USER:jon apt-get update

Which would always run the command (no cache), but would also not create an image and use the user jon.

This kind of self contained line are much easier to generate from any other language. If you have to worry about the context (user, cache, workdir), it's more error prone.

Can it be RUN! for ease, please?

Any status update on this one?

Selectively disabling the cache would be very useful. I grab files from a remote amazon s3 repository via the awscli command (from the amazon AWS toolkit), and I have no easy way to bust the cache via an ADD command (at least I can't think of a way without editing the Dockerfile to trigger it). I believe there is a strong case for control to be given back to the user to selectively bust the cache when using RUN. If anyone has a suggestion for me I'd be happy to hear from you.

Wanted to bump this issue up a bit since it's something that we have a big need for.

Still convinced ALWAYS syntax is the ideal one.

How about a simple BREAK statement.

@cpuguy83 that would work also for my particular use case.

I am not sure if it's technically possible to have only one command not be cached, but the rest of them to be cached. Probably not since docker is based on incremental diffs.

Having support for BREAK though would give me feature parity with my current workaround based on the suggestion by @CheRuisiBesares.

Regarding my previous post, it would indeed be sufficient to just bust the cache from that point in the script onwards, the rest would just be down to intelligent script design (and I believe this would address most people's requirements). Is this doable instead of selectively disabling cache bust?

@orrery You could probably "bust" the cache by adding a COPY _before_ that build-step. If the copied file(s) are different, all steps after that should no longer use the cache (see this section). Dirty trick, but may solve your case.

A key to ALWAYS (or similar concepts like EVERY # DAYS) is the cache comparison after the attached command. For myself (and I assume many others), the goal isn't to bust the cache per se.

- The goal is to ensure we stop using the cache if and when the result of the command (i.e. "upgrade to the most recent version") changes.

- By contrast, if the result matches a cached version, we want to take advantage of the cache.

This addresses the comment by @hellais since you can take advantage of cache for subsequent commands... if and only if the output of ALWAYS matches a cached version (this could easily be the majority of the time).

Naturally, the same logic _could_ be included in a CACHE ON/OFF model. The comparison with cache is likely to be cheaper than rerunning all subsequent commands, but could still be expensive. If a CACHE ON/OFF block encouraged a user to include extra commands in an OFF block (something that cannot happen with ALWAYS), it could contribute to significant differences in performance.

I'm in exactly the same situation as @tfoote: I'm using Docker for CI and need to force cache expiration.

+1 for EVERY syntax. The ALWAYS syntax would also get the job done.

@claytondaley that's a great point. However, it is still important to have the ability to completely disable caching for a command. There will always been hidden state that is inherently invisible to Docker. E.g. executing command may change state on a remote server.

@mkoval, you bring up a good point about _creating_ hidden states as an important time to use ALWAYS, but I don't think it affects my logic around resuming cache. To make the example concrete (if somewhat trivial), a command that upgrades a 3rd party system:

- Creates a hidden state (needs to be run

ALWAYS) and - Doesn't change the current container

If the next command does not involve a hidden state (trivially, a mv command on the container), the cache will be 100% reliable. Same container, same command, no dependency on hidden information.

If the next command (or any subsequent command) involves hidden information, then it should use the ALWAYS keyword, only resuming the cache if the resulting container matches the cache.

@claytondaley your solution seems very elegant and efficient to me. I would be very grateful if this were to be implemented. :+1: :octopus:

+1 for this feature using ALWAYS and EVERY X suggested syntax. CACHE ON/OFF feels a bit clumsy to me, but I would use it. I also really like @claytondaley's suggestion of resuming the cache where possible.

+1 for ALWAYS syntax. especially for pull codes from git repo.

+1 For any of these solutions.

I'm a bit confused. How can caching be turned back on once its been turned off? Once you turn it off and make any kind of change in the container, wouldn't turning caching back on basically toss out any changes made by those Dockerfile commands that ran while caching was turned off? I thought the entire reason we could do caching was because we knew exactly the full list of previous commands that were run and could guarantee what was in the container was the exact same. If you turn off caching (and I'm talking about the look-up side of it) doesn't that blow away that guarantee? Or is this just about not populating the cache?

My understanding of the suggestions is that you can specify "ALWAYS" as part of a Dockerfile command to always run the step again. For example "RUN ALWAYS git clone https://example.com/myrepo.git" will always run (thereby always cloning the repo). Then what @claytondaley is suggesting is that after this command is run again, Docker checks the changes against the cache. If the checksum is the same (i.e. if the cloned repo had no changes, so the newest layer is identical to the same layer in the cache), we can continue with the cache on. You're right that once the cache is invalidated, all steps afterwards cannot use the cache. These suggestions just enable more granularity of control over when to use the cache, and is also smart about resuming from the cache where possible.

@curtiszimmerman... exactly

@duglin... The idea may be more obvious if we use a mathematical proxy. Cache (in this context) is just memory of the result of action B when applied to state A so you don't have to reprocess it. Assume, I run a sequence of commands:

- start with

6 - ALWAYS run

* xwhere the value ofxis downloaded from a git repo (and thus could change) - run

+ 12

The first time I run the command, x is 8 so I get (and cache) the following sequence:

648(as the result of* xapplied to6)60(as the result of+ 12applied to48)

If my machine ever reaches state 48 again (by any sequence)... and is given the command + 12, I don't have to do the processing again. My cache knows the outcome of this command is 60.

The hard part is figuring out when you're in the same state (48) again.

- We could theoretically compare the machine after every command to every other cached image, but this is resource intensive and has a very low odds of finding a match.

- My proposal is to keep it simple. Every time we are in a state (e.g.

6) and hit a command (e.g.* x) we compare the result to the cache from the last time (or times) we were in the same state running the same command. If the state of the machine after this process is the same (e.g. still48), we resume the cache. If the next command is still+ 12, we pull the result from the cache instead of processing it.

@claytondaley but I don't understand how you determine the current state. As you said, we're not comparing all of the files in the container. The way the cache works now is to basically just strcmp the next command we want to run against all known children containers from the current container. The minute you skip a container in the flow I don't see how you can ever assume your current container is like any other cached container w/o checking all files in the filesystem. But perhaps I don't grok what you're doing.

Let me rephrase it.... given a random container (which is basically what you have if you're not using the cache) how can you find a container in the cache that matches it w/o diffing all of the files in the container?

@claytondaley @duglin Determining whether a "no cache" operation can be cached due to no change is a Hard Problem, as you've described. It's also more of a nice-to-have rather than strictly necessary.

Personally, I would be more than happy if all I had was the ability to ensure a command always runs. Take a Dockerfile like:

RUN install_stuff_take_forever

RUN always_do_it #will not run every time

RUN more_stuff

Currently, the always_do_it line will only run the first time, unless I edit the text to force a cache bust. I think most of us would be happy to accept that more_stuff will sometimes run unnecessarily (when always_do_it hasn't changed, if in exchange we can keep the cache for install_stuff_take_forever.

RUN install_stuff_take_forever

NOCACHE

RUN always_do_it

RUN more_stuff

@pikeas I totally get a NOCACHE command and that's easy to do. What I don't get is a command that turns it back on w/o diffing/hashing/whatever the entire filesystem.

I read the "layer" explanation of Docker to mean that:

- Docker creates a "layer" for each command.

- That layer includes only the files changed (or possibly "saved" whether changed or unchanged) by that command.

- The current state of the file system is logically (if not operationally) achieved by checking each layer in order until it finds a (the most recently updated) version of that particular file.

In this case, a comparison of two instances of the same command is relatively cheap. You need only compare the top layer (since every underlying layer is shared). There's a specific list of files that were changed by the command. Only those files are included in the layer. Granted... you'd need to compare all the files in that layer... but not the entire file system.

It's also possible (although not guaranteed to be exhaustive) to only compare the new layer to the last time the command was run:

- In most cases (git pull or software upgrade), the current version will either be (1) the same as the last versions or (2) a new version... but never -- at least rarely -- a rollback to a previous version.

- In rare cases (like upgrading to dev-master and then reverting to a stable version), it's possible to switch back to an older version. However, these are pretty rare so that most people would probably be better off (frequently) checking only the most recent version and rerunning commands in the rare instance they do rollback.

Of course, you could also do a hash check on all previous versions... followed by a full file check... to offer full support without the overhead.

if you look at the bottom of https://github.com/docker/docker/pull/9934 you'll see a discussion about supporting options for Dockerfile commands. What if there was a --no-cache option available on all (or even just RUN) that meant "don't use the cache" for this command? e.g.:

RUN --no-cache apt-get install -y my-favorite-tool

This would then automatically disable the cache for the remaining commands too (I think).

Would this solve the issue?

Between "RUN ALWAYS" and "RUN --no-cache" which are semantically identical, I would personally prefer the more natural-looking "RUN ALWAYS" syntax. I agree with the last comment on that PR: I think --option breaks readability and will make Dockerfiles ugly. Additionally, Dockerfile commands I think will need to be very distinct versus the actual commands which follow them. Imagine something like "RUN --no-cache myapp --enable-cache" for one example of convoluted syntax which would start to express itself quickly with that kind of option.

@curtiszimmerman your example is very clear to me. --no-cache is for RUN while --enable-cache is for myapp. Placement matters. For example, look at:

docker run -ti ubuntu ls -la

people understand that -ti is for 'run' while '-la' is for 'ls'. This is a syntax people seems to be comfortable with.

One the issues with something like RUN ALWAYS is extensibility. We need a syntax that can work for all Dockerfile commands and support passing in value. For example, people have expressed an interest in specifying the USER for certain commands.

RUN USER=foo myapp

is technically setting an env var USER to 'foo' within myapp's shell. So we're ambiguous here.

While: RUN --user=foo myapp doesn't have this issue.

Is: RUN var=foo myapp

trying to set and env var called 'var' or a typo trying to get some RUN option?

IOW, the odds of overlapping with an existing valid command, IMO, are far less when we start things with -- than just allowing a word there

I actually advocate the reverse sequence, EVERY <options> COMMAND. Several reasons:

- In the case of "user" and "cache" (at least), they're really environment characteristics that could wrap any COMMAND (though they may not materially affect others).

- The syntax

RUN ALWAYSmeans changing theRUNcommand interpreter, which sounds unnecessary. - This problem is even worse with

RUN EVERY 5 daysbecause the options attached to EVERY create even more ambiguity.EVERY 5 days RUNis clear about the command the options affect. We have the same issue withRUN USER usrvs.USER usr RUN. So long as either (1) command keywords are never options or (2) there's an easy way to escape them, this is unambiguous.

I could get on board with prefixing the commands with their options (ALWAYS AS user RUN ...). I'm just really concerned about using GNU-style longopts for options because they aren't very separate to old or glazed-over eyes. I can imagine myself staring at a complex Dockerfile command after 20 hours wondering wtf is going on. But I predict --options are going to happen regardless.

But I predict --options are going to happen regardless.

Nothing is decided yet, on the contrary; the syntax @duglin is suggesting is a _counter proposal_ to a syntax that was proposed/decided on earlier. Please read #9934 for more information on that.

Also, @duglin is _not_ the person making that decision (at least, not alone). Some of the points you're raising have been mentioned in the other thread.

I share your concern about readability, but also think the other syntaxes that were proposed could have the same problem if multiple options have to be specified.

This problem can possibly be overcome by formatting the Dockerfile for readability. I think it would be good to write some more examples to test/check if readability is a concern when properly formatted.

And, yes, your input is welcome on that.

I'm still very -1 on letting the Dockerfile itself define where the cache

should and shouldn't be applied. I have yet to see a good example of a

Dockerfile that couldn't be rewritten to cache-bust appropriately and

naturally when the underlying resource needed to be updated.

Having a flag on "docker build" to stop the cache in a particular place

would be much more flexible, IMO (and put the control of the cache back

into the hands of the system operator who gets to manage that cache anyhow).

+1 on @tianon's -1 (so that's a -1!), and adding a flag to break at step N seems reasonable. Considering that once the cache is broken, it's broken for the rest of the Dockerfile anyway, I think this makes sense.

The main need for this is because docker's caching mechanism is directly tied to the storage and transport of the image, which makes for efficient caching but at the trade-off of significantly larger images. So let's fix that!

W/o saying how I feel about this feature - not sure yet, to be honest - how do you guys envision someone saying (from "docker build") to stop at step N? Seems kind of brittle when today step N would be step N+1 tomorrow.

Seems like we may need a way to add a "label" of some kind within the Dockerfile so that people could reference that label from the build cmd line.

If we had that then I'm not sure I see much of a difference between that and adding a "STOP-CACHING" command that appears in the Dockerfile.

What's a good example of a Dockerfile cmd that will bust the cache each time?

Well, that's actually why it was originally discussed to make it a

line-content-based regexp, which I'd be fine with as well (especially since

that's a lot easier to script than knowing exactly which step number you

don't want cached -- no way I'm writing a full copy of the current

Dockerfile parser in Bash, thanks :D).

Tianon Gravi [email protected] wrote:

Well, that's actually why it was originally discussed to make it a

line-content-based regexp, which I'd be fine with as well (especially

since

that's a lot easier to script than knowing exactly which step number

you

don't want cached -- no way I'm writing a full copy of the current

Dockerfile parser in Bash, thanks :D).

Would like to restate my earlier suggestion, that ALWAYS/cache-breaking

"RUN" should just be "RUN!" to keep the 1 word command structure(?).

It seems kludgy to have to edit a Dockerfile (by adding something which is basically random because it's a placeholder) in order to break the cache on a specific step. I would use a docker build CLI option which always runs a certain step, but totally agree with @duglin that having to track down the specific line number in order to feed that to the command is unwieldy. I don't want to have to take the extra step of adding some random characters (!) to a Dockerfile immediately before my git clone just to prod Docker into actually cloning the repo instead of working from the cache.

@curtiszimmerman I suggested (!) because it indicates something akin to urgency in english. ("You should DO THIS!")

I think the Dockerfile is at least one appropriate place to define which commands should be un/cache-able. Having to build with "--no-cache=git" (I realize this isn't something you suggested, but you didn't suggest anything for me to quote/compare) seems more kludgy.

Why the focus on RUN? Why not allow the cache to be busted for any command?

Seems like adding a:

BUST-CACHE

type of Dockerfile command would be far more flexible. And to really add flexibility, it could optionally allow a flag:

BUST-CACHE $doit

where it only applies if $doit is define - then if we do add support for a -e option on build ( https://github.com/docker/docker/pull/9176 ) then people could do:

docker build -e doit=true ...

@zamabe Oh, I totally would use RUN!, sorry. Here I was using (!) to say "This is unusual!" about editing a Dockerfile every time I want to break the cache on a specific step. Any way I could bust the cache inside a Dockerfile before a specific step would be useful (and for extra win, if the step after that cache-busting command is the same result as what's in the cache, be smart enough to continue from the cache). BUST-CACHE or ALWAYS RUN (or RUN ALWAYS) or RUN!... Really any mechanism supporting this feature, I would use it.

@duglin Sorry? The bug title says RUN which is just easier to give as an example.

@curtiszimmerman ah.

As an aside; I think cache revalidation(?) is a bit beyond the cache invalidation this bug is looking for. Though I like what you're suggesting, I would just reorder my Dockerfile to put cache-busting commands as close to the end as possible. This negates the benefits gained from a _possible_ cache hit since you _always_ do the necessary computations/comparisons, which is probably a heavier penalty than finishing the Dockerfile build normally since people using cache-busting are probably hoping for/expecting a cache miss.

@zamabe Agreed. I suggest that if the implementation is fairly trivial to do this, perhaps a special command to continue from the cache, which is separate from the cache-busting identifier. Something like DISABLE-CACHE at a certain point to disable the cache every time, and if you have a use case where the rest of the Dockerfile would be expensive versus continuing from the cache, something like DISABLE-CACHE? would continue from the cache if possible. That is not a suggestion, just a demonstration to convey what I'm talking about.

+1 for pull codes from git repo

+1

This would be huge! Right now I have part of my continuous integration writing the git commit hash into the Dockerfile (overwriting a placeholder) just to break the cache for git clones.

I submitted this PR: https://github.com/docker/docker/pull/10682 to address this issue.

While it doesn't support turning caching back on, I don't think that's possible today.

+1

I'm generating a random number in the Dockerfile and it gets cached...

+1 for a NOCACHERUN instruction

+1

Should be really useful for some RUN we need to do each time without rebuilding everything

I've been noticing that git clone will hit the cache but go get -d will not. any ideas why?

_Collective review with @LK4D4 @calavera @jfrazelle @crosbymichael @tiborvass_

Closing this as we don't see that many real world use cases (see the related #10682 for more details).

+1 for RUN. Would be nice.

+1

docker 1.9 introduces build-time variables; it's possible to (mis-)use those to force breaking the cache; for more info, see https://github.com/docker/docker/issues/15182

How is this not yet a feature?

@hacksaw6 You can take a look at what was said here: https://github.com/docker/docker/pull/10682

+1

+1

+1 how is this not even a thing yet!!!???!

+1 We need this feature to provide more granular control for building.

+1

+1

+1

+1

+1 very useful

(using @timruffles workaround for now)

+1

+1

+1

it might be useful is folks posted their usecases instead of just +1 so that people can see why this is just a needed feature.

+1, arrived here via Google looking for a solution to a cached git clone.

My use case:

I have a docker configuration, which during its build will call via gradle a groovy microservice app in dry-run mode. This will result that all dependent java libraries (from a remote mvn repository) will be downloaded into the local docker mvn repository. The dry run will just run the app and returns immediately but ensures that all java library dependencies are loaded.

During the docker run phase the same app will be executed via gradle --offline mode. I.e the microservice app will just load from the local mvn repository directory. no expensive, time-consuming remote library fetch will take place. When I now release a new snapshot version of such a library, docker will not trigger a remote fetch during a build (i.e. he will not call my gradle dryrun cmd), unless I modify the docker directory.

My use case: Fetch the latest 3rd party version of a library to use on an image. I'm using docker hub for that and AFAIK, it will not cache anything. But who knows when that may change.

If there was such a command flag as NOCACHE in docker, it would guarantee that, no matter where the image is built.

It's worse to depend on a build system "feature" than on a latest version, IMHO.

How about adding a new syntax: FORCE RUN git clone ....?

Right now I am using RUN _=$(date) git clone ... to invalid the cache.

@c9s does that actually work? I don't think it does.

@duglin setting environment variable works for me. the "FORCE RUN" is just a proposal :]

@c9s I don't see how setting the env var could work since that's done by the container's shell, not the Dockerfile processing. When I try RUN _=$(date) echo hi it uses the cache on the 2nd build.

@duglin you're right :| it doesn't invalidate the cache

@c9s try this instead

FROM foo

ARG CACHE_DATE=2016-01-01

RUN git clone ...

docker build --build-arg CACHE_DATE=$(date) ....

@thaJeztah Thanks! it works!

+1 cloning git repos (use case)

So many +1s, if you pull the git repo in your docker file, cache keeps your images from building. Makes it kind of hard to push builds through CI.

+1 cloning git repos (its very annoying that the image needs to be build from scratch each time a small edit has been made in a git repo)

@Vingtoft If you are updating the files in the repo then your cache is invalidated.

@itsprdp I did not know that, thank you for clarifying.

@itsprdp I have just tested. When I'm updating the repo and building the image, Docker is still using the cache.

Perhaps I'm misunderstanding something?

@itsprdp That isn't correct in my experience. I made a new commit to a repo to test, and when building again, it uses the same cache.

If I change the docker file previous to the repo, of course it will be cache busted, however simply updating a repo does not seem to fix this issue.

@RyanHartje Sorry for the confusion. It is supposed to invalidate the cache if the repository is updated and that's something to consider by contributors.

The use case @Vingtoft expecting is to cache the repository and only update the changed files in the repository. This might be complicated to implement.

@itsprdp Only updating the changed files in a repo would be awesome, but less (or should I say more?) would do as well.

In my use-case (and many others) the actual git pull does not take long time: Its the building of everything else thats killing the development flow.

+1, cache used during git clone :(

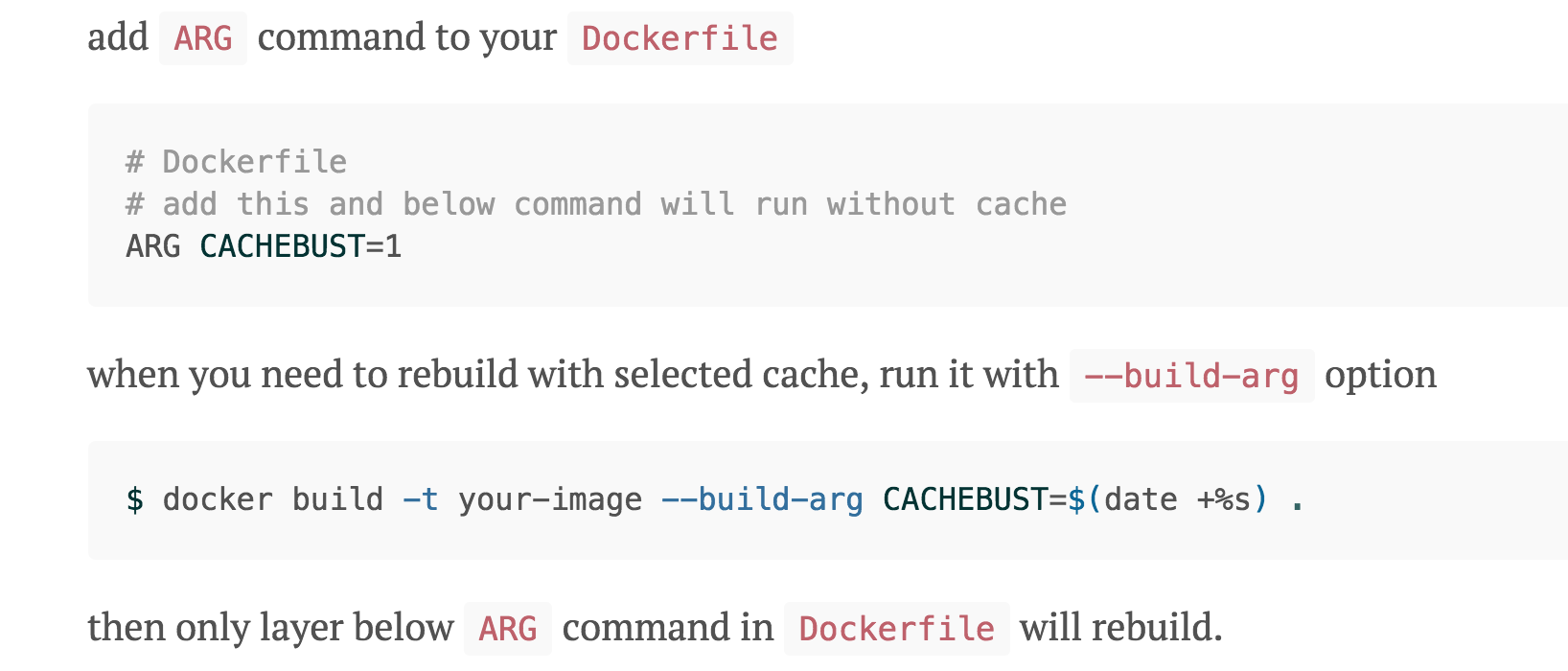

An integrated solution would be nice, but in the meantime you can bust the cache at a specific Dockerfile instruction using ARG.

In the Dockerfile:

ARG CACHEBUST=1

RUN git clone https://github.com/octocat/Hello-World.git

On the command line:

docker build -t your-image --build-arg CACHEBUST=$(date +%s) .

Setting CACHEBUST to the current time means that it will always be unique, and instructions after the ARG declaration in the Dockerfile won't be cached. Note that you can also build without specifying the CACHEBUST build-arg, which will cause it to use the default value of 1 and preserve the cache. This can be used to always check out fresh copies of git repos, pull latest SNAPSHOT dependencies, etc.

Edit: Which, uh, is just what @thaJeztah said. I'll leave this up as an additional description of his solution.

@shane-axiom How about using the git commit hash as the value for CACHEBUST?

export CACHEBUST=`git ls-remote https://[email protected]/username/myRepo.git | grep refs/heads/develop | cut -f 1` && \

echo $CACHEBUST && \

docker build -t myDockerUser/myDockerImage \

--build-arg blah=blue \

--build-arg CACHEBUST=$CACHEBUST \

.

Based on clues from http://stackoverflow.com/questions/15677439/how-to-get-latest-git-commit-hash-command#answer-15679887

@pulkitsinghal That looks wonderful for busting the cache for git repos. For other uses (such as pulling in SNAPSHOT dependencies, etc) the always-busting timestamp approach works well.

+1 for CACHE ON | OFF

+1

+1

Remember about @CheRuisiBesares aproach, you can always use ADD https://www.random.org/strings/?num=16&len=16&digits=on&upperalpha=on&loweralpha=on&unique=on&format=plain&rnd=new uuid as workaround for cache issues.

To post an additional use-case....

COPY package.json /usr/src/

RUN npm install

In our package.json we will commonly point to a master tag for some of our private github dependencies. This means that we never really get the latest master unless we change the package.json file (usually just add to the description a - to the description then remove it while testing).

A RUN NO CACHE to take place of RUN seems like it would be a good solution.

+1

I have similar issue for npm install which use cache and dont use my new published library in npm.

It would be great if I can disable cache per RUN command in docker file.

@brycereynolds @mmobini see https://github.com/docker/docker/issues/1996#issuecomment-172606763 for manually busting the cache. However, _not_ specifying a specific version of packages that need to be installed may not be best practice, as the end-result of your Dockerfile (and source code) is no longer guaranteed to be reproducible (i.e., it builds successfully today, but doesn't tomorrow, because one of the packages was updated). I can see this being "ok" during development, but for production (and automated builds on Docker Hub), the best approach is to explicitly specify a version. Doing so also allows users to verify the exact packages that were used to produce the image.

I have a use case where not being able to invalidate the cache is causing issues. I am running Dropwizard applications (Java REST Services built with Maven) from Docker and an automated system is doing all of the container builds and deployment for me. I include a Dockerfile in my repo and it does the rest. The system runs a production version and one or more development versions of my application. Development builds are where I am having issues.

During development, some of the project's dependencies have SNAPSHOT in their version numbers. This instructs Maven that the version is under development and it should bring down a new version with every build. As a result, an identical file structure can result in two distinct builds. This is the desired behavior, since bugs may have been fixed in a SNAPSHOT dependency. To support this, it would be helpful to force Docker to run a particular command, since there is no way to determine the effect of the command based on the current state of the file system. A majority of Java projects are going to run into this, since Maven style SNAPSHOT dependencies are used by several different build systems.

@ctrimble You can use --no-cache, or --build-arg to invalidate the cache.

You can minimize the effect of --no-cache by having a base image with all the cacheable commands.

@cpuguy83 thank you for the reply. I read the thread and understand the current options. I have opened a ticket with the build system I am using to supply a cache busting argument. Producing two distinct images for a single application seems like a lot of hoops to go through to speed up builds. It would be much easier to be able to specify something like:

- do things that can be cached if the file system is identical

- do a thing that might change the file system based on when it is executed

- do some more things that could be cached if the previous step did not change the file system

This pattern will come up in development builds frequently. It would be nice to have semantics for it in the Dockerfile.

@ctrimble Busting the cache on one step will cause the cache to always be busted for each subsequent step.

@cpuguy83 exactly. The semantics of my build system are temporal for development builds. I have to select correct builds over caching. I would really like to get both.

There's been considerable discussion here, apologies if it's already been suggested, but what if there was something like this:

CHECK [FILE_PATH]

All docker would do is store the MD5 (or whatever other hash is hip) of the file and if it changes, all steps thereafter are invalidated.

I'd probably be doing something like:

CHECK Gemfile

CHECK package.json

CHECK composter.json

CHECK project.json

May also want to enable a check that some how elapses after a time period. Ansible's cache_valid_time parameter for the apt plugin might offer some inspiration: http://docs.ansible.com/ansible/apt_module.html

For that, the syntax would be:

EXPIRE 1234567

RUN apt-get update

RUN bundle install

Docker would know the last-run time and calculate if the time had elapsed based on "now".

@atrauzzi We just support --squash on build now in 1.13 (experimental only for now).

@cpuguy83 Are there any docs or explanations about --squash anywhere that I can read up on? At the outset the name doesn't make it sound like it does what I'm thinking. But I could be (and most likely am) wrong!

@atrauzzi yes, in the build reference.

Basically, --squash both preserves the layer cache, and creates a 2nd image which is as if everything in the Dockerfile happened in a single layer.

I don't see why one would need to check that a file cache is still valid individually, ADD and COPY already do this for everything that's being copied in.

@cpuguy83 Good point, didn't even think that, and of course I'm already using it.

What about the timestamp/duration approach? Is that doable with what's already available?

What about the timestamp/duration approach? Is that doable with what's already available?

Through build-args;

ARG expire_after=never

RUN do some thing

docker build --build-arg expire_after=2016-12-01 -t foo .

change the build arg to bust the cache

+1 for a cleaner way

+1 for a cleaner way

There should also be separate options for disabling reading the cache and for disabling writing to it. For example, you may want to build an image anew from scratch and ignore any cached layers, but still write the resulting new layers to the cache.

+1

Might I suggest passing the step number to the build command?

Something like this:

docker build --step 5 .

It would ignore all caches after and including step 5 during the build.

+1

Please.

CACHE ON|OFF +1

The issue with these CACHE ON|OFF commands is that at whatever step the cache is turned off, there is no way to cache further steps. The only sensible command would be ENDCACHE.

It is a valid idea / ethos. The command is supposed to coalese together all non-cached layers into a single layer at the point when the cache gets switched back on. Of course you can still argue the best naming / correctness of semantics / preferred syntax of the feature.

+1

+1 the must have feature

Agree for CACHE ON|OFF +1

+1 Would be amazing.

I did not really understand the way Docker caches the steps before and spent half a day investigating why my system is not building correctly. It was the "git clone" caching.

Would love to have the ALWAYS keyword.

How it's closed?

What is the best workaround?

I tried https://github.com/moby/moby/issues/1996#issuecomment-185872769 and it worked

In the Dockerfile:

ARG CACHEBUST=1

RUN git clone https://github.com/octocat/Hello-World.git

On the command line:

docker build -t your-image --build-arg CACHEBUST=$(date +%s)

Why not create a new command similar to RUN but doesn't ever cache RUNNC for RUN NO CACHE?

I can confirm, @habeebr (https://github.com/moby/moby/issues/1996#issuecomment-295683518) - I use it in combination with https://github.com/moby/moby/issues/1996#issuecomment-191543335

+1

RUNNC is a great idea!

Why was this issue closed? Between the myriad duplicates asking for essentially the same thing and the lengthy comment history of more than one of these duplicates, it _seems_ obvious that there is a healthy interest in seeing this functionality available.

I get that it's hard, and perhaps that no one has suggested a sufficiently elegant solution that both meets the need and is clean enough be an attractive Docker addition...but that does not mean _that there is no need_.

The only other argument I've heard in favor of closing this is that there are other ways to accomplish this...but that argument doesn't really pass muster either. Creating multiple base images for the sole purpose of getting around the lack of cache control is unwieldy, contriving an invalidation through an ARG is obtuse and unintuitive. I imagine users want to utilize these "workarounds" about as much as Docker developers want to officially incorporate a sloppy hack into the tool.

its not hard: https://github.com/moby/moby/pull/10682

easy solution, easy UX. Just no clear consensus on whether it should be done.

Wow, just wow...

I would have implemented it already just to not have to hear about it, never mind that there is a clear consensus that the user base wants it. I've not been on the developer side of such a big open source project, only much smaller ones so perhaps I am missing something.

+1

+1 for sensible security and better performance

+1

+1

+1

+1

+1

+1

Can you guys stop spamming the +1? Just use the reaction feature to upvote.

Any changes?

Still doesn't know why this issue is closed.

In my opinion it is a must-have feature that handles perfectly version pull from remote git repository.

+1

+1

+1

+1

+1

+1

+1

+1

+1

+1

Why close this? I think it's useful

+1

+1

+1

Currently the most simple way to disable cache for a layer (and the following):

Dockerfile

ARG CACHE_DATE

RUN wget https://raw.githubusercontent.com/want/lastest-file/master/install.sh -O - | bash

And when you build the image, --build-arg needs to be added

docker build --build-arg CACHE_DATE="$(date)"

Then the wget command will be executed everytime you build the image, rather than using a cache.

RUNNC or CACHE OFF would be nice

in the meantime, this looks promising:

http://dev.im-bot.com/docker-select-caching/

that is:

i'm going to go keep calm and join the herd:

+1

Yeah I need selective caching on commands. My COPY fails 80% of the time if I only change one word in a config file. I would like to never cache my COPY but cache everything else. Having a CACHE ON and CACHE OFF would be great.

RUN X

RUN X

CACHE OFF

COPY /config /etc/myapp/config

CACHE ON

@shadycuz You will never be able to "re-enable" the cache after disabling/invalidating it using any method. The build will not be able to verify (in a reasonable amount of time with a reasonable amount of resources) that the non-cached layer didn't change something else in the filesystem which it would need to consider in newer layers. In order to minimize the impact of always needing to pull in an external config file, you should put your COPY directive as far down in the Dockerfile as possible (so that Docker can use the build cache for as much of the build process as possible before the cache is invalidated).

To invalidate the cache at a specific point in the build process, you can refer to any of the other comments about using --build-arg and ARG mentioned here previously.

@shadycuz @curtiszimmerman Yes, we might only preserve CACHE OFF but not CACHE ON, because the following layers need to be rebuilt if a former layer is changed.

I agree that CACHE ON makes no sense from a technical point of view. It helps to express the intention more clearly, which layers are actually intended to be invalidated however.

A more flexible solution would be command similar to RUN that allowed some shell code to determine if the cache should be invalidated. An exit code of 0 could mean "use cache" and 1 "invalidate cache". If no shell code is given, the default could be to invalidate the cache from here on. The command could be called INVALIDATE for instance.

why was this closed with no comment?

There was a comment, but its hidden by github

https://github.com/moby/moby/issues/1996#issuecomment-93592837

+1

This feature would be a life-saver for me right now.

+1

Closing this as we don't see that many real world use cases

212 comments and counting, but still no use case? Seems pretty ignorant.

+1

+1

+1

+1

+1

the problem is still here and still requires a solution. There are plenty of real-world uses still present.

+1

I suspect the Docker developers have no incentive to implement this, to protect their centralised building infrastructure from being DDsS'ed by no-cache requests.

I also suspect that a parallel infrastructure that facilitate no-cache builds would be interesting for enterprise users.

Overall this issue is not about a software feature, but a service scaling issue.

@jaromil That's not entirely true, as this is not possible on self-hosted repositories as well.

What software is there to run a self-hosted repository? I don't really know what you refer to.

A simple self-hosted solution could be a cron cloning git repos and runnig docker build --no-cache - I'm sure this problem cannot occur on open source software: anyone is then able to modify the docker build commandline.

@jaromil I don't think that's the problem. It would be more efficient to have it for DockerHub's open source projects (as well as paid ones, they don't charge for number of builds). In a CI/CD environment with frequent builds, this get even worse.

As long as you need to do that (you are using docker and git and don't want to have 5 containers running shared volumes), you must rebuild the container and upload every time you upload new version. The entire container.

With an in-code no-cache flag, every time you run the build you just build and replace that single layer instead of whole container for updating the version.

About the self-hosting rep, you'd be surprised. I understand @bluzi comment, there is no ddos impact if you self- host (or use aws ecr).

Ok this is certainly a more complex scenario I was envisioning. now i think...uploading with a sort of nocache single layer hashes... push and override, you name it. I am Not Sure

TLDR: I think some improvements to the Docker documentation might help a lot.

I ended up here after encountering my own problems/confusion with caching. After reading all of the comments here and in https://github.com/moby/moby/pull/10682, I found a workable solution for my particular use case. Yet somehow I still felt frustrated with Docker's response to this, and it appears that many others feel the same way.

Why? After thinking about this from several different angles, I think the problem here is a combination of vague use cases, overly generalized arguments against the proposed changes (which may be valid but don't directly address the presented use cases), and a lack of documentation for Docker's recommendations for some common use cases. Perhaps I can help clarify things and identify documentation that could be improved to help with this situation.

Reading between the lines, it sounds to me like most of the early commenters on this feature request would be happy with a solution that uses additional arguments to docker image build to disable the cache at a specific point in the Dockerfile. It sounds like Docker's current solution for this (described in https://github.com/moby/moby/issues/1996#issuecomment-172606763) should be sufficient in most of these cases, and it sounds like many users are happy with this. (If anyone has a use case where they can provide additional arguments to docker image build but this solution is still inadequate, it would probably help to add a comment explaining why this is inadequate.)

All of the lingering frustration appears to be related to the requirement to pass additional arguments to docker image build to control the caching behavior. However, the use cases related to this have not been described very well.

Reading between the lines again, it appears to me that all of these use cases are either related to services that run docker image build on a user's behalf, or related to Dockerfiles that are distributed to other users who then run docker image build themselves. (If anyone has any other use cases where passing additional arguments to docker image build is a problem, it would probably help to add a comment explaining your use case in detail.)

In many of these cases, it sounds like the use case does not actually require the ability to disable caching at a specific point in the Dockerfile (which was the original point of this feature request). Instead, it sounds like many users would be happy with the ability to disable caching entirely from within the Dockerfile, without using the "--no-cache" argument to docker image build and without requiring manual modifications to the Dockerfile before each build. (When describing use cases, it would probably help to mention whether partial caching is actually required or whether disabling the cache entirely would be sufficient for your use case.)

In cases where a service runs docker image build on a user's behalf, it sounds like Docker is expecting all such services to either unconditionally disable the cache or give the user an option to disable the cache. According to https://github.com/moby/moby/pull/10682#issuecomment-73777822, Docker Hub unconditionally disables the cache. If a service does not already do this, Docker has https://github.com/moby/moby/pull/10682#issuecomment-159255451 suggested complaining to to service provider about it.

This seems to me to be a reasonable position for Docker to take regarding services that run docker image build. However, this position really needs to be officially documented in a conspicuous place so that both service providers and users know what to expect. It does not appear that this position or the Docker Hub caching behavior are currently documented anywhere other than those off-the-cuff comments buried deep inside that huge/ancient/closed pull request, so it is no surprise that both service providers and users routinely get this wrong. Perhaps adding information to the docker build reference describing Docker's opinion on the use of caching by build services, and adding information to the Docker Hub automated build documentation about the Docker Hub caching behavior might eliminate this problem?

For cases where Dockerfiles are distributed to other users who then run docker image build themselves, some people have argued that the use of the simple docker build . command (with no additional arguments) is so common that it would be unreasonable for Dockerfile builders to require users to add arguments, while other people (for example: https://github.com/moby/moby/issues/1996#issuecomment-72238673 https://github.com/moby/moby/pull/10682#issuecomment-73820913 https://github.com/moby/moby/pull/10682#issuecomment-73992301) have argued that it would be inappropriate to unconditionally prevent users from using caching by hard-coding cache overrides into the Dockerfile. In the absence of detailed/compelling use cases for this, Docker has made the executive decision to require additional command line arguments to control caching, which seems to be the source of much of the lingering frustration. (If anyone has a compelling use case related to this, it would probably help to add a comment explaining it in detail.)

However, it seems to me that Docker may be able to make everyone happy simply by breaking users' habit of running docker build . without additional arguments. The caching behavior and "--no-cache" argument are not mentioned in any of the relevant Docker tutorials (such as this or this

or this). In addition, while the docker build documentation does list the "--no-cache" argument, it doesn't explain its significance or highlight the fact that it is important in many common use cases. (Also note that the docker image build documentation is empty. It should at least reference the docker build documentation.) It appears that only the Dockerfile reference and best practices documentation actually describe the caching behavior and mention the role of the "--no-cache" argument. However, these documents are likely to be read only by advanced Dockerfile writers. So, it is no surprise that only advanced users are familiar with the "--no-cache" argument, and that most users would only ever run docker build . without additional arguments and then be confused when it doesn't behave how they or the Dockerfile writer expect/want. Perhaps updating the tutorials and docker build documentation to mention the "--no-cache" argument and its significance might eliminate this problem?

+1

+1

docker's official tool bashbrew doesn't let you add arguments when building images, so the "officially supported" answer does not work.

+1

+1

The use case I'm hitting right now is wanting to pass transient, short-lived secrets in as build args for installing private packages. That completely breaks caching because it means that every time the secret changes (basically every build), the cache gets busted and the packages get reinstalled all over again, even though the only change is the secret.

I've tried bypassing this by consuming the ARG in a script that gets COPY'd in prior to specifying the ARG, but Docker appears to invalidate everything after the ARG is declared if the ARG input has changed.

The behavior I'd like to see is to be able to flag an ARG as always caching, either in the Dockerfile or on the CLI when calling build. For use cases like secrets, that's often what you want; the contents of the package list should dictate when the cache is invalidated, not the argument passed to ARG.

I understand the theory that these sections could be pulled out into a second image that's then used as a base image, but that's rather awkward when the packages are used by a project, like in a package.json, requirements.txt, Gemfile, etc. That base image would just be continually rebuilt as well.

+1 to CACHE OFF from this line directive - I've been waiting for this for literally years.

I have had to disable cache on docker hub / docker cloud and this would save tonnes of time and builds if I could cache the big layer and then just run a nocache update command near the end of the dockerfile.

The behavior I'd like to see is to be able to flag an ARG as always caching, either in the Dockerfile or on the CLI when calling build. For use cases like secrets, that's often what you want; the contents of the package list should dictate when the cache is invalidated, not the argument passed to ARG.

--build-arg PASSWORD=<wrong> could produce a different result than --build-arg PASSWORD=<correct>, so I'm not sure if just looking at the contents of the package list would work for that. The builder cannot anticipate by itself what effect setting/changing an environment variable would have on the steps that are run (are make DEBUG=1 foo and make DEBUG=0 foo the same?). The only exception currently made is for xx_PROXY environment variables, where the assumption is made that a proxy may be needed for network-connections, but switching to a different proxy should produce the same result. So in order for that to work, some way to indicate a specific environment variable (/ build arg) to be ignored for caching would be needed.

note that BuildKit now has experimental support for RUN --mount=type=secret and RUN --mount=type=ssh, which may be helpful for passing secrets/credentials, but may still invalidate cache if those secrets change (not sure; this might be something to bring up in the buildkit issue tracker https://github.com/moby/buildkit/issues).

I have had to disable cache on docker hub / docker cloud

Does Docker Hub / Cloud actually _use_ caching? I think no caching is used there (as in; it's using ephemeral build environments)

I remember DockerHub used to not use build caching, but I had been looking at my automated builds on Docker Cloud just before this ticket and there is a Building Caching slider next to each branch's Autobuild slider now, although it is off by default.

I dare not enable build caching because steps like git clone will not get the latest repo download since it only compares the directive string which will not change. Explaining this issue to a colleague today that has been a thorn in our side for years, he was surprised as it seems like a large imperfection for many use cases.

I would much prefer the initial git clone && make build be cached and then just do a NO CACHE on a git pull && make build step to get only a much smaller code update + dependencies not already installed as the last layer, thereby efficiently caching the bulk of the image, not just for builds, but more importantly for all clients who right now must re-download and replace hundreds of MB of layers each time which is extremely inefficient.

The size is because many of the projects have a large number of dependencies, eg. system packages + Perl CPAN modules + Python PyPI modules etc.

Even using Alpine isn't much smaller once you add the system package dependencies and the CPAN and PyPI dependencies as I have been using Alpine for years to try to see if I could create smaller images but once you have lots of dependencies it doesn't make much difference if the base starts smaller since adding system packages adds most of it right back.

Caching the earlier layers which include all the system packages + CPAN + PyPI modules would mean very little should end up changing in the last layer of updates as I won't update working installed modules in most cases (I used scripts from my bash-tools utility submodule repo to only install packages that aren't already installed to avoid installing needless non-bugfix updates)

I was looking at using a trick like changing ARG for a while (an idea I got from searching through blogs like http://dev.im-bot.com/docker-select-caching/):

In Dockerfile:

ARG NOCACHE=0

Then run docker build like so:

docker build --build-arg NOCACHE=$(date +%s) ...

but I don't think this is possible in Docker Cloud.

There are environment variables but it seems not possible to use dynamic contents such as epoch above (or at least not documented that I could find), and with environment variables I'm not sure it would invalidate caching for that directive line onwards.

@thaJeztah Yes, this sort of behavior could easily have negative consequences if misunderstood or abused, but it would very nicely solve certain use cases.

--build-arg PASSWORD=<wrong>could produce a different result than--build-arg PASSWORD=<correct>, so I'm not sure if just looking at the contents of the package list would work for that

Although you're correct that it would produce different results, if the package list hasn't changed, I don't really care if the password is right or wrong; the packages are already in the prior image, so the user running this already has access (i.e., it's not a security concern), and if the password was wrong previously, I would expect the burden to be on the Dockerfile author to fail the installation if it's required, which would mean that you'd still get a chance to correctly install packages after fixing the password.

Yes, I was picturing something like docker build --force-cache-build-arg SECRET=supersecret. That's pretty clunky, I'm sure someone could come up with something better.

@HariSekhon It sounds like your use-case is actually the opposite of mine, though, right? You want to selectively force miss the cache, rather than selectively force hit the cache?

Adding this worked for me:

ADD http://date.jsontest.com/ /tmp/bustcache

but that site is down right now. This should work

ADD http://api.geonames.org/timezoneJSON?formatted=true&lat=47.01&lng=10.2&username=demo&style=full /tmp/bustcache

@itdependsnetworks

Perfect, that's a good workaround and the site is back up now. It's also useful to record the build date of the image.

I had tried this and similar other special files would should change each time

COPY /dev/random ...

but that didn't work even though RUN ls -l -R /etc showed such files were present they were always not found, I suspect there is some protection against using special files.

Now I think more about it on DockerHub / Docker Cloud you could probably also use a pre build hook to generated a file containing a datestamp and then COPY that to the image just before the layer your want to cachebust, achieving similar result, although the ADD shown above I think is more portable to local docker and cloud builds.

We need to find a more reliable date printing site for this cache busting workaround - both of these above seem to be demos / have quotas and are hence unreliable and randomly break builds.

The first one breaks it's daily quota all the time and the second one is now giving this error

{"status": {

"message": "the daily limit of 20000 credits for demo has been exceeded. Please use an application specific account. Do not use the demo account for your application.",

"value": 18

}}

I think it would be great to having a instruction I can put in a docker file that will run at every build and if the output differs from last build subsequent layers are rebuilt.

Example Usage would be:

FROM something

...

CACHE_BUST git ls-remote my-git-repo HEAD

RUN git clone --depth=1 my-git-repo ...

...

CMD ["my-cmd"]

The command in the CACHE_BUST instruction above will output the SHA from the HEAD of the specified repo, this way my dockerfile can know whether to clone or not based on changes to the repo.

All of my use cases here are related to non-deterministic network related layers as seen above. Currently I can use ARG for all my needs here but that means I often need a build script that uses a dockerfile, when it would be nice to only have the dockerfile itself to maintain. (plus some tools don't allow arguments)

My current workflow would looks like this:

ARG SHA_TO_BUILD

RUN echo SHA_TO_BUILD

RUN git clone ...

...everything else reliant on that clone

$> ./build-my-image.sh $(get-latest-sha)

I think turning caching on and off for each command is a bad idea, files should be written in a way so that if one layer needs rebuilding, the rest need rebuilding too. But I do think it would be nice to be able to force a rebuild at a certain point in the file.

such a great feature, why still pending?

Another use case is when the contents of a file change but the Dockerfile has not.

For example, if I have a COPY file.txt . and I modify file.txt Docker will still used the cached old version.

If Docker were to perform a checksum of the files it copied and used that next time around to determine if it should use the cached layer that would solve the problem.

As of right now I'm forced to use --no-cache and that downloads and performs way more than is needed (wasting time and bandwidth).

@brennancheung if that's the case, it's a bug. Feel free to open a separate issue with reproducible steps.

I think the main point in what @brennancheung

https://github.com/brennancheung is saying is, for instance, when you are

loading a configurations file (or something similar). You don't need to

reinstall the entire application, just update these files and the commands

associated with it, run the docker and voila, the system is set.

Of course, oftentimes you can just put the config file nearer to the end of

your stack, but that is not always the case.

Am Mi., 20. März 2019 um 00:10 Uhr schrieb Tibor Vass <

[email protected]>:

@brennancheung https://github.com/brennancheung if that's the case,

it's a bug. Feel free to open a separate issue with reproducible steps.—

You are receiving this because you commented.

Reply to this email directly, view it on GitHub

https://github.com/moby/moby/issues/1996#issuecomment-474666893, or mute

the thread

https://github.com/notifications/unsubscribe-auth/ALBon0edO9m5BU3C5Ik2i__9eogZc1Jiks5vYaaNgaJpZM4BB_sR

.

--

Thiago Rodrigues

Tried this https://github.com/moby/moby/issues/1996#issuecomment-185872769

But it only impacts cache upon/after first use and not merely its definition.

https://docs.docker.com/engine/reference/builder/#impact-on-build-caching

If a Dockerfile defines an ARG variable whose value is different from a previous build, then a “cache miss” occurs upon its first usage, not its definition.

@samstav "first use" there is the first RUN after the ARG (if that ARG is with a build-stage), so:

ARG FOO=bar

FROM something

RUN echo "this won't be affected if the value of FOO changes"

ARG FOO

RUN echo "this step will be executed again if the value of FOO changes"

FROM something-else

RUN echo "this won't be affected because this stage doesn't use the FOO build-arg"

The above depends a bit if you're using the next-generation builder (BuildKit), so with DOCKER_BUILDKIT=1 enabled, because the BuildKit can skip building stages if they are not needed for the final stage (or skip building stages if they can be fully cached)

Most helpful comment

What about CACHE ON and CACHE OFF in the Dockerfile? Each instruction would affect subsequent commands.