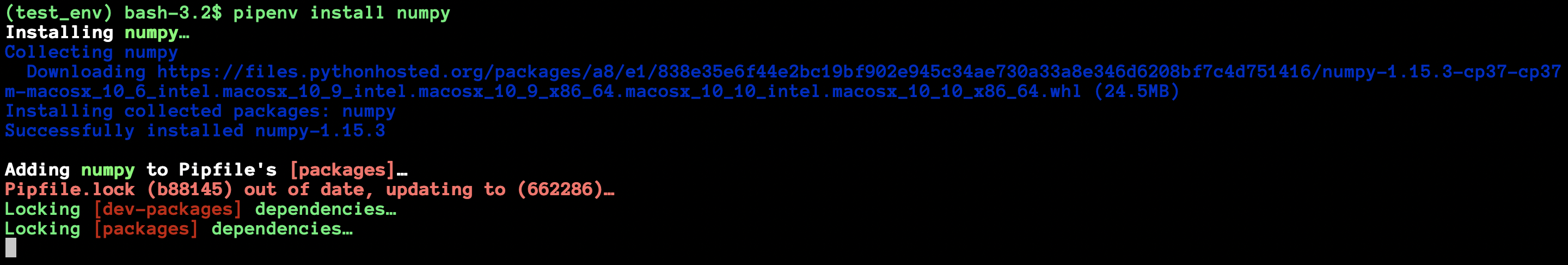

Pipenv: Locking is slow (and performs redundant downloads)

Is this an issue with my installation? It happens on all of my machines... Is there anything I/we can do to speed it up?

I install one package and the locking seems to take minutes.

Locking [packages] dependencies…

$ python -m pipenv.help output

Pipenv version: '2018.05.18'

Pipenv location: '/Users/colllin/miniconda3/lib/python3.6/site-packages/pipenv'

Python location: '/Users/colllin/miniconda3/bin/python'

Other Python installations in PATH:

2.7: /usr/bin/python2.7

2.7: /usr/bin/python2.7

3.6: /Library/Frameworks/Python.framework/Versions/3.6/bin/python3.6m

3.6: /Library/Frameworks/Python.framework/Versions/3.6/bin/python3.6

3.6: /Users/colllin/miniconda3/bin/python3.6

3.6: /Users/colllin/.pyenv/shims/python3.6

3.6: /usr/local/bin/python3.6

3.6.3: /Users/colllin/miniconda3/bin/python

3.6.3: /Users/colllin/.pyenv/shims/python

2.7.10: /usr/bin/python

3.6.4: /Library/Frameworks/Python.framework/Versions/3.6/bin/python3

3.6.3: /Users/colllin/miniconda3/bin/python3

3.6.4: /Users/colllin/.pyenv/shims/python3

3.6.4: /usr/local/bin/python3

PEP 508 Information:

{'implementation_name': 'cpython',

'implementation_version': '3.6.3',

'os_name': 'posix',

'platform_machine': 'x86_64',

'platform_python_implementation': 'CPython',

'platform_release': '17.5.0',

'platform_system': 'Darwin',

'platform_version': 'Darwin Kernel Version 17.5.0: Mon Mar 5 22:24:32 PST '

'2018; root:xnu-4570.51.1~1/RELEASE_X86_64',

'python_full_version': '3.6.3',

'python_version': '3.6',

'sys_platform': 'darwin'}

System environment variables:

TERM_PROGRAM, NVM_CD_FLAGS, TERM, SHELL, TMPDIR, Apple_PubSub_Socket_Render, TERM_PROGRAM_VERSION, TERM_SESSION_ID, NVM_DIR, USER, SSH_AUTH_SOCK, PYENV_VIRTUALENV_INIT, PATH, PWD, LANG, XPC_FLAGS, PS1, XPC_SERVICE_NAME, PYENV_SHELL, HOME, SHLVL, DRAM_ROOT, LOGNAME, NVM_BIN, SECURITYSESSIONID, _, __CF_USER_TEXT_ENCODING, PYTHONDONTWRITEBYTECODE, PIP_PYTHON_PATH

Pipenv–specific environment variables:

Debug–specific environment variables:

PATH: /Library/Frameworks/Python.framework/Versions/3.6/bin:/Users/colllin/miniconda3/bin:/Users/colllin/.pyenv/plugins/pyenv-virtualenv/shims:/Users/colllin/.pyenv/shims:/Users/colllin/.pyenv/bin:/Users/colllin/.nvm/versions/node/v8.1.0/bin:/usr/local/bin:/usr/bin:/bin:/usr/sbin:/sbin

SHELL: /bin/bash

LANG: en_US.UTF-8

PWD: /Users/.../folder

Contents of Pipfile ('/Users/.../Pipfile'):

[[source]]

url = "https://pypi.org/simple"

verify_ssl = true

name = "pypi"

[packages]

gym-retro = "*"

[dev-packages]

[requires]

python_version = "3.6"

All 63 comments

@colllin Have you checked to see whether pip commands that contact the server - like pip search (I think) - are also slow?

I see similar behavior, but it's kind of a known issue and network dependent. For some reason, access to pypi.org from my work network is incredibly slow but it is normally fast from my home network. I think locking does a lot of pip transactions under the hood, so slow access to the server slows the operation a lot.

EDIT: It may also be that you just have a lot of sub-dependencies to resolve - how big is the environment once created (e.g. how many top-level packages in your pipfile, and how many packages returned by pip list once the environment is bootstrapped)?

Thank you for the thoughtful response.

pip search isn't especially fast or slow for me... ~1 second?

Forgive me for my lack of domain knowledge: Does it really need to pip search? Didn't it just install everything? Doesn't it just need to write down what is already installed? Or... since it ensures the existence of the lock file anyway, could it do this as it installs the packages, or before?

I'm guessing... pipenv uses pip under the hood? so the installation process is a black box, and it can't know the dependency graph of what was/will be installed without doing ~a pip search~ its own pip queries?

EDIT: There is 1 top-level package, and ~65 packages returned by pip list in this particular repo.

I'm not a contributor to the project and at the moment I don't know all the specifics, but my understanding is that the locking phase is where all of the dependencies get resolved and pinned. So if you have one top-level package with ~65 dependencies, it's during the locking phase that all of the dependencies of that first package are (recursively) discovered, and then the dependency tree is used to resolve which packages need to be installed and (probably) in what rough order they should be installed in. Not as sure about the last part.

If you run pipenv install from a Pipfile without a lockfile present, you'll notice that it does the locking phase before installing the packages into the venv. The same happens if you have a lockfile but it's out of date. I suspect having a lockfile and installing using the --deploy option would be faster, as would the --skip-lock option; in the former case you get an error if the lockfile is out of date, in the latter you lose the logical split between the top-level packages (declared environment) and the actual installed (locked) environment of all packages. At least this is how I understand it.

Whether or not pipenv uses pip under the hood - I think it does - it still needs to get the information from the pypi server(s) about package dependencies and the like, so my question about pip search was more a proxy for how fast or slow your path to the pypi server is than a direct implication about the mechanism by which pipenv does its thing.

An interesting experiment might be to compare the time required for locking the dependency tree in pipenv, and installing requirements into a new venv using pip install -r requirements.txt. I think they should be doing pretty similar things during the dependency resolution phase.

Have we established somewhere that there are redundant downloads happening btw? I suspect that is the case but proving it would be really helpful

FYI comparing pip install -r requirements.txt to the time it takes to lock a dependency graph is not going to be informative as a point of comparison. Pip doesn't actually _have_ a resolver, not in any real sense. I think I can describe the difference. When pip installs your requirements.txt, it follows this basic process:

- Find the first requirement listed

- Find all of its dependencies

- Install them all

- Find the second requirement listed

- Find all of its dependencies

- Install them all

- Find the third requirement listed

- Find all of its dependencies

- Install them all

This turns out to be pretty quick because pip doesn't really care if the dependencies of _package 1_ conflicted with the dependencies of _package 3_, it just installed the ones in _package 3_ last so that's what you get.

Pipenv follows a different process -- we compute a resolution graph that attempts to satisfy _all_ of the dependencies you specify, before we build your environment. That means we have to start downloading, comparing, and often times even _building_ packages to determine what your environment should ultimately look like, all before we've even begun the actual process of installing it (there are a lot of blog posts on why this is the case in python so I won't go into it more here).
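To make the contrast concrete, here is a minimal toy sketch in Python. It is not pip's or pipenv's actual code: the package names, the `ALLOWED` table, and both function names are made up for illustration, and allowed versions are modelled as plain sets of integers rather than real version specifiers.

```python
# Hypothetical data: each top-level package lists the versions of "lib" it accepts.
ALLOWED = {
    "package_1": {"lib": {1, 2}},
    "package_3": {"lib": {2, 3}},
}


def install_sequentially(requirements, allowed):
    """Mimic the 'last one wins' behaviour described above."""
    installed = {}
    for req in requirements:
        for dep, versions in allowed[req].items():
            # A later requirement silently overwrites an earlier choice.
            installed[dep] = max(versions)
    return installed


def resolve_together(requirements, allowed):
    """Intersect every constraint first; fail if nothing satisfies them all."""
    candidates = {}
    for req in requirements:
        for dep, versions in allowed[req].items():
            candidates[dep] = candidates.get(dep, set(versions)) & set(versions)
    resolved = {}
    for dep, versions in candidates.items():
        if not versions:
            raise ValueError(f"no version of {dep} satisfies all requirements")
        resolved[dep] = max(versions)
    return resolved
```

With this data, `install_sequentially(["package_1", "package_3"], ALLOWED)` ends up with `lib` at version 3, which `package_1` does not accept, while `resolve_together` picks version 2, the only one both accept. A real resolver also has to fetch the metadata that this sketch hard-codes, which is where the downloading and building comes from.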

Each step of that resolution process is made more computationally expensive by requiring hashes, which is a best practice. We hash incoming packages after we receive them, then we compare them to the hashes that PyPI told us we should expect, and we store those hashes in the lockfile so that in the future, people who want to build an identical environment can do so with the contractual guarantee that the packages they build from are the same ones you originally used.
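As a rough illustration of the hash check described above, the sketch below hashes a downloaded artifact and compares it with the hashes the index advertised. The function names are hypothetical and this is not pipenv's implementation; the only detail taken from real lockfiles is the `sha256:<hex digest>` entry format.

```python
import hashlib


def lock_hash(data: bytes) -> str:
    """Format a SHA-256 digest the way Pipfile.lock hash entries are written."""
    return "sha256:" + hashlib.sha256(data).hexdigest()


def verify_artifact(data: bytes, expected_hashes) -> str:
    """Return the matching hash entry, or raise if the artifact is unexpected."""
    entry = lock_hash(data)
    if entry not in expected_hashes:
        raise ValueError("artifact hash does not match any expected hash")
    return entry
```

Every artifact has to be fully downloaded before it can be hashed, so this step costs bandwidth as well as CPU time.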

Pip search is a poor benchmark for any of this, in fact any of pip's tooling is a poor benchmark for doing this work -- we use pip for each piece of it, but putting it together in concert and across many dependencies to form and manage environments and graphs is where the value of pipenv is added.

One point of clarification -- once you resolve the full dependency graph, installation order shouldn't matter anymore. Under the hood we actually pass --no-deps to every installation anyway.
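A minimal sketch of why order stops mattering: once every package is pinned in the lockfile, each one can be installed on its own with `--no-deps`. The helper names and the sample lock content below are assumptions for illustration, not pipenv internals, and the sketch ignores markers, hashes, and non-PyPI entries.

```python
import json

# Hypothetical, heavily trimmed lockfile content; real entries also carry hashes.
SAMPLE_LOCK = json.dumps({
    "default": {
        "six": {"version": "==1.11.0"},
        "numpy": {"version": "==1.14.5"},
    },
    "develop": {},
})


def pinned_requirements(lock_text, section="default"):
    """Turn one section of a Pipfile.lock into 'name==version' strings."""
    lock = json.loads(lock_text)
    lines = []
    for name, entry in sorted(lock.get(section, {}).items()):
        version = entry.get("version")
        if version:  # skip entries without a version pin, such as VCS entries
            lines.append(f"{name}{version}")
    return lines


def pip_command(requirement):
    """Build a pip invocation that installs one pinned package and nothing else."""
    return ["pip", "install", "--no-deps", requirement]
```

Here `pinned_requirements(SAMPLE_LOCK)` yields one pinned line per package, and each line can be handed to `pip_command` independently of the others.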

As a small side-note, pip search is currently the only piece of pip's tooling that relies on the now deprecated XMLRPC interface, which is uncacheable and very slow. It will always be slower than any other operation.

Locking numpy (and nothing else) takes 220 s on my machine (see below). Most of the time seems to be spent downloading more than 200 MB of data, which is quite puzzling given that the whole numpy source is 4 MB. Though clearly even if that was instant, there's still 25 s of actual processing, and even that seems excessive to calculate a few hashes. Subsequent locking, even after deleting Pipfile.lock, takes 5 s.

11:46 ~/Co/Ce/torchdft time pipenv install

Creating a virtualenv for this project…

Using /usr/local/Cellar/pipenv/2018.5.18/libexec/bin/python3.6 (3.6.5) to create virtualenv…

⠋Already using interpreter /usr/local/Cellar/pipenv/2018.5.18/libexec/bin/python3.6

Using real prefix '/usr/local/Cellar/python/3.6.5/Frameworks/Python.framework/Versions/3.6'

New python executable in /Users/hermann/.local/share/virtualenvs/torchdft-mABBUp_t/bin/python3.6

Also creating executable in /Users/hermann/.local/share/virtualenvs/torchdft-mABBUp_t/bin/python

Installing setuptools, pip, wheel...done.

Virtualenv location: /Users/hermann/.local/share/virtualenvs/torchdft-mABBUp_t

Creating a Pipfile for this project…

Pipfile.lock not found, creating…

Locking [dev-packages] dependencies…

Locking [packages] dependencies…

Updated Pipfile.lock (ca72e7)!

Installing dependencies from Pipfile.lock (ca72e7)…

🐍 ▉▉▉▉▉▉▉▉▉▉▉▉▉▉▉▉▉▉▉▉▉▉▉▉▉▉▉▉▉▉▉▉ 0/0 — 00:00:00

To activate this project's virtualenv, run the following:

$ pipenv shell

7.81 real 6.39 user 1.64 sys

11:46 ~/Co/Ce/torchdft time pipenv install numpy --skip-lock

Installing numpy…

Collecting numpy

Using cached https://files.pythonhosted.org/packages/f6/cd/b2c50b5190b66c711c23ef23c41d450297eb5a54d2033f8dcb3b8b13ac85/numpy-1.14.5-cp36-cp36m-macosx_10_6_intel.macosx_10_9_intel.macosx_10_9_x86_64.macosx_10_10_intel.macosx_10_10_x86_64.whl

Installing collected packages: numpy

Successfully installed numpy-1.14.5

Adding numpy to Pipfile's [packages]…

4.97 real 2.88 user 1.81 sys

11:46 ~/Co/Ce/torchdft time pipenv lock --verbose

Locking [dev-packages] dependencies…

Using pip: -i https://pypi.org/simple

ROUND 1

Current constraints:

Finding the best candidates:

Finding secondary dependencies:

------------------------------------------------------------

Result of round 1: stable, done

Locking [packages] dependencies…

Using pip: -i https://pypi.org/simple

ROUND 1

Current constraints:

numpy

Finding the best candidates:

found candidate numpy==1.14.5 (constraint was <any>)

Finding secondary dependencies:

numpy==1.14.5 not in cache, need to check index

numpy==1.14.5 requires -

------------------------------------------------------------

Result of round 1: stable, done

Updated Pipfile.lock (4fccdf)!

219.24 real 25.14 user 5.77 sys

Numpy should be substantially faster now (I have been using your example as a test case in fact!). As of my most recent test, I had it at ~30s on a cold cache on a vm.

Can you confirm any improvements with the latest release?

It has improved substantially for me as well. I'm now sitting on a very fast connection, and got as low as 14 s, but that was when the downloading went at 30 MB/s. What is being downloaded besides a single copy of the source code of numpy?

I think we’re downloading redundant wheels (not sure). We’re already evaluating the situation.

I changed my Pipfile.lock drastically by uninstalling a few packages, and now deploying it on a different machine freezes. Is there any particular fix for this?

It’s not recommended that you manually edit your lockfile. Without more information it’s not possible to help. Please open a separate issue.

If you want to benchmark the performance of pipenv lock, you should try to add pytmx to your dependencies...

pipenv lock used to take 1 hour or more for us (we have a pretty slow internet), and after removing pytmx, we got down to about 5 minutes and finally pipenv is more usable.

I know pytmx is a large package because it's a big monolithic lib and depends on opengl/pygame and other related things, but it shouldn't take 1 hour to pipenv lock no matter how big the package

It doesn’t take one hour for me

$ cat Pipfile

[packages]

pytmx = "*"

$ time pipenv lock --clear

Locking [dev-packages] dependencies...

Locking [packages] dependencies...

Updated Pipfile.lock (eb50ab)!

real 0m2.827s

user 0m2.287s

sys 0m0.390s

Also PyTMX is less than 20kb on PyPI, and only has one dependency to six (which is super small), so networking shouldn’t be an issue. There is likely something else going on in your environment.

You're right, it's smaller than I thought and it does not depend explicitly on pygame and such. Not sure why it was taking so long then!

I will try to find more information, but I have a top CPU and an SSD, so I still think the issue is related to our slow internet.

@techalchemy I didn't edit the file manually. I uninstalled a lot of dependencies using pipenv uninstall package_name and afterwards ran it on the server. It stayed in the lock state for a very long time.

I am not interested in spending energy on this discussion with random shots in the dark. Please provide a reproducible test case.

Here's what I hope is a reproducible test case

https://github.com/Mathspy/tic-tac-toe-NN/tree/ab6731d216c66f5e09a4dabbe383df6dc745ba18

Attempting to do

pipenv install

in this lock-less repository has so far downloaded over 700 MB while it displayed

Locking [packages] dependencies...

Will give up in a bit and rerun with --skip-lock until it's fixed

I noticed that lock was really slow and downloaded huge amount of data from files.pythonhosted.org, more than 800MB for a small project that depends on scipy flask etc.

So I sniffed the requests made to files.pythonhosted.org, and it turns out that pip or pipenv were doing completely unnecessary downloads, which makes lock painfully slow.

For example, the same version of numpy was downloaded several times in full, and it downloaded wheels for Windows and Linux although I was using a Mac.

My setup:

$ pipenv --version

pipenv, version 2018.05.18

$ pip -V

pip 18.0 from /usr/local/lib/python2.7/site-packages/pip (python 2.7)
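For anyone who wants to repeat the request sniffing described above, here is a small sketch of how a captured list of download URLs could be summarized. The URLs in `SAMPLE_URLS` and the function names are hypothetical; the only real convention relied on is that the platform tag is the last dash-separated field of a wheel filename.

```python
import posixpath
from collections import Counter
from urllib.parse import urlparse

# Hypothetical captured requests, not taken from a real capture.
SAMPLE_URLS = [
    "https://files.pythonhosted.org/packages/aa/numpy-1.14.5-cp36-cp36m-macosx_10_6_intel.whl",
    "https://files.pythonhosted.org/packages/aa/numpy-1.14.5-cp36-cp36m-macosx_10_6_intel.whl",
    "https://files.pythonhosted.org/packages/bb/numpy-1.14.5-cp36-cp36m-manylinux1_x86_64.whl",
    "https://files.pythonhosted.org/packages/cc/numpy-1.14.5-cp36-cp36m-win_amd64.whl",
    "https://files.pythonhosted.org/packages/dd/six-1.11.0-py2.py3-none-any.whl",
]


def platform_tag(filename):
    """Return the platform tag of a wheel filename, or None for non-wheels."""
    if not filename.endswith(".whl"):
        return None
    return filename[: -len(".whl")].split("-")[-1]


def summarize_downloads(urls, platform_hint):
    """Report files fetched more than once and wheels built for other platforms."""
    names = [posixpath.basename(urlparse(url).path) for url in urls]
    counts = Counter(names)
    repeated = {name: n for name, n in counts.items() if n > 1}
    foreign = sorted(
        name
        for name in counts
        if platform_tag(name) not in (None, "any")
        and platform_hint not in platform_tag(name)
    )
    return repeated, foreign
```

Calling `summarize_downloads(SAMPLE_URLS, "macosx")` separates repeated fetches from wheels for other platforms, which are the two kinds of waste reported in this comment.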

are additional Pipfiles helpful for debugging here?

Most likely @AlJohri, also any info about running processes / locks / io would help

Been stuck here for about 5 minutes already. First thought it might have been some sort of pip install issues and reinstalled everything fresh via Homebrew, but still the same problem. Any ideas why?

Finally finished after about 6 - 7 minutes. Pretty new to Python and Pipenv, so a little help about where to find the necessary files for debugging would be great! :)

this is pretty bad to the point I am afraid to install new python libs or upgrade existing ones.

After watching one of the talks from the creator, I decided to use pipenv. But it is too slow.

Thanks for your insightful feedback.

@techalchemy If there is something I could do to help and fix this. I am very happy to contribute.

I noticed that lock was really slow and downloaded huge amount of data from files.pythonhosted.org, more than 800MB for a small project that depends on scipy flask etc.

I have a suspicion, though not conclusive evidence, that scipy is correlated with very long pipenv lock times.

really painful at times, I am installing PyPDF2 and textract; pipenv took ~10 mins to lock.

The slowness of pipenv really hinders dev process for us. I now advise everyone to stick with pip + virtualenv until this issue is resolved.

Any news on this? Any way to help?

dupe of https://github.com/pypa/pipenv/issues/1914

/ edit: btw, why does pipenv install update the versions in the lockfile? o.Ò I just ran it after locking timed out and now that I look at the new lock file I see pandas was updated from 0.23.4 to 0.24.0, numpy from 1.16.0 to 1.16.1, etc... Didn't expect that to happen unless I did pipenv update ...

I find it installs quickly and locks slowly, so as soon as you get the Installation Succeeded message you're good to continue working... unless you want to install something else...

... or need to push the lock file into some repo.

Should install be performing lock anyway, seeing that lock is already a separate command? In the meantime the install option description should specify that locking also takes place, and maybe even recommend --skip-lock.

Also, how about pinning this issue?

Pipenv is a really wonderful tool and I used to recommend it, but a project with 8 modules can't lock... it just times out. There doesn't seem to be any interest in solving this issue and that is very frustrating. I read you can get dependencies without downloading from PyPI now; is that a workaround for this issue? I don't see any talk about that option here. At the moment the tool is unusable for my purposes.

pexpect.exceptions.TIMEOUT: <pexpect.popen_spawn.PopenSpawn object at 0x10e12e400>

searcher: searcher_re:

0: re.compile('\n')

<pexpect.popen_spawn.PopenSpawn object at 0x10e12e400>

searcher: searcher_re:

0: re.compile('\n')

pipenv lock -r 87.22s user 18.57s system 11% cpu 15:02.77 total

How is this not the main priority for this project? Pipenv is so slow it's pretty much unusable. And not only in some uncommon use cases; it's always super slow.

Without knowing too much about what's going on under-the-hood, I was thinking that this might be solved nicely with a local cache of the dependency graph.

Think about it: if we cache that package x-1.2.3 depends on y>=3.4, we can locally do a lot of the work that's currently done in downloading packages one at a time, expanding them, and checking dependencies. The lock phase would then be as simple as:

- Compare the Pipfile to the cache and make sure we have everything we need.

- If not, download anything new and calculate dependencies there

- Cache the changes

- Install.

In every case, while the first time might be slow, subsequent locks would be painless, no?
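The proposal above can be sketched in a few lines of Python. This is a toy under stated assumptions, not something pipenv provides: `DependencyCache`, its JSON file layout, and the `fetch` callback are all hypothetical. The verbose log earlier in the thread ("not in cache, need to check index") suggests some cache already exists, and this sketch does not model it.

```python
import json
from pathlib import Path


class DependencyCache:
    """Remember which requirements each pinned package version declares."""

    def __init__(self, path):
        self.path = Path(path)
        # Load earlier results if the cache file already exists.
        self.data = json.loads(self.path.read_text()) if self.path.exists() else {}
        self.misses = 0

    def dependencies(self, name, version, fetch):
        """Return cached requirements, calling fetch(name, version) only on a miss."""
        key = f"{name}=={version}"
        if key not in self.data:
            self.misses += 1
            self.data[key] = list(fetch(name, version))
            # Persist right away so later runs can skip the expensive fetch.
            self.path.write_text(json.dumps(self.data, indent=2, sort_keys=True))
        return self.data[key]
```

Here `fetch` stands in for the slow part (downloading and inspecting a package). A pinned release normally keeps the same dependencies, so entries keyed by exact version rarely need invalidating, but the cache cannot help when a new version first appears.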

I just decided to use pipenv instead of pip for a small project. First thing I did was pipenv install -r requirements.txt. It's been locking the dependencies for about 10 minutes now. Therefore, I'm gonna go back to pip.

Guys, this issue is costing you a lot of users. I propose to address it quickly.

In my case, installing the dependencies on the server hangs the server for hours. I'm using an AWS EC2 t2.micro instance with 1 GB of RAM. That much RAM is enough for a single application with a few dependencies, but the installation takes all the memory, and the only way to get the server working again is to restart it.

This issue has been pending for years and no fix has been made. I see multiple issues being closed without any resolution.

Such a nice tool getting neglected. I will be unsubscribing from this issue, as I see it will never get resolved. I will stick to something like conda or do it manually using virtualenv.

Guess I'll give https://github.com/sdispater/poetry a shot :|

Can an admin kindly close this thread to comments? It looks like no helpful additional content is being added to the discussion.

I'd be happy to subscribe to a ticket tracking the work towards fixing the issue.

Thanks!

I suspect 99% of folks using this tool and complaining on this thread are programmers. Instead of whining, put your time where your mouth is and submit a PR.

Hello @idvorkin,

I've tried once.

It took weeks to achieve merging of the trivial fix.

Just compare the amount of discussions with the actual fix size.

I definitely do not want to submit any more fixes to this project.

So your advice is not as viable as you can assume.

@Jamim on behalf of the many users (and I suspect the admins as well), thank you for your contributions. I read your PR, and I could empathize with the frustration. However, I have to agree w/ @techalchemy on this one:

Of course we care about the library we maintain, but I would suggest that the phrasing is probably not the most effective way to have a positive interaction.

I've never met the admins, but if they're anything like me (and maybe you) they are humans with busy lives whose lives are packed with commitments even before they have energy to spend on this project.

Similarly, I bet if you (or anyone else) fixed the performance problem, you'd have slews of people who'd help you develop, test, merge it, or if required (and I highly doubt it would be) create a fork.

I'm thankful for the time the developers of this project are spending on this, but I suggest that it should be warned in bold that this project is not yet production ready right above the user testimonials in README.md, currently it's misleading people to spend precious time to replace their current pip/virtualenv stack with pipenv until they find out about this slow locking and they understand they can't use it.

until they find out about this slow locking and they understand they can't use it.

While I'd be very happy to get a speed-up, as it is indeed very slow, there is no need for such hyperbole.

I'm using pipenv just fine every day. The workflow improvements it provides greatly outweigh the occasional slowness. Locking is just not something I do all the time, as opposed to running scripts for example.

How often do you lock that it actually becomes such a problem you feel you can't use it? :open_mouth:

@bochecha my statement may be hyperbole in your opinion, but it's a fact based on my experience. I heard about pipenv from some coworkers, and today, while updating an old project and its dependencies, I thought: let's move from pip/virtualenv to pipenv as part of the update. I had to update a dependency, check how things work with it, update parts of the code if needed, and then update another dependency. Each time I ran pipenv install <something> I had to wait a ridiculously long time. At first I thought it was calculating something that it would cache for the future, as I couldn't believe this would be a problem in a package manager that claims to be production ready. After installing roughly the 10th package I started searching and found this thread. I removed Pipfile and Pipfile.lock and went back to my pip/virtualenv workflow. I was tempted to try poetry, but I couldn't risk another hour.

These things happen in the JS community, for example, but I don't expect them in the Python community. We don't have this kind of problem in our community and we should try to avoid it; a disclaimer in README.md can avoid this inconvenience, which is why I suggested it in my comment. It could have saved my time today, and I think it would save time for other newcomers, who would not have a bad experience with this project and might stay as potential future users.

I kinda agree with sassanh. Not everyone is equally affected by the issue but some of us were affected pretty bad. I have made open source projects that were not really fully functional or production ready and when it was the case I put a disclaimer on it so I don't waste people's time if they are not ready for the bumps.

I am not mad at the people who work on this project, but I am kinda mad at the person who gave a public talk about it, selling it as a great tool with zero disclaimers. As a result, I wasted quite a lot of my precious time trying to make a tool work, hoping to save time in the long run, but I ended up having to go back to pip and my own script, because pipenv didn't work in my time- and bandwidth-constrained environment.

each time I ran pipenv install

Did you know about pipenv install -r requirements.txt to lock/install only once from your existing project when you try moving it to pipenv? If you don't, the problem might be one of documentation?

Before anything I'm positive that pipenv will be a good replacement for pip/virtualenv workflow, I guess we all know that we need it and I think the day pipenv is production ready it'll be a great help for many people/projects.

@bochecha as I explained I had to install a newer version of a package, do some stuff and then go with the next package, maybe if I did this process with pip and then migrated to pipenv I wouldn't notice the problem at all, but I first migrated to pipenv and then did the package updates one by one and it was really annoying. I'm glad it works for your usecase, but I'm sure it doesn't work for many people like me (take a look at the comments above). It should be mentioned in the README.md, at least it should be mentioned that "each locking may take a long time, so if your usecase includes installing/removing lots of packages quickly you should avoid using pipenv until this issue is resolved" (again in bold, and on top of testimonials) if you announce the problems before anyone is affected by it, everyone will be thankful, if the problems affect others and you didn't warn them, everyone will get angry.

Agreed. I started looking into poetry and even thought of adding another user to my OS per project instead of using pipenv again. If it's not working fine for casual use cases with the default settings, it's broken imho.

It's a super useful tool! There's just this one thing that makes it super useless to me. And sadly I have little time to contribute :|

Sure, it is annoying waiting for a lock when having to do multiple installs mid dev session, but this can be managed.

The important thing is that a lock file is generated before pushing local changes to the repo. I make judicious use of the --skip-lock flag during dev sessions, and run pipenv lock once at the end before I commit.

Thanks for the project. But,

Locking is verrrrrrrrrrrrrrrrrrrrrrrrrrrrrry slowwwwwwwwwwwwwwwwwwwwwwwwwwwww.

PIP_NO_CACHE_DIR=off

Setting this environment variable makes locking faster, provided a pip package cache already exists.

Hi @yssource and everyone,

Thanks for the project. But,

Locking is verrrrrrrrrrrrrrrrrrrrrrrrrrrrrry slowwwwwwwwwwwwwwwwwwwwwwwwwwwww.

This project seems to be dead, so if you want to eliminate the speed issue please consider migrating to Poetry which is significantly faster.

@Jamim , thanks for suggesting Poetry. Personally, for some reason I did not come across it. After reading its readme it seems worth trying. It lists some benefits over Pipenv as well (https://github.com/sdispater/poetry/#what-about-pipenv).

Having said that, the project being dead is a gross overstatement, and if I were in pipenv authors' shoes, I would find it disrespectful. The author replied in the issues section just yesterday. It's just this locking issue being overlooked, probably because it is hard to fix.

For the record, Poetry suffers from performance issues as well:

https://github.com/sdispater/poetry/issues/338

I have the same problem in all of my projects.

The cause seems to be pylint.

Pipenv (pip) can install it successfully, but locking takes forever!

pipenv, version 2018.11.26

Minimal working example

djbrown@DESKTOP-65P6D75:~$ mkdir test

djbrown@DESKTOP-65P6D75:~$ cd test

djbrown@DESKTOP-65P6D75:~/test$ pipenv install --dev pylint --verbose

Creating a virtualenv for this project…

Pipfile: /home/djbrown/test/Pipfile

Using /usr/bin/python3 (3.6.9) to create virtualenv…

⠸ Creating virtual environment...Already using interpreter /usr/bin/python3

Using base prefix '/usr'

New python executable in /home/djbrown/.local/share/virtualenvs/test-PW-auWy_/bin/python3

Also creating executable in /home/djbrown/.local/share/virtualenvs/test-PW-auWy_/bin/python

Installing setuptools, pip, wheel...done.

✔ Successfully created virtual environment!

Virtualenv location: /home/djbrown/.local/share/virtualenvs/test-PW-auWy_

Creating a Pipfile for this project…

Installing pylint…

⠋ Installing...Installing 'pylint'

$ ['/home/djbrown/.local/share/virtualenvs/test-PW-auWy_/bin/pip', 'install', '--verbose', '--upgrade', 'pylint', '-i', 'https://pypi.org/simple']

Adding pylint to Pipfile's [dev-packages]…

✔ Installation Succeeded

Pipfile.lock not found, creating…

Locking [dev-packages] dependencies…

⠇ Locking...

I heard about pipenv a lot and tried it today.

The locking is also very slow for me. It's been around 2 minutes already and it's still stuck on locking.

Downloading is pretty fast, but issue is with locking.

Is this issue resolved?

I am using Pop os 19.10, pipenv, version 11.9.0 from apt, python 3.7.5.

I want to draw attention to this excellent comment from #1914 on the same topic https://github.com/pypa/pipenv/issues/1914#issuecomment-457965038 which suggests that downloading and executing each dependency is not necessary any longer.

I wonder if any devs could comment on the feasibility of this approach.

I've noticed that it's actually faster to remove the environment and recreate it from scratch to update the lockfile.

This is true both for running pipenv lock and pipenv install some-package

I really like pipenv but not as much as I like my bandwidth and time. So I end up solving the issue using:

$ pipenv --rm

$ virtualenv .

$ source bin/activate

$ # Create a requirement file (Cause pipenv lock -r > requirements.txt... you know!)

$ pip install -r requirements.txt

Wish the developers best of luck...

@ravexina thanks for the suggestion, I'll try for sure
