Azure-sdk-for-java: [BUG] Possible Memory Leak in Storage Blobs

Filing this here on behalf of @jdubois

This issue was reported by a customer listing a very large number of blobs.

This is using the new API for blob storage. Please note that those are my notes from discussing with the client, I haven't reproduced the case myself (for obvious reasons, you need a lot of blobs!), but I am pretty confident he is correct.

Doing a "blobContainerClient.listBlobs().streamByPage()" is causing memory leaks when you have a huge number of blobs, so we probably have some pointer left somewhere there.

Here is his solution, which does indeed work in production:

- use `streamByPage` and not `iterableByPage`, as it has a `close` method

- force clean the body of HTTP requests using the Reactor API, using `response.getRequest().setBody(Flux.never());`

- disable the buffer copies in Netty: `new NettyAsyncHttpClientBuilder().disableBufferCopy(true).build();`

All 22 comments

Thanks for the feedback! We are routing this to the appropriate team for follow-up. cc @xgithubtriage.

@kasobol-msft can you please look at this.

In @Petermarcu's description I find this part contradictory: "Doing a "blobContainerClient.listBlobs().streamByPage()" is causing memory leaks", and then in the workaround section I can see "use streamByPage and not iterableByPage, as it has a close method". It's somewhat contradictory to see the same API mentioned as both the problem and the workaround. @jdubois could you please clarify that part?

@jdubois a few other questions that could bring more clarity:

- Does the problem occur when the result of a single listBlobs call is iterated, OR is it because many listBlobs calls are leaving garbage behind? (My guess, and the repro I got, point to the first; looking for confirmation.)

- How many blobs does the customer have?

So far I came up with the following repro:

- create blob container with 20k+ blobs

- list them with page size 2

- run program with -Xmx60m
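For a sense of scale, the number of page requests a listing needs is the blob count divided by the page size, rounded up, so a page size of 2 turns a 20k-blob container into roughly ten thousand round trips. A minimal sketch of that arithmetic follows; `PageMath` and `pagesNeeded` are hypothetical names used only for this illustration and are not part of the SDK.

```java
// Hypothetical helper for illustration; not an Azure SDK type.
final class PageMath {

    // Ceiling division: how many pages are needed to list blobCount items.
    static long pagesNeeded(long blobCount, int pageSize) {
        if (blobCount < 0 || pageSize <= 0) {
            throw new IllegalArgumentException("blobCount must be >= 0 and pageSize > 0");
        }
        return (blobCount + pageSize - 1) / pageSize;
    }

    public static void main(String[] args) {
        // 20,000 blobs listed two at a time
        System.out.println(pagesNeeded(20_000, 2)); // prints 10000
    }
}
```

Any per-page object that stays reachable is therefore multiplied by the page count, which is presumably why such a small page size makes the problem show up quickly under a small heap.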

The repro code:

package com.azure.storage.blob;

import com.azure.core.http.rest.PagedIterable;
import com.azure.core.http.rest.PagedResponse;
import com.azure.storage.blob.models.BlobItem;
import com.azure.storage.blob.models.ListBlobsOptions;

import java.io.BufferedReader;
import java.io.ByteArrayInputStream;
import java.io.IOException;
import java.io.InputStreamReader;
import java.util.UUID;
import java.util.stream.Stream;

public class TestList {

    public static void main(String[] args) throws IOException {
        BlobServiceClient blobServiceClient = new BlobServiceClientBuilder()
            .connectionString("YOUR OWN")
            .buildClient();
        BlobContainerClient blobContainerClient = blobServiceClient.getBlobContainerClient("duzoblobow");
        if (!blobContainerClient.exists()) {
            blobContainerClient.create();
        }

        // Uncomment once to populate the container with empty blobs.
        // addMoreBlobs(blobContainerClient);

        // Pause so a profiler can be attached before listing starts.
        System.out.println("Press enter");
        new BufferedReader(new InputStreamReader(System.in)).readLine();
        System.out.println("Starting");

        int pageSize = 2;
        total = 0;
        // Each loop iteration lists the whole container once, page by page.
        while (true) {
            total = 0;
            PagedIterable<BlobItem> blobItemPagedIterable = blobContainerClient.listBlobs(new ListBlobsOptions().setMaxResultsPerPage(pageSize), null);
            Stream<PagedResponse<BlobItem>> pagedResponseStream = blobItemPagedIterable.streamByPage();
            pagedResponseStream
                .forEach(page -> {
                    System.out.println("Processing page");
                    page.getValue().forEach(item -> {
                        System.out.println(item.getName());
                    });
                    // Approximate running count; assumes every page is full.
                    total += pageSize;
                    System.out.println(total);
                });
            System.out.println("Press enter");
            new BufferedReader(new InputStreamReader(System.in)).readLine();
        }
    }

    // Static so the lambda in main can update it.
    static int total = 0;

    // Uploads 15000 empty blobs with random names, logging progress every 100.
    private static void addMoreBlobs(BlobContainerClient blobContainerClient) {
        for (int i = 0; i < 15000; i++) {
            blobContainerClient.getBlobClient(UUID.randomUUID().toString()).upload(new ByteArrayInputStream(new byte[0]), 0);
            if (i % 100 == 0) {
                System.out.println(i);
            }
        }
    }
}

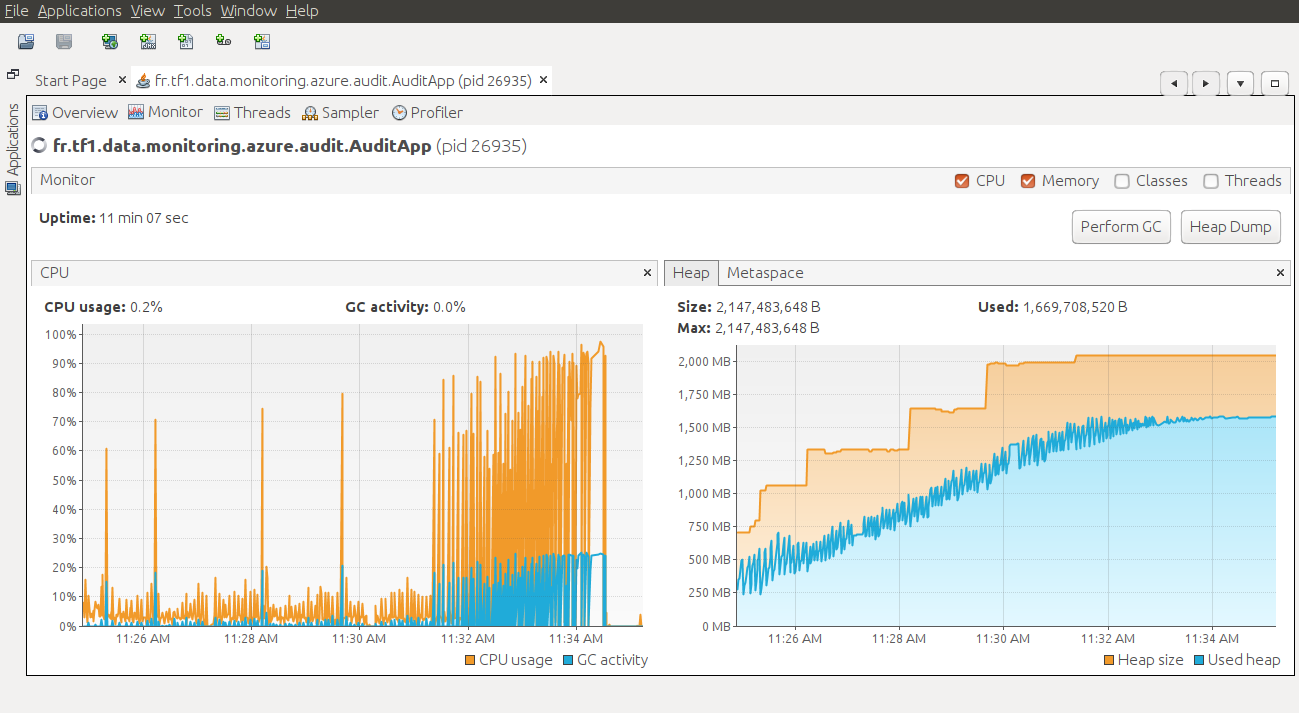

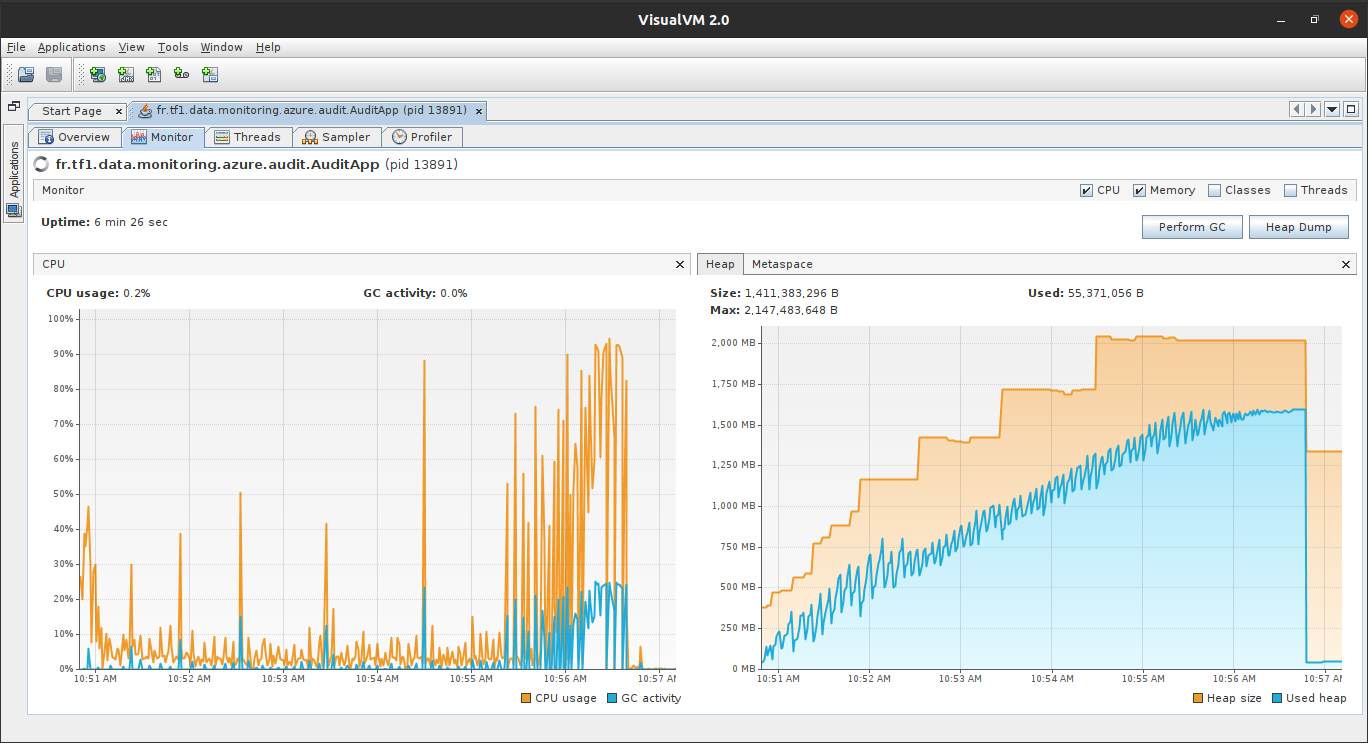

The results from two runs:

Also took a heapdump before it blew up:

It seems that the problem hides inside the PagedResponse/PagedIterable/PagedFlux family: they seem to accumulate references to each page result somehow. While debugging, this part seems to be a suspect:

@alzimmermsft could you please take a look?

@anuchandy could you please also assist here from the azure-core side?
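To make the suspicion above concrete, here is a minimal JDK-only sketch of how a paging abstraction can end up holding every page it has ever produced. It is not the SDK's code: `SketchPager`, `fetchPage`, `forEachItem` and `retainedPages` are hypothetical names invented for this illustration. When the `replay` flag is set, the pager stores each page in an unbounded buffer before handing it to the consumer, so nothing becomes garbage until the pager itself is dropped. The `FluxReplay$UnboundedReplayBuffer` frames in the stack traces further down hint at something of this shape, but that is an inference rather than a confirmed diagnosis.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.Consumer;

// Hypothetical illustration only: SketchPager is not an Azure SDK type.
final class SketchPager {

    private final boolean replay;
    private final List<List<String>> replayBuffer = new ArrayList<>();

    SketchPager(boolean replay) {
        this.replay = replay;
    }

    // Stands in for one list-blobs round trip returning a page of names.
    private static List<String> fetchPage(int pageIndex, int pageSize) {
        List<String> page = new ArrayList<>(pageSize);
        for (int i = 0; i < pageSize; i++) {
            page.add("blob-" + pageIndex + "-" + i);
        }
        return page;
    }

    // Walks all pages; in replay mode every page stays strongly reachable.
    void forEachItem(int pageCount, int pageSize, Consumer<String> consumer) {
        for (int p = 0; p < pageCount; p++) {
            List<String> page = fetchPage(p, pageSize);
            if (replay) {
                replayBuffer.add(page);
            }
            page.forEach(consumer);
        }
    }

    // Number of pages still referenced by this pager after iteration.
    int retainedPages() {
        return replayBuffer.size();
    }

    public static void main(String[] args) {
        SketchPager leaky = new SketchPager(true);
        leaky.forEachItem(10_000, 2, name -> { });
        SketchPager streaming = new SketchPager(false);
        streaming.forEachItem(10_000, 2, name -> { });
        System.out.println("replaying pager retains " + leaky.retainedPages() + " pages");
        System.out.println("streaming pager retains " + streaming.retainedPages() + " pages");
    }
}
```

With the buffer in place, heap use grows linearly with the number of pages listed, which would match an OOM that only appears on very large containers.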

The best person to help here is in fact my customer - let me ask him directly if he would like to participate in this issue.

Hi folks, I'm really glad you started to work on this one, thanks a lot.

I was trying to mock the Netty client to reproduce this memory leak.

Feel free to let me know how I can help.

Thanks @cbismuth !! Christophe is my client, of course 👍

@cbismuth Please see my long comment above ^. There are some questions I asked @jdubois - could you please provide answers to them?

That comment also contains a repro I came up with, based on the initial issue description. Please take a look at the repro and the exception traces I was getting, and let us know if this is consistent with the issue you're observing.

Also, if you are able to share any stack traces from your deployments, that'd be very helpful.

cc @BelkhousNabil

Here is a minimal code snippet to reproduce this memory leak with a container enclosing a huge number of blob items, like an Event Hub sink.

public void consumeBlobItems(final StorageAccount storageAccount,
                             final BlobContainerItem blobContainerItem,
                             final Consumer<BlobItem> consumer) {
    createBlobContainerClient(storageAccount, blobContainerItem).listBlobs()
        .streamByPage()
        .map(PagedResponse::getValue)
        .flatMap(Collection::stream)
        .forEach(consumer);
}

11:14:31.509 [reactor-http-epoll-1] ERROR r.n.channel.ChannelOperationsHandler - [id: 0x025238ad, L:/192.168.1.26:39590 - R:storagehbbtvv3prod.blob.core.windows.net/20.150.37.196:443] Error was received while reading the incoming data. The connection will be closed.

java.lang.OutOfMemoryError: Java heap space

at java.util.Arrays.copyOf(Arrays.java:3332)

at java.lang.StringCoding.safeTrim(StringCoding.java:89)

at java.lang.StringCoding.decode(StringCoding.java:230)

at java.lang.String.<init>(String.java:463)

at java.lang.String.<init>(String.java:515)

at com.azure.core.implementation.http.BufferedHttpResponse.lambda$getBodyAsString$0(BufferedHttpResponse.java:62)

at com.azure.core.implementation.http.BufferedHttpResponse$$Lambda$587/966822880.apply(Unknown Source)

at reactor.core.publisher.FluxMapFuseable$MapFuseableSubscriber.onNext(FluxMapFuseable.java:107)

at reactor.core.publisher.FluxMapFuseable$MapFuseableSubscriber.onNext(FluxMapFuseable.java:121)

at reactor.core.publisher.Operators$MonoSubscriber.complete(Operators.java:1592)

at reactor.core.publisher.MonoCollect$CollectSubscriber.onComplete(MonoCollect.java:145)

at reactor.core.publisher.FluxMapFuseable$MapFuseableSubscriber.onComplete(FluxMapFuseable.java:144)

at reactor.core.publisher.FluxReplay$UnboundedReplayBuffer.replayNormal(FluxReplay.java:551)

at reactor.core.publisher.FluxReplay$UnboundedReplayBuffer.replay(FluxReplay.java:654)

at reactor.core.publisher.FluxReplay$ReplaySubscriber.onComplete(FluxReplay.java:1218)

at reactor.core.publisher.FluxMap$MapSubscriber.onComplete(FluxMap.java:136)

at reactor.core.publisher.FluxDoFinally$DoFinallySubscriber.onComplete(FluxDoFinally.java:138)

at reactor.core.publisher.FluxMap$MapSubscriber.onComplete(FluxMap.java:136)

at reactor.netty.channel.FluxReceive.terminateReceiver(FluxReceive.java:397)

at reactor.netty.channel.FluxReceive.drainReceiver(FluxReceive.java:197)

at reactor.netty.channel.FluxReceive.onInboundComplete(FluxReceive.java:345)

at reactor.netty.channel.ChannelOperations.onInboundComplete(ChannelOperations.java:363)

at reactor.netty.channel.ChannelOperations.terminate(ChannelOperations.java:412)

at reactor.netty.http.client.HttpClientOperations.onInboundNext(HttpClientOperations.java:556)

at reactor.netty.channel.ChannelOperationsHandler.channelRead(ChannelOperationsHandler.java:91)

at io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:377)

at io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:363)

at io.netty.channel.AbstractChannelHandlerContext.fireChannelRead(AbstractChannelHandlerContext.java:355)

at io.netty.channel.CombinedChannelDuplexHandler$DelegatingChannelHandlerContext.fireChannelRead(CombinedChannelDuplexHandler.java:436)

at io.netty.handler.codec.ByteToMessageDecoder.fireChannelRead(ByteToMessageDecoder.java:321)

at io.netty.handler.codec.ByteToMessageDecoder.channelRead(ByteToMessageDecoder.java:295)

at io.netty.channel.CombinedChannelDuplexHandler.channelRead(CombinedChannelDuplexHandler.java:251)

11:14:31.518 [reactor-http-epoll-1] WARN i.n.c.AbstractChannelHandlerContext - An exception 'java.lang.OutOfMemoryError: Java heap space' [enable DEBUG level for full stacktrace] was thrown by a user handler's exceptionCaught() method while handling the following exception:

java.lang.OutOfMemoryError: Java heap space

at java.util.Arrays.copyOf(Arrays.java:3332)

at java.lang.StringCoding.safeTrim(StringCoding.java:89)

at java.lang.StringCoding.decode(StringCoding.java:230)

at java.lang.String.<init>(String.java:463)

at java.lang.String.<init>(String.java:515)

at com.azure.core.implementation.http.BufferedHttpResponse.lambda$getBodyAsString$0(BufferedHttpResponse.java:62)

at com.azure.core.implementation.http.BufferedHttpResponse$$Lambda$587/966822880.apply(Unknown Source)

at reactor.core.publisher.FluxMapFuseable$MapFuseableSubscriber.onNext(FluxMapFuseable.java:107)

at reactor.core.publisher.FluxMapFuseable$MapFuseableSubscriber.onNext(FluxMapFuseable.java:121)

at reactor.core.publisher.Operators$MonoSubscriber.complete(Operators.java:1592)

at reactor.core.publisher.MonoCollect$CollectSubscriber.onComplete(MonoCollect.java:145)

at reactor.core.publisher.FluxMapFuseable$MapFuseableSubscriber.onComplete(FluxMapFuseable.java:144)

at reactor.core.publisher.FluxReplay$UnboundedReplayBuffer.replayNormal(FluxReplay.java:551)

at reactor.core.publisher.FluxReplay$UnboundedReplayBuffer.replay(FluxReplay.java:654)

at reactor.core.publisher.FluxReplay$ReplaySubscriber.onComplete(FluxReplay.java:1218)

at reactor.core.publisher.FluxMap$MapSubscriber.onComplete(FluxMap.java:136)

at reactor.core.publisher.FluxDoFinally$DoFinallySubscriber.onComplete(FluxDoFinally.java:138)

at reactor.core.publisher.FluxMap$MapSubscriber.onComplete(FluxMap.java:136)

at reactor.netty.channel.FluxReceive.terminateReceiver(FluxReceive.java:397)

at reactor.netty.channel.FluxReceive.drainReceiver(FluxReceive.java:197)

at reactor.netty.channel.FluxReceive.onInboundComplete(FluxReceive.java:345)

at reactor.netty.channel.ChannelOperations.onInboundComplete(ChannelOperations.java:363)

at reactor.netty.channel.ChannelOperations.terminate(ChannelOperations.java:412)

at reactor.netty.http.client.HttpClientOperations.onInboundNext(HttpClientOperations.java:556)

at reactor.netty.channel.ChannelOperationsHandler.channelRead(ChannelOperationsHandler.java:91)

at io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:377)

at io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:363)

at io.netty.channel.AbstractChannelHandlerContext.fireChannelRead(AbstractChannelHandlerContext.java:355)

at io.netty.channel.CombinedChannelDuplexHandler$DelegatingChannelHandlerContext.fireChannelRead(CombinedChannelDuplexHandler.java:436)

at io.netty.handler.codec.ByteToMessageDecoder.fireChannelRead(ByteToMessageDecoder.java:321)

at io.netty.handler.codec.ByteToMessageDecoder.channelRead(ByteToMessageDecoder.java:295)

at io.netty.channel.CombinedChannelDuplexHandler.channelRead(CombinedChannelDuplexHandler.java:251)

The suggested workarounds as implemented below don't fix this issue:

public void consumeBlobItems(final StorageAccount storageAccount,
                             final BlobContainerItem blobContainerItem,
                             final Consumer<BlobItem> consumer) {
    createBlobContainerClient(storageAccount, blobContainerItem).listBlobs()
        .iterableByPage()
        .forEach(response -> {
            response.getValue().forEach(consumer);
            response.getRequest()
                .setBody(Flux.never());
            try {
                response.close();
            } catch (final IOException e) {
                LOGGER.error(e.getMessage(), e);
            }
        });
}

private HttpClient createHttpClient() {
    return new NettyAsyncHttpClientBuilder().disableBufferCopy(true)
        .build();
}

11:34:31.618 [reactor-http-epoll-1] ERROR r.n.channel.ChannelOperationsHandler - [id: 0x2966f906, L:/192.168.1.26:39928 - R:storagehbbtvv3prod.blob.core.windows.net/20.150.37.196:443] Error was received while reading the incoming data. The connection will be closed.

java.lang.OutOfMemoryError: Java heap space

at java.util.Arrays.copyOfRange(Arrays.java:3664)

at java.lang.StringBuffer.toString(StringBuffer.java:669)

at java.util.regex.Matcher.replaceFirst(Matcher.java:1006)

at java.lang.String.replaceFirst(String.java:2178)

at com.azure.core.util.serializer.JacksonAdapter.deserialize(JacksonAdapter.java:166)

at com.azure.core.implementation.serializer.HttpResponseBodyDecoder.deserializeBody(HttpResponseBodyDecoder.java:169)

at com.azure.core.implementation.serializer.HttpResponseBodyDecoder.lambda$decode$1(HttpResponseBodyDecoder.java:81)

at com.azure.core.implementation.serializer.HttpResponseBodyDecoder$$Lambda$588/1106952291.apply(Unknown Source)

at reactor.core.publisher.MonoFlatMap$FlatMapMain.onNext(MonoFlatMap.java:118)

at reactor.core.publisher.FluxMapFuseable$MapFuseableSubscriber.onNext(FluxMapFuseable.java:121)

at reactor.core.publisher.FluxMapFuseable$MapFuseableSubscriber.onNext(FluxMapFuseable.java:121)

at reactor.core.publisher.Operators$MonoSubscriber.complete(Operators.java:1592)

at reactor.core.publisher.MonoCollect$CollectSubscriber.onComplete(MonoCollect.java:145)

at reactor.core.publisher.FluxMapFuseable$MapFuseableSubscriber.onComplete(FluxMapFuseable.java:144)

at reactor.core.publisher.FluxReplay$UnboundedReplayBuffer.replayNormal(FluxReplay.java:551)

at reactor.core.publisher.FluxReplay$UnboundedReplayBuffer.replay(FluxReplay.java:654)

at reactor.core.publisher.FluxReplay$ReplaySubscriber.onComplete(FluxReplay.java:1218)

at reactor.core.publisher.FluxMap$MapSubscriber.onComplete(FluxMap.java:136)

at reactor.core.publisher.FluxDoFinally$DoFinallySubscriber.onComplete(FluxDoFinally.java:138)

at reactor.core.publisher.FluxMap$MapSubscriber.onComplete(FluxMap.java:136)

at reactor.netty.channel.FluxReceive.terminateReceiver(FluxReceive.java:397)

at reactor.netty.channel.FluxReceive.drainReceiver(FluxReceive.java:197)

at reactor.netty.channel.FluxReceive.onInboundComplete(FluxReceive.java:345)

at reactor.netty.channel.ChannelOperations.onInboundComplete(ChannelOperations.java:363)

at reactor.netty.channel.ChannelOperations.terminate(ChannelOperations.java:412)

at reactor.netty.http.client.HttpClientOperations.onInboundNext(HttpClientOperations.java:556)

at reactor.netty.channel.ChannelOperationsHandler.channelRead(ChannelOperationsHandler.java:91)

at io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:377)

at io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:363)

at io.netty.channel.AbstractChannelHandlerContext.fireChannelRead(AbstractChannelHandlerContext.java:355)

at io.netty.channel.CombinedChannelDuplexHandler$DelegatingChannelHandlerContext.fireChannelRead(CombinedChannelDuplexHandler.java:436)

at io.netty.handler.codec.ByteToMessageDecoder.fireChannelRead(ByteToMessageDecoder.java:321)

11:34:31.627 [reactor-http-epoll-1] WARN i.n.c.AbstractChannelHandlerContext - An exception 'java.lang.OutOfMemoryError: Java heap space' [enable DEBUG level for full stacktrace] was thrown by a user handler's exceptionCaught() method while handling the following exception:

java.lang.OutOfMemoryError: Java heap space

at java.util.Arrays.copyOfRange(Arrays.java:3664)

at java.lang.StringBuffer.toString(StringBuffer.java:669)

at java.util.regex.Matcher.replaceFirst(Matcher.java:1006)

at java.lang.String.replaceFirst(String.java:2178)

at com.azure.core.util.serializer.JacksonAdapter.deserialize(JacksonAdapter.java:166)

at com.azure.core.implementation.serializer.HttpResponseBodyDecoder.deserializeBody(HttpResponseBodyDecoder.java:169)

at com.azure.core.implementation.serializer.HttpResponseBodyDecoder.lambda$decode$1(HttpResponseBodyDecoder.java:81)

at com.azure.core.implementation.serializer.HttpResponseBodyDecoder$$Lambda$588/1106952291.apply(Unknown Source)

at reactor.core.publisher.MonoFlatMap$FlatMapMain.onNext(MonoFlatMap.java:118)

at reactor.core.publisher.FluxMapFuseable$MapFuseableSubscriber.onNext(FluxMapFuseable.java:121)

at reactor.core.publisher.FluxMapFuseable$MapFuseableSubscriber.onNext(FluxMapFuseable.java:121)

at reactor.core.publisher.Operators$MonoSubscriber.complete(Operators.java:1592)

at reactor.core.publisher.MonoCollect$CollectSubscriber.onComplete(MonoCollect.java:145)

at reactor.core.publisher.FluxMapFuseable$MapFuseableSubscriber.onComplete(FluxMapFuseable.java:144)

at reactor.core.publisher.FluxReplay$UnboundedReplayBuffer.replayNormal(FluxReplay.java:551)

at reactor.core.publisher.FluxReplay$UnboundedReplayBuffer.replay(FluxReplay.java:654)

at reactor.core.publisher.FluxReplay$ReplaySubscriber.onComplete(FluxReplay.java:1218)

at reactor.core.publisher.FluxMap$MapSubscriber.onComplete(FluxMap.java:136)

at reactor.core.publisher.FluxDoFinally$DoFinallySubscriber.onComplete(FluxDoFinally.java:138)

at reactor.core.publisher.FluxMap$MapSubscriber.onComplete(FluxMap.java:136)

at reactor.netty.channel.FluxReceive.terminateReceiver(FluxReceive.java:397)

at reactor.netty.channel.FluxReceive.drainReceiver(FluxReceive.java:197)

at reactor.netty.channel.FluxReceive.onInboundComplete(FluxReceive.java:345)

at reactor.netty.channel.ChannelOperations.onInboundComplete(ChannelOperations.java:363)

at reactor.netty.channel.ChannelOperations.terminate(ChannelOperations.java:412)

at reactor.netty.http.client.HttpClientOperations.onInboundNext(HttpClientOperations.java:556)

at reactor.netty.channel.ChannelOperationsHandler.channelRead(ChannelOperationsHandler.java:91)

at io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:377)

at io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:363)

at io.netty.channel.AbstractChannelHandlerContext.fireChannelRead(AbstractChannelHandlerContext.java:355)

at io.netty.channel.CombinedChannelDuplexHandler$DelegatingChannelHandlerContext.fireChannelRead(CombinedChannelDuplexHandler.java:436)

at io.netty.handler.codec.ByteToMessageDecoder.fireChannelRead(ByteToMessageDecoder.java:321)

@kasobol-msft, you're right: the problem occurs when the result of a single listBlobs API call is iterated, because in my use case I iterate over a single container in a storage account.

There are probably more than 100 000 blob items as Event Hub flushes non-empty files every 5 minutes.

@cbismuth, also, to rule out or confirm any possible issue in the async-to-sync conversion and associated batching, would you be able to try the equivalent async code in your environment and see whether the same issue happens?

BlobServiceAsyncClient blobServiceClient = new BlobServiceClientBuilder()
    .endpoint("https://<account-name>.blob.core.windows.net")
    .credential(StorageSharedKeyCredential.fromConnectionString("<connection-string>"))
    .buildAsyncClient();

BlobContainerAsyncClient blobContainerClient
    = blobServiceClient.getBlobContainerAsyncClient("<container-name>");

CountDownLatch latch = new CountDownLatch(1);
//
blobContainerClient.listBlobs()
    .doOnNext(blobItem -> {
        // consume blob here
    }).doOnTerminate(() -> {
        latch.countDown();
    })
    .subscribe();
//
try {
    latch.await();
} catch (InterruptedException ie) {
    throw new RuntimeException(ie);
}
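The latch in the snippet above is what turns the asynchronous listing back into a blocking call: the caller parks on `await()` until the pipeline signals termination. As written, the pattern neither bounds the wait nor reports a failure back to the caller. The following JDK-only sketch shows one way to add both, under the assumption that a single-thread executor is an acceptable stand-in for the Reactor pipeline; `LatchWaitSketch` and `consumeAll` are hypothetical names, not SDK APIs.

```java
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicInteger;
import java.util.concurrent.atomic.AtomicReference;
import java.util.function.IntConsumer;

// Hypothetical illustration; the executor stands in for an async listing.
final class LatchWaitSketch {

    // Blocks until the background "listing" terminates, fails, or times out.
    static int consumeAll(int itemCount, IntConsumer consumer, long timeoutSeconds) {
        CountDownLatch latch = new CountDownLatch(1);
        AtomicInteger consumed = new AtomicInteger();
        AtomicReference<Throwable> failure = new AtomicReference<>();
        ExecutorService executor = Executors.newSingleThreadExecutor();
        try {
            executor.execute(() -> {
                try {
                    for (int i = 0; i < itemCount; i++) {
                        consumer.accept(i);
                        consumed.incrementAndGet();
                    }
                } catch (Throwable t) {
                    failure.set(t); // remember the error for the waiting thread
                } finally {
                    latch.countDown(); // signal termination on success or failure
                }
            });
            if (!latch.await(timeoutSeconds, TimeUnit.SECONDS)) {
                throw new IllegalStateException("listing did not terminate in time");
            }
        } catch (InterruptedException ie) {
            Thread.currentThread().interrupt(); // preserve the interrupt flag
            throw new IllegalStateException("interrupted while waiting", ie);
        } finally {
            executor.shutdownNow();
        }
        if (failure.get() != null) {
            throw new IllegalStateException("listing failed", failure.get());
        }
        return consumed.get();
    }

    public static void main(String[] args) {
        System.out.println(consumeAll(1_000, i -> { }, 5)); // prints 1000
    }
}
```

Counting the latch down in a `finally` block plays the same role as `doOnTerminate` in the Reactor version: the waiting thread is released whether the work completed or failed.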

@anuchandy, the memory leak is still there with the async behavior.

public void consumeBlobItems(final StorageAccount storageAccount,
                             final BlobContainerItem blobContainerItem,
                             final Consumer<BlobItem> consumer) {
    consumeBlobItemsAsync(storageAccount, blobContainerItem, consumer);
}

private void consumeBlobItemsAsync(final StorageAccount storageAccount,
                                   final BlobContainerItem blobContainerItem,
                                   final Consumer<BlobItem> consumer) {
    final CountDownLatch latch = new CountDownLatch(1);

    createBlobContainerClientAsync(storageAccount, blobContainerItem).listBlobs()
        .doOnNext(consumer)
        .doOnTerminate(latch::countDown)
        .subscribe();

    try {
        latch.await();
    } catch (final Exception e) {
        throw new RuntimeException(e);
    }
}

private BlobContainerAsyncClient createBlobContainerClientAsync(final StorageAccount storageAccount, final BlobContainerItem blobContainerItem) {
    return new BlobServiceClientBuilder().endpoint(storageAccount.endPoints().primary().blob())
        .credential(getCredential(storageAccount))
        .buildAsyncClient()
        .getBlobContainerAsyncClient(blobContainerItem.getName());
}

10:56:39.024 [reactor-http-epoll-1] ERROR r.n.channel.ChannelOperationsHandler - [id: 0x59c65405, L:/192.168.1.26:34918 - R:storagehbbtvv3prod.blob.core.windows.net/20.150.37.196:443] Error was received while reading the incoming data. The connection will be closed.

java.lang.OutOfMemoryError: Java heap space

at java.util.Arrays.copyOf(Arrays.java:3332)

at java.lang.StringCoding.safeTrim(StringCoding.java:89)

at java.lang.StringCoding.decode(StringCoding.java:230)

at java.lang.String.<init>(String.java:463)

at java.lang.String.<init>(String.java:515)

at com.azure.core.implementation.http.BufferedHttpResponse.lambda$getBodyAsString$0(BufferedHttpResponse.java:62)

at com.azure.core.implementation.http.BufferedHttpResponse$$Lambda$596/221249371.apply(Unknown Source)

at reactor.core.publisher.FluxMapFuseable$MapFuseableSubscriber.onNext(FluxMapFuseable.java:107)

at reactor.core.publisher.FluxMapFuseable$MapFuseableSubscriber.onNext(FluxMapFuseable.java:121)

at reactor.core.publisher.Operators$MonoSubscriber.complete(Operators.java:1592)

at reactor.core.publisher.MonoCollect$CollectSubscriber.onComplete(MonoCollect.java:145)

at reactor.core.publisher.FluxMapFuseable$MapFuseableSubscriber.onComplete(FluxMapFuseable.java:144)

at reactor.core.publisher.FluxReplay$UnboundedReplayBuffer.replayNormal(FluxReplay.java:551)

at reactor.core.publisher.FluxReplay$UnboundedReplayBuffer.replay(FluxReplay.java:654)

at reactor.core.publisher.FluxReplay$ReplaySubscriber.onComplete(FluxReplay.java:1218)

at reactor.core.publisher.FluxMap$MapSubscriber.onComplete(FluxMap.java:136)

at reactor.core.publisher.FluxDoFinally$DoFinallySubscriber.onComplete(FluxDoFinally.java:138)

at reactor.core.publisher.FluxMap$MapSubscriber.onComplete(FluxMap.java:136)

at reactor.netty.channel.FluxReceive.terminateReceiver(FluxReceive.java:397)

at reactor.netty.channel.FluxReceive.drainReceiver(FluxReceive.java:197)

at reactor.netty.channel.FluxReceive.onInboundComplete(FluxReceive.java:345)

at reactor.netty.channel.ChannelOperations.onInboundComplete(ChannelOperations.java:363)

at reactor.netty.channel.ChannelOperations.terminate(ChannelOperations.java:412)

at reactor.netty.http.client.HttpClientOperations.onInboundNext(HttpClientOperations.java:556)

at reactor.netty.channel.ChannelOperationsHandler.channelRead(ChannelOperationsHandler.java:91)

at io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:377)

at io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:363)

at io.netty.channel.AbstractChannelHandlerContext.fireChannelRead(AbstractChannelHandlerContext.java:355)

at io.netty.channel.CombinedChannelDuplexHandler$DelegatingChannelHandlerContext.fireChannelRead(CombinedChannelDuplexHandler.java:436)

at io.netty.handler.codec.ByteToMessageDecoder.fireChannelRead(ByteToMessageDecoder.java:321)

at io.netty.handler.codec.ByteToMessageDecoder.channelRead(ByteToMessageDecoder.java:295)

at io.netty.channel.CombinedChannelDuplexHandler.channelRead(CombinedChannelDuplexHandler.java:251)

10:56:39.031 [reactor-http-epoll-1] WARN i.n.c.AbstractChannelHandlerContext - An exception 'java.lang.OutOfMemoryError: Java heap space' [enable DEBUG level for full stacktrace] was thrown by a user handler's exceptionCaught() method while handling the following exception:

java.lang.OutOfMemoryError: Java heap space

at java.util.Arrays.copyOf(Arrays.java:3332)

at java.lang.StringCoding.safeTrim(StringCoding.java:89)

at java.lang.StringCoding.decode(StringCoding.java:230)

at java.lang.String.<init>(String.java:463)

at java.lang.String.<init>(String.java:515)

at com.azure.core.implementation.http.BufferedHttpResponse.lambda$getBodyAsString$0(BufferedHttpResponse.java:62)

at com.azure.core.implementation.http.BufferedHttpResponse$$Lambda$596/221249371.apply(Unknown Source)

at reactor.core.publisher.FluxMapFuseable$MapFuseableSubscriber.onNext(FluxMapFuseable.java:107)

at reactor.core.publisher.FluxMapFuseable$MapFuseableSubscriber.onNext(FluxMapFuseable.java:121)

at reactor.core.publisher.Operators$MonoSubscriber.complete(Operators.java:1592)

at reactor.core.publisher.MonoCollect$CollectSubscriber.onComplete(MonoCollect.java:145)

at reactor.core.publisher.FluxMapFuseable$MapFuseableSubscriber.onComplete(FluxMapFuseable.java:144)

at reactor.core.publisher.FluxReplay$UnboundedReplayBuffer.replayNormal(FluxReplay.java:551)

at reactor.core.publisher.FluxReplay$UnboundedReplayBuffer.replay(FluxReplay.java:654)

at reactor.core.publisher.FluxReplay$ReplaySubscriber.onComplete(FluxReplay.java:1218)

at reactor.core.publisher.FluxMap$MapSubscriber.onComplete(FluxMap.java:136)

at reactor.core.publisher.FluxDoFinally$DoFinallySubscriber.onComplete(FluxDoFinally.java:138)

at reactor.core.publisher.FluxMap$MapSubscriber.onComplete(FluxMap.java:136)

at reactor.netty.channel.FluxReceive.terminateReceiver(FluxReceive.java:397)

at reactor.netty.channel.FluxReceive.drainReceiver(FluxReceive.java:197)

at reactor.netty.channel.FluxReceive.onInboundComplete(FluxReceive.java:345)

at reactor.netty.channel.ChannelOperations.onInboundComplete(ChannelOperations.java:363)

at reactor.netty.channel.ChannelOperations.terminate(ChannelOperations.java:412)

at reactor.netty.http.client.HttpClientOperations.onInboundNext(HttpClientOperations.java:556)

at reactor.netty.channel.ChannelOperationsHandler.channelRead(ChannelOperationsHandler.java:91)

at io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:377)

at io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:363)

at io.netty.channel.AbstractChannelHandlerContext.fireChannelRead(AbstractChannelHandlerContext.java:355)

at io.netty.channel.CombinedChannelDuplexHandler$DelegatingChannelHandlerContext.fireChannelRead(CombinedChannelDuplexHandler.java:436)

at io.netty.handler.codec.ByteToMessageDecoder.fireChannelRead(ByteToMessageDecoder.java:321)

at io.netty.handler.codec.ByteToMessageDecoder.channelRead(ByteToMessageDecoder.java:295)

at io.netty.channel.CombinedChannelDuplexHandler.channelRead(CombinedChannelDuplexHandler.java:251)

Thanks for the feedback! We are routing this to the appropriate team for follow-up. cc @xgithubtriage.

Updating labels as @anuchandy is investigating this in azure-core

I should be able to start investigating this around the beginning of Oct.

@kasobol-msft, in your first reproduction, was it able to repeat the page listing? I'm trying to determine whether this change may have fixed the synchronous case: https://github.com/Azure/azure-sdk-for-java/pull/15646.

I ran the reproduction with the latest code changes in azure-core and was able to have the entire page listing run multiple times without hitting an OOM.

@alzimmermsft I've run that and went through 49670 pages before I gave up. The memory chart formed a nice, healthy sawtooth pattern. So it seems that the fix solves this issue as well.
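A sawtooth chart means used heap climbs while pages are processed and then falls back each time the garbage collector runs, whereas a leak tends to show up as a floor that keeps rising. For anyone without a profiler at hand, a rough JDK-only way to observe this is to sample `Runtime` periodically and count how often the reading drops. The sketch below is only an approximation under that assumption; `HeapSampler`, `usedHeapBytes` and `countDrops` are hypothetical names, and the byte arrays merely stand in for page bodies.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical helper for illustration; not part of the Azure SDK.
final class HeapSampler {

    // Approximate used heap as reported by the JVM at this instant.
    static long usedHeapBytes() {
        Runtime runtime = Runtime.getRuntime();
        return runtime.totalMemory() - runtime.freeMemory();
    }

    // Counts samples that are lower than their predecessor (the "teeth").
    static int countDrops(List<Long> samples) {
        int drops = 0;
        for (int i = 1; i < samples.size(); i++) {
            if (samples.get(i) < samples.get(i - 1)) {
                drops++;
            }
        }
        return drops;
    }

    public static void main(String[] args) {
        List<Long> samples = new ArrayList<>();
        for (int page = 0; page < 5_000; page++) {
            byte[] fakePageBody = new byte[64 * 1024]; // short-lived garbage, one per "page"
            fakePageBody[0] = 1;
            if (page % 50 == 0) {
                samples.add(usedHeapBytes());
            }
        }
        System.out.println("samples=" + samples.size() + ", drops=" + countDrops(samples));
    }
}
```

The exact numbers depend on the JVM, heap settings and collector, so the output is only indicative; a heap dump remains the reliable way to confirm what is being retained.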

Took a look into the asynchronous portion of the issue, this PR should tentatively fix the OOM there: https://github.com/Azure/azure-sdk-for-java/pull/15929.

Fixed in #15929

Dear awesome MS team, a quick follow up to let you know we've upgraded our compliance scan app to fully use reactive, parallel and paging capabilities of the SDK (ParallelFlux<PagedResponse<BlobItem>>), and we're now able to browse 19 110 738 files over 330 terabytes in less than one hour.

You've built a finely tuned piece of software, thanks :clap:

Thank you @cbismuth !!!
