Certbot: Default RSA key bitlength should be 3072

According to NSA and ANSSI, RSA with a 3072-bit modulus is the minimum to protect information up to TOP SECRET.

We should not be on the red line of cryptography. Secure defaults will bring the needed protection to all Internet users who visit HTTPS web servers powered by Let's Encrypt.

I would even suggest 4096 (I do it on almost all of my systems).

Thanks,

Martin

I would suggest also 4096.

But some months ago, they disagreed with making 4096 the default: https://github.com/letsencrypt/letsencrypt/issues/489

I prefer a compromise (RSA with a 3072-bit modulus) to no security at all by default.

Suggest 4096 too.

Security must be good by design and by default: no ordinary user changes the default configuration.

And there is no acceptable argument for 2048/3072 over 4096 bits (there is only a very small speed overhead).

3072 bits can lead to compatibility problems if a user agent hardcodes some key sizes (2048 and 4096 bits will be better supported than 3072).

Worse, the argument that 90-day key regeneration justifies 2048-bit keys is not a good one:

- Key regeneration breaks a lot of other TLS stack protections, like DANE/TLSA or HPKP; a hardened configuration must disable it to avoid big trouble.

- Key regeneration doesn’t mean "destruction of the past". If the web server is not correctly configured with only PFS cipher suites, NSA-like agencies can intercept and store network traffic protected with a 2048-bit key and decrypt it in 20 or 30 years, even if the corresponding keys were destroyed. 4096-bit keys protect non-PFS communication for longer.

- Even with PFS-only ciphers, web servers generally base the negotiated DH parameter on the private key size. If you use only a 2048-bit private key, your DH parameter is only 2048 bits, and so possibly weak to Logjam.

Another +1 for 4096, that's what I always use these days.

See #489 for a lot of discussion about this issue.

With LE's default settings (RSA 2048 bits, 90 days), can you try to crack it? I suggest closing this issue.

The question is not « prove you can crack it » (otherwise stay at 1024 bits, nobody has cracked it so far) but « all recommendations / the state of the art ask for at least 3072 bits » (NSA, NIST, ANSSI, FIPS, Qualys and more).

There are NO valid drawbacks to upgrading to 4096 bits by default.

(And I repeat: even with 90-day key renewal, if no PFS is used, the practical validity of a key is many decades even though its technical validity is only 90 days. And 90-day key renewal breaks a lot of other parts of the TLS stack (TLSA, HPKP, cert pinning), so a sane sysadmin MUST disable it and reuse a key for at least 120 days, and 1 year in practice.)

However, in the Alexa top 1,000,000 websites, over 89% of SSL/TLS enabled websites use RSA 2048 bits, including many online banking websites.

Yep, banks also have SSLv3 and MD5, if you want: https://imirhil.fr/tls/#Banques%20en%20ligne

And Google/YouTube too: https://imirhil.fr/tls/alexa.html

And porn sites have no TLS at all: https://imirhil.fr/tls/porn.html

Even CAs have SSLv3, MD5, RC4 or SSLv2 (yep, you read correctly…) and 40-bit EXPORT suites: https://imirhil.fr/tls/ca.html

TLS configurations are VERY bad in the wild; that is not a reason to keep doing crap.

@devnsec-com So they are not secure. Even if IPv6 and DNSSEC are good technologies, they are not massively deployed yet. It happens the same for RSA 4096 bits. We have to move forward instead of treading water.

The only reason to use RSA 3072 or 4096 (or even longer) is future proofing, but with a 90 days only certificate, do all users really need RSA longer than 2048 by default? If you are really paranoid, go for longer keys, there is a parameter and you can change it.

All users need a 3072+ key by default.

People who really know what they are doing can reduce the size if they wish, but by default, Let’s Encrypt must provide a configuration that complies with the state of the art.

Ordinary users (99% in fact) don’t even look into the config, or care about the size of the generated key, or even know what TLS really is…

Remember, we are talking about an SSL/TLS certificate: you can have PFS support on your server, and the certificate can be revoked if necessary. We are not talking about an OpenPGP key or SSH key, which you could use for 20 years or even longer.

And many years later, when RSA 2048 is actually proven to be no longer secure, we can change the LE default to RSA 4096, and nearly all users will have moved to RSA 4096 within 90 days.

Since I've mentioned OpenPGP, I'd say that even GnuPG defaults to RSA 2048.

Read this: https://gnupg.org/faq/gnupg-faq.html#no_default_of_rsa4096

You can. You can also not have PFS. And with a 2048-bit key, you’re not secure, even with 90-day renewal. And without PFS, key destruction is useless: your data is already out in the wild and could be decrypted in a few decades.

And only a certificate can be revoked, not a key.

Keys are exactly like GPG/SSH keys: after generation, you have to handle them until they are physically wiped off the face of the earth if you use PFS, and for eternity if you don’t.

> Since I've mentioned OpenPGP, I'd say that even GnuPG defaults to RSA 2048.

GPG has real drawbacks with 4096-bit keys (only a few smartcards support more than 2048, mobile devices…). For TLS, there are none.

And for GPG, everybody currently generates 4096-bit keys; all tutorials and recommendations ask for it.

You have just made a point: instead of arguing about defaulting to RSA 2048 or longer, we should be talking about enabling PFS by default. Because no matter how long the key is, it will be broken sooner or later; RSA 2048 will, and RSA 4096 will too.

Yep, but you have no way to ensure PFS with Let’s Encrypt (and, worse, no way to ensure only PFS ciphers: you can negotiate a PFS cipher suite while the server still supports non-PFS or EXPORT suites…).

And currently, there are major drawbacks to using only PFS cipher suites (browser compatibility, see https://tls.imirhil.fr/suite/EECDH+AES:EDH+AES+aRSA).

In all cases, this is not the job of a CA.

We are not talking about CA here, but a client software, right?

No. TLS is very complicated, with a lot of layers.

No software can handle it completely.

You have no way to guess whether your user has DNSSEC or TLSA, must ensure compatibility with IE or non-standard user agents, what version of OpenSSL is used, etc.

The only thing LE can do is to raise key size…

No, when LE uses RSA 3072 or 4096 by default, someone will say he wants RSA 8192 by default for all users, this is an endless arms race.

@devnsec-com No. NSA, NIST, ANSSI, FIPS, Qualys don't recommend RSA 8192 but at least RSA 3072.

No, if somebody asks for 8192, you can say 4096 is currently safe and recommended everywhere.

Currently, this is not the case for 2048.

I can also say Yubico, Cisco, and many companies are recommending RSA 2048 at the time we are speaking.

The longer the key length the safer they are, but business companies are more pragmatic on this topic. Again, RSA 2048 is enough at current stage as a default, as long as we support longer key when needed.

Good resource for this conversation - keylength.com

@devnsec-com The Yubico and Cisco positions are valid, because they have hardware issues (HSMs, PKI and smartcards don’t support 4096 bits very well).

From Yubico : « This is not a constraint from Yubico, but rather a hardware limitation of the NXP A700x chip used within the YubiKeys »

From Cisco: 4096 bits is only available from Cisco IOS XE Release 2.4 (and hardware is difficult to upgrade), and the given page is very old (it seems not to have been updated since the 2.4 release (2009), with the same default value since at least the 2.X release…).

And just because all the other sheep are running towards the cliff doesn’t mean we must follow them…

@devnsec-com I'd add too that the Cisco documentation is about PKI and CA management, and in this field (CA certs, not end-user certs), the CA/B Forum recommends at least 2048 bits.

And there has been internal debate about including 4096 too, since 2014.

> Would we include recommendation on key size? Some people intentionally want to use 4096 bit key. Would they take advice? The document could explain the trade-off between security, compatibility, and other factors

But it seems hardware routers don’t support roots larger than 2048 bits (Cisco):

> Iñigo said that Cisco’s latest products don’t support SHA-1 and 4096-bit roots. Kelvin said he could try to work with Cisco to change that.

@devnsec-com Another internal comment from the CA/B Forum:

> Setting target dates for the next set of changes for improved security/performance (RSA-4096/ECC/SHA-512/etc)

Hello guys, It would be great to keep this thread going and hear back from LE staff.

As far as I can read, @aeris's arguments seem valid and have not been contradicted. Unless we missed something, I also expect LE to switch the default RSA key size to 4096 bits soon.

+1 for RSA 3072bit by default.

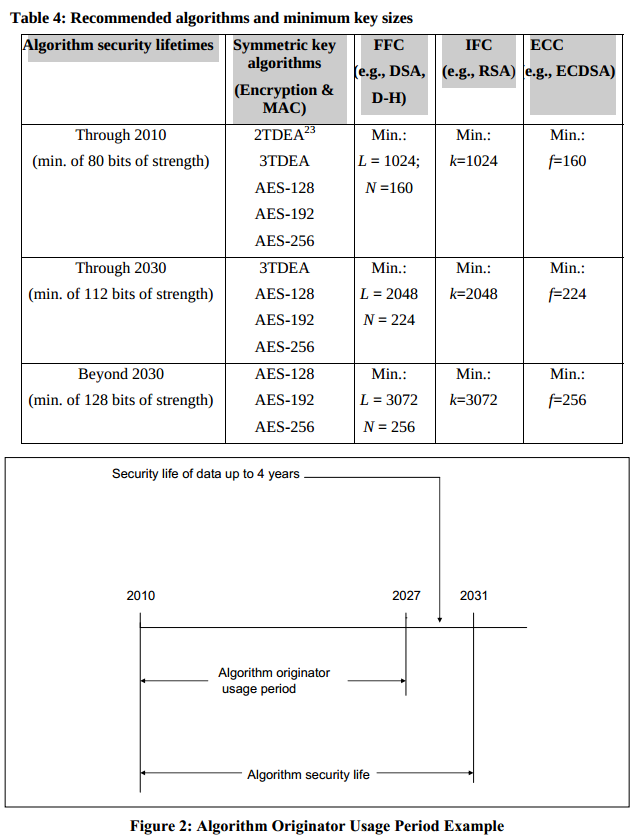

As has been mentioned, RSA 2048bit is only equivalent to 112bits of symmetric key protection. That falls below the widely-held minimum of 128bit used today. RSA 3072bit is only 128bit equivalent, so sufficient for right now (and the bare minimum recommended by the NSA as of January, 2016).
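
To make the equivalences above concrete, here is a minimal Python sketch that estimates the symmetric-equivalent strength of an RSA modulus. It assumes the approximation published in NIST's FIPS 140-2 Implementation Guidance (section 7.5), which is derived from the asymptotic cost of the general number field sieve. The function name `rsa_strength_bits` is made up for illustration, and the results are rough estimates rather than guarantees.

```python
import math


def rsa_strength_bits(modulus_bits: int) -> float:
    """Rough symmetric-equivalent strength of an RSA modulus (GNFS-based estimate)."""
    # Natural logarithm of a number that is modulus_bits bits long.
    ln_n = modulus_bits * math.log(2)
    # Assumed approximation (FIPS 140-2 IG 7.5); the constants come from that guidance.
    work = 1.923 * ln_n ** (1 / 3) * math.log(ln_n) ** (2 / 3) - 4.69
    # Convert from natural-log units to bits.
    return work / math.log(2)


for bits in (1024, 2048, 3072, 4096):
    print(f"RSA-{bits}: ~{rsa_strength_bits(bits):.0f} bits")
```

Under this approximation, 1024, 2048 and 3072 bits come out at roughly 80, 110 and 132 bits, close to the 80/112/128 figures that NIST tabulates.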

Note that all previous estimates of longevity for key sizes should be taken with healthy skepticism, because they generally assume a linear improvement in key-breaking, but new attacks can decrease security much more quickly.

RSA keys with >2048 bits are currently incompatible with Amazon Web Services. From the Amazon CloudFront Developer Guide:

> The maximum size of the public key in an SSL/TLS certificate is 2048 bits

Just thought I'd add this extra point into the conversation.

Anyone who wishes to have greater than 2048 bits RSA can simply enter --rsa-key-size 3072 or --rsa-key-size 4096 to request these key sizes.

Please note that larger RSA keys take much longer to generate (the cost grows steeply with the modulus size), and anything larger than 4096 can crash older Apple browsers.

When #2632 has been implemented the performance drawback will have nearly no impact for the majority.

So we can combine EC 256 with 3072 RSA (or what ever we prefer as default) to provide equal strength for both.

By the way, as a suggestion to support EC keys, have a command like --ec-key-type to generate an EC key and request the certificate.

I currently generate and get signed my RSA keys using certbot and my EC keys using a shell script.

@WilliamFeely that's #2625

Thanks.

As for the RSA key size, I am guessing RSA 2048 is still industry standard, and still authorized for PCI-DSS compliance, and thus why it is still default?

Here's what the latest PCI-DSS documentation (v3.2, April 2016) says:

> Refer to industry standards and best practices for information on strong cryptography and secure protocols (e.g. NIST SP 800-52 and SP 800-57, OWASP, etc.)

Fortunately, the glossary defines Strong Cryptography as follows:

> Cryptography based on industry-tested and accepted algorithms, along with key lengths that provide a minimum of 112-bits of effective key strength and proper key-management practices. Cryptography is a method to protect data and includes both encryption (which is reversible) and hashing (which is “one way”; that is, not reversible). See Hashing.
>
> At the time of publication, examples of industry-tested and accepted standards and algorithms include AES (128 bits and higher), TDES/TDEA (triple-length keys), RSA (2048 bits and higher), ECC (224 bits and higher), and DSA/D-H (2048/224 bits and higher). See the current version of NIST Special Publication 800-57 Part 1 (http://csrc.nist.gov/publications/) for more guidance on cryptographic key strengths and algorithms.
>
> _Note: The above examples are appropriate for persistent storage of cardholder data. The minimum cryptography requirements for transaction-based operations, as defined in PCI PIN and PTS, are more flexible as there are additional controls in place to reduce the level of exposure._
>
> _It is recommended that all new implementations use a minimum of 128-bits of effective key strength._

There is no need to default to 3072 for an RSA key used with a web server.

First of all, you should only be using ciphers that provide FS. This way past sessions that are logged are still secure even if your private key is later discovered.

With something like GnuPG you may want to use a larger key (I use 4096) because FS is not an option: the client and server don't negotiate their own key when the connection is established. Even with GnuPG you really don't need > 2048, and if you do, EC gives more bang for the buck, but since it isn't well supported yet by clients and a GnuPG-encrypted message may be sensitive for decades, 4096 is at least justifiable.

It is not justifiable with HTTPS if FS is used. When FS is used, you should use ECDHE ciphers when possible so that the key used between client and server is stronger. If your server still supports DHE keys, then it is the DH group whose bit length you should worry about, and there, most people use the pre-defined 2048-bit DH group that is published in the RFC. Generating your own 2048-bit DHE group is reasonable.

For the server private key, 2048 bits cannot be easily cracked and is therefore sufficient. Suggesting a default of 3072 or 4096 is like suggesting a 20-pound paperweight instead of a 15-pound one. Yes, the 20-pound one is heavier, but there is no real benefit, and your 2048-bit RSA key will have been replaced long before it is no longer safe.

Always use forward secrecy and use ECDHE ciphers whenever possible. For the private key, RSA 2048 is good enough, and if you really want better, use ECDSA rather than RSA for the key.

I guess what I'm trying to say, and correct me if I am wrong, is that when your TLS requires ciphers that use Forward Secrecy then the only real purpose of the server long term private key is identity.

The actual encryption between client and server uses a temporary key negotiated between the two, which is unrelated to the key used with the X.509 certificate.

That's what you need to worry about, never using DH parameters < 2048 and preferably using ECDHE. The private key for the server then only has relevance in establishing the identity of the server, and 2048 bit RSA is certainly good enough for that especially if you follow best practices to generate a fresh key at least once a year.

Thus this issue should be closed, and the emphasis on security should be placed on proper cipher suite selection.
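
As an illustration of what "proper cipher suite selection" could look like, here is a minimal sketch using Python's standard `ssl` module. It is not taken from Certbot or from any commenter's setup: the helper names `forward_secret_server_context` and `non_fs_ciphers` are hypothetical, and the cipher string is just one plausible OpenSSL selection that keeps only ECDHE key exchange for TLS 1.2.

```python
import ssl


def forward_secret_server_context() -> ssl.SSLContext:
    """Build a server-side context limited to forward-secret cipher suites."""
    ctx = ssl.SSLContext(ssl.PROTOCOL_TLS_SERVER)
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2
    # OpenSSL cipher string (an assumption, adjust to taste):
    # ECDHE key exchange with AES-GCM or ChaCha20 only.
    ctx.set_ciphers("ECDHE+AESGCM:ECDHE+CHACHA20")
    return ctx


def non_fs_ciphers(ctx: ssl.SSLContext) -> list:
    """Return the names of enabled suites that lack ephemeral key exchange."""
    # TLS 1.3 suites (names starting with "TLS_") always use ephemeral key exchange.
    return [
        c["name"]
        for c in ctx.get_ciphers()
        if not c["name"].startswith("TLS_") and "ECDHE" not in c["name"]
    ]


ctx = forward_secret_server_context()
print(non_fs_ciphers(ctx))
```

With a context like this, the RSA key in the certificate is only used to sign the handshake, which is the situation the comment above describes. A real server would still need `load_cert_chain` and a compatibility check against its actual clients.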

Interesting, but when both Appelbaum and Snowden recommend that I use a 4096-bit RSA key, well, hmm, I don't know, I think I might just follow that advice. Isn't identity precisely what we're trying to hide/protect here?

@jult Just stick with 4096-bit, especially if you're using GnuPG. Not until everyone upgrades to 2.1 will we have access to ed25519 OpenPGP keys, and they're not that much stronger. (I do love my ed25519 SSH + GPG keys, so do encourage everyone to move away from RSA!)

2048-bit RSA keys are expected to be breakable by an attacker with a large amount of computational resources within the coming years or so. 768-1024 bits are already broken routinely.

I don't think 3072 is a reasonable default, it's kind of out of the ordinary.

2048-bit will not be recommended past 2030, I guarantee you, and the sunset will be gradual like it was for SHA-1. I've read plenty of those government standards documents and such...

For a while people were in the practice of making 8192-bit keys and above... It's only a fun two or three-line change to the source, I'm not sure how well operable they are with others though.

.@WikiLeaks publishes an unusually strong 8192-bit RSA PGP encryption key for submissions https://t.co/xGnu72HTUN pic.twitter.com/8TZyql1NN6

— K.M. Gallagher (@ageis) May 27, 2015

@ageis Also, there's a fairly hard limit on 16384. It would require *a lot* more hacking to go bigger; I tried.

— isis agora lovecruft (@isislovecruft) September 8, 2014

@jult I won't address the Appelbaum and Snowden thing. I'm loosely acquainted with them too, but I see it as a sort of rock-star appeal to authority you're making. Look at all sources; I can recommend a few especially:

- http://csrc.nist.gov/publications/nistpubs/800-57/sp800-57_part1_rev3_general.pdf

- https://pages.nist.gov/800-63-3/sp800-63b.html

- http://www.secg.org/sec2-v2.pdf

- http://www.ecc-brainpool.org/download/Domain-parameters.pdf

- http://csrc.nist.gov/groups/ST/toolkit/documents/dss/NISTReCur.pdf

- https://safecurves.cr.yp.to/

Personally, I'm more keen on getting everybody updated to GnuPG 2.1, with the faster kbx format, and generating new ed25519 keys (thoughts @dkg or @floodyberry?), and it's my hope that the next Yubico series will support them. In fact I can reach out to check; as I did some beta testing for the YubiKey 4. I don't like how keys must be protected/passworded to be exported however, because it makes importation to the smartcard a challenge.

While I'm around this issue, I'll drop a great chart from NIST Special Publication 800-57. Many more where that came from. Perfect thing to be staring at on a Saturday night, huh?

Again, avoiding the extra load of a larger key during the client handshake is not worth the extra risk of a smaller key size. Those recommending against 4096-bit RSA fail to come up with valid arguments against it. Not to mention the fact that we're relying on proper generation of the keys, and if that ever ends up being flawed, we're way better off with 4096-bit keys.

Check this; https://github.com/certbot/certbot/issues/489#issuecomment-114083162

You want to make sure you generate Diffie-Hellman parameters of >=4096 bits as well, with something like this

`openssl dhparam -dsaparam -out /etc/ssl/dh4096.pem 4096`

if you want it to make any sense when you've enabled DH(E) in your cipher suites.

I ran a synthetic benchmark for RSA signatures just now and saw this:

(1024, 0.0008035948276519776)

(2048, 0.0025235090255737304)

(3072, 0.006322009086608887)

(4096, 0.013043954849243164)

The first number is the modulus size and the second is the average number of seconds required per signature operation.
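
To put those timings in perspective, the following Python sketch turns them into relative costs and signing throughput. The numbers are copied from the benchmark output above; the helper names `relative_cost` and `signatures_per_second` are made up for illustration, and the figures describe that one machine only.

```python
# Average seconds per RSA signature, copied from the benchmark output above.
seconds_per_signature = {
    1024: 0.0008035948276519776,
    2048: 0.0025235090255737304,
    3072: 0.006322009086608887,
    4096: 0.013043954849243164,
}


def relative_cost(bits: int, baseline: int = 2048) -> float:
    """Cost of one signature relative to the baseline modulus size."""
    return seconds_per_signature[bits] / seconds_per_signature[baseline]


def signatures_per_second(bits: int) -> float:
    """Single-threaded signing throughput implied by the measured average."""
    return 1.0 / seconds_per_signature[bits]


for bits in sorted(seconds_per_signature):
    print(
        f"RSA-{bits}: {relative_cost(bits):.2f}x the 2048-bit cost, "
        f"~{signatures_per_second(bits):.0f} signatures/s"
    )
```

On these numbers a 3072-bit signature costs roughly 2.5 times as much as a 2048-bit one, and a 4096-bit signature roughly 5 times as much.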

I found @AliceWonderMiscreations's comments about forward secrecy somewhat persuasive; does anyone happen to have some current statistics about the fractions of TLS connections which are negotiated with various ciphersuites today? If very few are now using non-PFS ciphers, then the RSA key length parameter will have minimal long-term security consequences.

FS and non-FS is a separate issue. Yes, we should encourage FS ciphersuites, and both clients and servers should be disabling non-FS ciphersuites where they know it to be possible to do so. (TLS 1.3 has no non-FS ciphersuites left, yay!) That doesn't mean that we should ignore the importance of a strong cryptographic identity key, or that we should play one off against the other. Remember that a forged identity key makes it possible to MiTM even a FS connection.

RSA 3072 appears to be the sweet spot where recommendations (like ENISA and NIST) come down on a strong security margin for keys intended for use over the next decade.

Hi @dkg,

> FS and non-FS is a separate issue. Yes, we should encourage FS ciphersuites, and both clients and servers should be disabling non-FS ciphersuites where they know it to be possible to do so. (TLS 1.3 has no non-FS ciphersuites left, yay!) That doesn't mean that we should ignore the importance of a strong cryptographic identity key, or that we should play one off against the other. Remember that a forged identity key makes it possible to MiTM even a FS connection.

In the default case, we currently only use an individual RSA subject key for 60 days (with the active MITM risk continuing for the 90-day lifetime of the certificate), and then switch to a different one. It seems like there's a considerable difference between "an attacker who can break the 2048-bit key in 90 days can perform an active attack" and "an attacker who can break the 2048-bit key at any time can recover plaintext of old sessions that it was involved in". The former is true and the latter is not true in the case of FS ciphersuites.

The recommendations about keylength typically seem to assume a threat model in which the attacker breaks a key some time after that key is used—for example the recommendations that you mention below appear to be based on a threat model in which an attacker takes years to break an individual key. With FS ciphersuites, that threat model shouldn't be that relevant for deciding the length of the identity key because a given identity key has long since ceased to be used.

> RSA 3072 appears to be the sweet spot where recommendations (like ENISA and NIST) come down on a strong security margin for keys intended for use over the next decade.

The point that I appreciate in @AliceWonderMiscreations's argument is that the 2048-bit keys that we generate aren't individually intended for use over a period of a decade, but rather for use over a period of several months, when they're used to authenticate Diffie-Hellman negotiations. So, these recommendations may be considering a different security context in most cases. Conceivably, we should be more worried about the DH modulus in most threat models because it's the limiting parameter that directly affects the long-term security level.

I'll try to get some practical data about the frequency of use of FS ciphersuites and the impact of 2048 vs. 3072-bit keys on server performance.

As an update, I heard rough estimates from a few different sources that "about 10%", "less than 10%", and "about 1%" of HTTPS connections now use non-FS ciphersuites. This is a very difficult target to define because it depends on whether it's from the browser's perspective, from the server's perspective, in a particular country, on a particular web site, for a particular type of web site, etc., and those kinds of differences appear to account for the 1%-10% discrepancy.

Personally, I'm more concerned in this regard with the fact that we keep old private keys on disk (which I filed a separate issue about, https://github.com/certbot/certbot/issues/4635). I think this should be a higher priority because I think attackers compromising a server in order to read old traffic is generally much more likely than attackers breaking 2048-bit RSA in order to do so.

I suggest the following:

1. I know @dkg has been doing a lot of work on the security of DH key exchanges in TLS in general. Can you maybe make or find some tools that help scan sites to estimate the strength of the DH exchange that's likely to occur when a client connects to each site¹? (I know that in some of the areas that you're concerned about, we can't really tell from the client side how secure the exchange is, but I'm just thinking about trying to map out the DH exchange strength to the extent that we can see and determine it.) We also know a lot of academic researchers who've been interested in this kind of topic...

2. I'll still try to fix https://github.com/certbot/certbot/issues/4635 so that old, no-longer-used private keys aren't present on web servers.

3. Maybe we can think about ongoing steps to encourage users to upgrade their web browsers, to increase the amount of DH that gets used in TLS.

With that in mind, I'll reiterate that the 2048-bit keys that we generate by default are usually not used to directly protect the confidentiality of session keys, but rather to authenticate DH key exchanges, where an attack would have to be an online, interactive, active attack within the 90-day validity of the certificate. Otherwise, the attacker will have to choose some other avenue of attack instead. It's hard for me to see that any sources of key length advice are arguing that 2048-bit keys aren't suitable for authentication during a 90-day window. They are more directly relevant to the long-term confidentiality of 1%-10% of connections, but perhaps we can put some more effort into reducing that percentage.

¹ for example, with respect to algorithm and with respect to public parameter size and re-use

I'm doing some more research on a related suggestion about RSA keys, but I'm planning to close this issue soon unless anyone has any responses to my three suggestions in my most recent reply.

How about closing this after #3349 has been done?

> How about closing this after #3349 has been done?

I'd say #6492 is more relevant and that we should close this after #6492 has been done (and #4635).

Folks, 4096 bit crypto is not free. It is massively slow in low-power CPUs. Even modern phones suffer when using it. Are you sure going from uncrackable crypto to even more uncrackable crypto is worth ruining everyone's performance and battery life?

A 4096-bit key length is a huge problem in IoT because these devices often have very limited CPU power.

The speed of private-key RSA operations drops from 3.6 to 0.6 when going from 2048 to 4096 on one of our older embedded devices.

As mentioned before, IoT and other devices without hardware acceleration can use ECDSA (#6492).

> As mentioned before, IoT and other devices without hardware acceleration can use ECDSA (#6492).

Is there hardware acceleration of RSA in many devices or is that still generally handled via software?

Hardware acceleration is available on some MCUs, but not the majority of them (it is more expensive). It is also often limited to 3072 bits, as that is the recommendation (https://www.keylength.com/).

Note that the RSA verification is quite fast. Only the generation and signature are very slow.

OPENSSL_TLS_SECURITY_LEVEL=3 requires at least 3072 bits.

Why is this issue still open? It's rather clear that the users that are requesting it seem to misunderstand the relevance in TLS, especially in 2020.

TL;DR

- RSA certs only matter for proving the identity of the server can be trusted, and are being replaced every 90 days. It won't be compromised due to key length of 2048-bit within that time just to impersonate you.

- That's assuming that, since you care about security so much, you're only allowing cipher suites that offer FS (DHE/ECDHE). DHE isn't really a concern for you either, since no modern web browser supports DHE for key exchange on websites anymore (Safari and Chrome dropped support by around 2016).

- 2048-bit RSA is ~4 billion times more difficult to attack than 1024-bit RSA. 768-bit RSA was broken in 2010 (it took 2 years with parallel computing equivalent to 2000 years on a single machine), and in 2020 we've only managed to reach 829-bit. 1024-bit is ~1 billion times more difficult than 512-bit, whilst 3072-bit is only ~65k times more difficult than 2048-bit...

- Your certificate identity is verified in a chain of trust against CA certificates, which have longer validity duration but are still 2048-bit RSA, you have no control over that. An attacker will target that if they want to impersonate you, increasing your key length beyond that isn't providing any additional benefit when the certificate isn't used for anything else.

For the majority of deployments, you should be ensuring PFS cipher suites only, your RSA certificate has no benefit here beyond 2048-bit, you're just reducing the amount of handshakes the server can support concurrently due to the additional overhead. Context of advice needs to be taken into consideration, not just parroted blindly.

Use DHE / ECDHE for key exchange only, with web servers you'll find that since 2015 browsers have progressively been removing support for DHE as well. Safari did so as a response to Logjam in 2015, Chrome dropped DHE support in 2016 (Chrome 53 (Aug 2016)) because of how many servers still negotiated with 1024-bit DH groups, and Firefox finally joined in this year (Firefox 78 (June 2020)). So basically for web, you're only dealing with ECDHE, unless you're actually aware of clients that can't support that for some reason, web browsers have supported ECC for a long time.

What possible concern are you left with to worry about now? Your certs are being renewed/replaced within 3 months, the only security concern with RSA certs is identity, that some attacker can impersonate you, but for that to happen, the attacker needs to be successful within that <90 day window.

In 2020, the record so far is breaking 829-bit RSA (10 years after we became aware that 768-bit was breakable). The team behind the previous record, 795-bit RSA, cited better algorithms and hardware as the reason they achieved their result in about half the compute time. Just look at the 900-2000 compute years these achievements were equivalent to in parallel computing power (which for 768-bit was reduced down to 2 years).

512-bit RSA is roughly around 50 bits of symmetric key strength in security. 1024-bit RSA is known as 80 bits, and 2048-bit RSA as 112 bits, whilst 3072-bit, as noted in this issue, is 128 bits. Right, fantastic, so 768-bit RSA (which is somewhere between 50 and 80 bits of symmetric security) could be broken in 2010 over the span of 2 years and with a tonne of money; a decade later we've managed to do a few symmetric-equivalent bits more. For anyone to whom this sounds like gibberish: each additional bit of symmetric key strength doubles the amount of work needed to brute-force all possibilities (on average you'd have success about halfway through all that processing, though).

Now compare 512-bit RSA to 1024-bit, there's roughly 30-bits difference in symmetric security, 2^30 is about 1 billion, that is 50-bits (1,125,899,906,842,624 potential keys) multiplied by 1 billion (1,125,899,906,842,624,000,000,000), or put simply, 1 billion times more effort/time to break. With RSA 1024-bit vs 2048-bit, you've got 32 bits difference, 2^32 is a little over 4 billion. So we've got (4,503,599,627,370,496,000,000,000,000,000,000). Now, bumping up to 3072-bit is a more modest 16-bits of extra security, 2^16 == 65,536... yeah that'll make it more "secure", not quite the same leap as our 4 billion multiplier, but we already have a significant increase in difficulty with 2048-bit RSA.
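
The multipliers in the comparison above can be reproduced exactly with a few lines of Python. This is only a sketch of the arithmetic: the strength figures are the ones quoted in the comment (the 50-bit value for 512-bit RSA is the commenter's rough estimate), and `work_factor` is a name made up for illustration.

```python
# Symmetric-equivalent strengths as quoted in the comment above
# (the 512-bit figure is the commenter's rough estimate).
STRENGTH_BITS = {512: 50, 1024: 80, 2048: 112, 3072: 128}


def work_factor(from_bits: int, to_bits: int) -> int:
    """How many times more brute-force work the larger key implies."""
    return 2 ** (STRENGTH_BITS[to_bits] - STRENGTH_BITS[from_bits])


for smaller, larger in ((512, 1024), (1024, 2048), (2048, 3072)):
    print(f"RSA-{smaller} -> RSA-{larger}: x{work_factor(smaller, larger):,}")

# RSA-512 -> RSA-1024: x1,073,741,824
# RSA-1024 -> RSA-2048: x4,294,967,296
# RSA-2048 -> RSA-3072: x65,536
```

Each step multiplies the attacker's work, so the jump from 1024 to 2048 bits is worth about 65,536 times more than the jump from 2048 to 3072 bits.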

Now let that soak in and think. 768-bit RSA in 2010 took 2 years of parallel processing equivalent to 2000 years from a single machine, and a decade later we can do a little better with all those advancements since. Still a long way from 1024-bit RSA unless someone with a tonne of money and time to waste wants to break your RSA cert, it's not like shared DH groups, the value is considerably smaller of a return, unless you're in possession of some data that's ridiculously valuable and can't be obtained through cheaper means such as physical torture / blackmail.

Even if someone had such resources to waste on breaking your RSA certificate, it's not 1024-bits, the difficulty of 2048-bit is 4 billion times that, it's not going to happen without some major breakthrough, likely a weakness where the key length isn't a concern (just look at all the other vulnerabilities in TLS that have worked around such). If you went beyond 3072-bit RSA and that was used for your encryption which happened to use 128-bit keys, then your RSA cert doesn't matter, and the 128-bit encryption key for AES can just be attacked instead afaik.

All that aside though, as stated we're just talking about RSA only having relevance for identity if you're using only PFS cipher suites, whatever your resources are, there are better ways to compromise your server or users than the cost to break 2048-bit RSA cert in less than 90 days...

> OPENSSL_TLS_SECURITY_LEVEL=3 requires at least 3072 bits.

And this has relevance why? There's a level 5 there mandating a minimum RSA key length of 15360 bits, why stop at 3072 bits with that logic?

As the linked document states, the default is 1 unless configured otherwise. Do you know of any mainstream software that compiles OpenSSL with level 3? Most of the time when OpenSSL is used, it's linked to the system's copy, since you want to receive security updates without being dependent upon each application updating its own bundled copy (assuming the software is regularly maintained/updated in the first place, which doesn't always happen in this scenario).
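
For reference, the relationship between OpenSSL security levels and RSA key sizes can be sketched in Python as below. The thresholds are taken, as an assumption, from the OpenSSL `SSL_CTX_set_security_level` documentation (levels 1 to 5 requiring 1024, 2048, 3072, 7680 and 15360 bits respectively); the helper `highest_security_level` is hypothetical and not part of any OpenSSL binding.

```python
# Minimum RSA modulus size per OpenSSL security level (assumed from the
# SSL_CTX_set_security_level documentation; level 0 permits everything).
MIN_RSA_BITS_BY_LEVEL = {1: 1024, 2: 2048, 3: 3072, 4: 7680, 5: 15360}


def highest_security_level(rsa_bits: int) -> int:
    """Highest security level whose RSA minimum is met by the given key size."""
    level = 0
    for candidate, min_bits in sorted(MIN_RSA_BITS_BY_LEVEL.items()):
        if rsa_bits >= min_bits:
            level = candidate
    return level


for bits in (2048, 3072, 4096, 15360):
    print(f"RSA-{bits}: acceptable up to security level {highest_security_level(bits)}")
```

Note that a 4096-bit key lands on the same level as a 3072-bit one in this scheme, since the next threshold is 7680 bits.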

scan sites to estimate the strength of the DH exchange that's likely to occur when a client connects to each site

@schoen Chrome dropped DHE support in 2016; they noted that 95% of DHE connections were using 1024-bit DH params. Not that it should matter much now, since modern browsers don't support DHE cipher suites anymore.

For other servers like mail, it might still be useful (although ECDHE support has been pretty good for some time, with some Linux distros like RHEL not having support until 6.5 around 2014). DHE should use the official FFDHE groups from RFC 7919, which have a minimum of 2048 bits. None of the old clients with the 1024-bit limit should matter. In the niche situations where they do, the sysadmin would be in a paid position to address it, but there's a host of security issues you can run into at that point, including potential chain of trust problems due to expired root certs on a system.

Maybe we can think about ongoing steps to encourage users to upgrade their web browsers, to increase the amount of DH that gets used in TLS.

When you made this suggestion, Safari and Chrome no longer offered DHE afaik. This would have the opposite outcome, reducing DHE, assuming that users are even able to upgrade the browsers / clients on devices that are running outdated software. Some old OS or software is locked to old TLS implementations (like macOS / iOS Secure Transport), which affects TLS version support and supported cipher suites (macOS lacked AES-GCM until a late 2015 release IIRC).

Regarding anyone who thinks 2048-bit RSA is insufficient for identity protection for 90 days, ignoring other safeguards like OCSP and CT logs, are you aware of the chain of trust? Your certificate is verified against the LetsEncrypt root and intermediate certs. If someone wants to attack a certificate, it's more valuable to compromise those than one issued to a specific site.

If the cert is being used only for authenticity and can't be compromised to decrypt any recorded traffic, then you can increase your website's key length all you like, but what protection are you gaining from this theoretical attack when an earlier cert in the chain of trust could be compromised instead? (those use 2048-bit RSA, ~including LE root~ (EDIT: My bad, LE RSA root is 4096-bit), and have much longer validity periods: 5 years for the intermediate, and until 2035 for the LE root)

Why is this issue still open? It's rather clear that the users requesting it seem to misunderstand its relevance in TLS, especially in 2020.

TL;DR

* RSA certs only matter for **proving the identity of the server can be trusted**, and are being **replaced every 90 days**. It won't be compromised due to key length of 2048-bit within that time just to impersonate you.

Seems you don't understand X.509 (and not TLS :rofl:) better… :rofl:

The cert is not the problem, the key is. And in the X.509 world, a key cannot be revoked… So the 90-day rollover is not always relevant here, and it's a problem for servers not using ECDHE/DHE but plain old RSA auth. And this is still quite often the case in 2020…

You also miscount the RSA-2048 strength. You have to consider not only the time the best current attack needs to break a given key, but also the time the best attack will need at the point in the future when your key is no longer meaningful. In the non-ECDHE/DHE case (which is, I repeat, not so quite rare), it's not 90d but 10-30y. And it's not only time that has to be taken into account, but also cost, technology evolution, and the discovery of crypto weaknesses or tricks. And it's especially visible in your own example.

RSA-256 was cracked in ~60s on a standard ~$1000 laptop in 09/2019. But RSA-512 was cracked in ~4h for ~$75 in 2015.

https://www.doyler.net/security-not-included/cracking-256-bit-rsa-keys

https://eprint.iacr.org/2015/1000.pdf

There is definitely not a [insert a big number here] times difference between the two, but more or less 1.8 times at constant cost.

It's also pretty visible in the cado-nfs benchmarks for factoring RSA keys. RSA-120 takes 1.9h, RSA-130 takes 7.5h (and not 81d as expected), RSA-140 takes 23h (and not 227y) and RSA-155 takes 5.3d (and not 7452 millennia).

This is why entities like ANSSI or NIST base their recommendations not only on current state-of-the-art computing power and cryptographic knowledge, but also on the improvements expected to occur over the timeframe in which the key is used or remains meaningful.

it's a problem for servers not using ECDHE/DHE but plain old RSA auth. And this is still quite often the case in 2020

So your advice to improve security here is to encourage using a larger key size instead of encouraging PFS only cipher suites? Why?

You also miscount the RSA-2048 strength

You have to consider not only the time the best current attack needs to break a given key, but also the time the best attack will need at the point in the future when your key is no longer meaningful

Please elaborate further. What case are you aware of in the past decade where this has been wrong? There is no known case of 1024-bit RSA being factored/broken, let alone 2048-bit, which has 32 more bits of symmetric-equivalent strength (2^32 times the work).

- 2048-bit RSA is ~4 billion times stronger than 1024-bit RSA.

- 3072-bit is only ~65k times stronger than 2048-bit (when comparing 112 bits to 128 bits of symmetric security as officially defined by NIST).

- If we assume 1024-bit (80 bits of symmetric key strength) can be compromised with 1 year of compute power, that's 4 billion years for 2048-bit.

- You're claiming some unknown technological advancement could greatly reduce that computation time, but not threaten 3072-bit RSA?

We could even treat 1024-bit as breakable within 1 second, and 2048-bit would still be the equivalent of 136 years (2^32 seconds / 31,536,000 seconds per year). Such an achievement is a long way off from happening. If you want to insist that it's somehow a valid concern, are you aware of even 512-bit being factored in 1 second? 512-bit RSA was first factored over 6 months in 1999, and since 2015 there's been a GitHub project (FaaS) which lets you easily leverage AWS to factor 512-bit RSA for less than $100 in a few hours.
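
A quick sanity check of that scaling, as a minimal Python sketch. It assumes, as the argument does, that going from 1024-bit to 2048-bit RSA costs 2^32 times more work; `scaled_years` is just an illustrative helper name.

```python
SECONDS_PER_YEAR = 365 * 24 * 60 * 60  # 31,536,000


def scaled_years(base_seconds: float, extra_bits: int) -> float:
    """Time in years once the work grows by a factor of 2**extra_bits."""
    return base_seconds * 2 ** extra_bits / SECONDS_PER_YEAR


# 1024-bit broken in 1 second -> 2048-bit takes about 136 years.
print(round(scaled_years(1, 32), 1))

# 1024-bit broken in 1 year -> 2048-bit takes about 4.3 billion years.
print(f"{scaled_years(SECONDS_PER_YEAR, 32):,.0f}")
```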

With that in mind, 1024-bit RSA being around 2^28 (~268 million) to 2^30 (~1 billion) times more difficult (I don't have exact numbers sorry, but it seems to be around that), and current academics still being far off from that, I'm not sure how you think 1024-bit RSA is anywhere near being computed that fast, which it would need to be before 2048-bit RSA being broken in this manner is even something to worry about.

The usual approach here is GNFS (General Number Field Sieve), whose resource requirements grow more infeasible the larger the key size. There's also this nice chart of RSA factoring history, and a related answer that, based on that, pinned 2048-bit RSA at being factored by the year 2048, and 1024-bit by 2015-2020, which academically has not been achieved yet.

Considering the above, I'd love you to back up how 3072-bit RSA is offering much more of an added security advantage. If 2048-bit RSA is attacked in any other way or advances at such a pace technologically, then I don't see how 3072-bit RSA would offer much additional protection?

In the non-ECDHE/DHE case (which is, I repeat, not so quite rare)

I like how you claim it's "not so quite rare". How often do you encounter a handshake that uses an RSA key exchange instead of DHE or ECDHE these days? Do you even have one example?

it's not 90d but 10-30y

I believe I was quite clear in stating a 90 day renewal period for the certificate when its purpose is only authentication, not key exchange.

And it's not only time that has to be taken into account, but also cost, technology evolution, and the discovery of crypto weaknesses or tricks. And it's especially visible in your own example.

Yes, cost. Resources I've linked you to cite 1024-bit RSA requiring terabytes of RAM to perform GNFS, with the linear algebra (matrix) step being the most costly/difficult part to handle. That's the thing with exponential difficulty: something that's cheap/easy can rise in difficulty very fast.

RSA-256 was cracked in ~60s on a standard ~$1000 laptop in 09/2019. But RSA-512 was cracked in ~4h for ~$75 in 2015.

I've already linked to a GitHub project that can perform the 512-bit factoring on AWS. I don't know the exact number of symmetric-equivalent bits for 256-bit RSA, perhaps around 30 bits? (so roughly a 1,048,576 times difference from 512-bit RSA). The 512-bit paper you link to mentions 432 CPUs (12 instances with 36 vCPUs + 60GiB RAM each) and a total of 2770 CPU hours, with 1.5-2GB of memory or storage for the matrix (I'm not too familiar with this computation, if you are feel free to chime in).

My math is probably bad here, but let's try to compare the compute used in the two cases based on that information. 2770 hours in minutes is 2770 * 60 = 166200, which we can compare to the 1 minute for 256-bit RSA: log2(166200) = 17.34, so ~2^17. That's not too far from the 2^20 estimate, and since I don't know the exact symmetric bits for either of these it may be fairly accurate, with some wiggle room for improvements in technology and algorithms, but nothing shocking?
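
The same comparison as a small Python sketch, using only the figures quoted above (2770 CPU hours for 512-bit, roughly 1 minute for 256-bit). It ignores that the two runs used different hardware, so treat the result as a rough order of magnitude only.

```python
import math

cpu_hours_512 = 2770   # total CPU hours cited for the 512-bit factoring
minutes_256 = 1        # ~60 s laptop run cited for the 256-bit factoring

minutes_512 = cpu_hours_512 * 60
ratio_bits = math.log2(minutes_512 / minutes_256)

# Work ratio between the two runs, expressed in bits (powers of two).
print(minutes_512, round(ratio_bits, 2))
```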

In 1991, 330-bit RSA was factored within days; a later single Intel Core2Duo CPU could handle it in a little over an hour. 256-bit RSA would have taken less than that, so let's give it an optimistic 60 minutes and say that within ~30 years it has progressed down to ~1 minute, a grand reduction of 60 times. That's roughly 2^6 (64), i.e. 6 bits. As pointed out earlier, just considering time, not resources like increased RAM requirements, that's still way off from being a valid threat. Knock off 6 more bits in the next 30 years and you've managed, what, 1 second factoring of 256-bit RSA? Congrats, there's a long way to go to get to 2048-bit RSA in a reasonable time frame.

Even the 15 years of 512-bit RSA improving from 6 months to 4 hours works out to 24 hr * (6 months * 30 days) = 4320 hr, 4320 hr / 4 hr = 1080, and log2(1080) = ~10, so ~2^10. That is most certainly a better reduction, ~1k times, but still not an alarming rate in comparison. I think that's further supported by how progress has slowed for academics trying to factor larger RSA key sizes.
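
Both historical speedups can be put side by side in a few lines of Python. This is only a sketch of the estimates above: the 60 minute figure for 256-bit RSA in 1991 is the optimistic guess from the text, and months are taken as 30 days.

```python
import math

# 256-bit RSA: guessed ~60 minutes in 1991 vs ~1 minute in 2019.
speedup_256 = 60 / 1

# 512-bit RSA: ~6 months in 1999 vs ~4 hours in 2015.
hours_1999 = 24 * 6 * 30
hours_2015 = 4
speedup_512 = hours_1999 / hours_2015

print(round(math.log2(speedup_256), 2))               # bits gained, 256-bit
print(hours_1999, speedup_512, round(math.log2(speedup_512), 2))
```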

It's also pretty visible in the cado-nfs benchmarks for factoring RSA keys. RSA-120 takes 1.9h, RSA-130 takes 7.5h (and not 81d as expected), RSA-140 takes 23h (and not 227y) and RSA-155 takes 5.3d (and not 7452 millennia).

Where are these expected compute times sourced from? RSA-155 (aka 512-bit RSA) was factored in 6 months back in 1999. RSA-120 (397-bit RSA) and RSA-130 (430-bit RSA) likewise are likely only about 1-2 symmetric bits apart? So an increase of approx 4 times is to be expected...?

This is why entities like ANSSI or NIST base their recommendations not only on current state-of-the-art computing power and cryptographic knowledge, but also on the improvements expected to occur over the timeframe in which the key is used or remains meaningful.

You've not provided anything substantial that indicates 2048-bit RSA is anywhere near weak. Even in historical context, where notable improvements have been made, they don't look all that relevant against the exponential increase. You seem to be taking a somewhat linear view? I would hope you're aware of why 128-bit AES is considered secure (apparently it may be weak to a quantum computer one day using Grover's algorithm) and that 256-bit AES is said to require the entire energy of our solar system to be exhausted to crack by brute force.

Furthermore, take into account what sensitive data you're trying to protect without following best practices like PFS-only cipher suites, and whether that data would still be a problem if decrypted many decades from now (quite a bit of sensitive data isn't as important decades from now as it is in the present).

TL;DR

It pretty much comes down to this. Breaking 2048-bit, even decades from now, is still going to be ridiculously expensive, but let's be optimistic and say 1 million dollars and 10 years (or 1 if you like).

- Is this attacker interested in you specifically, right now, such that they're equipped to record your server's traffic for N users (where N doesn't matter beyond storage requirements, since you're insecurely using the same key for all encryption anyhow)?

- This traffic is going to be recorded for X years, where every 90 days your certificate is changing requiring a separate attack for each one (time and $$$$)? Or the attacker knows in advance when to record specific data they're interested in, even though it'll be several decades before they believe they can break the encryption?

- Is this somehow a better and cheaper strategy than hacking your server or your users' clients directly and compromising that system? (I mean, they've already put themselves into a MitM position to capture traffic and are dead set on accessing your secrets with adequate funding anyhow, right?) Would they not have the available resources to choose the more practical attack?

- Would it not be cheaper & easier to threaten or blackmail someone directly to get access to the RSA private key? Then the key length doesn't matter, if you were smart and used PFS only cipher suites, this strategy would be irrelevant.

Sooo basically...

Encourage PFS cipher suites (DHE / ECDHE) not minor improvements to bandaid bad security practices
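
As a rough illustration of what "PFS cipher suites" means in practice, here is a minimal Python sketch that classifies a suite by its name. It is only a naming heuristic: `offers_forward_secrecy` is a hypothetical helper, not part of any TLS library, and it assumes OpenSSL-style or IANA-style suite names.

```python
def offers_forward_secrecy(suite: str) -> bool:
    """Guess from a cipher suite name whether the key exchange is ephemeral.

    Heuristic only: legacy OpenSSL aliases such as EDH-* and anonymous
    ADH/AECDH suites are deliberately not counted as PFS here.
    """
    name = suite.upper().replace("-", "_")
    # TLS 1.3 suites (e.g. TLS_AES_128_GCM_SHA256) carry no key exchange in
    # the name because TLS 1.3 always uses an ephemeral (EC)DHE exchange.
    if name.startswith("TLS_") and "_WITH_" not in name:
        return True
    tokens = name.split("_")
    return "ECDHE" in tokens or "DHE" in tokens


for suite in (
    "ECDHE-RSA-AES128-GCM-SHA256",   # ephemeral ECDH, RSA only signs
    "TLS_RSA_WITH_AES_128_CBC_SHA",  # RSA key exchange, no PFS
    "AES128-SHA",                    # OpenSSL name for RSA key exchange
    "TLS_AES_256_GCM_SHA384",        # TLS 1.3
):
    print(suite, offers_forward_secrecy(suite))
```

With a PFS suite the RSA key only signs the handshake, which is why the cert's key length then matters for identity rather than for decrypting recorded traffic.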

So your advice to improve security here is to encourage using a larger key size instead of encouraging PFS only cipher suites? Why?

Because LE controls the default key size, not the httpd config. And LE has already taken action in the past (disabling HTTPS on HTTP-01, removal of TLS-SNI-01…) instead of enforcing httpd config.

Please elaborate further.

Sure. Simply look at the cado-nfs benchmarks. The time to crack is not the expected theoretical time.

The theoretical difficulty gap is not observed in practice because of two biases. We can only compare crack times for a given state of the art at the same point in time and expect the theoretical gap (and in practice that's not the case, see cado-nfs), but nothing ensures that X+1 bits is 2 times more difficult than X if we compare current knowledge & computing power with what we will have 1 month later.

See also the 2 examples I gave. There is only a 2 times gap between the 256-bit and 512-bit cracks between 2015 & 2019 given the same power & costs, where in theory there is a 10⁷⁷ times gap.

And we cannot be sure there won't be a trick tomorrow that breaks 3072-bit RSA while 4096 bits stays safe.

I've already linked to a GitHub project that can perform the 512-bit factoring on AWS. I don't know the exact number of symmetric-equivalent bits for 256-bit RSA, perhaps around 30 bits? (so roughly a 1,048,576 times difference from 512-bit RSA). The 512-bit paper you link to mentions 432 CPUs (12 instances with 36 vCPUs + 60GiB RAM each) and a total of 2770 CPU hours, with 1.5-2GB of memory or storage for the matrix (I'm not too familiar with this computation, if you are feel free to chime in).

Yeah, it seems to be a huge and impractical number… At the time of the first 512-bit break (1999), it was considered impractical because it required a supercomputer nobody had. But in fact, the corresponding AWS bill for such infrastructure in 2015 is… $100 for 4h, and it IS practical a few years later, far sooner than the billion of billions of billions gap expected in 1999.

https://arstechnica.com/information-technology/2015/10/breaking-512-bit-rsa-with-amazon-ec2-is-a-cinch-so-why-all-the-weak-keys/

There is only a 2 times gap between the 256-bit and 512-bit cracks between 2015 & 2019 given the same power & costs, where in theory there is a 10⁷⁷ times gap.

What... no. I've been very clear about this in my posts: the difference between 256-bit and 512-bit is not 2^256 (your base 10, 10^77), but more like 2^20 or less (in the comparison I did of your two cited factoring examples it was about 2^17).

I'm not sure how you're claiming a "2 times gap" either? Same power and costs? The 256-bit RSA was factored in roughly 1 minute, with the 512-bit RSA being equivalent to 166,200 minutes (math was cited in my earlier post, feel free to correct me if I made a mistake).

And we cannot be sure there won't be a trick tomorrow that breaks 3072-bit RSA while 4096 bits stays safe.

You're literally going to be fine with 2048-bit RSA; 3072-bit RSA has a "marginal" improvement in security. I've already explained this, you seem to have skipped it or misunderstood? The only way 2048-bit RSA becomes insecure in a much shorter span of time is if the rate of advancement is such that your 3072-bit RSA is going to be threatened too.

If you want to be safer, don't use RSA for key exchange. I have asked you to cite one website where RSA key exchange is required ((EC)DHE not supported/negotiated for whatever reason). Why have you not been able to answer this? You claimed it's such a common occurrence that it justifies upping the default RSA key length. Please back that statement up. You may find servers that offer RSA as a key exchange, but they'll likely have server cipher suite selection enabled, so it won't be negotiated by any client that can support PFS cipher suites.

But in fact, the corresponding AWS bill for such infrastructure in 2015 is… $100 for 4h, and it IS practical a few years later, far sooner than the billion of billions of billions gap expected in 1999.

The cost of the hardware is considerably higher than $100, not ridiculous amounts, but yes, the ability to easily rent computing resources like that has made it more accessible. Note the hardware used: these weren't cheap/small EC2 instances, they were fairly big machines when it comes to renting compute resources. We have had 768-bit RSA factored for over a decade now, yet you don't see FaaS being able to cheaply handle that? 2048-bit RSA is a long way off.

Do you have a source of anyone claiming 512-bit required over a billion to compute? Pretty sure the hardware resources in 1999 that factored it in a mere 6 months were a long way from even billions in cost. Where is this price coming from? From more recent statements about higher bits of security? That's due to the exponential increase in difficulty, which not only requires more computation time, but other resources like RAM. Those 512-bit RSA factoring AWS instances had 60GB of RAM, each (720GB total)...

Quick look at some processing insights:

RSA-250 (829-bit RSA), factored in 2020, was achieved within 3 months with tens of thousands of computers, using the CADO-NFS that you like to bring up. It seems that they've achieved the factoring in ~roughly the same amount of compute time~ (EDIT: my mistake, years, not hours) as the AWS factoring of 512-bit RSA in 2015 achieved (roughly 2700 CPU hours, normalized AFAIK to a referenced Intel Xeon Gold 6130 2.1GHz). RSA-240 (795-bit RSA), factored in Dec 2019, is interesting in that it was by the same team and on the same hardware that factored 768-bit RSA in 2010/2016 (also using CADO-NFS). They noted that algorithm improvements in the CADO-NFS software since then are what allowed for a ~3x performance gain.

Here's the paper on the 2010 factoring of 768-bit RSA, look at the RAM requirements:

The first stage was split up into eight independent jobs run in parallel on those clusters, with each of the eight sequences check-pointing once every 2^14 steps. Running a first (or third) stage sequence required 180 GB RAM, a single 64 bits wide b̄ took 1.5 gigabytes, and a single m_i matrix 8 kilobytes, of which 565 000 were kept, on average, per first stage sequence. Each partial sum during the third stage evaluation required 12 gigabytes.

Most of the time the available 896 GB RAM sufficed, but during a central part of the calculation more memory was needed (up to about 1 TB)

And let's contrast that with 512-bit RSA factoring (2009, not the AWS one in 2015):

By 2009, Benjamin Moody could factor an RSA-512 bit key in 73 days using only public software (GGNFS) and his desktop computer (a dual-core Athlon64 with a 1,900 MHz cpu). Just less than five gigabytes of disk storage was required and about 2.5 gigabytes of RAM for the sieving process.

That should give a rough scale of RAM requirements scaling as the key length is increased. I'm sure there are probably other bottlenecks to be aware of with the processing, especially when doing so in a distributed fashion. Feel free to look into that further and identify how much RAM would be required for attacking 2048-bit RSA.

Who are you actually trying to protect yourself from that is equipped to target you or your users traffic specifically to record it and perform an attack decades from now?

Not some script kiddie waiting on a $100 AWS solution. They'd have moved on to better things by then, and compromising your network to record traffic would cost them much more. Otherwise it's some malware where no one specific was targeted. Congratulations, your recorded data, segmented into 90 day periods of traffic per RSA key, is now in a sea of many others, thousands, millions perhaps. Even if a $100 AWS attack were possible, it would not be cheap to attack all that, especially when the data has unknown value; there would be a lot of junk to throw money away on.

No, if someone wants your secrets there's more affordable ways.

For some reason, you believe that the 4 billion times stronger security of 2048-bit over 1024-bit RSA is inadequate, but the 65k times more security of 3072-bit over 2048-bit RSA is adequate. Your argument is that the security theory of 2048-bit being computationally safe is invalid, but somehow that same logic doesn't apply to 3072-bit RSA and it's that much safer? Is a glass door safer because I add bigger and more secure locks?

TL;DR

Please go over the previous TL;DR, but I'll re-iterate..

- Someone wants to record your encrypted traffic using a weak 2048-bit RSA key.

- They're capable of performing this MitM attack, thus they have skills or money and it's probably a targeted attack.

- Your data must retain its value in the future, such that it's desirable to acquire now even though it may only be decrypted in 20+ years by your estimates.

- The attack needs to be justifiable for the cost to perform to obtain the value of the secret, it must be known which 90 day window to capture, otherwise the attack must be computed multiple times in hopes of acquiring said secret.

- This attack is somehow more effective than any other method to acquire said data in a quicker / cheaper means?

Who in their right mind is going to do that? That's only going to be practical on a high value target where no other means of getting that information are viable. Said target is somehow in possession of such valuable secrets but doesn't bother to pay a professional or consider best practices in securing them (they apparently can't set up a server with copy/paste of Mozilla's cipher suite advice).

This is not very convincing. This is Certbot, not LetsEncrypt. You're assuming a user with valuable secrets uses Certbot along with a server that supports only RSA key exchange cipher suites (no default config does this), and that 2048-bit RSA is so much weaker than it is? Whoever this user is, their security practices elsewhere are probably weak enough to compromise them directly. Why wait a supposed 20-30 years to decrypt data when you can perform an active attack and get all the juicy data in the present?

The correct solution is to set up cipher suites properly, and this project, Certbot, does exactly that if you're inexperienced in the area and want to rely on it taking care of that for you. But for some reason, that doesn't apply to this discussion?

I have asked you to cite one website where RSA key exchange is required ((EC)DHE not supported/negotiated for whatever reason)

https://cryptcheck.fr/https/bankofamerica.com (yep, you read it correctly)

https://cryptcheck.fr/https/caf.fr (state administration for France, equivalent of the US Supplemental Security Income)

https://cryptcheck.fr/https/xn--trkiye-3ya.gov.tr

https://cryptcheck.fr/https/pfisng.dsna-dti.aviation-civile.gouv.fr

https://cryptcheck.fr/https/reader.xsnews.nl

https://cryptcheck.fr/https/protecpo.inrs.fr

https://cryptcheck.fr/https/tiscali.it

I count 68 non-PFS domains on my CryptCheck v2 out of 7221 analysed handshakes (~0.9%) and 2091 out of 349961 analysed handshakes (~0.6%) on my v1.

https://www.bankofamerica.com/

Protocol: TLS 1.2

Key exchange: ECDHE_RSA

Key exchange group: P-256

Cipher: AES_128_GCM

CA: Entrust

https://cryptcheck.fr/https/bankofamerica.com is supposedly identifying additional server IPs for the same domain that don't provide the ECDHE key exchange?

Protocol: TLS 1.2

Key exchange: RSA

Cipher: AES_128_CBC with HMAC-SHA1

CA: Certigna

I have no clue what this website is about or what sensitive secrets it has to exploit?

Protocol: TLS 1.2

Key exchange: RSA

Cipher: AES_128_CBC with HMAC-SHA1

CA: GlobalSign

I was redirected to this from your weird looking domain name that I can't imagine anyone would visit directly and consider it legit/trustworthy of a name.

This, like many of the others that follow, is clearly a government website. They're often lagging behind and need to be accessible to a wide audience. RSA shouldn't be the default key exchange though. But again, what communications are happening here that you want to protect? The government themselves obviously don't seem to be too concerned.

https://pfisng.dsna-dti.aviation-civile.gouv.fr/jts/auth/authrequired

Protocol: TLS 1.2

Key exchange: RSA

Cipher: AES_128_GCM

CA: Certigna

Again, no clue what this is other than a government service with login. Is there any sensitive information beyond the login details? What are the concerns here regarding access to sensitive information 20-30 years from now? That I use the same username and password elsewhere and still continue to do so in 20-30 years time?

Wouldn't a phishing scam be just as effective if login details were desired (and the value of the data on accounts). Could that be achieved in a shorter time or smaller budget than attacking the RSA certificate?

Over one minute spent trying to load/resolve the URL, I could not access this website.

https://protecpo.inrs.fr/ProtecPo/jsp/Accueil.jsp

Protocol: TLS 1.2

Key exchange: RSA

Cipher: AES_128_GCM

CA: GEANT

This appears to be a website for some software you can use to help make a purchasing decision. What sensitive information is at risk here?

Protocol: TLS 1.2

Key exchange: RSA

Cipher: AES_256_CBC with HMAC-SHA1

CA: Thawte

A news site? Again what sensitive information is of concern with interacting with this website?

Summary

There's nothing in any of these results that indicates a need to protect sensitive communications beyond the estimated 20-30 years until 2048-bit RSA is compromised. These websites would still require an effective MitM attack to capture that traffic between the client and server, which isn't likely a valid concern for any of them?

None of these use LetsEncrypt issued certificates. Making a change for Certbot is only beneficial if you know for sure those websites are using Certbot specifically as an ACME client to those CAs (assuming they have third-party ACME support).

Thank you for actually pointing out some websites where RSA is the negotiated key exchange (barring Bank of America which seems to be incorrect and has only been validated as cipher suite to public facing website, not any actual sensitive exchanges).

I count 68 non-PFS domains on my CryptCheck v2 out of 7221 analysed handshakes (~0.9%) and 2091 out of 349961 analysed handshakes (~0.6%) on my v1.

Ok, so your "not rare" is less than 1% of 7,221 and 349,961 websites you've scanned with CryptCheck? Now consider the audience and actual measurable value offered by using 3072-bit RSA instead to secure those communications, is it actually securing anything worthwhile and would it be anymore secure against cheaper alternatives to compromise security? (as in, was 2048-bit weaker/cheaper than any other alternative targeted attack, where 3072-bit raises the lower bound of attack)

How many of the Alexa top 1 million sites are negotiating RSA key exchange? How many of those found use LetsEncrypt as the CA?

I calculated symmetric bits for 512-bit and 256-bit RSA (based on equation from NIST), and we get 40-bits (256-bit RSA), and 57-bits (512-bit RSA).

Interestingly, that matches the 17-bit difference cited earlier when comparing the two compute wise with recent advancements (it appears the NIST document I referenced was last updated in 2020).

It also reveals that the distance from 512-bit to 1024-bit RSA is only 23 bits of equivalent symmetric key strength, a factor of 2^23 = 8,388,608. And we can see that current progress for 768-bit and 795-bit RSA or the current record of 829-bit RSA is:

768 == 69.74588053597235

795 == 70.91595938496408

829 == 72.35600867501326
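
These figures can be reproduced with a few lines of Python. This is a sketch that assumes the equation meant is the GNFS-based strength estimate from NIST's FIPS 140-2 Implementation Guidance (section 7.5); `rsa_symmetric_bits` is my own name for it.

```python
import math


def rsa_symmetric_bits(modulus_bits: int) -> float:
    """Estimated symmetric-equivalent strength of an RSA modulus, in bits.

    Assumed formula (FIPS 140-2 IG 7.5), with x = L * ln(2):
        (1.923 * cbrt(x * ln(x)^2) - 4.69) / ln(2)
    """
    x = modulus_bits * math.log(2)
    return (1.923 * (x * math.log(x) ** 2) ** (1 / 3) - 4.69) / math.log(2)


for bits in (256, 512, 768, 795, 829, 1024, 2048, 3072):
    print(bits, "==", rsa_symmetric_bits(bits))
```

The estimate comes out slightly below the rounded 112 and 128 bits usually quoted for 2048-bit and 3072-bit RSA, so differences between key sizes computed this way are approximate.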

- 768-bit RSA record since 2010 with 829-bit RSA as record in 2020, less than 3-bits improvement over the span of a decade. 2000 vs 2700 CPU years (single-core). 795-bit RSA was down to 900 CPU years in Nov 2019.

- 57-bit (512-bit RSA) to 72-bit (829-bit RSA) with ~2700 compute hours~ (EDIT: hours vs years, whoops) between 2015 and 2020. ~15-bits (32,768) improvement~? (829-bit RSA took 3 months and tens of thousands of machines by comparison). The 768-bit (Dec 2009) & 795-bit (Nov 2019) RSA factorings highlighted being about 3x faster due to software/algorithm improvements alone.

- 2^6 (64) estimate for the 256-bit RSA improvement since 1991.

- ~2^10 (1024) for the 1080 x difference with 512-bit over 16 years (1999 - 2015) 6 months vs 4 hours, unclear how accurate of a comparison it is, 8,000 MIPS years vs 2770 years of the Intel CPU usually referenced. Main diff here was probably due to access to rent hardware cheaply with cloud compute to bring time down, while leveraging 15 years of HW improvements and algorithm improvements to the software since.

I've just realized I made a mistake earlier when citing the 829-bit RSA factoring and comparing it to the AWS 512-bit RSA factoring time. They both cite ~2700 units of compute time, but I tripped up: for 829-bit RSA the unit is years, and for 512-bit RSA it's hours. That is a difference of about 8,760 times (the number of hours in a year, roughly 2^13). Scaled to the 4 hour AWS run, I think that's 4 years ((4hrs * 8760) / 24hrs / 365days), which would be close to a million dollars of compute time on AWS? (ignoring the fact you probably need quite a bit more RAM)

256-bit RSA at 1 minute of compute time vs 829-bit RSA at 2700 years of compute time: log2(525600 * 2700), where 525600 is the number of minutes in a year, is about 30.4, so roughly 2^30. Again, similar to the 512-bit RSA compute difficulty, this is pretty close to the assumed 32-bit (2^32) difference in strength. We might be able to factor the RSA keys faster, but the compute difficulty seems to be roughly maintained?
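
The unit conversions in the last two paragraphs, as a minimal Python check. It only restates the quoted figures (~2700 CPU years for 829-bit, ~2700 CPU hours for 512-bit, ~1 minute for 256-bit) and is not a model of real factoring cost.

```python
import math

HOURS_PER_YEAR = 365 * 24          # 8,760
MINUTES_PER_YEAR = HOURS_PER_YEAR * 60  # 525,600

# 829-bit (2700 CPU years) vs 512-bit (2700 CPU hours): ratio in bits.
bits_829_vs_512 = math.log2(HOURS_PER_YEAR)

# 829-bit (2700 CPU years) vs 256-bit (1 minute): ratio in bits.
bits_829_vs_256 = math.log2(2700 * MINUTES_PER_YEAR)

# The 4 hour AWS run scaled by 8,760, expressed in years of wall-clock time.
scaled_aws_years = 4 * HOURS_PER_YEAR / 24 / 365

print(round(bits_829_vs_512, 1), round(bits_829_vs_256, 1), scaled_aws_years)
```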

Not the greatest information, but enough that we get a rough idea of the rate of progress and improvements.

- The difficulty to compute is roughly accurate and consistent over decades?

- Rate of improved computation to perform factoring faster has improved nicely, but not by a significant amount?

1024-bit is still going to remain out of reach for some time, and 2048-bit even more so based on the historical progress covered above. There's little evidence that 3072-bit RSA, with its additional factor of 2^16 (65k) to 2^22 (4 million) in difficulty, will amount to much more of a security advantage than the 2^30 (1 billion) to 2^32 (4 billion) that 2048-bit has over 1024-bit RSA. (not that it doesn't add notable additional difficulty, but that's based on the assumption that 2048-bit RSA itself won't hold up sufficiently when it should)

Consider the current 829-bit RSA effort, and that there's roughly a 38-bit difference to the security level of 2048-bit RSA. If we take the constant computing power that factored 829-bit RSA in 3 months (4 three month durations in a year), we get 68,719,476,736 years (2^38 / 4). Even if we had advancements to get that down to 1 billion times less work (2^30), it's still about 64 years, and that's with tens of thousands of machines.
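
That extrapolation in a few lines of Python, as a sketch under the same assumptions (a 38-bit gap between 829-bit and 2048-bit RSA, and the 829-bit record taking 3 months on the same hardware):

```python
extra_bits = 38      # assumed gap between 829-bit and 2048-bit RSA
runs_per_year = 4    # one 829-bit factoring took about 3 months

# Years to factor 2048-bit RSA on the same hardware, with no improvements.
years_constant_power = 2 ** extra_bits // runs_per_year

# Years if future advances cut the work by a factor of 2^30 (~1 billion).
years_with_speedup = years_constant_power / 2 ** 30

print(f"{years_constant_power:,}", years_with_speedup)
```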

If you still think 3072-bit as a minimum is worthwhile despite my (possibly bad?) math, cost-benefit analysis, and common sense reasoning... then I don't think I have anything else I can contribute to convince you otherwise.

Just remember that you're advocating for a change with Certbot, not Let's Encrypt enforcing a minimum to issue. Certbot already helps users by configuring proper cipher suites which avoid the whole problem anyway, so who's really going to benefit here? Meanwhile, everyone else using Certbot who doesn't have RSA as the key exchange would be adding more load and bandwidth on their servers (even if minor for most).

Issue should be closed.

You already contributed that information 4 years ago in this thread. It doesn't appear that you understand the context of the advice from that website and how it applies here.

If you'd feel more comfortable with 3072-bit RSA or larger key lengths for your certs, explicitly opt in to them, as you already can. There is no justified reason, when caring about pragmatic security, to adopt 3072-bit as a new default. 2048-bit RSA is sufficient.

You already contributed that information 4 years ago in this thread. It doesn't appear that you understand the context of the advice from that website and how it applies here.

You don't understand it any better. ANSSI's advice states, for example:

As part of the development of teleservices and electronic exchanges between the administration and users, the administrative authorities must guarantee the security of their information systems in charge of the implementation of these services.

The General Security Reference is specifically imposed on the information systems implemented by the administrative authorities in their relations with one another and in their relations with users (these are teleservices such as the payment of fines to the Administration).

Indirectly, the General Security Reference is intended for all service providers who assist administrative authorities in securing the electronic exchanges they implement, as well as for manufacturers whose activity is to offer security products.

In general, for any other organization wishing to organize the management of the security of its information systems and electronic exchanges, the General Security Reference is presented as a guide of good practices in accordance with the state of the art.

So it is applicable in France for pretty much ANY PURPOSE AND ANY MEANS, and is not at all restricted to sensitive contexts such as military use.

And one of its recommendations is "It is recommended to use moduli of at least 3072 bits, even for use that will not extend beyond 2030". And 3072 bits is no longer just a recommendation but mandatory if usage/impact is expected after 2030.

Even the CNIL, the French authority for privacy and good practice, uses the ANSSI paper in its standard recommendations for any website: https://www.cnil.fr/fr/securite-securiser-les-sites-web

ANSSI has published on its site specific recommendations for implementing TLS or for securing a website.

(It is also in opposition with Let's Encrypt, because it states that you must authorize only incoming IP network flows on this machine on port 443 and block all the other ports. but this)

The NIST advice is not tied to the sensitivity of the information conveyed either; it is general advice applicable in nearly every case where you have to manage keys or certs.

It's not the first time LE uses a default configuration in opposition to state-of-the-art advice from government agencies or others…

Oh, and the BSI reviewed its advice this year. It states that:

Important Note: It is reasonable to use a key length of 3000 bits for RSA, DH, and DSS, in order to achieve a homogenous security level for all asymmetric mechanisms. From 2023, a key length of at least 3000 bits is required for cryptographic implementations that shall comply with this Technical Guideline.

@aeris even if France were imposing 3072-bit RSA as mandatory, that is not law elsewhere. Do note that advice and recommendations do not equate to a mandatory requirement either.

I would be surprised if they also suggest using RSA key exchange in cipher suites as best practice for security/privacy. If you want to handle security and privacy correctly, adopt PFS-only cipher suites. Your RSA certificate will be irrelevant from that point onwards, as it then only plays a role in authentication.

Anyway, I feel I am repeating myself here and I'm not interested in an endless back and forth. If you have to comply with certain security authorities/advisories, then do so by being explicit about it. I've already detailed rather well that 2048-bit is secure and shall remain so for the foreseeable future. If it were not to be, 3072-bit RSA isn't any more likely to be secure either.

As you're rather passionate about this topic, please try to grasp what I've relayed to you instead of reciting NIST / ANSSI / BSI / etc. It's in their best interest to advise a bit higher than needed, similar to how AES-128 was introduced as the lowest/weakest security level for AES but is considerably secure in its own right.

All you're gaining from 3072-bit is a false sense of "more secure". 2048-bit is more than enough, and if it were not, there's no reason to think 3072-bit is going to be any more secure, since its additional 16 bits of security aren't going to keep up with such advances. Do the smart thing and don't keep using RSA as a key exchange... if you only rely on a larger RSA key length as your sense of being secure instead of applying security practices elsewhere, you're fooling yourself.

If you want to continue arguing for the sake of compliance rather than actual security benefit, I'll leave you to it. Personally, I don't think it's a worthwhile change to default to, and for the majority of Certbot users it is likely unwanted due to the drawbacks and no pragmatic gain in actual security.

It's not the first time LE uses a default configuration in opposition to state-of-the-art advice from government agencies or others…

Probably because they know better, despite what you might think. Even with all the information presented to you earlier, you don't seem interested or open to it at all.

While it probably won't convince you any further, this is what 128-bit symmetric strength (2^128 operations) requires to attack successfully:

The current total world energy consumption is around 500 EJ (5×10^20 J) per year (or so says this article). Assuming that the total energy production of the Earth is diverted to a single computation for ten years, we get a limit of 5×10^36, which is close to 2^122.

This would lead to a grand total of 2^138 in year 2040 -- and this is for a single ten-year-long computation which mobilizes all the resources of the entire planet.

To sum up: even if you use all the dollars in the World (including the dollars which do not exist, such as accumulated debts) and fry the whole planet in the process, you can barely do 1/1000th of an exhaustive key search on 128-bit keys.

Cracking 256-bit symmetric encryption keys, btw, would require more energy than is available within our solar system:

The laws of the physical universe mean that even keys of these modest sizes will never be brute forced. That is not to say they can never be broken. Flaws can be discovered in algorithms

there is nothing stronger than "cannot break it" so comparing strengths beyond 100 bits or so is kinda meaningless anyway. - Source

Now, all those quotes focus on 128-bit strength, which is what 3072-bit RSA offers, but the 110-112 bits of 2048-bit RSA are still quite considerable. However, it is much cheaper to just take the XKCD advised route :)

Looks like the arguments have shifted from security to compliance.

Looks like the arguments have shifted from security to compliance.

Or not. This is just state-of-the-art advice from well-known entities in the security ecosystem about what to use by default to avoid shooting yourself in the foot, as we have seen regularly in the TLS/X.509 world for at least a decade.
