Kubernetes: Better support for sidecar containers in batch jobs

Consider a Job with two containers in it -- one which does the work and then terminates, and another which isn't designed to ever explicitly exit but provides some sort of supporting functionality like log or metric collection.

What options exist for doing something like this? What options should exist?

Currently the Job will keep running as long as the second container keeps running, which means that the user has to modify the second container in some way to detect when the first one is done so that it can cleanly exit as well.

This question was asked on Stack Overflow a while ago with no better answer than to modify the second container to be more Kubernetes-aware, which isn't ideal. Another customer has recently brought this up to me as a pain point for them.

@kubernetes/goog-control-plane @erictune

All 116 comments

/sub

Also, using a liveness probe as suggested here http://stackoverflow.com/questions/36208211/sidecar-containers-in-kubernetes-jobs doesn't work, since the pod will be considered failed and the overall job will not be considered successful.

How about we declare a job success probe, so that the Job can probe it to detect success instead of waiting for the pod to return 0?

Once the probe returns success, then the pod can be terminated.

Can a probe run against a container that has already exited, or would there be a race where it is being torn down?

Another option is to designate certain exit codes as having special meaning.

Both "success for the entire pod" and "failure for the entire pod" are useful.

This would need to be on the Pod object, so that is a big API change.

On Thu, Sep 22, 2016 at 1:41 PM, Ming Fang wrote:

> How about we declared a job success probe so that the Job can probe it to detect success instead of waiting for the pod to return 0. Once the probe returns success, then the pod can be terminated.


@erictune Good point; we can't probe an exited container.

Can we designate a particular container in the pod as the "completion" container so that when that container exits we can say the job is completed?

The sidecar containers tend to be long-lived, for things like log shipping and monitoring.

We can force terminate them once the job is completed.

> Can we designate a particular container in the pod as the "completion" container so that when that container exits we can say the job is completed?

Have you looked at point 3 of this doc, described in detail here, where you basically don't set .spec.completions and as soon as the first container finishes with exit code 0 the job is done?

> The sidecar containers tend to be long-lived, for things like log shipping and monitoring.
> We can force terminate them once the job is completed.

Personally, these look to me more like a ReplicaSet than a Job, but that's my personal opinion and, most importantly, I don't know the full details of your setup.

Generally, there are following discussions https://github.com/kubernetes/kubernetes/issues/17244 and https://github.com/kubernetes/kubernetes/issues/30243 that are touching this topic as well.

@soltysh the link you sent above, point 3 references pod completion and not container completion.

The two containers can share an emptyDir, and the first container can write an "I'm exiting now" message to a file and the other can exit when it sees that message.

@erictune I have a use case which I think falls in this bucket and I am hoping you could guide me in the right direction since there doesn't seem to be any official recommended way to solve this problem.

I am using the client-go library to code everything below:

So, I have a job that basically runs a tool in a one container pod. As soon as the tool finishes running, it is supposed to produce a results file. I can't seem to capture this results file because as soon as the tool finishes running, the pod deletes and I lose the results file.

I was able to capture this results file if I used HostPath as a VolumeSource and since I am running minikube locally, the results file gets saved onto my workstation.

But, I understand that's not recommended and ideal for production containers. So, I used EmptyDir as suggested above. But, again, if I do that, I can't really capture it because it gets deleted with the pod itself.

So, should I be solving my problem using the sidecar container pattern as well?

Basically, do what you suggested above. Start 2 containers in the pod whenever the job starts. 1 container runs the job and as soon as the job gets done, drops a message that gets picked up by the other container which then grabs the result file and stores it somewhere?

I fail to understand why we would need 2 containers in the first place. Why can't the job container do all this by itself? That is, finish the job, save the results file somewhere, access it/read it and store it somewhere.

@anshumanbh I'd suggest you:

1. use persistent storage, where you save the result file
2. use a hostPath mount, which is almost the same as 1, and you've already tried it
3. upload the result file to a known remote location (s3, google drive, dropbox), generally any kind of shared drive

@soltysh I don't want the file to be stored permanently. On every run, I just want to compare that result with the last result. So, the way I was thinking of doing this was committing to a github repository on every run and then doing a diff to see what changed. So, in order to do that, I just need to store the result temporarily somewhere so that I can access it to send it to Github. Make sense?

@anshumanbh perfectly clear, and still that doesn't fall into the category of side-car container. All you want to achieve is currently doable with what jobs provide.

@soltysh so considering I want to go for option 3 from the list you suggested above, how would I go about implementing it?

The problem I am facing is that as soon as the job finishes, the container exits and I lose the file. If I don't have the file, how do I upload it to shared drive like S3/Google Drive/Dropbox? I can't modify the job's code to automatically upload it somewhere before it quits so unfortunately I would have to first run the job and then save the file somewhere..

If you can't modify job's code, you need to wrap it in such a way to be able to upload the file. If what you're working with is an image already just extend it with the copying code.

@soltysh yes, that makes sense. I could do that. However, the next question I have is - suppose I need to run multiple jobs (think about it as running different tools) and none of these tools have the uploading part built in them. So, now, I would have to build that wrapper and extend each one of those tools with the uploading part. Is there a way I can just write the wrapper/extension once and use it for all the tools?

Wouldn't the side car pattern fit in that case?

Yeah, it could. Although I'd try the multiple-containers-in-the-same-pod pattern, i.e. your pod runs the job container and, alongside it, an additional one that waits for the output and uploads it. I'm not sure how feasible this is, but you can give it a try already.

Gentle ping -- sidecar awareness would make management of microservice proxies such as Envoy much more pleasant. Is there any progress to share?

The current state of things is that each container needs bundled tooling to coordinate lifetimes, which means we can't directly use upstream container images. It also significantly complicates the templates, as we have to inject extra argv and mount points.

An earlier suggestion was to designate some containers as a "completion" container. I would like to propose the opposite -- the ability to designate some containers as "sidecars". When the last non-sidecar container in a Pod terminates, the Pod should send TERM to the sidecars. This would be analogous to the "background thread" concept found in many threading libraries, e.g. Python's Thread.daemon.

Example config, when container main ends the kubelet would kill envoy:

    containers:
      - name: main
        image: gcr.io/some/image:latest
        command: ["/my-batch-job/bin/main", "--config=/config/my-job-config.yaml"]
      - name: envoy
        image: lyft/envoy:latest
        sidecar: true
        command: ["/usr/local/bin/envoy", "--config-path=/my-batch-job/etc/envoy.json"]

For reference, here's the bash madness I'm using to simulate desired sidecar behavior:

    containers:
      - name: main
        image: gcr.io/some/image:latest
        command: ["/bin/bash", "-c"]
        args:
          - |
            trap "touch /tmp/pod/main-terminated" EXIT
            /my-batch-job/bin/main --config=/config/my-job-config.yaml
        volumeMounts:
          - mountPath: /tmp/pod
            name: tmp-pod
      - name: envoy
        image: gcr.io/our-envoy-plus-bash-image:latest
        command: ["/bin/bash", "-c"]
        args:
          - |
            /usr/local/bin/envoy --config-path=/my-batch-job/etc/envoy.json &
            CHILD_PID=$!
            (while true; do if [[ -f "/tmp/pod/main-terminated" ]]; then kill $CHILD_PID; fi; sleep 1; done) &
            wait $CHILD_PID
            if [[ -f "/tmp/pod/main-terminated" ]]; then exit 0; fi
        volumeMounts:
          - mountPath: /tmp/pod
            name: tmp-pod
            readOnly: true
    volumes:
      - name: tmp-pod
        emptyDir: {}
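To make the proposed semantics concrete, here is a rough Python sketch of the decision the kubelet would have to make under this proposal. It is only an illustration: `ContainerState`, its fields, and `sidecars_to_terminate` are hypothetical names, and treating a pod with no non-sidecar containers as "nothing to do" is an assumption, not part of the proposal.

```python
from dataclasses import dataclass


@dataclass
class ContainerState:
    name: str
    sidecar: bool   # hypothetical flag, mirroring the proposed `sidecar: true`
    running: bool


def sidecars_to_terminate(containers):
    """Return names of running sidecars that should receive TERM.

    Sidecars are signalled only once every non-sidecar container has
    stopped. A pod consisting solely of sidecars is left alone (assumption).
    """
    main = [c for c in containers if not c.sidecar]
    if not main or any(c.running for c in main):
        return []
    return [c.name for c in containers if c.sidecar and c.running]
```

With the example config above, `main` exiting while `envoy` is still running would yield `["envoy"]`; while `main` is running the result is an empty list.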

> I would like to propose the opposite -- the ability to designate some containers as "sidecars". When the last non-sidecar container in a Pod terminates, the Pod should send TERM to the sidecars.

@jmillikin-stripe I like this idea, although I'm not sure if this follows the principle of treating some containers differently in a Pod or introducing dependencies between them. I'll defer to @erictune for the final call.

Although, have you checked #17244? Would this type of solution fit your use case? This is what @erictune mentioned a few comments before:

> Another option is to designate certain exit codes as having special meaning.

> @jmillikin-stripe I like this idea, although I'm not sure if this follows the principle of treating some containers differently in a Pod or introducing dependencies between them. I'll defer to @erictune for the final call.

I think Kubernetes may need to be flexible about the principle of not treating containers differently. We (Stripe) don't want to retrofit third-party code such as Envoy to have Lamprey-style lifecycle hooks, and trying to adopt an Envelope-style exec inversion would be much more complex than letting Kubelet terminate specific sidecars.

> Although, have you checked #17244, would this type of solution fit your use-case? This is what @erictune mentioned a few comments before:
>
> > Another option is to designate certain exit codes as having special meaning.

I'm very strongly opposed to Kubernetes or Kubelet interpreting error codes at a finer granularity than "zero or non-zero". Borglet's use of exit code magic numbers was an unpleasant misfeature, and it would be much worse in Kubernetes where a particular container image could be either a "main" or "sidecar" in different Pods.

Maybe additional lifecycle hooks would be sufficient to solve this?

Could be:

- PostStop: with a means to trigger lifecycle events on other containers in the pod (I.e trigger stop)

- PeerStopped: signal that a "peer" container in the pod has died - possibly with exit code as an argument

This could also define a means to define custom policies to restart a container - or even start containers that are not started by default to allow some daisy chaining of containers (when container a finishes then start container b)

Also missing this. We run a job every 30 min that needs a VPN client for connectivity but there seem to be a lot of use cases where this could be very useful (for example stuff that needs kubectl proxy). Currently, I am using jobSpec.concurrencyPolicy: Replace as a workaround but of course this only works if a.) you can live without parallel job runs and b.) Job execution time is shorter than scheduling interval.

EDIT: in my usecase, it would be completely sufficient to have some property in the job spec to mark a container as the terminating one and have the job monitor that one for exit status and kill the remaining ones.

I too, have a need for this. In our case it's a job that makes use of the cloudsql-proxy container as a sidecar service.

What about possibly adding an annotation that maps to the name of the 'primary' container in the pod? That way the pod spec does not need to be modified in any way.

By nature of how Pods are designed this seems like a very common use-case. @soltysh @erictune any plans to work on this soon? Glad to help where possible :)

Also need this feature. For our use case:

Pod A has two containers:

- container A1: a run-to-completion container which prints logs to files

- container A2: a sidecar container, which just tails the logs from file to stdout

What I want: when container A1 completes successfully, pod A completes successfully. Can we just label container A1 as the main container, so that when the main container exits, the pod exits? @erictune (This idea is also described by @mingfang.)

Hey Guys, I see this issue has been open for a month. What is the latest on this? We have a use-case, where we want to run a job. The job runs a main container with a few side-car containers. We want the job to exit when the main container exits. Is the state of art to share a file to send a signal between the containers?

I wouldn't mind starting some work on this, would like to know if anyone could review upcoming PRs if I do (maybe after kubecon).

cc @erictune @a-robinson @soltysh

@andrewsykim what approach would you take? Also, I know I am adding it here, but what would it take to add support for dependencies? For example, the main container should not start until the sidecars have initialized.

> Like the main container should not start until the sidecars have initialized

I think this case is not a problem, since main should be able to check when the sidecar is initialized (or use a readiness probe). That isn't the case for this issue, because main would have exited :)

I ended up writing a simple script that watches the kubernetes API and terminates jobs with a matching annotation and whose main container has exited. It's not perfect but it addresses core need. I can share it if people are interested.

@ajbouh I'd personally appreciate if you shared that as a gist. I was about to write something similar

@nrmitchi Here's a gist of the yaml I wrote. It's very shell scripty, but perhaps it's a good starting point for you, in terms of which APIs to use and how to get something that works. I can answer questions about what it's doing if you have any questions.

https://gist.github.com/ajbouh/79b3eb4833aa7b068de640c19060d126
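For readers who want the core check without the shell scripting, here is a small Python sketch of the decision such a watcher has to make. It is not taken from the gist: the annotation key is a made-up placeholder, and the function only inspects a Pod object already fetched as a dict (with the usual `metadata.annotations` and `status.containerStatuses` fields); watching the API and deleting or terminating the pod is left out.

```python
# Hypothetical annotation key naming the "main" container of the pod.
MAIN_ANNOTATION = "example.com/main-container"


def main_container_exited(pod):
    """Return True if the annotated main container has terminated.

    `pod` is a Pod object as a plain dict, e.g. parsed from the JSON
    returned by the API server.
    """
    annotations = pod.get("metadata", {}).get("annotations") or {}
    main_name = annotations.get(MAIN_ANNOTATION)
    if main_name is None:
        return False  # pod did not opt in
    statuses = pod.get("status", {}).get("containerStatuses") or []
    for status in statuses:
        if status.get("name") == main_name:
            return "terminated" in (status.get("state") or {})
    return False
```

A watcher would call this for each pod event and act only when it returns True, so pods without the annotation are never touched.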

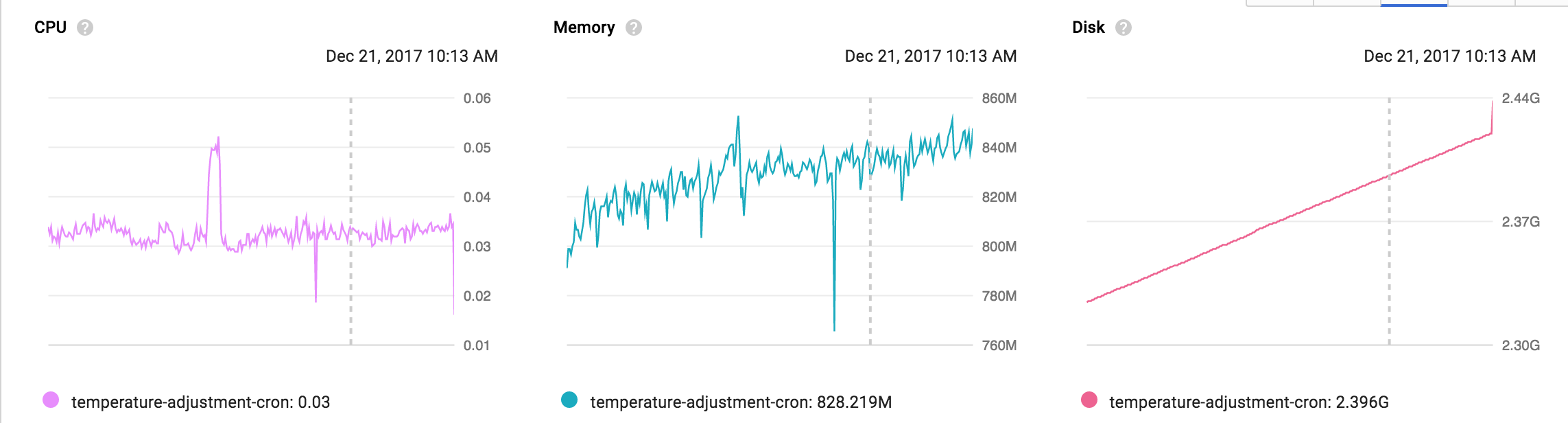

I have the same Cloud SQL proxy use case as @mrbobbytables. In order to securely connect to cloud SQL it is recommended to use the proxy, but that proxy isn't terminating when the job is done, which results in crazy hacks or monitoring that looks like the following. Is there any path forward on this?

@amaxwell01 Regarding Cloud SQL Proxy's involvement in this, I had opened an issue with Google that you could star or watch for updates: https://issuetracker.google.com/issues/70746902 (edit: and I do regret some phrasing I used there in the heat of the moment; unfortunately I can't edit it)

Thanks @abevoelker I'm following your post there. Plus your comments made me laugh 👍

We are also impacted by this issue.

We have several django management commands on our micro-services which can run on k8s cronjobs but fail to succeed because of the cloudsqlproxy sidecar which does not get stopped on job completion.

Any update on when we could have a solution ?

The sidecar container pattern is used more and more and a lot of us won't be able to use k8s cronjobs and jobs until this gets resolved.

Just wanted to throw in my +1 for this. I've got the same GCE Cloud SQL Proxy issue as everyone else. It's killing me... helm deploy fails which in turn fails my terraform apply.

Would really like to see some kind of resolution on this... gist from @ajbouh looks like it could work... but jeez, that's hacky.

For anybody else requiring cloudsql-proxy, would it fit your use-case to run the cloudsql-proxy as a DaemonSet? In my case I had both a persistent Deployment and a CronJob requiring the proxy, so it made sense to detach it from individual pods and instead attach one instance per node.

Yes, we have decided to remove the cloudsql proxy sidecars and built a pool of cloudsql proxies in their central namespace; it works perfectly and allows more scalability and easier deployments.

Now we can run jobs and cronjobs without any issue.

On Wed, Feb 7, 2018 at 9:37 AM, Rob Jackson wrote:

> For anybody else requiring cloudsql-proxy, would it fit your use-case to run the cloudsql-proxy as a DaemonSet? In my case I had both a persistent Deployment and a CronJob requiring the proxy, so it made sense to detach it from individual pods and instead attach one instance per node.


Interesting, using a DaemonSet sounds like a good option. @RJacksonm1 & @devlounge - how does discovery of the cloud sql proxy work when using DaemonSets?

Found this which looks like it'll do the trick...

https://buoyant.io/2016/10/14/a-service-mesh-for-kubernetes-part-ii-pods-are-great-until-theyre-not/

Basically involves using something like this to get the host ip:

    env:
      - name: NODE_NAME
        valueFrom:
          fieldRef:
            fieldPath: spec.nodeName

@RJacksonm1 - Was there anything special you did to get hostPort working? I'm constantly getting connection refused when using it in conjunction with the fieldPath: spec.nodeName approach 🤔

Edit: I have made sure spec.nodeName is getting through correctly and I'm on GKE v1.9.2-gke.1

@cvallance I've got a Service set up to expose the DaemonSet, which my application can then access via DNS. This doesn't guarantee the application will talk to the cloudsql-proxy instance running on the same host as itself, but it does guarantee the cloudsql-proxy will scale with the cluster as a whole (originally I had the proxy as a Deployment and a HorizontalPodAutoscaler, but found it scaling up/down too much – causing MySQL has gone away errors in the app). I guess this isn't in the true spirit of a DaemonSet... 🤔

@RJacksonm1 - got it working with hostPort and spec.nodeName ... now they will connect directly to the DaemonSet on their node 😄

CloudSql Proxy command not working:

-instances={{ .Values.sqlConnectionName }}=tcp:{{ .Values.internalPort }}

Working:

-instances={{ .Values.sqlConnectionName }}=tcp:0.0.0.0:{{ .Values.internalPort }}

🤦♂️

Is there anything we can do to get some traction on this issue?

It's been open for almost 2 years and still we only have workarounds

I suspect even if I volunteer myself to implement this I won't be able to since it needs some approval from the internal guys regarding which solution to implement, API changes, etc.

Anything I can do to help get this done?

For reference I made a cloud-sql-proxy sidecar version of @jmillikin-stripe's workaround where a file in a shared volume communicates the state to the sidecar.

It works OK, but is by far the nastiest hack in my K8s configuration :(

    apiVersion: batch/v1
    kind: Job
    metadata:
      name: example-job
    spec:
      template:
        spec:
          containers:
            - name: example-job
              image: eu.gcr.io/example/example-job:latest
              command: ["/bin/sh", "-c"]
              args:
                - |
                  trap "touch /tmp/pod/main-terminated" EXIT
                  run-job.sh
              volumeMounts:
                - mountPath: /tmp/pod
                  name: tmp-pod
            - name: cloudsql-proxy
              image: gcr.io/cloudsql-docker/gce-proxy:1.11
              command: ["/bin/sh", "-c"]
              args:
                - |
                  /cloud_sql_proxy --dir=/cloudsql -instances=example:europe-west3:example=tcp:3306 -credential_file=/secrets/cloudsql/credentials.json &
                  CHILD_PID=$!
                  (while true; do if [[ -f "/tmp/pod/main-terminated" ]]; then kill $CHILD_PID; echo "Killed $CHILD_PID as the main container terminated."; fi; sleep 1; done) &
                  wait $CHILD_PID
                  if [[ -f "/tmp/pod/main-terminated" ]]; then echo "Job completed. Exiting..."; exit 0; fi
              volumeMounts:
                - name: cloudsql-instance-credentials
                  mountPath: /secrets/cloudsql
                  readOnly: true
                - name: cloudsql
                  mountPath: /cloudsql
                - mountPath: /tmp/pod
                  name: tmp-pod
                  readOnly: true
          restartPolicy: Never
          volumes:
            - name: cloudsql-instance-credentials
              secret:
                secretName: cloudsql-instance-credentials
            - name: cloudsql
              emptyDir:
            - name: tmp-pod
              emptyDir: {}
      backoffLimit: 1

Can anyone internal to the project please comment on the progress of this issue?

Same issue here

cc @kubernetes/sig-apps-feature-requests @kubernetes/sig-node-feature-requests

Would it make sense to allow users to specify (by name) the containers in a Job that they wish to see complete successfully in order to mark the job pod as done (with other containers stopped), like so:

    apiVersion: batch/v2beta1
    kind: Job
    metadata:
      name: my-job
      namespace: app
    spec:
      template:
        spec:
          containers:
            - name: my-container
              image: my-job-image
              ...
            - name: cloudsql-proxy
              image: gcr.io/cloudsql-docker/gce-proxy:1.11
              ...
      backoffLimit: 2
      jobCompletedWith:
        - my-container

I.e. the pod would run, wait until my-container exits successfully, and then just terminate cloudsql-proxy.

EDIT: Scrolling up this thread, I now see this has been proposed previously. Can @erictune or someone else maybe re-elaborate on why this would not work?

yes i think that would be perfect. Just something that allows you to watch the status of the job, and continue the pipeline once its completed

Yeah, that’d be perfect.

I like this idea @jpalomaki

One concern I have with the approach of solving this purely within the Job controller is that the Pod will continue to run after the Job finishes. Currently, the Pod enters Terminated phase and the Node can free up those resources.

You could have the Job controller delete the Pod when the controller decides it's done, but that would also be different from the current behavior, where the Terminated Pod record stays around in the API server (without taking up Node resources).

For these reasons, it seems cleaner to me to address this at the Pod API level, if at all. The Node is the only thing that should be reaching in and killing individual containers because the "completion" containers you care about already terminated. This could either take the form of a Pod-level API that lets you specify the notion of which containers it should wait for, or a Pod-level API to let external agents (e.g. the Job controller) force the Pod to terminate without actually deleting the Pod.

I am also looking for a solution to upload files produced by a container iff the processor container exited successfully.

I am not sure I understand the argument by @mingfang made against having the sidecar container watch the container status through the k8s API to know if and when to start uploading or exiting. When the sidecar container exits the pod and job should be exited successfully.

Another thought, which does seem like a hack, but I'd like to know how bad it would be to make the data producing container into an init container, and have the data uploading container (which would no longer need to be a sidecar container) automatically started only after the processor container has exited successfully. In my case I would also need a data downloader container as the first init container, to provide data to the processing container.. If this is a particularly bad idea, I would love to learn why.

Wouldn't promoting the sidecar to a first-class k8s concept solve this problem? Kubelet will be able to terminate the pod if all the running containers in it are marked as sidecar ones.

FWIW, I worked around this by just deploying Cloud SQL proxy as a regular deployment (replicas: 1) and made my Job and CronJob use it via a type: ClusterIP service. Jobs complete fine now.

I'd love an official position on this.

If we're not going to have some support from the API, we should at least have the alternative solutions officially documented so people know what to do when they hit this problem.

I'm not sure who to ping or how to get attention to this...

It would be really nice for this to be addressed. In addition to the Job never going away, the overall Pod status is apparently incorrect:

    Init Containers:
      initializer:
        State:        Terminated
          Reason:     Completed
          Exit Code:  0
          Started:    Wed, 21 Mar 2018 17:52:57 -0500
          Finished:   Wed, 21 Mar 2018 17:52:57 -0500
        Ready:        True
    Containers:
      sideCar:
        State:        Running
          Started:    Wed, 21 Mar 2018 17:53:40 -0500
        Ready:        True
      mainContainer:
        State:        Terminated
          Reason:     Completed
          Exit Code:  0
          Started:    Wed, 21 Mar 2018 17:53:41 -0500
          Finished:   Wed, 21 Mar 2018 17:55:12 -0500
        Ready:        False
    Conditions:
      Type           Status
      Initialized    True
      Ready          False
      PodScheduled   True

What's interesting is the State and Ready for the init container (Terminated, Completed, Ready=True) versus the main app container (Terminated, Completed, Ready=False). That seems to be driving the overall Pod Ready state of False - in my view, incorrectly. This is causing this Pod to be flagged as having an issue on our dashboards.
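One plausible reading of that output, sketched in Python: assuming the pod-level Ready condition is derived only from the app containers' `ready` flags (init containers excluded), a single terminated container with `ready: false` is enough to make the whole pod not Ready. The function names are made up for illustration and this is a simplification, not the kubelet's actual code.

```python
def unready_containers(container_statuses):
    """Names of app containers whose `ready` flag is false or missing."""
    return [s["name"] for s in container_statuses if not s.get("ready", False)]


def pod_ready(container_statuses):
    """Pod counts as Ready only if no app container is unready (assumption)."""
    return not unready_containers(container_statuses)
```

Applied to the statuses above, `sideCar` is ready and `mainContainer` is not, so `pod_ready` returns False even though the main container completed successfully.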

I have another customer encountering this issue with the Cloud SQL proxy specifically. They would like to not have to run it as a persistent service to allow cron jobs to access Cloud SQL.

@yuriatgoogle Easiest solution is still bash and emptyDir "magic" such as: https://github.com/kubernetes/kubernetes/issues/25908#issuecomment-365924958

It's a hack, but it'll have to do. No offense intended to @phidah.

It definitely seems like plenty of people want this for a variety of reasons. It would be good to have some official support. I had the same problem with our own sidecar and jobs, so I had the sidecar use the kube API to watch the status of the other container in the pod: if it terminated as Completed, the sidecar would exit 0; if it errored, the sidecar would exit 1. Maybe not the most elegant solution, but it did the trick without needing our developers to change much. Code's here if anyone's interested: https://github.com/uswitch/vault-creds/blob/master/cmd/main.go#L132.
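The exit-code mapping described here can be sketched in a few lines of Python. This is not the linked Go code; `sidecar_exit_code` is a hypothetical helper that takes the `status.containerStatuses` list of the sidecar's own pod (already fetched from the API) and the name of the container to follow.

```python
def sidecar_exit_code(container_statuses, main_name):
    """Decide what the sidecar should do based on the main container.

    Returns None while the main container has not terminated (keep
    running), 0 if it terminated with exit code 0, and 1 otherwise.
    """
    for status in container_statuses:
        if status.get("name") != main_name:
            continue
        terminated = (status.get("state") or {}).get("terminated")
        if terminated is None:
            return None  # still waiting or running
        return 0 if terminated.get("exitCode") == 0 else 1
    return None  # main container not reported yet
```

The sidecar would poll or watch its pod, call this on each update, and pass any non-None result to `sys.exit`, so the Job sees success or failure consistent with the main container.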

This reminds me of a Gorillaz song M1 A1...

Hello? Hellooooooo? Is anyone there?

Yes, lets please get some traction +1

So the proposed solutions that require upstream changes are:

- sidecar: true by @jmillikin-stripe
- Additional lifecycle hooks by @msperl
- jobCompletedWith by @jpalomaki

Temporary solution for the sidecar, a hacky one (but it works):

- For the cloudsql-proxy sidecar by @phidah

I would love to see a response from a Kubernetes maintainer regarding the proposed solutions, and a recommendation on how to solve this use case using the existing Kubernetes version. Thanks!

Just discovered this thread after spending a day trying to write a log agent that would upload the stdout / stderr of my rendering task to a database, only to discover that the presence of the agent in the pod would mean that the job would never terminate.

Of the suggestions given above, I like the 'sidecar: true' one the best, as it is simple and to the point - very understandable to a developer like myself. I would probably call it something slightly different, since 'sidecar' is really a pod design pattern that applies to more than just jobs and implies other things besides completion requirements. If you would excuse my bikeshedding, I'd probably call it something like 'ambient: true' to indicate that the Job can be considered completed even if this task is still running. Other words might be 'ancillary' or 'support'.

I've run into this issue as well, for the same workflow that many others have described (a sidecar container that's used for proxying connections or collecting metrics, and has no purpose after the other container in the pod exits successfully).

> An earlier suggestion was to designate some containers as a "completion" container. I would like to propose the opposite -- the ability to designate some containers as "sidecars". When the last non-sidecar container in a Pod terminates, the Pod should send TERM to the sidecars.

This is my ideal solution as well. I might suggest SIGHUP instead of SIGTERM - this seems to be the exact use case for which the semantics of SIGHUP are relevant! - but I'd be happy with either one.

As it is, running jobs on Kubernetes requires either manually patching upstream container images to handle Kubernetes-specific inter-container communication when the non-sidecar container finishes, or manually intervening to terminate the sidecar for every job so that the zombie pod doesn't hang around. Neither is particularly pleasant.

I'd be willing to make a patch for this but would like some guidance from @kubernetes/sig-apps-feature-requests before digging into any code. Are we okay with adding a sidecar field to the pod spec to make this work? I hesitate to make any changes to pod spec without being certain we want it. Maybe use annotations for now?

@andrewsykim I've been following this issue for a while (just have not gotten around to trying to address it myself), but I would suggest just using annotations for now.

My reasoning is that:

- This issue has been around for almost 2 years, and hasn't really drawn a lot of attention from the kubernetes core. Therefore, if we wait to make changes to the pod spec, or wait for direct input, we'll probably be waiting for a long time.

- A workable PR is much easier to get attention for than an old issue.

- It should be pretty easy to switch an annotation approach to use a pod attribute in the future

Thoughts?

Hi, I talked to some of the sig-apps guys at kubecon about this issue, basically it's not something that's on their immediate roadmap but it is something that they think is a valid use-case. They're very much open to someone from the community tackling this.

I've created a PR for an enhancement proposal to solve this so I hope this generates some discussion https://github.com/kubernetes/community/pull/2148.

Thanks for putting that together @Joseph-Irving! Seems like there are more details that need to be addressed for this so I'll hold off on doing any work til then :)

persistent-long-term-problem :(

cc @kow3ns @janetkuo

Without meaning to complicate the matter further, it would also be useful to be able to run a "sidecar" style container alongside initContainers.

My use case is similar to people here, I need to run cloud sql proxy at the same time as an initContainer that runs database migrations. As initContainers are run one at a time I cannot see a way to do this, except to run the proxy as a deployment+service, but I expect there are other use cases (log management etc) where that wouldn't be a suitable work around.

@mcfedr There's a reasonably active enhancement proposal which might appreciate that observation regarding init container behaviour. It's unclear to me whether that's in-scope for this proposal, or a related improvement, but I think it's sufficiently related that it makes sense to raise for consideration.

Potential implementation/compatibility problems notwithstanding, your ideal model would presumably be for sidecar init containers to run concurrently with non-sidecar init containers, which continue to run sequentially as now, and for the sidecars to terminate prior to the main sequence containers starting up?

For what it's worth, I would also like to express the need for ignoring sidecars that are still running, like CloudSQL Proxy et al.

I managed to kill cloudsql container after 30 seconds since I know my script wouldn't take this long. Here is my approach:

apiVersion: batch/v1beta1
kind: CronJob
metadata:
  name: schedule
spec:
  concurrencyPolicy: Forbid
  schedule: "*/10 * * * *"
  startingDeadlineSeconds: 40
  jobTemplate:
    spec:
      completions: 1
      template:
        spec:
          containers:
          - image: someimage
            name: imagename
            args:
            - php
            - /var/www/html/artisan
            - schedule:run
          - command: ["sh", "-c"]
            args:
            - /cloud_sql_proxy -instances=cloudsql_instance=tcp:3306 -credential_file=some_secret_file.json & pid=$! && (sleep 30 && kill -9 $pid 2>/dev/null)
            image: gcr.io/cloudsql-docker/gce-proxy:1.11
            imagePullPolicy: IfNotPresent
            name: cloudsql
            resources: {}
            volumeMounts:
            - mountPath: /secrets/cloudsql
              name: secretname
              readOnly: true
          restartPolicy: OnFailure
          volumes:
          - name: secretname
            secret:
              defaultMode: 420
              secretName: secretname

And it is working for me.

Do you guys see any drawback in this approach?
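For anyone weighing the fixed-timeout trick, here is a minimal sketch of the same pattern as a standalone helper. It is an illustration only, not the exact command from the CronJob above: `run_with_deadline` is a hypothetical name, `sleep 300` stands in for `/cloud_sql_proxy`, and it sends SIGTERM instead of `kill -9`. It treats "stopped at the deadline" as success but still surfaces a non-zero status if the process died early on its own.

```shell
#!/bin/sh
# run_with_deadline SECONDS CMD [ARGS...]
# Hypothetical helper: run CMD in the background and stop it after SECONDS.
run_with_deadline() {
  deadline=$1
  shift
  "$@" &
  pid=$!
  sleep "$deadline"
  # Ask the process to stop; harmless if it has already exited.
  kill -TERM "$pid" 2>/dev/null || true
  status=0
  wait "$pid" || status=$?
  # 143 = 128 + 15 (SIGTERM): the process was still running at the deadline.
  if [ "$status" -eq 143 ]; then
    return 0
  fi
  return "$status"
}

# Example: `sleep 300` stands in for the proxy and is stopped after 1 second.
run_with_deadline 1 sleep 300
echo "sidecar wrapper exit status: $?"
```

The deadline is still a guess: a job that runs longer than expected loses its proxy, and a short job keeps the pod alive for the full interval.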

Since I think they are related and easily adaptable for CronJobs as well, this is my solution: https://github.com/GoogleCloudPlatform/cloudsql-proxy/issues/128#issuecomment-413444029

It is based on one of the workarounds posted here but uses preStop because it's meant to be for deployments. Trapping the sidecar would work wonderfully tho.

Following this issue. Also using cloud_sql_proxy container as side car in cronjob

I used the timeout implementation by @stiko

Just adding to the conversation: the solution proposed by @oxygen0211 on using Replace is a decent workaround for now. Be sure to check it out if you run into this issue like I did.

https://github.com/kubernetes/kubernetes/issues/25908#issuecomment-327396198

This KEP has been provisionally approved: https://github.com/kubernetes/community/pull/2148. We still have a few things we need to agree on, but hopefully it will get to a place where work can start on it relatively soon. Note that KEPs will be moving to https://github.com/kubernetes/enhancements on the 30th, so if you want to follow along it will be over there.

Until the sidecar support arrives you can use a docker-level solution which can be easily removed later: https://gist.github.com/janosroden/78725e3f846763aa3a660a6b2116c7da

It uses a privileged container with a mounted docker socket and standard kubernetes labels to manage containers in the job.

We were having the same issue with Istio and its sidecar, and what we've decided to do is delete the pod via curl + a preStop hook, like this.

Give your job a minimal RBAC rule like this:

apiVersion: v1
kind: ServiceAccount
metadata:
  name: myservice-job
---
kind: Role
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: myservice-role
rules:
- apiGroups: [""]
  resources: ["pods"]
  verbs: ["delete"]
---
kind: RoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: myservice-job-rolebinding
subjects:
- kind: ServiceAccount
  name: myservice-job
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: myservice-role

Then add POD_NAME and POD_NAMESPACE to your env like so:

env:
- name: POD_NAME
  valueFrom:
    fieldRef:
      fieldPath: metadata.name
- name: POD_NAMESPACE
  valueFrom:
    fieldRef:
      fieldPath: metadata.namespace

and finally, add a preStop hook like

lifecycle:
  preStop:
    exec:
      command:
      - "/bin/bash"
      - "-c"
      - "curl -X DELETE -H \"Authorization: Bearer $(cat /var/run/secrets/kubernetes.io/serviceaccount/token)\" --cacert /var/run/secrets/kubernetes.io/serviceaccount/ca.crt https://$KUBERNETES_SERVICE_HOST/api/v1/namespaces/$POD_NAMESPACE/pods/$POD_NAME?gracePeriodSeconds=1"

Kind of messy, but a little safer and less finicky than trying to kill the right docker container.
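The curl one-liner is easier to audit when split into a small script. The sketch below is one possible layout, not the poster's actual file: `pod_delete_url`, `delete_self`, `SA_DIR` and the `--delete` flag are names made up for illustration, while `POD_NAME`, `POD_NAMESPACE` and the service-account paths are the ones used above.

```shell
#!/bin/sh
# Sketch of the preStop API call as a script. SA_DIR is the service-account
# mount path used in the one-liner above.
SA_DIR=/var/run/secrets/kubernetes.io/serviceaccount

# Build the API URL for deleting this pod from the injected variables.
pod_delete_url() {
  printf 'https://%s/api/v1/namespaces/%s/pods/%s?gracePeriodSeconds=1\n' \
    "$KUBERNETES_SERVICE_HOST" "$POD_NAMESPACE" "$POD_NAME"
}

# Issue the DELETE; -f makes curl exit non-zero on HTTP errors such as 403,
# which is what a missing RBAC rule would produce.
delete_self() {
  curl -fsS -X DELETE \
    -H "Authorization: Bearer $(cat "$SA_DIR/token")" \
    --cacert "$SA_DIR/ca.crt" \
    "$(pod_delete_url)"
}

# Only call the API when explicitly asked (hypothetical --delete flag),
# so the functions can be inspected without side effects.
if [ "${1:-}" = "--delete" ]; then
  delete_self
fi
```

Printing `pod_delete_url` first is a cheap way to confirm the downward-API variables are set before relying on the hook.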

Just throwing this in here, but I threw together a controller a while back which was meant to monitor running pod changes and send a SIGTERM to sidecar containers appropriately. It's definitely not the most robust, and honestly I haven't used it in a while, but it may be of help.

Thanks to @jpalomaki at https://github.com/kubernetes/kubernetes/issues/25908#issuecomment-371469801 for the suggestion to run cloud_sql_proxy as a deployment with ClusterIP service, and to @cvallance at https://github.com/kubernetes/kubernetes/issues/25908#issuecomment-364255363 for the tip on setting tcp:0.0.0.0 in the cloud_sql_proxy instances parameter to allow non-local connections to the process. Together those made it painless to let cron jobs use the proxy.

long-term-issue (note to self)

Same issue. Looking for a way, or official docs, on how to use GKE cron jobs with Cloud SQL.

Side note:

Google updated their Cloud SQL -> Connecting from Google Kubernetes Engine documentation: in addition to "Connecting using the Cloud SQL Proxy Docker image", there is now "Connecting using a private IP address".

So if you are here for the same reason I'm here (because of the cloud_sql_proxy), you can now use the new Private IP feature.

The Private IP feature seems to require deleting the entire cluster and recreating it...?

@cropse That is only needed if your cluster is not VPC-native.

I made a workaround for this issue. It's not a great solution, but it works; I hope it helps until the feature is added. VPC is one way to solve it, but deleting the entire cluster is still painful.

Just to add my two cents: helm tests also break if injection of istio sidecar occurs as the pod never completes.

@dansiviter you can check my workaround, I already tested in my project with helm.

looking forward to see this implemented! :)

We have the same issue with normal jobs when an Istio proxy gets injected into them. On top of that, we also want this because we want to run CI jobs with Prow.

e.g. Rails app container + sidecar database container for testing purposes.

@cropse Thanks. I've not tried it as we'd need to configure this for all tests. We're just allowing the Pod (Helm Tests don't allow Job unfortunately) to fail and relying on manually inspecting the log until this issue is fixed long term. However, it's also becoming a problem for other Jobs too, so we may have to reconsider that position.

FYI, the tracking issue for this feature is over here https://github.com/kubernetes/enhancements/issues/753 if people want to follow along, we've got a KEP, done some prototyping (there's a POC branch/video), still need to iron out some of the implementation details before it will be in an implementable state.

Side note:

Google updated their Cloud SQL -> Connecting from Google Kubernetes Engine documentation, now in addition to the Connecting using the Cloud SQL Proxy Docker image you can Connecting using a private IP address

So if you are here for the same reason Im here (because of the cloud_sql_proxy), you can use now the new feature of Private IP

I was here for the same reason; however, our Cloud SQL was provisioned before this feature was ready. I combined previous suggestions and came up with this (probably not ideal, but it works) for my dbmate migrator helm chart.

containers:
- name: migrator
  image: "{{ .Values.image.repository }}:{{ .Values.image.tag }}"
  imagePullPolicy: {{ .Values.image.pullPolicy }}
  command: ["/bin/bash", "-c"]
  args:
  - |
    /cloud_sql_proxy -instances={{ .Values.gcp.project }}:{{ .Values.gcp.region }}:{{ .Values.gcp.cloudsql_database }}=tcp:5432 -credential_file=/secrets/cloudsql/credentials.json &
    ensure_proxy_is_up.sh dbmate up
  env:
  - name: DATABASE_URL
    valueFrom:
      secretKeyRef:
        name: mysecret
        key: DATABASE_URL
  volumeMounts:
  - name: cloudsql-instance-credentials
    mountPath: /secrets/cloudsql
    readOnly: true
volumes:
- name: cloudsql-instance-credentials
  secret:
    secretName: cloudsql-instance-credentials

ensure_proxy_is_up.sh

#!/bin/bash
# Block until the database behind the proxy accepts connections.
until pg_isready -d "$DATABASE_URL"; do
  sleep 1
done
# run the command that was passed in
exec "$@"
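One thing to be aware of with the readiness loop is that it waits forever if the proxy never comes up. A bounded variant might look like the sketch below. `wait_until` is a hypothetical helper, not part of the chart above, and the attempt count is an arbitrary choice.

```shell
#!/bin/sh
# wait_until MAX_TRIES CMD [ARGS...]
# Hypothetical helper: retry CMD once per second until it succeeds,
# giving up after MAX_TRIES failed attempts.
wait_until() {
  max_tries=$1
  shift
  tries=0
  until "$@"; do
    tries=$((tries + 1))
    if [ "$tries" -ge "$max_tries" ]; then
      echo "gave up after $tries attempts: $*" >&2
      return 1
    fi
    sleep 1
  done
}

# In ensure_proxy_is_up.sh this could replace the bare until loop, e.g.:
#   wait_until 60 pg_isready -d "$DATABASE_URL" || exit 1
#   exec "$@"
```

Failing fast lets the Job's own retry settings handle a proxy that never starts, rather than leaving the pod hanging.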

Would it be a good time to add a notion of sidecar containers to Kubernetes and allow pod cleanup based on whether non-sidecar containers have finished?

@Willux I'm on my phone atm, so it's harder to locate links for reference, but what you've just proposed is already well underway.

@krancour thanks for the update. I must've missed that detail. There wasn't much activity happening here lately, so just wanted to make sure there is something ongoing :)

For reference I made a cloud-sql-proxy sidecar version of @jmillikin-stripe's workaround where a file in a shared volume communicates the state to the sidecar.

It works OK, but is by far the nastiest hack in my K8s configuration :(

apiVersion: batch/v1
kind: Job
metadata:
  name: example-job
spec:
  template:
    spec:
      containers:
      - name: example-job
        image: eu.gcr.io/example/example-job:latest
        command: ["/bin/sh", "-c"]
        args:
        - |
          trap "touch /tmp/pod/main-terminated" EXIT
          run-job.sh
        volumeMounts:
        - mountPath: /tmp/pod
          name: tmp-pod
      - name: cloudsql-proxy
        image: gcr.io/cloudsql-docker/gce-proxy:1.11
        command: ["/bin/sh", "-c"]
        args:
        - |
          /cloud_sql_proxy --dir=/cloudsql -instances=example:europe-west3:example=tcp:3306 -credential_file=/secrets/cloudsql/credentials.json &
          CHILD_PID=$!
          (while true; do if [[ -f "/tmp/pod/main-terminated" ]]; then kill $CHILD_PID; echo "Killed $CHILD_PID as the main container terminated."; fi; sleep 1; done) &
          wait $CHILD_PID
          if [[ -f "/tmp/pod/main-terminated" ]]; then echo "Job completed. Exiting..."; exit 0; fi
        volumeMounts:
        - name: cloudsql-instance-credentials
          mountPath: /secrets/cloudsql
          readOnly: true
        - name: cloudsql
          mountPath: /cloudsql
        - mountPath: /tmp/pod
          name: tmp-pod
          readOnly: true
      restartPolicy: Never
      volumes:
      - name: cloudsql-instance-credentials
        secret:
          secretName: cloudsql-instance-credentials
      - name: cloudsql
        emptyDir: {}
      - name: tmp-pod
        emptyDir: {}
  backoffLimit: 1

Can anyone internal to the project please comment on the progress of this issue?

Is it fair to assume that this is the best option for those of us working on GKE's stable release channel, which probably won't catch up to Kubernetes 1.18 for a few months at the very least?

@Datamance at this point the KEP to address this issue looks like it's indefinitely on hold.

A while back I posted this comment, which was my old solution. I'm not trying to push my own stuff here; that comment has just been lost in the "100 more comments..." of GitHub, and I figured resurfacing it may be useful again.

@nrmitchi thanks for reposting that. I'm one who had overlooked it in the sea of comments and this looks like a fantastic near-term solution.

We figured out a different approach. If you add the following to your Pod containers:

securityContext:
  capabilities:
    add:
    - SYS_PTRACE

then you will be able to find the PID of processes in other containers. We run the following at the end of our main container:

sql_proxy_pid=$(pgrep cloud_sql_proxy) && kill -INT $sql_proxy_pid

@krancour glad it helped. If you look at the network in that repository there are a couple forks which are almost-definitely in a better place than my original one, and might be better to build off of/use.

IIRC the lemonade-hq fork had some useful additions.

@nrmitchi, I've been glancing at the code, but it may be quicker to just ask you...

Can you briefly comment on whatever pre-requisites may exist that aren't mentioned in the README?

For instance, do the images your sidecars are based on require any special awareness of this workaround? e.g. Do they need to listen on a specific port for a signal from the controller? Or perhaps must they include a certain shell (bash?)

@krancour I'll preface my response with a note that this solution was written a couple years ago and my memory may be a bit rusty.

It was designed at the time such that the containers in question did not need to be aware of the work-around. We were using primarily third-party applications in sidecar (for example, I believe stripe/veneur was one), and did not want to be forking/modifying.

The only requirement of the sidecars is that they properly listen for a SIGTERM signal, and then shut down. I recall having some issues with third-party code running in sidecars that were expecting a different signal and had to be worked around, but really the controller should have just allowed the signal sent to be specified (ie, SIGINT instead of SIGTERM).

They don't need to listen to any ports for a signal, since the controller uses an exec to signal the main process of the sidecar directly. IIRC at the time that functionality was copied out of the kubernetes code because it didn't exist in the client. I believe this exists in the official client now and should probably be updated.
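To make the exec-based signalling concrete, here is a rough sketch using `kubectl exec` rather than the controller's Go code. This is not the controller's implementation: `signal_sidecar` and the `KUBECTL` override are hypothetical names. It assumes the target image ships a `kill` binary and that the sidecar's main process runs as PID 1 and handles the signal.

```shell
#!/bin/sh
# KUBECTL can be overridden (e.g. KUBECTL=echo) to preview the command
# without touching a cluster.
KUBECTL=${KUBECTL:-kubectl}

# signal_sidecar POD CONTAINER [SIGNAL]
# Hypothetical helper: send SIGNAL (default TERM) to PID 1 of a container.
# Needs a `kill` executable inside the target image.
signal_sidecar() {
  pod=$1
  container=$2
  signal=${3:-TERM}
  "$KUBECTL" exec "$pod" -c "$container" -- kill "-$signal" 1
}
```

Making the signal a parameter covers sidecars that expect SIGINT instead of SIGTERM. For example, `signal_sidecar my-job-pod cloudsql-proxy INT`, where both names are placeholders.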

@ruiyang2015 thanks for this hack.

If anyone is implementing it, though, be sure to understand the implications of sharing a process namespace between the containers.

@nrmitchi

uses an exec to signal the main process of the sidecar directly

That is part of why I asked... I guess, specifically, I am wondering if this doesn't work for containers based on images that are built FROM scratch.

@krancour Fair point, I never went and tested it with containers built from scratch. Looking at the code (or my original version; this could have changed in forks), it looks like it's going to be dependent on bash, but it should be possible to modify.

it's going to be dependent on bash, but should be able to be modified

Sure, but as long as it's exec'ing, it's always going to be dependent on some binary that's present in the container and for a scratch container, there's _nothing_ there except whatever you put there explicitly. 🤷

Given that limitation, I can't use this for a use case where the containers that are running might be totally arbitrary and specified by a third party. Oh-- and then I have Windows containers in play, too.

I'll mention what I am going to settle on instead. It's probably too heavy-handed for most use cases, but I'm mentioning it in case someone else's use case is similar enough to mine to get away with this...

I can afford the luxury of simply _deleting_ a pod whose "primary" container has exited, as long as I record the exit status first. So I'm going to end up writing a controller that will monitor some designated (via annotation) container for completion, record its success or failure in a datastore that already tracks "job" status, and then delete the pod entirely.

For good measure, I will probably put a little delay on the pod delete to maximize my central log aggregation's chances of getting the last few lines of the primary container's output before it's torpedoed.

Heavy-handed, but may work for some.
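For a feel of what that record-then-delete flow involves, here is a rough shell approximation using `kubectl`. It is only a sketch of the idea, not the controller described above: `container_exit_code`, `reap_pod` and the `KUBECTL` override are hypothetical names, and the `echo` stands in for writing to a real datastore.

```shell
#!/bin/sh
# KUBECTL can be overridden for testing.
KUBECTL=${KUBECTL:-kubectl}

# Print the exit code of a terminated container, or nothing if it has not
# terminated yet.
container_exit_code() {
  pod=$1
  container=$2
  "$KUBECTL" get pod "$pod" -o \
    "jsonpath={.status.containerStatuses[?(@.name==\"$container\")].state.terminated.exitCode}"
}

# reap_pod POD CONTAINER [DELAY_SECONDS]
# Record the primary container's exit code, wait a little so log shipping
# can catch up, then delete the whole pod (sidecars included).
reap_pod() {
  pod=$1
  container=$2
  delay=${3:-10}
  code=$(container_exit_code "$pod" "$container") || return 1
  [ -n "$code" ] || return 2   # primary container is still running
  echo "pod=$pod container=$container exit_code=$code"   # stand-in for a datastore write
  sleep "$delay"
  "$KUBECTL" delete pod "$pod"
}
```

A real controller would watch pods instead of polling. Note also that the Job controller may create a replacement for a deleted pod that had not yet been counted as succeeded, which is why the result has to be tracked outside the Job status.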

@krancour Totally true. As is, the controller will not work for arbitrary use-cases. Honestly I never went back and attempted to abstract some of the implementation to support other cases because I really thought that the previously mentioned KEP would have been merged and made the need for this functionality moot.

Given that this issue is like 4 years old, the KEP hasn’t gone anywhere yet, and the state of the art is a hacky inline shell script replacing every entrypoint, I decided to codify the “standard” hack (tombstones in a shared volume) into a Go binary that can be easily baked into container images using a multi-stage build.

https://github.com/karlkfi/kubexit

There’s a few ways to use it:

- Bake it into your images

- Side load it using an init container and an ephemeral volume.

- Provision it on each node and side load it into containers using a host bind mount

Edit: v0.2.0 now supports “birth dependencies” (delayed start) and “death dependencies” (self-termination).
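For readers who haven't seen the tombstone pattern, here is a minimal shell sketch of the idea. This is not kubexit's code or its interface: `run_main`, `run_sidecar` and `GRAVEYARD` are names made up for illustration, and `GRAVEYARD` stands in for a directory on a volume shared by both containers.

```shell
#!/bin/sh
# Tombstone sketch. GRAVEYARD stands in for a directory on a shared
# emptyDir volume mounted into both containers.
GRAVEYARD=${GRAVEYARD:-/tmp/graveyard}
mkdir -p "$GRAVEYARD"

# run_main CMD [ARGS...]: run the main workload, then leave a tombstone
# whether it succeeded or failed, and pass its exit status through.
run_main() {
  status=0
  "$@" || status=$?
  touch "$GRAVEYARD/main"
  return "$status"
}

# run_sidecar CMD [ARGS...]: run the sidecar until the main tombstone appears.
run_sidecar() {
  "$@" &
  pid=$!
  while [ ! -f "$GRAVEYARD/main" ]; do
    # If the sidecar died on its own, report its status instead of waiting.
    if ! kill -0 "$pid" 2>/dev/null; then
      status=0
      wait "$pid" || status=$?
      return "$status"
    fi
    sleep 1
  done
  # Main is done: stop the sidecar and exit cleanly so the pod can complete.
  kill -TERM "$pid" 2>/dev/null || true
  wait "$pid" 2>/dev/null || true
  return 0
}
```

Each container's entrypoint would wrap its real command, e.g. `run_main run-job.sh` in one and `run_sidecar /cloud_sql_proxy ...` in the other. That is roughly what the inline scripts earlier in the thread do by hand.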

Drive-by comment: this looks exactly like https://github.com/kubernetes/enhancements/issues/753

@vanzin as has been noted before, that KEP is on indefinite hold.

My use case for this is that Vault provides credentials for a CronJob to run. Once the task is done the Vault sidecar is still running with the Job in a pending state and that triggers the monitoring system thinking that something is wrong. It's a shame what happened to the KEP.

Most helpful comment

For reference, here's the bash madness I'm using to simulate desired sidecar behavior: