Kubernetes: `kubectl get` should have a way to filter on advanced pod status

What happened:

I'd like to have a simple command to check for pods that are currently not ready.

What you expected to happen:

I can see a couple of options:

- There is some magic flag I am not aware of

- Having a flag for `kubectl get` to filter the output using go/jsonpath

- Distinguish between Pod Phase `Running&Ready` and `Running`

- Flag to filter on ready status

How to get that currently:

kubectl get pods --all-namespaces -o json | jq -r '.items[] | select(.status.phase != "Running" or ([ .status.conditions[] | select(.type == "Ready" and .state == false) ] | length ) == 1 ) | .metadata.namespace + "/" + .metadata.name'


/kind feature

/sig cli

Same here. It seems incredible to need such complex syntax just to list non-running containers...

Ideally I would be able to say something like:

kubectl get pods --namespace foo -l status=pending

I had to change the condition to .status == "False" to get it to work:

kubectl get pods -a --all-namespaces -o json | jq -r '.items[] | select(.status.phase != "Running" or ([ .status.conditions[] | select(.type == "Ready" and .status == "False") ] | length ) == 1 ) | .metadata.namespace + "/" + .metadata.name'
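Where jq isn't available, roughly the same check can be sketched with kubectl's built-in JSONPath output plus awk. This is a minimal sketch, not a kubectl feature: the function name not_ready_pods is hypothetical, and it relies on kubectl's default of ignoring missing template keys, so a pod with no Ready condition yet prints an empty last field. Like the jq filter, it reports pods that are not Running, plus Running pods whose Ready condition is not "True".

```shell
# Hypothetical helper (not part of kubectl): print namespace/name for pods that
# are not Running, or are Running but whose Ready condition is not "True".
not_ready_pods() {
  # One line per pod: namespace, name, phase, status of the Ready condition.
  kubectl get pods --all-namespaces -o jsonpath='{range .items[*]}{.metadata.namespace}{" "}{.metadata.name}{" "}{.status.phase}{" "}{.status.conditions[?(@.type=="Ready")].status}{"\n"}{end}' |
    awk '$3 != "Running" || $4 != "True" { print $1 "/" $2 }'
}
```

Running not_ready_pods prints one namespace/name per line. As with the jq version, completed (Succeeded) pods are also reported because their phase is not Running.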

PR #50140 provides a new flag, --field-selector, to filter these pods now:

$ kubectl get pods --field-selector=status.phase!=Running

/close

@dixudx

kubectl version

Client Version: version.Info{Major:"1", Minor:"8", GitVersion:"v1.8.4", GitCommit:"9befc2b8928a9426501d3bf62f72849d5cbcd5a3", GitTreeState:"clean", BuildDate:"2017-11-20T19:11:02Z", GoVersion:"go1.9.2", Compiler:"gc", Platform:"darwin/amd64"}

Server Version: version.Info{Major:"1", Minor:"8", GitVersion:"v1.8.0", GitCommit:"0b9efaeb34a2fc51ff8e4d34ad9bc6375459c4a4", GitTreeState:"clean", BuildDate:"2017-11-29T22:43:34Z", GoVersion:"go1.9.1", Compiler:"gc", Platform:"linux/amd64"}

kubectl get po --field-selector=status.phase==Running -l app=k8s-watcher

Error: unknown flag: --field-selector

@asarkar --field-selector is targeted for v1.9, which is coming out soon.

@dixudx thanks for the PR for the field selector. But I think this is not what I had in mind. I wanted to be able to find pods that have one or more containers that are not passing their readiness checks.

Given a non-ready pod (kubectl v1.9.1) with READY 0/1:

$ kubectl get pods

NAME          READY   STATUS    RESTARTS   AGE
pod-unready   0/1     Running   0          50s

This pod is still in Phase running, so I can't get it using your proposed filter:

$ kubectl get pods --field-selector=status.phase!=Running

No resources found.

/reopen

Got the same issue.

I would be glad to have something like:

kubectl get pods --field-selector=status.ready!=True

Hm, can I use it to match nested array items? For example, I want to do:

kubectl get pods --field-selector=status.containerStatuses.restartCount!=0

But it returns an error. I also tried status.containerStatuses..restartCount, but that doesn't work either and returns the same error: Error from server (BadRequest): Unable to find "pods" that match label selector "", field selector "status.containerStatuses..restartCount==0": field label not supported: status.containerStatuses..restartCount

@artemyarulin try status.containerStatuses[*].restartCount==0

Thanks, I just tried with kubectl v1.9.3 / cluster v1.9.2 and it returns the same error: Error from server (BadRequest): Unable to find "pods" that match label selector "", field selector "status.containerStatuses[*].restartCount!=0": field label not supported: status.containerStatuses[*].restartCount. Am I doing something wrong? Does it work for you?
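As these errors show, field selectors can't index into arrays such as status.containerStatuses. One client-side workaround is to print each pod's restart counts with JSONPath and sum them in awk. This is a minimal sketch: the function name pods_with_restarts is hypothetical, and it assumes kubectl prints the values matched by [*] separated by spaces.

```shell
# Hypothetical helper: list pods in the current namespace whose containers
# have restarted at least once. Field selectors cannot reach into
# status.containerStatuses, so the filtering happens client-side in awk.
pods_with_restarts() {
  # One line per pod: name followed by each container's restartCount.
  kubectl get pods -o jsonpath='{range .items[*]}{.metadata.name}{" "}{.status.containerStatuses[*].restartCount}{"\n"}{end}' |
    awk '{
      total = 0
      for (i = 2; i <= NF; i++) total += $i   # sum restarts across containers
      if (total > 0) print $1, total
    }'
}
```

Each output line is a pod name followed by its total restart count across containers.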

Sadly, the same thing happens for v1.9.4:

What I'm trying to do here is to get all pods with a given parent uid...

$ kubectl get pod --field-selector='metadata.ownerReferences[*].uid=d83a23e1-37ba-11e8-bccf-0a5d7950f698'

Error from server (BadRequest): Unable to find "pods" that match label selector "", field selector "ownerReferences[*].uid=d83a23e1-37ba-11e8-bccf-0a5d7950f698": field label not supported: ownerReferences[*].uid

Waiting anxiously for this feature •ᴗ•

--field-selector='metadata.ownerReferences[].uid=d83a23e1-37ba-11e8-bccf-0a5d7950f698'

field label not supported: ownerReferences[].uid

This filter string is not supported.

For pods, only "metadata.name", "metadata.namespace", "spec.nodeName", "spec.restartPolicy", "spec.schedulerName", "status.phase", "status.podIP" and "status.nominatedNodeName" are supported.

@migueleliasweb If you want to filter the pods in your case, you can use jq:

$ kubectl get pod -o json | jq '.items | map(select(.metadata.ownerReferences[]? | .uid=="d83a23e1-37ba-11e8-bccf-0a5d7950f698"))'

You can also use kubectl's JSONPath support.
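For example, the owner-UID lookup could be sketched with JSONPath, doing the UID comparison in awk. The function name pods_owned_by is hypothetical; pods without owner references print no UIDs and therefore never match.

```shell
# Hypothetical helper: print the names of pods whose ownerReferences
# contain the given UID. The UID match happens client-side in awk.
pods_owned_by() {
  owner_uid=$1
  # One line per pod: name followed by the UID of every owner reference.
  kubectl get pods -o jsonpath='{range .items[*]}{.metadata.name}{" "}{.metadata.ownerReferences[*].uid}{"\n"}{end}' |
    awk -v uid="$owner_uid" '{
      for (i = 2; i <= NF; i++)
        if ($i == uid) { print $1; break }
    }'
}
```

Usage: pods_owned_by d83a23e1-37ba-11e8-bccf-0a5d7950f698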

Thanks @dixudx. But let me understand a little better. If I'm running this query in a cluster with a few thousand pods:

- Does the APIServer fetch all of them from etcd and then apply in-memory filtering?

- Or does my kubectl receive all pods and apply the filter locally?

- Or does the filtering occur inside etcd, so only the filtered results are returned?

@migueleliasweb If --field-selector is used with kubectl, the filtering happens in the apiserver's cache. The apiserver keeps a single watch open to etcd, watching all objects of a given type without any filtering, and stores the changes delivered from etcd in its cache.

For --sort-by, the sorting is done on the kubectl client side.

This works great for me with kubectl get, but it would also be nice if it applied to delete and describe.

Issues go stale after 90d of inactivity.

Mark the issue as fresh with /remove-lifecycle stale.

Stale issues rot after an additional 30d of inactivity and eventually close.

If this issue is safe to close now please do so with /close.

Send feedback to sig-testing, kubernetes/test-infra and/or fejta.

/lifecycle stale

/remove-lifecycle stale

I'm using kubectl get po --all-namespaces | grep -vE '1/1|2/2|3/3' to list all pods that are not fully ready.

IMHO this is more than just a feature request; it's pretty much a must. The fact that a pod in CrashLoopBackOff isn't listed by --field-selector=status.phase!=Running makes the whole field-selector thing pretty useless. There should be an easy way to get a list of pods that have problems without resorting to parsing JSON.

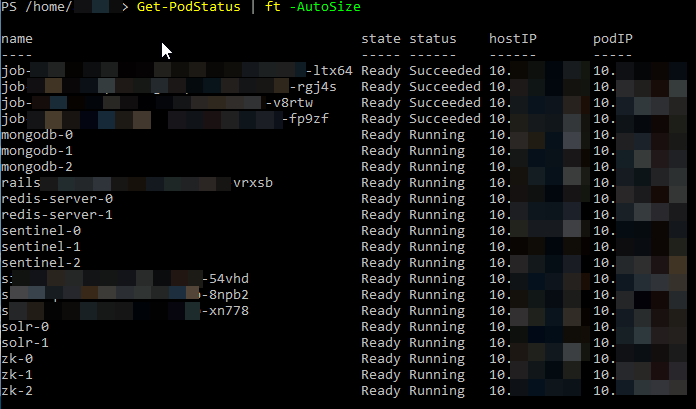

With PowerShell, you can do something like the following.

To return all statuses:

Get-PodStatus | ft -autosize

To filter them:

Get-PodStatus | where { ($_.status -eq "Running") -and ($_.state -eq "ready") } | ft -AutoSize

FYI:

kubectl get pods --field-selector=status.phase!=Succeeded

Can be used to filter out completed jobs, which appear to be included by default as of version 217.

Not:

kubectl get pods --field-selector=status.phase!=Completed which, to me, would be more rational, given that the STATUS is displayed as 'Completed'.

Is this supposed to work on status.phase? I terminated a node, and all of its pods now display as Unknown or NodeLost, but they aren't filtered out by the field selector:

$ kubectl get pods --field-selector=status.phase=Running --all-namespaces

NAMESPACE     NAME                                          READY   STATUS     RESTARTS   AGE
kube-system   coredns-78fcdf6894-9gc7n                      1/1     Running    0          1h
kube-system   coredns-78fcdf6894-lt58z                      1/1     Running    0          1h
kube-system   etcd-i-0564e0652e0560ac4                      1/1     Unknown    0          1h
kube-system   etcd-i-0af8bbf22a66edc1d                      1/1     Running    0          1h
kube-system   etcd-i-0e780f1e91f5a7116                      1/1     Running    0          1h
kube-system   kube-apiserver-i-0564e0652e0560ac4            1/1     Unknown    0          1h
kube-system   kube-apiserver-i-0af8bbf22a66edc1d            1/1     Running    1          1h
kube-system   kube-apiserver-i-0e780f1e91f5a7116            1/1     Running    1          1h
kube-system   kube-controller-manager-i-0564e0652e0560ac4   1/1     Unknown    1          1h
kube-system   kube-controller-manager-i-0af8bbf22a66edc1d   1/1     Running    0          1h
kube-system   kube-controller-manager-i-0e780f1e91f5a7116   1/1     Running    0          1h
kube-system   kube-router-9kkxh                             1/1     NodeLost   0          1h
kube-system   kube-router-dj9sp                             1/1     Running    0          1h
kube-system   kube-router-n4zzw                             1/1     Running    0          1h
kube-system   kube-scheduler-i-0564e0652e0560ac4            1/1     Unknown    0          1h
kube-system   kube-scheduler-i-0af8bbf22a66edc1d            1/1     Running    0          1h
kube-system   kube-scheduler-i-0e780f1e91f5a7116            1/1     Running    0          1h
kube-system   tiller-deploy-7678f78996-6t84j                1/1     Running    0          1h

I wouldn't expect the non-Running pods to be listed by this query...

Should this field selector work for other object types as well? It doesn't seem to work for PVCs.

$ kubectl get pvc --field-selector=status.phase!=Bound

Error from server (BadRequest): Unable to find {"" "v1" "persistentvolumeclaims"} that match label selector "", field selector "status.phase!=Bound": "status.phase" is not a known field selector: only "metadata.name", "metadata.namespace"

This field selector syntax is still confusing to me. For some reason I can't select the "Evicted" status positively (those pods only show up when filtering on status.phase!=Running). What did I do wrong here?

I read through https://kubernetes.io/docs/concepts/overview/working-with-objects/field-selectors/ but still can't get it working.

$ kubectl get po --field-selector status.phase!=Running

NAME                     READY   STATUS    RESTARTS   AGE
admin-55d76dc598-sr78x   0/2     Evicted   0          22d
admin-57f6fcc898-df82r   0/2     Evicted   0          17d
admin-57f6fcc898-dt5kb   0/2     Evicted   0          18d
admin-57f6fcc898-jqp9j   0/2     Evicted   0          17d
admin-57f6fcc898-plxhr   0/2     Evicted   0          17d
admin-57f6fcc898-x5kdz   0/2     Evicted   0          17d
admin-57f6fcc898-zgsr7   0/2     Evicted   0          18d
admin-6489584498-t5fzf   0/2     Evicted   0          28d
admin-6b7f5dbb5d-8h9kt   0/2     Evicted   0          9d
admin-6b7f5dbb5d-k57sk   0/2     Evicted   0          9d
admin-6b7f5dbb5d-q7h7q   0/2     Evicted   0          7d
admin-6b7f5dbb5d-sr8j6   0/2     Evicted   0          9d
admin-7454f9b9f7-wrgdk   0/2     Evicted   0          38d
admin-76749dd59d-tj48m   0/2     Evicted   0          22d
admin-78648ccb66-qxgjp   0/2     Evicted   0          17d
admin-795c79f58f-dtcnb   0/2     Evicted   0          25d
admin-7d58ff6cfd-5pt9p   0/2     Evicted   0          4d
admin-7d58ff6cfd-99pzq   0/2     Evicted   0          3d
admin-7d58ff6cfd-9cbjd   0/2     Evicted   0          3d
admin-b5d6d84d6-5q67l    0/2     Evicted   0          12d
admin-b5d6d84d6-fh2ck    0/2     Evicted   0          13d
admin-b5d6d84d6-r4d8b    0/2     Evicted   0          14d
admin-c56558f95-bxxq5    0/2     Evicted   0          7d
api-5445fd6b8b-4jts8     0/2     Evicted   0          3d
api-5445fd6b8b-5b2jp     0/2     Evicted   0          2d
api-5445fd6b8b-7km72     0/2     Evicted   0          4d
api-5445fd6b8b-8tsgf     0/2     Evicted   0          4d
api-5445fd6b8b-ppnxp     0/2     Evicted   0          2d
api-5445fd6b8b-qqnxr     0/2     Evicted   0          2d
api-5445fd6b8b-z77wp     0/2     Evicted   0          2d
api-5445fd6b8b-zjcmg     0/2     Evicted   0          2d
api-5b6647d48b-frbhj     0/2     Evicted   0          9d
api-9459cb775-5cz7f      0/2     Evicted   0          1d

$ kubectl get po --field-selector status.phase=Evicted

No resources found.

$ kubectl get po --field-selector status.phase==Evicted

No resources found.

$ kubectl get po --field-selector status.phase=="Evicted"

No resources found.

$ kubectl get po --field-selector status.phase="Evicted"

No resources found.

$ kubectl version

Client Version: version.Info{Major:"1", Minor:"10", GitVersion:"v1.10.7", GitCommit:"0c38c362511b20a098d7cd855f1314dad92c2780", GitTreeState:"clean", BuildDate:"2018-08-20T10:09:03Z", GoVersion:"go1.9.3", Compiler:"gc", Platform:"darwin/amd64"}

Server Version: version.Info{Major:"1", Minor:"10+", GitVersion:"v1.10.6-gke.11", GitCommit:"42df8ec7aef509caba40b6178616dcffca9d7355", GitTreeState:"clean", BuildDate:"2018-11-08T20:06:00Z", GoVersion:"go1.9.3b4", Compiler:"gc", Platform:"linux/amd64"}

There ought to be a way to just list Running pods that have (or have not) passed their readiness check.

Also, where is it documented what the values in the Ready column mean? (0/1, 1/1)

@ye It's not working because Evicted isn't a status.phase value: https://kubernetes.io/docs/concepts/workloads/pods/pod-lifecycle/

Evicted belongs to the status's reason field: https://kubernetes.io/docs/reference/generated/kubernetes-api/v1.13/#podstatus-v1-core

Which unfortunately isn't queryable with field-selector at the moment.
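Until status.reason is selectable, one workaround is to narrow the list server-side with the supported status.phase selector (evicted pods are reported with phase Failed) and then match the reason on the client. A sketch using custom-columns, which prints <none> for unset fields; the function name evicted_pods is hypothetical.

```shell
# Hypothetical helper: list pods in the current namespace evicted by the kubelet.
# status.phase=Failed is filtered by the API server; status.reason is
# not a supported field selector, so "Evicted" is matched in awk.
evicted_pods() {
  kubectl get pods --no-headers --field-selector=status.phase=Failed \
    -o custom-columns=NAME:.metadata.name,REASON:.status.reason |
    awk '$2 == "Evicted" { print $1 }'
}
```

Failed pods with any other reason (for example a crashed Job pod) show a different value or <none> in the REASON column and are skipped.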

Shouldn't CrashLoopBackOff be included, since it's a status.phase according to the pod-lifecycle docs?

17:18:13 $ kubectl get pods --field-selector=status.phase!=Running

No resources found.

17:19:32 $ kubectl get pods|grep CrashLoopBackOff

kubernetes-dashboard-head-57b9585588-lvr5t 0/1 CrashLoopBackOff 2292 8d

17:22:45 $ kubectl version

Client Version: version.Info{Major:"1", Minor:"13", GitVersion:"v1.13.3", GitCommit:"721bfa751924da8d1680787490c54b9179b1fed0", GitTreeState:"clean", BuildDate:"2019-02-01T20:08:12Z", GoVersion:"go1.11.5", Compiler:"gc", Platform:"darwin/amd64"}

Server Version: version.Info{Major:"1", Minor:"13", GitVersion:"v1.13.2", GitCommit:"cff46ab41ff0bb44d8584413b598ad8360ec1def", GitTreeState:"clean", BuildDate:"2019-01-10T23:28:14Z", GoVersion:"go1.11.4", Compiler:"gc", Platform:"linux/amd64"}
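The field selector can't see CrashLoopBackOff because it isn't a pod phase: it's the reason on a container's waiting state (status.containerStatuses[*].state.waiting.reason), and such a pod's phase usually remains Running. A client-side sketch that matches on that reason; the function name pods_with_waiting_reason and its CrashLoopBackOff default are hypothetical.

```shell
# Hypothetical helper: list pods (all namespaces) with at least one container
# whose waiting reason equals $1 (default: CrashLoopBackOff).
pods_with_waiting_reason() {
  reason=${1:-CrashLoopBackOff}
  # One line per pod: namespace, name, then every container waiting reason.
  kubectl get pods --all-namespaces -o jsonpath='{range .items[*]}{.metadata.namespace}{" "}{.metadata.name}{" "}{.status.containerStatuses[*].state.waiting.reason}{"\n"}{end}' |
    awk -v want="$reason" '{
      for (i = 3; i <= NF; i++)
        if ($i == want) { print $1 "/" $2; break }
    }'
}
```

The same helper works for other waiting reasons, e.g. pods_with_waiting_reason ImagePullBackOff.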


Still an issue, 2 years later.

I cannot believe this has been around for so long without a solution.

I'm using kubectl get pods --all-namespaces | grep -Ev '([0-9]+)/\1'

This can already be done in kubectl using jsonpath output:

Eg: this will print the namespace and name of each pod in Running state.

kubectl get pods --all-namespaces -o jsonpath="{range .items[?(@.status.phase == 'Running')]}{.metadata.namespace}{' '}{.metadata.name}{'\n'}{end}"

@albertvaka that won't show if you have a pod with CrashLoopBackOff

$ kubectl get pods --all-namespaces -o jsonpath="{range .items[?(@.status.phase != 'Running')]}{.metadata.namespace}{' '}{.metadata.name}{'\n'}{end}"

default pod-with-sidecar

my-system glusterfs-brick-0

my-system sticky-scheduler-6f8d74-6mh4q

$ kubectl get pods --all-namespaces | grep -Ev '([0-9]+)/\1'

NAMESPACE   NAME                            READY   STATUS             RESTARTS   AGE
default     pod-with-sidecar                1/2     ImagePullBackOff   0          3m
default     pod-with-sidecar2               1/2     CrashLoopBackOff   4          3m
my-system   glusterfs-brick-0               0/2     Pending            0          4m
my-system   sticky-scheduler-6f8d74-6mh4q   0/1     ImagePullBackOff   0          9m

Also, I need an output format similar to kubectl get pods.

Even doing this didn't help:

$ kubectl get pods --field-selector=status.phase!=Running,status.phase!=Succeeded --all-namespaces

NAMESPACE   NAME                            READY   STATUS              RESTARTS   AGE
default     pod-with-sidecar                0/2     ContainerCreating   0          37s
my-system   glusterfs-brick-0               0/2     Pending             0          3m
my-system   sticky-scheduler-6f8d74-6mh4q   0/1     ImagePullBackOff    0          7m

> @albertvaka that won't show if you have a pod with CrashLoopBackOff

That's the point.

> Also i need output format similar to kubectl get pods

You can customize the columns you want to display with jsonpath.

@albertvaka I believe the point here is that there should be a simple way to get all pods that aren't ready without writing obtuse JSONPath syntax (which I don't think would work anyway, since pods in CrashLoopBackOff are excluded by the filter). The fact that pods in a CrashLoopBackOff state are excluded from a query such as kubectl get pods --field-selector=status.phase!=Running is pretty bizarre. Why can't we just have something simple and straightforward, like kubectl get pods --not-ready?

Still an issue. I agree that if I do this to see a "running" pod:

kubectl get -n kube-system pods -lname=tiller --field-selector=status.phase=Running

NAME                             READY   STATUS    RESTARTS   AGE
tiller-deploy-55c564dc54-2lfpt   0/1     Running   0          71m

I should also be able to do something like this to return containers that are not ready:

kubectl get -n kube-system pods -lname=tiller --field-selector=status.containerStatuses[*].ready!=true


kubectl get po --all-namespaces | grep -v Running

This can help list non-running pods.

I wanted to be able to filter pods by ready state (fully ready), and posted a Stack Overflow question here, with some possible answers (they work for me).


Adding my voice to this - agree that it would be awesome to be able to do kubectl get pods --ready or similar. I was wanting to add a step to a pipeline which would wait until all pods were ready (and fail after a timeout otherwise) and have had to rely on grep... which is brittle if the output of kubectl ever changes. Would much rather be querying the status of the pods more directly.
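For the pipeline case, recent kubectl versions include kubectl wait, which blocks until the selected pods report a condition and exits non-zero if the timeout expires first. A minimal wrapper sketch; the function name wait_for_pods_ready and the 120s default timeout are assumptions.

```shell
# Hypothetical wrapper: wait until every pod in namespace $1 has the Ready
# condition, failing (non-zero exit) if that takes longer than $2 (default 120s).
wait_for_pods_ready() {
  namespace=$1
  wait_timeout=${2:-120s}
  kubectl wait --for=condition=Ready pods --all -n "$namespace" --timeout="$wait_timeout"
}
```

In a CI step this could be used as wait_for_pods_ready my-namespace 300s || exit 1. Pods from completed Jobs never become Ready, so in namespaces that contain them a label selector (-l) is a better fit than --all.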

As @luvkrai stated, I have to use grep to find containers in CrashLoopBackOff status. Here's how I'm filtering right now; I sort by nodeName to see whether one node is causing more problems than the others. Somehow this seems like a super hard problem. It's funny that we get all this output in the "STATUS" column consistently but can't filter on that column without additional tools.

oc get pods --all-namespaces -o wide --sort-by=.spec.nodeName | grep -Ev "(Running|Completed)"

If I had more Golang knowledge, I think the code here would make clear how the "STATUS" column is built, which might lead to a better solution.

https://github.com/kubernetes/kubectl/blob/7daf5bcdb45a24640236b361b86c056282ddcf80/pkg/describe/describe.go#L679

@alexburlton-sonocent To avoid some of the brittle aspects of grep you could use the --no-headers --output custom-columns= option to specify which columns you want, but you may run into the same problem that the complete info put out in the STATUS column isn't dependably found in the pod definition.
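A sketch of that custom-columns approach, sorted by node like the oc command above but keyed on per-container readiness instead of the STATUS text. It assumes custom-columns joins the values matched by [*] with commas and prints <none> for missing fields (e.g. pods with no container statuses yet); the function name unready_pods_by_node is hypothetical.

```shell
# Hypothetical helper: list pods that have at least one container not ready,
# or no container statuses yet, sorted by node. Succeeded pods are excluded
# server-side because their finished containers also report ready=false.
unready_pods_by_node() {
  kubectl get pods --all-namespaces --no-headers \
    --field-selector=status.phase!=Succeeded --sort-by=.spec.nodeName \
    -o custom-columns='NODE:.spec.nodeName,NAMESPACE:.metadata.namespace,NAME:.metadata.name,READY:.status.containerStatuses[*].ready' |
    awk '$4 ~ /false/ || $4 == "<none>"'
}
```

Because the columns are chosen explicitly, the filter does not depend on the layout of the default kubectl get output.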

Here's something I use to find all pods that are not fully running (e.g. some containers are failing):

kubectl get po --all-namespaces | gawk 'match($3, /([0-9])+\/([0-9])+/, a) {if (a[1] < a[2] && $4 != "Completed") print $0}'

NAMESPACE   NAME                    READY   STATUS    RESTARTS   AGE
blah        blah-6d46d95b96-7wsh6   2/4     Running   0          33h
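One caveat with the gawk pattern above: in ([0-9])+ the capture group keeps only the last digit it matched, so a pod at 9/10 is read as 9 against 0 and is not reported. A sketch of the same filter in POSIX awk that splits the READY column on / and compares the counts as numbers; the function name not_fully_ready is hypothetical, and it expects kubectl get po --all-namespaces output on stdin.

```shell
# Hypothetical helper: reads `kubectl get po --all-namespaces` output on stdin
# and prints the header plus every pod whose ready count is below its container
# count, skipping pods whose STATUS is Completed.
not_fully_ready() {
  awk '
    NR == 1 && $1 == "NAMESPACE" { print; next }    # keep the header row
    split($3, n, "/") == 2 && n[1] + 0 < n[2] + 0 && $4 != "Completed"
  '
}
```

Usage: kubectl get po --all-namespaces | not_fully_ready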


see here
