There is an unintuitive functionality of the bleu_score module.

>>> bleu_score.sentence_bleu([[1,2,3,4]],[5,6,8,1], weights=(0.5, 0.5))

0.5

>>> bleu_score.sentence_bleu([[1,2,3,4]],[5,6,1,2], weights=(0.5, 0.5))

0.408248290463863

>>> bleu_score.sentence_bleu([[1,2,3,4]],[5,6,2,3], weights=(0, 0.5))

0.5773502691896257

>>> bleu_score.sentence_bleu([[1,2,3,4]],[5,6,7,3], weights=(0, 0.5))

1.0

>>> bleu_score.sentence_bleu([[1,2,3,4]],[5,6,8,7], weights=(0, 0.5))

0

The default SmoothingFunction.method0 skips any fractions that are equal to zero, which makes the BLEU scores in these cases quite unintuitive. In my opinion it would be more intuitive to have log(0) evaluate to -inf, so that the overall BLEU score in these cases evaluates to 0. This, along with a clearer warning, would make the behaviour much easier to understand.

To clarify: as seen below, it gives a higher BLEU score for a worse match, when I believe the worse match should receive a BLEU score of 0.

>>> bleu_score.sentence_bleu([[1,2,3,4]],[5,6,8,1], weights=(0.5, 0.5))

0.5

>>> bleu_score.sentence_bleu([[1,2,3,4]],[5,6,1,2], weights=(0.5, 0.5))

0.408248290463863


Just a clarification before diving in. Do you get a warning when you try these fringe cases?

Yes, I receive the warning.

Good. Let's go through the rationale for why the outputs are as such:

Firstly, note that un-smoothed BLEU was never meant for sentence-level evaluation. It is advisable to find the optimal smoothing method for your data; please look at https://github.com/alvations/nltk/blob/develop/nltk/translate/bleu_score.py#L425

If you want to skip the step of finding the optimal smoothing, let's look at the examples you've listed.

First scenario: when your n-gram order is larger than the overlap that exists between the reference and hypothesis, corpus/sentence BLEU will not work. So the results of this cannot be taken into account:

>>> from nltk.translate.bleu_score import sentence_bleu

>>> sentence_bleu([[1,2,3,4]],[5,6,8,1], weights=(0.5, 0.5))

/usr/local/lib/python2.7/site-packages/nltk/translate/bleu_score.py:490: UserWarning:

Corpus/Sentence contains 0 counts of 2-gram overlaps.

BLEU scores might be undesirable; use SmoothingFunction().

warnings.warn(_msg)

0.5

Next we have an example where 1 bigram and 2 unigrams overlap between the reference and hypothesis. It seems to give a reasonable score.

>>> sentence_bleu([[1,2,3,4]],[5,6,1,2], weights=(0.5, 0.5))

0.408248290463863

Going to the next example using weights=(0, 0.5): this is rather unintuitive, because not assigning any weight to the lower-order n-grams defeats the purpose of BLEU, where we take a geometric mean across the n-gram orders. But let's see:

>>> sentence_bleu([[1,2,3,4]],[5,6,2,3], weights=(0, 0.5))

0.5773502691896257

Here we see that it has the same 1 bigram and 2 unigram matches but a higher score. This is because only the bigrams carry weight and the unigrams have 0 influence: the unigram factor, which is below 1, drops out of the geometric mean, so the resulting BLEU is naturally higher.

>>> import math

>>> from nltk import ngrams

>>> from nltk.translate.bleu_score import modified_precision

>>> ref, hyp = [1,2,3,4], [5,6,2,3]

>>> list(ngrams(ref, 2)) # 3 bigrams.

[(1, 2), (2, 3), (3, 4)]

>>> list(ngrams(ref, 1)) # 4 unigrams.

[(1,), (2,), (3,), (4,)]

The description of more matches = better BLEU score is intuitive but actually this is only part of the BLEU formulation reflected in the modified precision:

>>> modified_precision([ref], hyp, 2)

Fraction(1, 3)

>>> modified_precision([ref], hyp, 1)

Fraction(2, 4)

>>> m1 = modified_precision([ref], hyp, 1)

>>> m2 = modified_precision([ref], hyp, 2)

If we move forward to the geometric logarithmic mean, we see the modified precision getting a little distorted:

>>> import math

>>> math.log(m1)

-0.6931471805599453

>>> math.log(m2)

-1.0986122886681098

>>> math.exp(0.5 * math.log(m1) + 0.5 * math.log(m2))

0.408248290463863

>>> math.exp(0 * math.log(m1) + 0.5 * math.log(m2))

0.5773502691896257

So from the first 3 examples you've given, the first is invalid (thus the warning), the 2nd and 3rd might not be easy to understand but they are mathematically sound:

>>> sentence_bleu([[1,2,3,4]],[5,6,8,1], weights=(0.5, 0.5))

0.5

>>> sentence_bleu([[1,2,3,4]],[5,6,1,2], weights=(0.5, 0.5))

0.408248290463863

>>> sentence_bleu([[1,2,3,4]],[5,6,2,3], weights=(0, 0.5))

0.5773502691896257

Note that I've skipped the brevity penalty step but trust me it's 1.0 since the length of the hypothesis is the same as the reference. Please test out for yourself too =)
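For anyone who wants to check the brevity penalty by hand, here is a minimal sketch of the standard BLEU definition: 1 when the hypothesis is longer than the reference, exp(1 - r/c) otherwise. The name `brevity_penalty_sketch` is made up for illustration and is not the NLTK function.

```python
import math

def brevity_penalty_sketch(ref_len, hyp_len):
    """Standard BLEU brevity penalty for a single reference length (illustrative helper)."""
    if hyp_len == 0:
        return 0.0  # empty hypothesis: nothing to score
    if hyp_len > ref_len:
        return 1.0  # longer hypotheses are not penalised
    return math.exp(1 - ref_len / hyp_len)

# Reference and hypothesis both have 4 tokens in the examples of this thread.
print(brevity_penalty_sketch(4, 4))
# A hypothesis half as long as the reference is penalised.
print(brevity_penalty_sketch(4, 2))
```

With equal lengths the exponent is 0, so the penalty is exactly 1.0 and can be dropped from the hand calculations above.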

Moving on, to the 4th example, we use the same modified precision, then compute the geometric logarithmic mean step:

>>> sentence_bleu([[1,2,3,4]],[5,6,7,3], weights=(0, 0.5))

/Users/liling.tan/git-stuff/nltk-alvas/nltk/translate/bleu_score.py:491: UserWarning:

Corpus/Sentence contains 0 counts of 2-gram overlaps.

BLEU scores might be undesirable; use SmoothingFunction().

warnings.warn(_msg)

1.0

>>> ref, hyp = [1,2,3,4], [5,6,7,3]

>>> modified_precision([ref], hyp, 1)

Fraction(1, 4)

>>> modified_precision([ref], hyp, 2)

Fraction(0, 3)

>>> m1 = modified_precision([ref], hyp, 1)

>>> m2 = modified_precision([ref], hyp, 2)

>>> math.exp(0 * math.log(m1) + 0.5 * math.log(m2))

Traceback (most recent call last):

File "<stdin>", line 1, in <module>

ValueError: math domain error

So bigram BLEU is not valid here either without a proper smoothing function.

Perhaps the warning didn't show up when you used BLEU because the warning filter is set to default. I think it's a good idea to force this warning, but it does irritate users when it appears too often on a fringe dataset.

The last example is valid, since it returns the expected 0 for 0 matching unigrams. In summary:
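To illustrate the point about the warning filter, here is a small standalone sketch using only the standard `warnings` module. The `noisy` function and its message are placeholders, not NLTK code. With the "default" action, Python reports a given warning once per source location; with "always", every occurrence is reported.

```python
import warnings

def noisy():
    # Placeholder for a library call that warns from the same line each time.
    warnings.warn("0 counts of 2-gram overlaps", UserWarning)

def count_warnings(action):
    """Call noisy() twice under the given filter action and count what is reported."""
    with warnings.catch_warnings(record=True) as caught:
        warnings.simplefilter(action)
        noisy()
        noisy()
    return len(caught)

print("default:", count_warnings("default"))
print("always: ", count_warnings("always"))
```

Under these assumptions the "default" run should report one warning and the "always" run two, which is why a warning can seem to disappear after its first appearance in a session.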

>>> import warnings

>>> warnings.simplefilter("always")

>>> from nltk.translate.bleu_score import sentence_bleu

# Invalid use of BLEU.

>>> sentence_bleu([[1,2,3,4]],[5,6,8,1], weights=(0.5, 0.5))

/Users/liling.tan/git-stuff/nltk-alvas/nltk/translate/bleu_score.py:491: UserWarning:

Corpus/Sentence contains 0 counts of 2-gram overlaps.

BLEU scores might be undesirable; use SmoothingFunction().

warnings.warn(_msg)

0.5

# Valid BLEU score.

>>> sentence_bleu([[1,2,3,4]],[5,6,1,2], weights=(0.5, 0.5))

0.408248290463863

# Logical BLEU score.

>>> sentence_bleu([[1,2,3,4]],[5,6,2,3], weights=(0, 0.5))

0.5773502691896257

# Invalid use of BLEU.

>>> sentence_bleu([[1,2,3,4]],[5,6,7,3], weights=(0, 0.5))

/Users/liling.tan/git-stuff/nltk-alvas/nltk/translate/bleu_score.py:491: UserWarning:

Corpus/Sentence contains 0 counts of 2-gram overlaps.

BLEU scores might be undesirable; use SmoothingFunction().

warnings.warn(_msg)

1.0

# Valid BLEU score.

>>> sentence_bleu([[1,2,3,4]],[5,6,8,7], weights=(0, 0.5))

0

Now going back to the last 2 examples. Actually, the first instance is an invalid use of BLEU; you should see this:

>>> from nltk.translate.bleu_score import sentence_bleu

>>> sentence_bleu([[1,2,3,4]],[5,6,8,1], weights=(0.5, 0.5))

/Users/liling.tan/git-stuff/nltk-alvas/nltk/translate/bleu_score.py:491: UserWarning:

Corpus/Sentence contains 0 counts of 2-gram overlaps.

BLEU scores might be undesirable; use SmoothingFunction().

warnings.warn(_msg)

0.5

>>> sentence_bleu([[1,2,3,4]],[5,6,1,2], weights=(0.5, 0.5))

0.408248290463863

Whenever the warning shows up at the nth n-gram order (in this case, bigrams), it's usually a misuse of BLEU, and it will only take into account the weights of the (n-1)th order (in this case, the unigrams).

So if we look at the first example with only a weight for the unigrams:
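The back-off described above can be emulated in a few lines. This is only a sketch of the behaviour as described in this thread (zero precisions are dropped before the geometric mean), not the actual NLTK code; `backoff_geometric_mean` is a hypothetical helper and the precisions are typed in by hand. It also does not model the early return of 0 when there are no unigram matches.

```python
import math

def backoff_geometric_mean(precisions, weights):
    """Weighted geometric mean that silently skips zero precisions (assumed back-off)."""
    kept = [(w, p) for w, p in zip(weights, precisions) if p != 0]
    return math.exp(sum(w * math.log(p) for w, p in kept))

# hyp [5,6,8,1]: unigram precision 1/4, bigram precision 0/3.
print(backoff_geometric_mean([1 / 4, 0.0], (0.5, 0.5)))
# hyp [5,6,7,3] with weights (0, 0.5): the only non-zero precision has weight 0.
print(backoff_geometric_mean([1 / 4, 0.0], (0, 0.5)))
# hyp [5,6,1,2]: unigram precision 2/4, bigram precision 1/3, nothing is skipped.
print(backoff_geometric_mean([2 / 4, 1 / 3], (0.5, 0.5)))
```

Because the zero bigram term vanishes instead of pulling the score down, the worse hypothesis ends up with sqrt(1/4) = 0.5, above the 0.408 of the better one.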

>>> sentence_bleu([[1,2,3,4]],[5,6,8,1], weights=(0,))

1.0

The geometric logarithmic mean is rather mind-warping, but the key is that the weights over the n-gram orders must sum to 1.0 to fit the original BLEU idea. E.g.

>>> sentence_bleu([[1,2,3,4]],[5,6,8,1], weights=(1,))

0.25

In general, whenever the warning pops up, it's an un-BLEU situation where BLEU will fail, and there's no active research to patch this particular pain, other than researchers creating new "better" scores that have other Achilles' heels.

In case the warning pops up and the user needs a quick fix, the emulate_multibleu option exists, but the weights must be set to the default uniform weights for all 4-grams, e.g.

>>> sentence_bleu([[1,2,3,4]],[5,6,8,1], emulate_multibleu=True)

0.0

And if we use multi-bleu.perl:

$ cat ref

1 2 3 4

$ cat hyp

5 6 8 1

$ perl ~/mosesdecoder/scripts/generic/multi-bleu.perl ref < hyp

BLEU = 0.00, 25.0/0.0/0.0/0.0 (BP=1.000, ratio=1.000, hyp_len=4, ref_len=4)

So the question then becomes:

Do we need a feature to automatically readjust the weights if the weights users give are "un-BLEU-like"? Currently, the auto_reweigh function works only with the default weights = (0.25, 0.25, 0.25, 0.25).

- I'm against this idea since (i) users using custom weights should better understand the BLEU mechanism and tune the weights appropriately if necessary and (ii) if users don't want the hassle, they should use the default weights and/or the emulate_multibleu option.

Should the warning filter be set to always whenever a user uses BLEU?

If there's any active research and proven solution(s) to these sentence BLEU fringe cases, we'll be open to re-implementation of these solutions.

But if it's hot-fixes that result in more whack-a-mole situations with BLEU, then I think the current BLEU version is stable enough with the warnings in place =)

@benleetownsend I hope the explanation makes sense and shows that the current BLEU implementation with the various options is sufficient to overcome the examples you've given.

My suggestion is to (i) avoid the weights hacking unless you use some information theoretic approaches to estimate them, (ii) use the emulate_multibleu=True option with the default (0.25, 0.25, 0.25, 0.25) weights or (iii) experiment with the various smoothing methods to get the appropriate modified precision but still avoid the weights hacking.

Thank you for your detailed response. I agree with everything you've said; however, I guess my underlying point is that the results when you back off to (n-1)-grams just seem wrong based on the literature I have seen.

>>> sentence_bleu([[1,2,3,4]],[5,6,8,1], weights=(0.5, 0.5))

/Users/liling.tan/git-stuff/nltk-alvas/nltk/translate/bleu_score.py:491: UserWarning:

Corpus/Sentence contains 0 counts of 2-gram overlaps.

BLEU scores might be undesirable; use SmoothingFunction().

warnings.warn(_msg)

0.5

In the above case I would say that following the BLEU equation and returning 0 is "undesirable", but unless I've missed something in the literature, the result of 0.5 is wrong.

I would suggest either a clearer warning that states that the results are wrong (instead of undesirable) or setting the default "smoothing" mechanism to return sys.float_info.min therefore resulting in a bleu score of 0 in the "invalid use of BLEU" cases.

If you disagree feel free to close this issue. I have resolved this in my project with a custom smoothing function containing the above functionality.

Thanks again for your time.

Short questions.

Which dataset are you working on? And how often does this happen?

Also, smoothing is the way to go.

Going back to why adding sys.float_info.min is not right in the non-smoothing version of BLEU.

When we add sys.float_info.min to the precision for a specific n-gram order in unsmoothed BLEU, we have technically provided a Laplace-like smoothed precision score. That's akin to method1 in https://github.com/nltk/nltk/blob/develop/nltk/translate/bleu_score.py#L496

You could do this if you want the functionality of a smoothed BLEU:

>>> from nltk.translate.bleu_score import sentence_bleu

>>> from nltk.translate.bleu_score import SmoothingFunction

>>> import sys

>>> smoother = SmoothingFunction()

# Laplacian smoothing.

>>> sentence_bleu([[1,2,3,4]],[5,6,8,1], smoothing_function=smoother.method1)

0.08034284189446518

# Laplacian smoothing, with altered weights.

>>> sentence_bleu([[1,2,3,4]],[5,6,8,1], weights=(0.5, 0.5), smoothing_function=smoother.method1)

0.09128709291752769

# Canonical multi-bleu smoothing, aka method4().

>>> sentence_bleu([[1,2,3,4]],[5,6,8,1], smoothing_function=smoother.method4)

0.19564209772076444
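The method1 numbers above can be reproduced by hand with a simple add-epsilon rule: when an n-gram order has zero matches, epsilon is added to the numerator. The sketch below assumes epsilon = 0.1, which is the value that reproduces the scores in this thread; `add_epsilon_bleu` is a hypothetical helper, the (matches, total) pairs are typed in by hand, and the brevity penalty is taken as 1.0.

```python
import math

def add_epsilon_bleu(counts, weights, epsilon=0.1):
    """Geometric mean of precisions, adding epsilon to zero numerators (assumed method1-like)."""
    smoothed = [
        (matches + epsilon) / total if matches == 0 else matches / total
        for matches, total in counts
    ]
    return math.exp(sum(w * math.log(p) for w, p in zip(weights, smoothed)))

# (matches, total) per n-gram order for ref [1,2,3,4] and hyp [5,6,8,1].
counts = [(1, 4), (0, 3), (0, 2), (0, 1)]

print(add_epsilon_bleu(counts[:2], (0.5, 0.5)))
print(add_epsilon_bleu(counts, (0.25, 0.25, 0.25, 0.25)))
```

The two printed values should agree with the 0.0912... and 0.0803... scores shown for method1, up to floating-point rounding.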

But we cannot add that to SmoothingFunction.method0, since method0() is the default non-smoothed BLEU.

Returning sys.float_info.min instead of the backoff is not right either.

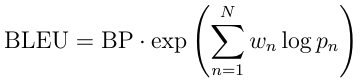

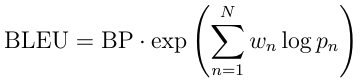

The BLEU formula is $\mathrm{BLEU} = \mathrm{BP} \cdot \exp\left(\sum_{n=1}^{N} w_n \log p_n\right)$.

One reason why hacking the weights without empirical grounding is a bad idea is that the weights in BLEU are subject to exp().

So if we walk through the BLEU calculation with the usual 0.25 uniform weights, the BLEU score is extremely small, close to 0.

>>> p_n = [0.25, sys.float_info.min, sys.float_info.min, sys.float_info.min]

>>> weights = (0.25, 0.25, 0.25, 0.25)

>>> math.exp(sum(w*math.log(p_i) for w, p_i in zip(weights, p_n)))

1.288229753919562e-231

Using weights=(0.5, 0.5), it goes to 0 too.

>>> p_n = [0.25, sys.float_info.min]

>>> weights = (0.5, 0.5)

>>> math.exp(sum(w*math.log(p_i) for w, p_i in zip(weights, p_n)))

7.458340731200295e-155

Maybe it's not evident, but returning sys.float_info.min as the backoff will ALWAYS end up with a super small BLEU score.

If we imagine the usage on a relatively good or almost perfect translation, here is a counterexample:
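A quick way to see why the score is always tiny: even if every other n-gram order had a perfect precision of 1.0, a single sys.float_info.min term caps the geometric mean at float_min ** w. The sketch below is illustrative only; `geometric_mean` is a hypothetical helper and the precisions are made-up best-case values.

```python
import math
import sys

def geometric_mean(precisions, weights):
    """Plain weighted geometric mean in log space, as in the walk-through above."""
    return math.exp(sum(w * math.log(p) for w, p in zip(weights, precisions)))

# Best case: three perfect precisions, one replaced by sys.float_info.min.
best_case_4gram = geometric_mean([1.0, 1.0, 1.0, sys.float_info.min], (0.25,) * 4)
best_case_bigram = geometric_mean([1.0, sys.float_info.min], (0.5, 0.5))

print(best_case_4gram)
print(best_case_bigram)
```

Both values are upper bounds for their weight settings, and both are far below anything that could be told apart from 0 in practice.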

>>> m1 = modified_precision([[1,2,3,4]], [2,3,4,5], 1)

>>> m2 = modified_precision([[1,2,3,4]], [2,3,4,5], 2)

>>> m3 = modified_precision([[1,2,3,4]], [2,3,4,5], 3)

>>> m4 = modified_precision([[1,2,3,4]], [2,3,4,5], 4)

>>> [m1, m2, m3, m4]

[Fraction(3, 4), Fraction(2, 3), Fraction(1, 2), Fraction(0, 1)]

>>> [m1, m2, m3, sys.float_info.min]

[Fraction(3, 4), Fraction(2, 3), Fraction(1, 2), 2.2250738585072014e-308]

>>> p_n = [m1, m2, m3, sys.float_info.min]

>>> weights = (0.25, 0.25, 0.25, 0.25)

>>> math.exp(sum(w*math.log(p_i) for w, p_i in zip(weights, p_n)))

8.636168555094496e-78

The main reason I asked about the dataset and how often the problem occurs is that you might not be anticipating the wave of other problems like the one above.

Once we try to return sys.float_info.min, it creates a whole wave of problems outside your dataset that you have not considered.

Alternatively, if we return the backoff n-gram:

>>> p_n = [m1, m2, m3]

>>> weights = (0.25, 0.25, 0.25)

>>> math.exp(sum(w*math.log(p_i) for w, p_i in zip(weights, p_n)))

0.7071067811865475

In any case, when using sentence BLEU on really loose translations or short sentences, always smooth:

# Laplacian smoothing, with altered weights.

>>> sentence_bleu([[1,2,3,4]],[5,6,8,1], weights=(0.5, 0.5), smoothing_function=smoother.method1)

0.09128709291752769

# Canonical multi-bleu smoothing, aka method4().

>>> sentence_bleu([[1,2,3,4]],[5,6,8,1], smoothing_function=smoother.method4)

0.19564209772076444

@alvations Thanks for all the great code in NLTK. We've been using it to some extent when experimenting with dialogue-response modelling systems (or chat-bots) at True AI.

I definitely see the point of warning the user about not using a smoothing function when any of the n-grams evaluates to 0. After having read up on the smoothing functions, we have now started using smoothing function 1 as default.

However, I was still very surprised by the results I got with the smoothing function deactivated in the cases where it didn't find any n-grams of a particular order. I got results that I did not expect looking at the equations in Chen et al. (which is one of the papers you mention in the documentation).

The main equation used to calculate the BLEU score (without smoothing), which you posted above (eq. 2 in Chen et al.) and which you use in the code, is:

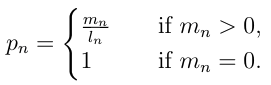

where p_m is defined as (eq 3 in Chen et. al):

However, you're calculating p_m as:

In conclusion you are using a different equation than what you advertise. You mention in the documentation that smoothing function 0 means that no smoothing is used, and that you use the base equations to calculate BLEU in that case. This is simply not true. You are not using the base equation, you are using a modified version to calculate p_m compared to what is mentioned in the paper.

Ah ha, that's a gotcha between the original BLEU vs the Eqn (2) from Chen and Cherry (2014).

Note that in the original BLEU (2002), p_n needs to move from the decimal space to the logarithmic space, which leads to the math domain error when p_n = 0:

So in that case, the "hacks" were put in to overcome all the fringe cases. multi-bleu.perl had another strategy to overcome this.

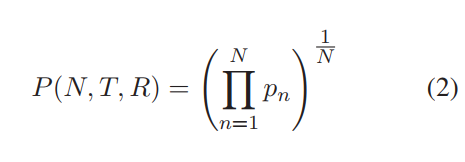

But in the Chen and Cherry formulation, it's a product of the decimals raised to the inverse power of N, i.e.

This would avoid the math domain errors without the log and exp space. But maybe it will also lead to another wave of errors, most probably coming from underflow issues, or maybe not. As long as the unit tests for BLEU check out, I think an implementation of this would be an encouraged PR too ;P
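As a rough sketch of what the product form could look like, each precision is raised to its weight and the results are multiplied, so no logarithm is ever taken. `product_form` is a hypothetical helper, not something that exists in NLTK, and the precisions are typed in by hand from the examples in this thread.

```python
import math

def product_form(precisions, weights):
    """Weighted geometric mean as a plain product: prod(p_n ** w_n)."""
    return math.prod(p ** w for p, w in zip(precisions, weights))

# hyp [5,6,1,2]: same value as the log/exp formulation.
print(product_form([2 / 4, 1 / 3], (0.5, 0.5)))
# hyp [5,6,8,1]: a zero precision with a positive weight gives 0.0, with no domain error.
print(product_form([1 / 4, 0.0], (0.5, 0.5)))
```

One design detail to be aware of: Python evaluates 0.0 ** 0 as 1.0, so a zero precision with a zero weight is ignored rather than zeroing the score.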

I guess we went with the original log+exp formulation because we decided to follow the multi-bleu.perl and mteval-v13a.pl implementations more closely. Those focus on requiring users to smooth when the highest matching n-gram order is smaller than 4, which usually doesn't happen in machine translation.

The decision to back off p_n was purely an implementation choice to avoid the math domain error. But if there are other suggestions for how to overcome the math domain error from math.log(0) while keeping to the first formulation with log+exp, we're open to pull requests / suggestions =)

Or would anyone vote for the NLTK implementation to use the Chen and Cherry (2014) formulation instead?

But I see now why you would have more problems when using BLEU in dialog systems where the sentences/segments might be really short.

Actually, after the implementation of the SmoothingFunction, I had always wanted the default to be smoothed BLEU. But we would have to decide which smoothing method to use as the default, so we went with the non-smoothed BLEU with strange backoffs and warnings.

BTW, for my personal use, I would always set the default smoothing to SmoothingFunction().method4.

The two equations are actually identical from a mathematical point of view (apart from w_n). The difference is that one has to be careful about what to do when evaluating log(0). Here log(0) should be evaluated to -inf (or something similar if -inf isn't available in the library used to handle math). Evaluating it to 0 breaks the math as pointed out above. Evaluating it to 0 means that a particular sentence will get a better BLEU score if no n-grams were found of a particular order than if just one n-gram was found, which is counterintuitive.

Evaluating log(0) is never straightforward, and it needs to be handled on a case-by-case basis. math solves this by throwing a ValueError and trusting the user to handle it appropriately. numpy on the other hand returns -inf by default together with a RuntimeWarning. There are arguments both for and against using -inf as the result.

However, in this particular application the correct thing would definitely be to use -inf.
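A minimal sketch of that idea in plain Python, assuming a small wrapper around math.log; `safe_log` and `strict_geometric_mean` are hypothetical names, not part of NLTK. Terms with zero weight are skipped explicitly, because 0 * -inf evaluates to nan in floating point.

```python
import math

def safe_log(x):
    """math.log that returns -inf for 0 instead of raising ValueError."""
    return math.log(x) if x > 0 else float("-inf")

def strict_geometric_mean(precisions, weights):
    """Log-space geometric mean where a zero precision drives the score to 0."""
    total = sum(w * safe_log(p) for w, p in zip(weights, precisions) if w != 0)
    return math.exp(total)  # math.exp(-inf) is 0.0

# hyp [5,6,8,1]: zero bigram precision now yields 0.0 instead of 0.5.
print(strict_geometric_mean([1 / 4, 0.0], (0.5, 0.5)))
# hyp [5,6,1,2]: unchanged when no precision is zero.
print(strict_geometric_mean([2 / 4, 1 / 3], (0.5, 0.5)))
```

With this treatment, the hypothesis with no bigram match can no longer outscore the one with a bigram match.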

Briefly looking at the code the correct way of fixing this would be to change what follows this else-statement to:

p_n_new.append(sys.float_info.min)

or

return [sys.float_info.min]

The warning could be preserved if you'd like.

It could alternatively be merged with the elif _emulate_multibleu and i < 5: statement and just have one else at this stage.

@bamattsson Precisely, they are the same implementation and if the rework of using sys.float_info.min is to be used, the geometric mean computation should also change.

From the example in my previous comment, appending sys.float_info.min may be a bad idea depending on the corpus.

>>> m1 = modified_precision([[1,2,3,4]], [2,3,4,5], 1)

>>> m2 = modified_precision([[1,2,3,4]], [2,3,4,5], 2)

>>> m3 = modified_precision([[1,2,3,4]], [2,3,4,5], 3)

>>> m4 = modified_precision([[1,2,3,4]], [2,3,4,5], 4)

>>> [m1, m2, m3, m4]

[Fraction(3, 4), Fraction(2, 3), Fraction(1, 2), Fraction(0, 1)]

>>> [m1, m2, m3, sys.float_info.min]

[Fraction(3, 4), Fraction(2, 3), Fraction(1, 2), 2.2250738585072014e-308]

>>> p_n = [m1, m2, m3, sys.float_info.min]

>>> weights = (0.25, 0.25, 0.25, 0.25)

>>> math.exp(sum(w*math.log(p_i) for w, p_i in zip(weights, p_n)))

8.636168555094496e-78

Which led to the failing unittest on https://nltk.ci.cloudbees.com/job/pull_request_tests/430/TOXENV=py35-jenkins,jdk=jdk8latestOnlineInstall/testReport/junit/nltk.translate/bleu_score/sentence_bleu/ and thus the failing checks on #1844.

The fix is VERY dependent on what kind of dataset one is working on. This should NEVER be a problem in corpus BLEU, because the assumption at corpus level has always been that there is at least one 4-gram match between the hypotheses and their references.

So my suggestion is to rework your hotfix as a flag / argument / option that one would pass to sentence BLEU. And if you would like, make the flag the default and still go back to the n-gram backoff technique when it is turned off. There were many considerations taken to make the n-gram backoff choice. Perhaps reading #1330 might help too.

Actually, looking at the PR carefully: is it right that the test cases using the hotfix you're proposing give the same output as emulate_multibleu=True?
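To make the corpus-level point concrete, here is a small sketch of how pooling works: the clipped match counts and the n-gram totals are summed over all segments before dividing, so one segment with zero matches does not zero out the precision. All helper names are made up for illustration, and the sketch handles a single reference per hypothesis only.

```python
from collections import Counter

def ngram_counts(tokens, n):
    """Count the n-grams of a token list."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))

def clipped_matches(reference, hypothesis, n):
    """Return (clipped matches, total hypothesis n-grams) for one segment."""
    ref_counts = ngram_counts(reference, n)
    hyp_counts = ngram_counts(hypothesis, n)
    matched = sum(min(count, ref_counts[gram]) for gram, count in hyp_counts.items())
    return matched, sum(hyp_counts.values())

def pooled_precision(pairs, n):
    """Sum numerators and denominators over all (reference, hypothesis) pairs."""
    numerator = denominator = 0
    for reference, hypothesis in pairs:
        matched, total = clipped_matches(reference, hypothesis, n)
        numerator += matched
        denominator += total
    return numerator, denominator

pairs = [([1, 2, 3, 4], [5, 6, 8, 1]), ([1, 2, 3, 4], [5, 6, 1, 2])]
print(clipped_matches(*pairs[0], 2))  # first segment alone has no bigram match
print(pooled_precision(pairs, 2))     # pooled over both segments
```

The first segment alone has a bigram precision of 0/3, but pooled with the second it becomes 1/6, so the logarithm is defined.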

Resolved in #1844

Thanks @alvations, @bamattsson, @benleetownsend
