Numpy: polyfit and eig regression tests fail after Windows 10 update to 2004

Tests are failing:

FAILED ....\lib\tests\test_regression.py::TestRegression::test_polyfit_build - numpy.linalg.LinAlgError: SVD did not...

FAILED ....\linalg\tests\test_regression.py::TestRegression::test_eig_build - numpy.linalg.LinAlgError: Eigenvalues ...

FAILED ....\ma\tests\test_extras.py::TestPolynomial::test_polyfit - numpy.linalg.LinAlgError: SVD did not converge i...

with exceptions:

err = 'invalid value', flag = 8

def _raise_linalgerror_lstsq(err, flag):

> raise LinAlgError("SVD did not converge in Linear Least Squares")

E numpy.linalg.LinAlgError: SVD did not converge in Linear Least Squares

err = 'invalid value'

flag = 8

and

err = 'invalid value', flag = 8

def _raise_linalgerror_eigenvalues_nonconvergence(err, flag):

> raise LinAlgError("Eigenvalues did not converge")

E numpy.linalg.LinAlgError: Eigenvalues did not converge

err = 'invalid value'

flag = 8

Steps taken:

- Create a VM

- Install latest Windows 10 and update to the latest version 2004 (10.0.19041)

- Install Python 3.8.3

- pip install pytest

- pip install numpy

- pip install hypothesis

- Run tests in the package

The same issue happens when running the tests in the repository.

Version 1.19.0 of numpy
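Because the failures depend on the exact Windows build, it helps to record it alongside the Python and NumPy versions. The following is a minimal sketch, assuming the build number is the third field of the version string (as in 10.0.19041). The helper names are hypothetical, and the build table only lists the releases mentioned in this thread.

```python
import platform

# Build numbers for the Windows 10 releases discussed in this thread.
KNOWN_BUILDS = {17763: "1809", 18363: "1909", 19041: "2004", 19042: "20H2"}


def windows_build(version_string):
    """Return the build number from a string like '10.0.19041', else None."""
    parts = version_string.split(".")
    if len(parts) >= 3 and parts[2].isdigit():
        return int(parts[2])
    return None


def describe_environment():
    """Collect the details worth pasting into a bug report."""
    info = {
        "system": platform.system(),
        "version": platform.version(),
        "machine": platform.machine(),
        "python": platform.python_version(),
    }
    build = windows_build(info["version"]) if info["system"] == "Windows" else None
    info["windows_release"] = KNOWN_BUILDS.get(build, "unknown")
    try:
        import numpy  # optional: only reported when installed
        info["numpy"] = numpy.__version__
    except ImportError:
        info["numpy"] = "not installed"
    return info


if __name__ == "__main__":
    for key, value in describe_environment().items():
        print(f"{key}: {value}")
```

On non-Windows systems the release is reported as "unknown", since the version string there has a different meaning.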

Am I missing any dependencies? Or is it just Windows going bonkers?


Edit: You are obviously using pip. I have also had a strange result on Windows AMD64 in the recent past with linear algebra libraries and eigenvalue decompositions (in the context of running tests for statsmodels).

If you have time, try using 32 bit Python and pip and see if you get the same issues? I couldn't see them on 32-bit windows but they were repeatable on 64-bit windows.

If I use conda, which ships MKL, I don't have the errors.

Edit: I also see them when using NumPy 1.18.5 on Windows AMD64.

tests fail after latest Windows 10 update

Were the tests failing before the update?

No @charris , before the update the test suite just passes.

@speixoto Do you know which update it was specifically? I'd be interested to see if it solves my issue with pip-installed wheels.

Update 1809 (10.0.17763) was not causing any failed test @bashtage

1909 wasn't causing anything as well. It only started happening with 2004.

I'm not 100% convinced it is 2004 or something after. I think 2004 worked.

FWIW I can't reproduce these crashes on CI (Azure or appveyor), but I do see them locally on 2 machines that are both AMD64 and updated to 2004.

Have either of you tried to see if you get them on 32-bit Python?

Seems there have been a number of problems reported against the 2004 update. Maybe this should be reported also?

I just ran the following on fresh install of 1909 and 2004:

pip install numpy scipy pandas pytest cython

git clone https://github.com/statsmodels/statsmodels.git

cd statsmodels

pip install -e . --no-build-isolation

pytest statsmodels

On 1909 no failures. On 2004 30 failures all related to linear algebra functions.

When I run tests on 2004 in a debugger, I notice that the first call to a function often returns an incorrect result, but calling again produces the correct result (which remains correct if repeatedly called). Not sure if this is useful information for guessing a cause.

Do earlier versions of NumPy also have problems? I assume you are running 1.19.0.

Using pip + 1.18.4, and scipy 1.4.1, I get the same set of errors.

These are really common:

ERROR statsmodels/graphics/tests/test_gofplots.py::TestProbPlotLongely::test_ppplot - numpy.linalg.LinAlgError: SVD did not converge

ERROR statsmodels/graphics/tests/test_gofplots.py::TestProbPlotLongely::test_qqplot_other_array - numpy.linalg.LinAlgError: SVD did not converge

ERROR statsmodels/graphics/tests/test_gofplots.py::TestProbPlotLongely::test_probplot - numpy.linalg.LinAlgError: SVD did not converge

ERROR statsmodels/graphics/tests/test_regressionplots.py::TestPlot::test_plot_leverage_resid2 - numpy.linalg.LinAlgError: SVD did not converge

ERROR statsmodels/regression/tests/test_regression.py::TestOLS::test_params - numpy.linalg.LinAlgError: SVD did not converge

ERROR statsmodels/regression/tests/test_regression.py::TestOLS::test_scale - numpy.linalg.LinAlgError: SVD did not converge

ERROR statsmodels/regression/tests/test_regression.py::TestOLS::test_ess - numpy.linalg.LinAlgError: SVD did not converge

ERROR statsmodels/regression/tests/test_regression.py::TestOLS::test_mse_total - numpy.linalg.LinAlgError: SVD did not converge

ERROR statsmodels/regression/tests/test_regression.py::TestOLS::test_bic - numpy.linalg.LinAlgError: SVD did not converge

ERROR statsmodels/regression/tests/test_regression.py::TestOLS::test_norm_resids - numpy.linalg.LinAlgError: SVD did not converge

ERROR statsmodels/regression/tests/test_regression.py::TestOLS::test_HC2_errors - numpy.linalg.LinAlgError: SVD did not converge

ERROR statsmodels/regression/tests/test_regression.py::TestOLS::test_missing - numpy.linalg.LinAlgError: SVD did not converge

ERROR statsmodels/regression/tests/test_regression.py::TestOLS::test_norm_resid_zero_variance - numpy.linalg.LinAlgError: SVD did not converge

ERROR statsmodels/tsa/tests/test_stattools.py::TestADFConstant::test_teststat - numpy.linalg.LinAlgError: SVD did not converge

ERROR statsmodels/tsa/tests/test_stattools.py::TestADFConstant::test_pvalue - numpy.linalg.LinAlgError: SVD did not converge

ERROR statsmodels/tsa/tests/test_stattools.py::TestADFConstant::test_critvalues - numpy.linalg.LinAlgError: SVD did not converge

When I run using 1.18.5 + MKL I get no errors. It is hard to say whether this is an OpenBLAS bug or a Windows bug. Probably the latter, but it will be really hard to get to the bottom of, and diagnosing it is beyond my capabilities for low-level debugging.

On the same physical machine, when I run in Ubuntu using pip packages I don't see any errors.

This is the strangest behavior. Fails on first call, works on second and subsequent. One other hard to understand behavior is that if I run the failing test in isolation then I don't see the error.

One final update: if I test using a source build of NumPy without optimized BLAS I still see errors although they are not an identical set.

Maybe worth pinging the OpenBLAS devs. Does it happen with float32 as often as float64?

When I run a full test of NumPy 1.19.0, python -c "import numpy;numpy.test('full')" I see the same errors as above:

FAILED Python/Python38/Lib/site-packages/numpy/lib/tests/test_regression.py::TestRegression::test_polyfit_build - numpy.linalg.LinAlgError: SVD did not conv...

FAILED Python/Python38/Lib/site-packages/numpy/linalg/tests/test_regression.py::TestRegression::test_eig_build - numpy.linalg.LinAlgError: Eigenvalues did n...

FAILED Python/Python38/Lib/site-packages/numpy/ma/tests/test_extras.py::TestPolynomial::test_polyfit - numpy.linalg.LinAlgError: SVD did not converge in Lin...

If I remember correctly from poking around, the test passes if you run it on its own, which makes the behavior even stranger.

I have filed with Microsoft the only way I know how:

Posting in case others find this through search:

Windows users should use Conda/MKL if on 2004 until this is resolved

Here is a small reproducing example:

import numpy as np

a = np.arange(13 * 13, dtype=np.float64)

a.shape = (13, 13)

a = a % 17

va, ve = np.linalg.eig(a)

Produces

** On entry to DGEBAL parameter number 3 had an illegal value

** On entry to DGEHRD parameter number 2 had an illegal value

** On entry to DORGHR DORGQR parameter number 2 had an illegal value

** On entry to DHSEQR parameter number 4 had an illegal value

---------------------------------------------------------------------------

LinAlgError Traceback (most recent call last)

<ipython-input-11-bad305f0dfc7> in <module>

3 a.shape = (13, 13)

4 a = a % 17

----> 5 va, ve = np.linalg.eig(a)

<__array_function__ internals> in eig(*args, **kwargs)

c:\anaconda\envs\py-pip\lib\site-packages\numpy\linalg\linalg.py in eig(a)

1322 _raise_linalgerror_eigenvalues_nonconvergence)

1323 signature = 'D->DD' if isComplexType(t) else 'd->DD'

-> 1324 w, vt = _umath_linalg.eig(a, signature=signature, extobj=extobj)

1325

1326 if not isComplexType(t) and all(w.imag == 0.0):

c:\anaconda\envs\py-pip\lib\site-packages\numpy\linalg\linalg.py in _raise_linalgerror_eigenvalues_nonconvergence(err, flag)

92

93 def _raise_linalgerror_eigenvalues_nonconvergence(err, flag):

---> 94 raise LinAlgError("Eigenvalues did not converge")

95

96 def _raise_linalgerror_svd_nonconvergence(err, flag):

LinAlgError: Eigenvalues did not converge

Does LAPACK count from 0 or 1? All of the illegal values appear to be integers:

DGEBAL

DGEHRD

DORGHR

DHSEQR

It seems more and more like an OpenBLAS issue (or something between 2004 and OpenBLAS). Here is my summary:

NumPy only (python -c "import numpy;numpy.test('full')"):

- No optimized BLAS: Pass full

- OpenBLAS: Fail full

- MKL: Pass full

statsmodels testing (pytest statsmodels):

- Pip NumPy and SciPy: Fail related to SVD and QR related code

- MKL NumPy and SciPy: Pass

- No optimized BLAS: Fail, but fewer, and all of them involve scipy.linalg routines, which use OpenBLAS.

- No optimized BLAS, no SciPy linalg: Pass
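To place a given install in the summary above, one needs to know which BLAS NumPy was linked against. A rough heuristic sketch: capture what numpy.show_config() prints and look for "mkl" or "openblas" on the lines that name libraries. The function names are hypothetical, and the output format of show_config() differs between NumPy versions, so treat the result as a hint rather than a definitive answer.

```python
import contextlib
import io


def classify_blas(config_text):
    """Guess the BLAS backend from text printed by numpy.show_config().

    Only lines that name libraries are inspected, because sections for
    backends that were NOT found still mention their name in the header.
    """
    for line in config_text.lower().splitlines():
        stripped = line.strip()
        if stripped.startswith("libraries") or stripped.startswith("name:"):
            if "mkl" in stripped:
                return "MKL"
            if "openblas" in stripped:
                return "OpenBLAS"
    return "unknown"


def numpy_blas_backend():
    """Capture numpy.show_config() output and classify it."""
    import numpy  # imported lazily so classify_blas works without NumPy

    buffer = io.StringIO()
    with contextlib.redirect_stdout(buffer):
        numpy.show_config()
    return classify_blas(buffer.getvalue())
```

Calling numpy_blas_backend() in the environment under test and noting the result next to the pass/fail outcome keeps reports comparable.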

It would be nice to learn what changed in 2004. Maybe we need a different flag when compiling/linking the library?

_If_ it is an OpenBLAS bug, it is unlikely they will have seen it since none of the Windows-based CI are using build 19041 (aka Windows 10 2004) or later.

Just to be clear, is it true that none of these reports involve WSL?

No. All with either conda-provided python.exe or python.org provided python.exe

Does the test fail if the environment variable OPENBLAS_CORETYPE=Haswell or OPENBLAS_CORETYPE=NEHALEM is explicitly set?

I tried Atom, SandyBridge, Haswell, Prescott and Nehalem, all with identical results.
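An experiment like this can be scripted so that every core type is tried in a fresh interpreter. The sketch below assumes OPENBLAS_CORETYPE is read when the library is loaded, which is why it is set in the child process environment before NumPy is imported. The helper names are hypothetical, and the reproducer string is the small eig example from this thread.

```python
import os
import subprocess
import sys

# Core types tried in the comment above.
CORETYPES = ["Atom", "SandyBridge", "Haswell", "Prescott", "Nehalem"]

# The small eig reproducer from this thread, run in a child process.
REPRO = (
    "import numpy as np\n"
    "a = np.arange(13 * 13, dtype=np.float64).reshape(13, 13) % 17\n"
    "np.linalg.eig(a)\n"
)


def env_with_coretype(coretype, base=None):
    """Copy the environment and set OPENBLAS_CORETYPE for a child process."""
    env = dict(os.environ if base is None else base)
    env["OPENBLAS_CORETYPE"] = coretype
    return env


def repro_passes(coretype):
    """Run the reproducer in a fresh interpreter; True if it exits cleanly."""
    result = subprocess.run(
        [sys.executable, "-c", REPRO],
        env=env_with_coretype(coretype),
        capture_output=True,
        text=True,
    )
    return result.returncode == 0


if __name__ == "__main__":
    for coretype in CORETYPES:
        print(coretype, "pass" if repro_passes(coretype) else "fail")
```

A non-zero exit also covers the case where NumPy is missing from the child interpreter, so check stderr before drawing conclusions.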

The strangest thing is that if you run

import numpy as np

a = np.arange(13 * 13, dtype=np.float64)

a.shape = (13, 13)

a = a % 17

va, ve = np.linalg.eig(a) # This will raise, so manually run the next line

va, ve = np.linalg.eig(a)

the second (and any further) calls to eig succeed.
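The "fails once, then works" pattern can be recorded without manual re-running. A minimal sketch, with hypothetical helper names: call the function several times, note the outcome of each call, and check whether only the first one raised. The NumPy part only runs if NumPy is installed.

```python
def call_repeatedly(fn, attempts=3):
    """Call fn() several times; record 'ok' or the exception name per call."""
    outcomes = []
    for _ in range(attempts):
        try:
            fn()
            outcomes.append("ok")
        except Exception as exc:  # record the failure and keep going
            outcomes.append(type(exc).__name__)
    return outcomes


def fails_only_on_first_call(outcomes):
    """True when the first call raised and every later call succeeded."""
    return (
        len(outcomes) > 1
        and outcomes[0] != "ok"
        and all(outcome == "ok" for outcome in outcomes[1:])
    )


if __name__ == "__main__":
    try:
        import numpy as np
    except ImportError:
        np = None
    if np is not None:
        a = np.arange(13 * 13, dtype=np.float64).reshape(13, 13) % 17
        outcomes = call_repeatedly(lambda: np.linalg.eig(a))
        print(outcomes, fails_only_on_first_call(outcomes))
```

The check has to run in a fresh interpreter each time, since by the reports above only the first call in a process is affected.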

SciPy has a similar error in

import numpy as np

import scipy.linalg

a = np.arange(13 * 13, dtype=np.float64)

a.shape = (13, 13)

a = a % 17

va, ve = scipy.linalg.eig(a)

which raises

** On entry to DGEBAL parameter number 3 had an illegal value

** On entry to DGEHRD parameter number 2 had an illegal value

** On entry to DORGHR DORGQR parameter number 2 had an illegal value

** On entry to DHSEQR parameter number 4 had an illegal value

---------------------------------------------------------------------------

ValueError Traceback (most recent call last)

<ipython-input-58-8dfe8125dfe3> in <module>

4 a.shape = (13, 13)

5 a = a % 17

----> 6 va, ve = scipy.linalg.eig(a)

c:\anaconda\envs\py-pip\lib\site-packages\scipy\linalg\decomp.py in eig(a, b, left, right, overwrite_a, overwrite_b, check_finite, homogeneous_eigvals)

245 w = _make_eigvals(w, None, homogeneous_eigvals)

246

--> 247 _check_info(info, 'eig algorithm (geev)',

248 positive='did not converge (only eigenvalues '

249 'with order >= %d have converged)')

c:\anaconda\envs\py-pip\lib\site-packages\scipy\linalg\decomp.py in _check_info(info, driver, positive)

1348 """Check info return value."""

1349 if info < 0:

-> 1350 raise ValueError('illegal value in argument %d of internal %s'

1351 % (-info, driver))

1352 if info > 0 and positive:

ValueError: illegal value in argument 4 of internal eig algorithm (geev)

Final note, converting to np.complex128 also raises, while converting to np.float32 or np.complex64 both work without issue.
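The dtype observation can be checked systematically. Since only the first call in a process seems to fail, the sketch below runs each dtype in its own interpreter, so that an earlier dtype cannot mask a later failure. The helper names and the script template are hypothetical. The matrix is built in float64 as in the reproducer and then converted.

```python
import subprocess
import sys

DTYPES = ["float32", "float64", "complex64", "complex128"]

# Child script: build the matrix as in the reproducer, convert, call eig once.
TEMPLATE = (
    "import numpy as np\n"
    "a = np.arange(13 * 13, dtype=np.float64).reshape(13, 13) % 17\n"
    "np.linalg.eig(a.astype(np.{dtype}))\n"
)


def script_for(dtype):
    """Return the child script for one dtype name."""
    return TEMPLATE.format(dtype=dtype)


def first_call_succeeds(dtype):
    """Run the script in a fresh interpreter; True if it exits cleanly."""
    result = subprocess.run(
        [sys.executable, "-c", script_for(dtype)],
        capture_output=True,
        text=True,
    )
    return result.returncode == 0


if __name__ == "__main__":
    for dtype in DTYPES:
        print(dtype, "ok" if first_call_succeeds(dtype) else "failed")
```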

Python parts of the code compiled with long integers but OpenBLAS built without INTERFACE64=1 in the fortran (LAPACK) parts ?

(This still would not explain why first call fails but any subsequent succeeds, nor why it worked before update 19041)

Windows 10 users can upvote this issue in Microsoft's Feedback Hub.

At @bashtage's request, I've dug into the code a little bit, and my guess is that some aspect of the floating point state is now different on entry. That would seem to be confirmed by this:

converting to np.complex128 also raises, while converting to np.float32 or np.complex64 both work without issue.

The first warning message (which doesn't seem to appear when successful) "On entry to DGEBAL parameter number 3 had an illegal value" is caused by this condition https://github.com/xianyi/OpenBLAS/blob/ce3651516f12079f3ca2418aa85b9ad571c3a391/lapack-netlib/SRC/dgebal.f#L336 which could be caused by any number of prior calculations going to NaN.
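LAPACK argument positions are 1-based, so parameter 3 of DGEBAL(JOB, N, A, LDA, ...) is the matrix A itself. Assuming the linked condition is a NaN check on norms computed from A (which is what "prior calculations going to NaN" suggests), the idea can be sketched in pure Python. This is a simplified illustration with a hypothetical function name, not a translation of the Fortran.

```python
import math


def nan_guard_info(matrix):
    """Return -3 if any row or column 2-norm of a square matrix is NaN, else 0.

    The return value mimics a LAPACK-style info code: a negative value -k
    means that argument k was rejected. Here k = 3 stands for the matrix.
    """
    n = len(matrix)
    for i in range(n):
        col_norm = math.sqrt(sum(matrix[k][i] * matrix[k][i] for k in range(n)))
        row_norm = math.sqrt(sum(matrix[i][k] * matrix[i][k] for k in range(n)))
        if math.isnan(col_norm + row_norm):
            return -3  # a NaN reached the norms, so reject the matrix
    return 0


if __name__ == "__main__":
    print(nan_guard_info([[1.0, 2.0], [3.0, 4.0]]))
    print(nan_guard_info([[1.0, float("nan")], [3.0, 4.0]]))
```

The point of the sketch is that the input matrix can be perfectly valid: if the floating point state is wrong on entry, the norm computation itself may produce the NaN that trips the guard.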

Playing around with the repro steps, I found that doing the mod as float32 "fixes" it:

import numpy as np

a = np.arange(13 * 13, dtype=np.float64)

a.shape = (13, 13)

a = (a.astype(np.float32) % 17).astype(np.float64) # <-- only line changed

va, ve = np.linalg.eig(a)

So my guess is there's something in that code path that is properly resetting the state, though I'm not sure what it is. Hopefully someone more familiar with numpy's internals might know where that could be happening.

Kind of reminds me of an old bug we saw with mingw on Windows, where the floating point register configuration was not inherited by worker threads, leading to denormals not getting flushed to zero and messing up subsequent calculations sometimes.

Not sure if this is in any way relevant to current Windows on current hardware but it might be interesting to know if your OpenBLAS build was done with CONSISTENT_FPCSR=1 (or if adding that build option helps).
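A very coarse probe for this class of problem is to check whether a worker thread treats subnormal numbers the same way as the main thread. The sketch below is only an assumption-laden illustration with hypothetical helper names: it inspects Python threads, not the threads OpenBLAS creates internally, so agreement here does not rule out a mismatch inside the library.

```python
import sys
import threading


def subnormal_survives():
    """True if halving the smallest normal double gives a nonzero subnormal."""
    tiny = sys.float_info.min / 2
    return 0.0 < tiny < sys.float_info.min


def check_in_worker():
    """Run the same check in a worker thread and return its result."""
    result = []
    worker = threading.Thread(target=lambda: result.append(subnormal_survives()))
    worker.start()
    worker.join()
    return result[0]


if __name__ == "__main__":
    print("main thread:", subnormal_survives())
    print("worker thread:", check_in_worker())
```

If the two lines ever disagree, the floating point control state differs between threads, which is the situation described above.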

@mattip Do you know if CONSISTENT_FPCSR=1 in the OpenBLAS build?

Looks like it does, unfortunately:

Well, at least this is out of the way then. The connection seemed fairly remote anyway.

Hi! I've been experiencing a similar issue for some time now and all my testings point to something fishy between windows 10 (2004) and OpenBlas. I usually run my programs on 3 PC (2 Windows 10 workstations and an older Windows 7 laptop). My python scripts started to misbehave with errors related to linalg and svd() convergence around the time I upgraded my 2 workstations to version 2004 of Windows 10. I also experienced crashes on the first time I ran the script, but most of the times it worked at a second try (running Jupyter notebooks). The old laptop rig kept running the same programs without errors! I have miniconda, python 3.6 and all the packages are installed using pip (the env is exactly the same on the 3 machines).

Today I removed the pip default numpy (1.19.0) install and installed the numpy+mkl (numpy-1.19.1+mkl-cp36-cp36m-win_amd64.whl) version from https://www.lfd.uci.edu/~gohlke/pythonlibs/. So far, the errors related to linalg and svd() convergence disappeared. If I find something else, I'll get back here and report it.

Dário

It is strange that the OpenBLAS dll is being created by the VC9 version of lib.exe? This is the version that was used with Python 2.7. It may not matter, but feels strange given that VC9 isn't used anywhere.

The next step for someone (perhaps me) would be to build NumPy master with a single-threaded OpenBLAS (USE_THREAD=0) to see if this fixes the problems.

I have tried a few experiments with no success:

- Disable threads: USE_THREADS=0

- ILP64: INTERFACE64=1 <- Segfault in NumPy's test with access violation

Can you run the LAPACK testsuite with that Win10 setup ? I had recently fixed the cmake build to produce it, maybe it provides some clue without any numpy involved.

--> LAPACK TESTING SUMMARY <--

SUMMARY nb test run numerical error other error

================ =========== ================= ================

REAL 409288 0 (0.000%) 0 (0.000%)

DOUBLE PRECISION 410100 0 (0.000%) 0 (0.000%)

COMPLEX 420495 0 (0.000%) 0 (0.000%)

COMPLEX16 13940 0 (0.000%) 0 (0.000%)

--> ALL PRECISIONS 1253823 0 (0.000%) 0 (0.000%)

Although I do see a lot of lines like

--> Testing DOUBLE PRECISION Nonsymmetric Eigenvalue Problem [ xeigtstd < nep.in > dnep.out ]

---- TESTING ./xeigtstd... FAILED(./xeigtstd < nep.in > dnep.out did not work) !

so I'm not sure if these are trustworthy.

Looks like the majority of tests are segfaulting, but the surviving ones are flawless... this is a bit more extreme than I expected.

(The COMPLEX and COMPLEX16 are a bit demanding in terms of stack size, so failures there are much more likely with default OS settings, but REAL and DOUBLE would normally show around 1200000 tests run. This has me wondering if MS changed something w.r.t default stack limit or layout though)

Some background. Everything is being built with gcc.exe/gfortran.exe. These are used to produce a .a file, which is then packaged into a DLL. Specifically:

$ gcc -v

Using built-in specs.

COLLECT_GCC=C:\git\numpy-openblas-windows\openblas-libs\mingw64\bin\gcc.exe

COLLECT_LTO_WRAPPER=C:/git/numpy-openblas-windows/openblas-libs/mingw64/bin/../libexec/gcc/x86_64-w64-mingw32/7.1.0/lto-wrapper.exe

Target: x86_64-w64-mingw32

Configured with: ../../../src/gcc-7.1.0/configure --host=x86_64-w64-mingw32 --build=x86_64-w64-mingw32 --target=x86_64-w64-mingw32 --prefix=/mingw64 --with-sysroot=/c/mingw710/x86_64-710-posix-seh-rt_v5-rev0/mingw64 --enable-shared --enable-static --disable-multilib --enable-languages=c,c++,fortran,lto --enable-libstdcxx-time=yes --enable-threads=posix --enable-libgomp --enable-libatomic --enable-lto --enable-graphite --enable-checking=release --enable-fully-dynamic-string --enable-version-specific-runtime-libs --enable-libstdcxx-filesystem-ts=yes --disable-libstdcxx-pch --disable-libstdcxx-debug --enable-bootstrap --disable-rpath --disable-win32-registry --disable-nls --disable-werror --disable-symvers --with-gnu-as --with-gnu-ld --with-arch=nocona --with-tune=core2 --with-libiconv --with-system-zlib --with-gmp=/c/mingw710/prerequisites/x86_64-w64-mingw32-static --with-mpfr=/c/mingw710/prerequisites/x86_64-w64-mingw32-static --with-mpc=/c/mingw710/prerequisites/x86_64-w64-mingw32-static --with-isl=/c/mingw710/prerequisites/x86_64-w64-mingw32-static --with-pkgversion='x86_64-posix-seh-rev0, Built by MinGW-W64 project' --with-bugurl=http://sourceforge.net/projects/mingw-w64 CFLAGS='-O2 -pipe -fno-ident -I/c/mingw710/x86_64-710-posix-seh-rt_v5-rev0/mingw64/opt/include -I/c/mingw710/prerequisites/x86_64-zlib-static/include -I/c/mingw710/prerequisites/x86_64-w64-mingw32-static/include' CXXFLAGS='-O2 -pipe -fno-ident -I/c/mingw710/x86_64-710-posix-seh-rt_v5-rev0/mingw64/opt/include -I/c/mingw710/prerequisites/x86_64-zlib-static/include -I/c/mingw710/prerequisites/x86_64-w64-mingw32-static/include' CPPFLAGS=' -I/c/mingw710/x86_64-710-posix-seh-rt_v5-rev0/mingw64/opt/include -I/c/mingw710/prerequisites/x86_64-zlib-static/include -I/c/mingw710/prerequisites/x86_64-w64-mingw32-static/include' LDFLAGS='-pipe -fno-ident -L/c/mingw710/x86_64-710-posix-seh-rt_v5-rev0/mingw64/opt/lib -L/c/mingw710/prerequisites/x86_64-zlib-static/lib 
-L/c/mingw710/prerequisites/x86_64-w64-mingw32-static/lib '

Thread model: posix

gcc version 7.1.0 (x86_64-posix-seh-rev0, Built by MinGW-W64 project)

$ gfortran -v

Using built-in specs.

COLLECT_GCC=C:\git\numpy-openblas-windows\openblas-libs\mingw64\bin\gfortran.exe

COLLECT_LTO_WRAPPER=C:/git/numpy-openblas-windows/openblas-libs/mingw64/bin/../libexec/gcc/x86_64-w64-mingw32/7.1.0/lto-wrapper.exe

Target: x86_64-w64-mingw32

Configured with: ../../../src/gcc-7.1.0/configure --host=x86_64-w64-mingw32 --build=x86_64-w64-mingw32 --target=x86_64-w64-mingw32 --prefix=/mingw64 --with-sysroot=/c/mingw710/x86_64-710-posix-seh-rt_v5-rev0/mingw64 --enable-shared --enable-static --disable-multilib --enable-languages=c,c++,fortran,lto --enable-libstdcxx-time=yes --enable-threads=posix --enable-libgomp --enable-libatomic --enable-lto --enable-graphite --enable-checking=release --enable-fully-dynamic-string --enable-version-specific-runtime-libs --enable-libstdcxx-filesystem-ts=yes --disable-libstdcxx-pch --disable-libstdcxx-debug --enable-bootstrap --disable-rpath --disable-win32-registry --disable-nls --disable-werror --disable-symvers --with-gnu-as --with-gnu-ld --with-arch=nocona --with-tune=core2 --with-libiconv --with-system-zlib --with-gmp=/c/mingw710/prerequisites/x86_64-w64-mingw32-static --with-mpfr=/c/mingw710/prerequisites/x86_64-w64-mingw32-static --with-mpc=/c/mingw710/prerequisites/x86_64-w64-mingw32-static --with-isl=/c/mingw710/prerequisites/x86_64-w64-mingw32-static --with-pkgversion='x86_64-posix-seh-rev0, Built by MinGW-W64 project' --with-bugurl=http://sourceforge.net/projects/mingw-w64 CFLAGS='-O2 -pipe -fno-ident -I/c/mingw710/x86_64-710-posix-seh-rt_v5-rev0/mingw64/opt/include -I/c/mingw710/prerequisites/x86_64-zlib-static/include -I/c/mingw710/prerequisites/x86_64-w64-mingw32-static/include' CXXFLAGS='-O2 -pipe -fno-ident -I/c/mingw710/x86_64-710-posix-seh-rt_v5-rev0/mingw64/opt/include -I/c/mingw710/prerequisites/x86_64-zlib-static/include -I/c/mingw710/prerequisites/x86_64-w64-mingw32-static/include' CPPFLAGS=' -I/c/mingw710/x86_64-710-posix-seh-rt_v5-rev0/mingw64/opt/include -I/c/mingw710/prerequisites/x86_64-zlib-static/include -I/c/mingw710/prerequisites/x86_64-w64-mingw32-static/include' LDFLAGS='-pipe -fno-ident -L/c/mingw710/x86_64-710-posix-seh-rt_v5-rev0/mingw64/opt/lib -L/c/mingw710/prerequisites/x86_64-zlib-static/lib 
-L/c/mingw710/prerequisites/x86_64-w64-mingw32-static/lib '

Thread model: posix

gcc version 7.1.0 (x86_64-posix-seh-rev0, Built by MinGW-W64 project)

I really do think the issue is in the handoff between NumPy and OpenBLAS. I can't imagine how else this behavior could occur where the first call is an error, but the second and subsequent are all fine. How to resolve it (or TBH even accurately diagnose the deep issue) though...?

Abolish the MS platform and live happily ever after ? If it fails the lapack tests the handoff problem lies between Fortran (=LAPACK part of OpenBLAS) and C, or Fortran and "anything else".

I would be curious if it is possible to build NumPy with OpenBLAS using icc and ifort to see if the issue persists. That is a big ask though.


Would probably be sufficient to just build OpenBLAS with the Intel compilers (though that seems to be problematic enough, at least with VS, from what came up recently, and I do not have a valid license for ifc/ifort at the moment).

Windows users should use Conda/MKL if on 2004 until this is resolved

@bashtage Thanks for your suggestion.

In my case, I got some errors when using sqlite through pandasql after switching to the Conda version of numpy, but I have not had any problems with this version of numpy+mkl.

@bashtage it seems there is a new MSVC prerelease with a fix for that rust link issue, maybe worth a try?

FYI: related SciPy issue: https://github.com/scipy/scipy/issues/12747

There is a report that Windows build 17763.1397 (Aug 11) fixes the problem, see #17082.

There is a report that Windows build 17763.1397 (Aug 11) fixes the problem, see #17082.

https://support.microsoft.com/en-us/help/4565349/windows-10-update-kb4565349

Build 17763.1397 is only for Windows 10, version 1809 (Applies to: Windows 10, version 1809, all editions; Windows Server version 1809; Windows Server 2019, all editions).

There are no issues in this case for Windows 10, version 1809.

This is the most recent update for Windows 10, version 2004:

https://support.microsoft.com/en-us/help/4566782

August 11, 2020—KB4566782 (OS Build 19041.450).

I still have the same issue on Windows 10, version 2004.

Same failures on 19042.487 (this is the 20H2 branch):

-- Docs: https://docs.pytest.org/en/stable/warnings.html

=============================================== short test summary info ===============================================

FAILED lib/tests/test_regression.py::TestRegression::test_polyfit_build - numpy.linalg.LinAlgError: SVD did not conve...

FAILED linalg/tests/test_regression.py::TestRegression::test_eig_build - numpy.linalg.LinAlgError: Eigenvalues did no...

FAILED ma/tests/test_extras.py::TestPolynomial::test_polyfit - numpy.linalg.LinAlgError: SVD did not converge in Line...

3 failed, 11275 passed, 559 skipped, 20 xfailed, 1 xpassed, 1 warning in 398.16s (0:06:38)

I can reproduce the (partial) failures in the lapack-test of OpenBLAS - only affecting the EIG(eigenvalue) tests, which are known to have some unusually high stacksize requirements. There the SIGSEGV occurs in __chkstk_ms_ which is entered even before main(). Doubling the default "stack reserved size" and "stack commit size" values of the respective executable with the peflags utility (e.g. peflags -x4194304 -X4194304 EIG/xeigtsts.exe) makes the programs work normally, suggesting that C/Fortran interaction and argument passing as such is not problematic. I have not yet tried to apply this to the numpy case (not sure even whose peflag setting to adjust there - python.exe ?) but OpenBLAS itself appears to function normally when built with the msys2 mingw64 version of gcc 9.1.0 on 19041.450 a.k.a 2004
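Short of patching the executable header, one experiment that stays inside Python is to run the failing call in a thread created with a larger stack. This is a sketch with a hypothetical helper name, and it is an assumption that it is relevant at all: threading.stack_size only affects threads created afterwards, not the main thread's reserved stack that peflags adjusts.

```python
import threading


def run_with_stack(fn, stack_bytes):
    """Run fn() in a new thread with the given stack size; return its result."""
    outcome = {}

    def target():
        try:
            outcome["value"] = fn()
        except BaseException as exc:  # hand the exception back to the caller
            outcome["error"] = exc

    previous = threading.stack_size(stack_bytes)  # applies to threads created next
    try:
        worker = threading.Thread(target=target)
        worker.start()
        worker.join()
    finally:
        threading.stack_size(previous)  # restore the earlier setting
    if "error" in outcome:
        raise outcome["error"]
    return outcome["value"]


if __name__ == "__main__":
    # 4194304 bytes matches the doubled value used with peflags above.
    print(run_with_stack(lambda: sum(range(10)), 4194304))
```

To try it on the reproducer, pass a function that calls np.linalg.eig on the 13 x 13 matrix as fn.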

@martin-frbg That looks like progress.

Went down a rabbit hole trying to get all dependencies installed for building numpy on Windows/MSYS2 so no further progress.

@martin-frbg , the Msys2 based numpy package can be installed with pacman. Msys2 build scripts and patches are available here: https://github.com/msys2/MINGW-packages/tree/master/mingw-w64-python-numpy and https://github.com/msys2/MINGW-packages/tree/master/mingw-w64-openblas.

In the latest triage meeting this came up as a relatively high priority, and @hameerabbasi and I are willing to help. What can we do?

@bashtage could you try making the stack bigger with editbin /STACK:3145728 python.exe

Is there a way we could try building NumPy with OpenBLAS's releases of the libraries instead of the ones we build? Perhaps our builds are off a bit.

It would probably be possible to replace the libopenblas.dll in your build with one created from either the current develop branch or an older release such as 0.3.8 or 0.3.9 to see if that has any effect ? (Not that I have any specific commit in mind right now that could have had any bearing on this). Unfortunately I am still experiencing installation errors even with the msys2 packages, currently cannot run the tests apparently due to self.ld_version returning nothing in a version check.

Thanks. I am trying to update my windows to build 2004, let's see if I finish before the weekend

Also, bashtage's "small reproducing example" from https://github.com/numpy/numpy/issues/16744#issuecomment-655430682 does not raise any error in my msys2 setup. (As far as I can tell, their libopenblas in mingw64/usr/lib is a single-threaded 0.3.10 built for Haswell)

@martin-frbg Is it possible to build OpenBLAS using MSVC?

Plain MSVC ? Doable but gives a poorly optimized library as MSVC does not support the assembly kernels - practically the same as if you built OpenBLAS for TARGET=GENERIC.

Also, bashtage's "small reproducing example" from #16744 (comment) does not raise any error in my msys2 setup. (As far as I can tell, their libopenblas in mingw64/usr/lib is a single-threaded 0.3.10 built for Haswell)

I'm pretty sure it has something to do with the interface between NumPy and OpenBLAS. The 32-bit build of NumPy also doesn't have this issue. The idea from the Microsoft people I spoke with, that this is likely a thread synchronization issue, seems the most plausible. It could explain how the wrong value is present in the first call and then correct in subsequent calls.

Went down a rabbit hole trying to get all dependencies installed for building numpy on Windows/MSYS2 so no further progress.

It is a pain. I've done it, but automating it to try some experiments was taking a long time. It basically requires copying the steps from https://github.com/MacPython/openblas-libs to get the .a files, and then copying the steps from https://github.com/numpy/numpy/blob/master/azure-steps-windows.yml to build NumPy. It is particularly hard since some parts want standard MSYS2 and others need it to be gone, so you end up installing and then uninstalling most of gcc each time you want to do an experiment.

The easiest way to get a DLL build is to open an appveyor account, and then clone https://github.com/MacPython/openblas-libs. At the end of your build, there is an artifact that you can find from the website to download which has the dll (and .a file). This is however slow, taking 3 hours to build all 3 versions.

Looking at https://github.com/MacPython/openblas-libs, OpenBLAS seems to be built with INTERFACE64=1. This is not the case for the Msys2-built OpenBLAS and numpy.

@carlkl This issue isn't about the Msys2 build of NumPy or OpenBLAS. It is about the Win32/AMD64 build which makes use of Msys2 provided gfortran to compile fortran sources, but then builds a Win32 DLL from the compiled library using MS's lib.exe.

@bashtage I mentioned this because it was reported in https://github.com/numpy/numpy/issues/16744#issuecomment-689785607 that the segfault could not be reproduced within msys2. And I guess the msys2 environment mentioned there contains the msys2-provided openblas and numpy binary packages.

I have no idea, if the windows 64bit numpy is compiled with a similar flag with MSVC or not.

BTW: I can't find the flag -fdefault-integer-8 in the scipy repository.

I'm not sure if code compiled with -fdefault-integer-8 is ABI compatible with code compiled without this flag.

Another aspect comes to mind: maybe -fdefault-integer-8 should be combined with -mcmodel=large.

EDIT: Remember: Windows64 has a LLP64 memory model, not ILP64.

LLP64 means: long: 32bit, pointer: 64bit
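The C data model of the running interpreter can be checked directly with ctypes. A minimal sketch with a hypothetical helper name follows. Note that this reports the C type sizes only, while "ILP64" in the OpenBLAS build options refers to the width of the Fortran INTEGER used in the BLAS/LAPACK interface, which is a separate setting.

```python
import ctypes

# (sizeof int, sizeof long, sizeof pointer) -> conventional model name
MODELS = {
    (4, 4, 4): "ILP32",
    (4, 4, 8): "LLP64",
    (4, 8, 8): "LP64",
    (8, 8, 8): "ILP64",
}


def data_model():
    """Name the C data model of the running Python build."""
    sizes = (
        ctypes.sizeof(ctypes.c_int),
        ctypes.sizeof(ctypes.c_long),
        ctypes.sizeof(ctypes.c_void_p),
    )
    return MODELS.get(sizes, "other")


if __name__ == "__main__":
    print("data model:", data_model())
    print("long long bytes:", ctypes.sizeof(ctypes.c_longlong))
```

On 64-bit Windows this is expected to report LLP64, in line with the comment above, while 64-bit Linux builds normally report LP64.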

So, what size integers does the numpy build that experiences the Win10-2004 problem use ? It had better match what OpenBLAS got built for, but IIRC this topic came up earlier in this thread already (and a mismatch would probably lead to more pronounced breakage irrespective of the Windows patchlevel)

At least OpenBLAS can be built in three variants with https://github.com/MacPython/openblas-libs (see appveyor.yml):

- 32 bit

- 64 bit

- 64 bit with INTEGER64

but I have no idea what is used for the numpy 64bit builds on windows.

_and a mismatch would probably lead to more pronounced breakage irrespective of the Windows patchlevel_

-fdefault-integer-8 only applies to the Fortran part of LAPACK. It does not change the underlying memory model (LLP64), so I'm not sure if we should expect problems outside the Fortran LAPACK parts.

hmm,

https://github.com/numpy/numpy/blob/60a21a35210725520077f13200498e2bf3198029/azure-pipelines.yml says

- job: Windows
  pool:
    vmImage: 'VS2017-Win2016'
  ...
      Python38-64bit-full:
        PYTHON_VERSION: '3.8'
        PYTHON_ARCH: 'x64'
        TEST_MODE: full
        BITS: 64
        NPY_USE_BLAS_ILP64: '1'
        OPENBLAS_SUFFIX: '64_'

all other versions (Python36-64bit-full, Python37-64bit-full) are built without NPY_USE_BLAS_ILP64.

The Numpy binaries on pypi.org are all built with 32-bit integer openblas. https://github.com/MacPython/numpy-wheels/blob/v1.19.x/azure/windows.yml

For build process gfortran vs. MSVC details, see here. The Microsoft lib.exe is not involved in producing the openblas DLL, it's all mingw toolchain. Numpy uses the openblas.a file, and generates the DLL using gfortran. https://github.com/numpy/numpy/blob/74712a53df240f1661fbced15ae984888fd9afa6/numpy/distutils/fcompiler/gnu.py#L442 MSVC tools are used to produce the .lib file from the .def file, and Numpy C code compiled with MSVC is linked using that .lib file to the gfortran-produced DLL.

One theoretical possibility is a mingw version mismatch between the mingw that produced the static openblas.a artifact and the mingw version used when numpy is built. However, I am not sure whether this can actually cause problems.

choco install -y mingw --forcex86 --force --version=5.3.0 used in windows.yml seems to be outdated. Why not use 7.3.0 or 8.1.0? I remember problems I had with gcc-5.x two or three years ago.

choco install -y mingw --forcex86 --force --version=5.3.0 used in windows.yml seems to be outdated

That's for the 32-bit windows build only, so probably not related to this issue.

all other versions (Python36-64bit-full, Python37-64bit-full) are build without NPY_USE_BLAS_ILP64.

Same errors on Python 3.6 and 3.8.

There is one idea left (I'm digging in the dark):

OpenBLAS is now compiled with -fno-optimize-sibling-calls, see https://github.com/xianyi/OpenBLAS/issues/2154, https://github.com/xianyi/OpenBLAS/pull/2157 and https://gcc.gnu.org/bugzilla/show_bug.cgi?id=90329.

Edit: see also: https://gcc.gnu.org/legacy-ml/fortran/2019-05/msg00181.html

Numpy didn't apply this flag in the gfortran part of its distutils. I know that numpy is compiled with MSVC. Therefore I would expect problems with scipy rather than with numpy, so this could again be a wrong track.

The Numpy binaries on pypi.org are all built with 32-bit integer openblas. https://github.com/MacPython/numpy-wheels/blob/v1.19.x/azure/windows.yml

Why are the build configurations used in testing different from the ones used in release? This sounds like a risk.

Could we add artifacts to the Azure builds? This would make it much simpler to get wheels for testing. My only concern here is that the free artifact limit is pretty small IIRC, 1GB, and I don't know what happens when you hit it (FIFO would be good, but may do other things including error).

Why are the build configurations used in testing different from the ones used in release?

We test all supported python versions in windows without NPY_USE_BLAS_ILP64, and test python 3.8 with NPY_USE_BLAS_ILP64 as well, see https://github.com/numpy/numpy/blob/v1.19.2/azure-pipelines.yml#L195. So the weekly builds are correct in using 32-bit integer openblas.

Could we add artifacts to the Azure builds?

Probably easy to try this out and find out about the limits if it errors. However, the weekly wheels are meant to faithfully recreate the testing builds. Any discrepancies should be treated as errors and fixed.

Probably easy to try it this out and find out about limits if it errors. However the weekly wheels are meant to faithfully recreate the testing builds. Any discrepancies should be treated as errors and fixed.

I was thinking that it would be easier to experiment in a PR to the main repo and grab the artifact to test on 2004.

Makes sense.

You could turn on Azure pipelines in your repo bashtage/numpy

A little more information: the first call that fails is because DNRM2 inside OpenBLAS returns a NAN. For me, this is repeatable: every call to

a = np.arange(13 * 13, dtype=np.float64).reshape(13, 13)

a = a % 17

np.linalg.eig(a)

prints ** On entry to DGEBAL parameter number 3 had an illegal value, which is another way to say "DNRM2 returned NAN". The mod operation is critical, without it the call to eig does not print this error.

How could there be any interaction between the mod ufunc and the call to OpenBLAS?

The mod operation is critical, without it the call to eig does not print this error.

Does constructing this exact same array manually repeatably trigger the error?

This code does not trigger the failure:

a = np.array([x % 17 for x in range(13 * 13)], dtype=np.float64)

a.shape = (13, 13)

np.linalg.eig(a)

Could the preceding mod leave a register in an undefined state ? I have not checked the nrm2.S again, but I think we had a few issues years ago from assembly XORing a register with itself to clear it, which would fail on NaN.

For those following along, float64 % ends up calling npy_divmod for each value.

... which, in the first line calls npy_fmod(), which is fmod(). Here are some changes I tried. "Error" means I get the "illegal value" message from OpenBLAS when running the code that calls a % 17; np.linalg.eig(a)

code | outcome
--- | ---
mod = npy_fmod@c@(a, b); (original code) | Error
mod = 100; //npy_fmod@c@(a, b); | No error
mod = npy_fmod@c@(a, b); mod = 100.0; | Error

So it seems fmod from MSVC is doing something that confuses OpenBLAS.
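
To make the role of `fmod` in `%` concrete, here is a rough Python transcription of the usual "floor divmod on top of fmod" logic. It mirrors what CPython does for float `divmod`, and as far as I can tell `npy_divmod` follows the same pattern, but treat that as an assumption rather than a quote of the NumPy source. `divmod_via_fmod` is a made-up name.

```python
import math

def divmod_via_fmod(a, b):
    """Floor-division quotient and remainder built on top of C-style fmod."""
    mod = math.fmod(a, b)      # the call that was varied in the table above
    div = (a - mod) / b
    if mod:
        # fmod takes the sign of a, while % takes the sign of b
        if (b < 0) != (mod < 0):
            mod += b
            div -= 1.0
    else:
        mod = math.copysign(0.0, b)
    if div:
        floordiv = math.floor(div)
        if div - floordiv > 0.5:
            floordiv += 1.0
    else:
        floordiv = math.copysign(0.0, a / b)
    return float(floordiv), mod

print(divmod_via_fmod(20.0, 17.0))
```

Note that `math.fmod` raises `ValueError` for `b == 0`, whereas the C function returns NaN, so this sketch is only meant for non-zero divisors.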

That's interesting, and weird.

Can you use a naive (not platform supplied) version of fmod to see if that fixed it?

Or just force HAVE_MODF to 0.

I don't think we have a naive version of fmod, do we?

I see there is a HAVE_MODF macro, but where does this path lead?

Looks like it falls back to the double version if float and long double are missing. The double version is mandatory to build numpy. The undef is probably to avoid compiler inline functions, ISTR that that was a problem on Windows long ago.

I wanted to "prove" that the problem is the fmod implementation, which comes from ucrtbase.dll. So I wrote a little work-around that uses ctypes to pull the function out of the dll and use it instead of directly calling fmod. The tests still fail. Then I switched to an older version of ucrtbase.dll (only for the fmod function). The tests pass. I opened a thread on the Visual Studio forum. If anyone knows a better way to reach out to Microsoft that would be great.

diff --git a/numpy/core/src/npymath/npy_math.c b/numpy/core/src/npymath/npy_math.c

index 404cf67b2..675905f73 100644

--- a/numpy/core/src/npymath/npy_math.c

+++ b/numpy/core/src/npymath/npy_math.c

@@ -7,3 +7,27 @@

#define NPY_INLINE_MATH 0

#include "npy_math_internal.h"

+#include <Windows.h>

+

+typedef double(*dbldbldbl)(double, double);typedef double(*dbldbldbl)(double, double);

+

+dbldbldbl myfmod = NULL;

+

+typedef double(*dbldbldbl)(double, double);

+extern dbldbldbl myfmod;

+

+

+double __fmod(double x, double y)

+{

+ if (myfmod == NULL) {

+ HMODULE dll = LoadLibraryA("ucrtbase_old.dll");

+ //HMODULE dll = LoadLibraryA("c:\\windows\\system32\\ucrtbase.DLL");

+ myfmod = (dbldbldbl)GetProcAddress(dll, "fmod");

+ }

+ return myfmod(x, y);

+}

+

+long double __fmodl(long double x, long double y) { return fmodl(x, y); }

+float __fmodf(float x, float y) { return fmodf(x, y); }

+

+

diff --git a/numpy/core/src/npymath/npy_math_internal.h.src b/numpy/core/src/npymath/npy_math_internal.h.src

index 18b6d1434..9b0600a34 100644

--- a/numpy/core/src/npymath/npy_math_internal.h.src

+++ b/numpy/core/src/npymath/npy_math_internal.h.src

@@ -55,6 +55,11 @@

*/

#include "npy_math_private.h"

+double __fmod(double x, double y);

+long double __fmodl(long double x, long double y);

+float __fmodf(float x, float y);

+

+

/*

*****************************************************************************

** BASIC MATH FUNCTIONS **

@@ -473,8 +478,8 @@ NPY_INPLACE @type@ npy_@kind@@c@(@type@ x)

/**end repeat1**/

/**begin repeat1

- * #kind = atan2,hypot,pow,fmod,copysign#

- * #KIND = ATAN2,HYPOT,POW,FMOD,COPYSIGN#

+ * #kind = atan2,hypot,pow,copysign#

+ * #KIND = ATAN2,HYPOT,POW,COPYSIGN#

*/

#ifdef HAVE_@KIND@@C@

NPY_INPLACE @type@ npy_@kind@@c@(@type@ x, @type@ y)

@@ -484,6 +489,13 @@ NPY_INPLACE @type@ npy_@kind@@c@(@type@ x, @type@ y)

#endif

/**end repeat1**/

+#ifdef HAVE_FMOD@C@

+NPY_INPLACE @type@ npy_fmod@c@(@type@ x, @type@ y)

+{

+ return __fmod@c@(x, y);

+}

+#endif

+

#ifdef HAVE_MODF@C@

NPY_INPLACE @type@ npy_modf@c@(@type@ x, @type@ *iptr)

{
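
The same "pull fmod out of the runtime library" idea can be sketched from the Python side with `ctypes`. This is only an illustration: `load_fmod` is a hypothetical helper, and the library lookup is an assumption (`ucrtbase` on Windows, the system math library elsewhere). It checks return values only; the x87 register state left behind by the call cannot be observed from Python.

```python
import ctypes
import ctypes.util
import math
import sys

def load_fmod():
    """Return the C runtime's fmod as a Python callable."""
    if sys.platform == "win32":
        lib = ctypes.CDLL("ucrtbase")          # system Universal CRT
    else:
        name = ctypes.util.find_library("m")   # e.g. libm.so.6 on glibc
        lib = ctypes.CDLL(name) if name else ctypes.CDLL(None)
    fmod = lib.fmod
    fmod.restype = ctypes.c_double
    fmod.argtypes = (ctypes.c_double, ctypes.c_double)
    return fmod

c_fmod = load_fmod()
print(c_fmod(20.0, 17.0), math.fmod(20.0, 17.0))
```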

What if you add some code after the fmod call, so that ST(0) doesn't contain nan? Or set it to nan synthetically?

Do calling conventions put some constraints on how these registers are supposed to behave?

I wanted to "prove" that the problem is the fmod implementation, which comes from ucrtbase.dll. So I wrote a little work-around that uses ctypes to pull the function out of the dll and use it insted of directly calling fmod. The tests still fail. Then I switched to an older version of ucrtbase.dll (only for the fmod function). The tests pass. I opened a thread on the visual studio forum. If anyone knows a better way to reach out to microsoft that would be great.

At a minimum anyone with an azure account, which is probably many people here, can upvote it. I'll reach out to some contacts I made when this issue originally appeared who work in Azure ML to see if they can do anything.

At a minimum anyone with an azure account, which is probably many people here, can upvote it.

Thanks for the tip, upvoted!

I don't think we have a naive version of fmod, do we?

No. It is a tricky function that is required by the IEEE-754 spec to have certain behavior. It is also very slow, which is probably related to the spec.

The hard work of fmod is performed by the fprem x87 instruction, even in the VS2019 variant - see @mattip's gist (last line) https://gist.github.com/mattip/d9e1f3f88ce77b9fde6a285d585c738e. (fprem1 is the remainder variant btw.)

Care has to be taken when used in conjunction with MMX or SSE registers - as described here: https://stackoverflow.com/questions/48332763/where-does-the-xmm-instruction-divsd-store-the-remainder.

There are some alternative implementations available as described in https://github.com/xianyi/OpenBLAS/issues/2709#issuecomment-702634696. However: all these need gcc (mingw-w64) for compilation. OpenLIBM doesn't compile with MSVC AFAIK. And inline assembler code isn't allowed with MSVC. In principle one could build (mingw-w64) and use (MSVC) a fmod helper library during the numpy build.

What if you add some code after the fmod call, so that ST(0) doesn't contain nan? Or set it to nan synthetically? Do calling conventions put some constraints on how these registers are supposed to behave?

I guess we could add a little piece of assembly to clear out registers before calling into OpenBLAS on windows. I tried doing this in a little test set-up but my masm assembly foo is weak. I tried writing a procedure that just calls fldz multiple times, when using it I get an exception. Help?

In lots_of_fldz.asm :

.code

lots_of_fldz proc

fldz

fldz

fldz

fldz

fldz

fldz

lots_of_fldz endp

end

In another file:

#include <iostream>

#include <Windows.h>

extern "C" {

int lots_of_fldz(void);

}

int main()

{

typedef double(*dbldbldbl)(double, double);

//HMODULE dll = LoadLibraryA("D:\\CPython38\\ucrtbase_old.dll");

HMODULE dll = LoadLibraryA("c:\\windows\\system32\\ucrtbase.DLL");

if (dll == NULL) {

return -1;

}

dbldbldbl myfmod;

myfmod = (dbldbldbl)GetProcAddress(dll, "fmod");

double x = 0.0, y = 17.0;

double z = x + y;

z = myfmod(x, y);

lots_of_fldz();

/* CRASH */

std::cout << "Hello World!\n";

return 0;

}

Put them into a VisualStudio project, and follow this guide to turn on masm compilation

@mattip, I created an assembler file for the 64-bit fmod function that produces a text segment identical to the one found in the corresponding fmod function from mingw-w64 (64-bit). No idea if this works as a replacement for the buggy fmod function, but at least one should give it a try. The resulting obj-file could be added to npymath.lib.

fmod.asm: (64-bit)

.code

fmod PROC

sub rsp , 18h

movsd QWORD PTR [rsp+8h] , xmm0

fld QWORD PTR [rsp+8h]

movsd QWORD PTR [rsp+8h] , xmm1

fld QWORD PTR [rsp+8h]

fxch st(1)

L1:

fprem

fstsw ax

sahf

jp L1

fstp st(1)

fstp QWORD PTR [rsp+8h]

movsd xmm0 , QWORD PTR [rsp+8h]

add rsp,18h

ret

fmod endp

end

masm command: ml64.exe /c fmod.asm creates fmod.obj (use the 64-bit variant of ml64.exe)


objdump -D fmod.obj

....

Disassembly of section .text$mn:

0000000000000000 <fmod>:

0: 48 83 ec 18 sub $0x18,%rsp

4: f2 0f 11 44 24 08 movsd %xmm0,0x8(%rsp)

a: dd 44 24 08 fldl 0x8(%rsp)

e: f2 0f 11 4c 24 08 movsd %xmm1,0x8(%rsp)

14: dd 44 24 08 fldl 0x8(%rsp)

18: d9 c9 fxch %st(1)

1a: d9 f8 fprem

1c: 9b df e0 fstsw %ax

1f: 9e sahf

20: 7a f8 jp 1a <fmod+0x1a>

22: dd d9 fstp %st(1)

24: dd 5c 24 08 fstpl 0x8(%rsp)

28: f2 0f 10 44 24 08 movsd 0x8(%rsp),%xmm0

2e: 48 83 c4 18 add $0x18,%rsp

32: c3 retq

@carlkl thanks. I would be happier with a piece of assembler that resets the ST(N) registers that we could use on x86_64 before calling OpenBLAS functions. Today this problem cropped up because of a change in fmod, tomorrow it may be a different function. I am not sure that functions are required to reset the ST(N) registers on return. If there is no such requirement, fmod is not actually buggy and the change in windows exposed a failing in OpenBLAS to reset registers before using them that we should either help them fix or work around it.

I tried to reset the registers using fldz in the comment above but my test program doesn't work.

@mattip, my assembler knowledge is also more or less basic. However, you may find a possible answer in this SO thread: https://stackoverflow.com/questions/19892215/free-the-x87-fpu-stack-ia32/33575875

From https://docs.microsoft.com/de-de/cpp/build/x64-calling-convention?view=vs-2019 :

The x87 register stack is unused. It may be used by the callee, but consider it volatile across function calls.

Guess we could also call fninit at the start of the OpenBLAS nrm2.S (after the PROFCODE macro) to check that theory

Interesting bit from the System V Application Binary Interface, AMD64 Architecture Processor Supplement, Draft Version 0.99.6:

_The control bits of the MXCSR register are callee-saved (preserved across calls), while the status bits are caller-saved (not preserved)._

_The x87 status word register is caller-saved, whereas the x87 control word is callee-saved._

_All x87 registers are caller-saved, so callees that make use of the MMX registers may use the faster femms instruction._

Quite different compared to the Windows 64-bit calling convention.

@martin-frbg, fninit is dangerous: the FPU control word is set to 037FH (round to nearest, all exceptions masked, 64-bit precision). x87 extended precision is not what you want in all cases, especially on Windows.
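
To spell out what 037FH encodes, here is a small sketch that splits an x87 control word into its fields (exception mask bits 0-5, precision control bits 8-9, rounding control bits 10-11). The function name is made up for illustration. The comparison value 0x027F is, to my understanding, the usual Win64 default (53-bit precision); treat that as an assumption.

```python
def decode_x87_control_word(cw):
    """Split an x87 FPU control word into its documented fields."""
    precision = {0b00: "24-bit (single)", 0b01: "reserved",
                 0b10: "53-bit (double)", 0b11: "64-bit (extended)"}
    rounding = {0b00: "nearest", 0b01: "down", 0b10: "up", 0b11: "toward zero"}
    mask_names = ("invalid", "denormal", "zero-divide",
                  "overflow", "underflow", "precision")
    return {
        "masked_exceptions": [n for i, n in enumerate(mask_names)
                              if (cw >> i) & 1],
        "precision_control": precision[(cw >> 8) & 0b11],
        "rounding_control": rounding[(cw >> 10) & 0b11],
    }

after_fninit = decode_x87_control_word(0x037F)
assumed_win64_default = decode_x87_control_word(0x027F)
print(after_fninit["precision_control"], "vs",
      assumed_win64_default["precision_control"])
```

The only field that differs between the two values is the precision control, which is the concern raised about calling fninit.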

I would not want this for a release version, just for a quick test. Still not quite convinced that OpenBLAS should somehow clean up "before" itself, but I cannot find any clear documentation on Windows behaviour w.r.t. the legacy x87 FPU. I noticed Agner Fog has a document about calling conventions at http://www.agner.org/optimize/calling_conventions.pdf (discussion of Win64 handling of FP registers starts on page 13 but concentrates on basic availability and behaviour across context switches).

See https://github.com/numpy/numpy/issues/16744#issuecomment-703305582:

The x87 register stack is unused. It may be used by the callee, but consider it volatile across function calls.

I guess this means: do not use x87 instructions (Win64). If you do, good luck. Or in other words: the callee is responsible not to come to harm.

Hm that seems to describe the behaviour of the MSVC compiler specifically, while I _think_ we are in a mingw ecosystem ?

No, Numpy (PyPI) is compiled with MSVC (Visual Studio 2019) on Windows. For OpenBLAS mingw-w64 is used (due to the Fortran parts). The Scipy Fortran parts are also compiled with mingw-w64. However, CPython and its binary extensions are heavily based on the standards set by MSVC.

BTW: this was the reason for the development of https://github.com/mingwpy which is currently being breathed back into life again. (shameless plug)

Another bit:

mingw-w64 uses (almost) the same calling conventions as MSVC. The only notable differences are different stack alignment on x86 (32-bit) - relevant for SIMD and the un-supported vectorcall.

Interesting. x86 isn't affected. Only AMD64.


MSVC 32bit hasn't disowned the FPU perhaps? I'll just do a stopgap replacement of the FPU-using nrm2.S by either nrm2_sse2.S (if it's viable, unfortunately there are a number of unused but deadly assembly routines floating around in the codebase) or the plain C version for OS==Windows then. (The other problem discussed here has to be something else however, as I think all other older assembly routines for x86_64 are SSE2 at least.)

@mattip, maybe a call to _fpreset after divmod is sufficient to reset from a messed up FPU state?

maybe a call to _fpreset

Nope, it does not reset the ST(N) registers, nor does it fix the failing tests.

Did you give _fninit a try? This should reset the FPU stack. Or ffree or fstp instead of fldz as mentioned in https://stackoverflow.com/questions/19892215/free-the-x87-fpu-stack-ia32/33575875?

I would happily try the assembler commands, but the test project in the comment above crashes. Someone needs to correct my code to make it work (indeed, fninit seems like a good candidate), then I can emit assembler instructions to reset the registers in NumPy before calling into OpenBLAS.

Something like this?

.code

reset_fpu proc

finit

fldz

reset_fpu endp

end

finit is wait plus fninit. I'm not sure that fldz is needed after fninit.

As I said in the comment, there is something missing to make the call to the compiled assembly code work correctly. This is pretty much what I had in that comment. The program crashes: it exited with code -2147483645. Please take a look at the complete code and see if you can make it work.

I can try it (tomorrow). However, maybe these snippets are helpful:

https://www.website.masmforum.com/tutorials/fptute/fpuchap4.htm

(one of the most readable sites on this topic that I have found)

FLDZ (Load the value of Zero)

Syntax: fldz (no operand)

Exception flags: Stack Fault, Invalid operation

This instruction decrements the TOP register pointer in the Status Word

and loads the value of +0.0 into the new TOP data register.

If the ST(7) data register which would become the new ST(0) is not empty, both

a Stack Fault and an Invalid operation exceptions are detected, setting both flags

in the Status Word. The TOP register pointer in the Status Word would still

be decremented and the new value in ST(0) would be the INDEFINITE NAN.

A typical use of this instruction would be to "initialize" a data register intended

to be used as an accumulator. Even though a value of zero could also be easily

loaded from memory, this instruction is faster and does not need the use of memory.

As I understand it, fldz can fail with a stack fault if st(7) is in use, therefore:

FFREE (Free a data register)

Syntax: ffree st(x)

This instruction modifies the Tag Word to indicate that the specified 80-bit data register

is now considered as empty. Any data previously in that register becomes unusable.

Any attempt to use that data will result in an Invalid exception being detected and an

INDEFINITE value possibly being generated.

Although any of the 8 data registers can be tagged as free with this instruction,

the only one which can be of any immediate value is the ST(7) register when

all registers are in use and another value must be loaded to the FPU.

If the data in that ST(7) is still valuable, other instructions should be used

to save it before emptying that register.

you may try:

.code

reset_fpu proc

ffree st(7)

fldz

reset_fpu endp

end
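
The FLDZ and FFREE descriptions quoted above can be illustrated with a toy model of the eight-slot x87 register stack. This is a deliberately simplified sketch (no status flags, exceptions assumed masked), and the class and method names are invented here. It shows why slots left tagged as "in use" can turn a later load into NaN, which would be consistent with the DNRM2 symptom, though that link is an assumption and not something this model proves.

```python
import math

class X87Stack:
    """Toy model of the eight-slot x87 register stack and its tag word."""

    def __init__(self):
        self.regs = [0.0] * 8
        self.empty = [True] * 8   # tag word: True means the slot is free
        self.top = 0

    def fldz(self):
        """Push +0.0; pushing onto a non-empty slot yields the indefinite NaN."""
        self.top = (self.top - 1) % 8
        overflow = not self.empty[self.top]
        self.regs[self.top] = math.nan if overflow else 0.0
        self.empty[self.top] = False
        return not overflow

    def ffree(self, i):
        """Tag ST(i) as empty without moving TOP."""
        self.empty[(self.top + i) % 8] = True

    def fninit(self):
        """Tag every slot empty and reset TOP."""
        self.empty = [True] * 8
        self.top = 0

    def st(self, i=0):
        return self.regs[(self.top + i) % 8]

fpu = X87Stack()
for _ in range(8):
    fpu.fldz()                # fill all eight slots, nothing ever pops them
ok = fpu.fldz()               # ninth push hits a slot still tagged as in use
print(ok, fpu.st(0))
fpu.ffree(7)                  # free the slot the next push will land on
ok_after_free = fpu.fldz()
print(ok_after_free, fpu.st(0))
```

In this model, `ffree st(7)` followed by `fldz` succeeds even on a full stack, while `fninit` would clear everything at once.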

Sorry I am not making myself clear. The immediate problem is not which assembler calls to make. The immediate problem is how to properly create a callable procedure in a way that we can use it, and to demonstrate that in a two-file (*.asm and main.c/main.cpp) project that compiles and runs. Once we have that, I can continue to explore different calls and how they influence OpenBLAS.

@mattip, I understand. I will definitely try it, but this may take some time.

I got the impression the _temporary_ bad behaviour of fmod in UCRT should be _healed_ by OpenBLAS: https://github.com/xianyi/OpenBLAS/pull/2882 and not by numpy, as numpy doesn't use the FPU on WIN64. And of course MS should fix this issue with a Windows patch.

The numpy patch in this case would be to ensure usage of a OpenBLAS version during build not older than the upcoming OpenBLAS release.

@matti There is a sanity check in numpy/__init__.py. Is there a simple and reliable test we could add to detect this problem?

a = np.arange(13 * 13, dtype=np.float64).reshape(13, 13)

a = a % 17

va, ve = np.linalg.eig(a)
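
Wrapped into a function, such a check might look like the sketch below. This is not the check that ships in numpy/__init__.py; the function name is hypothetical, and the import guard is only there so the sketch also loads where NumPy is not installed. It assumes the failure shows up either as a LinAlgError or as non-finite eigenvalues.

```python
try:
    import numpy as np
except ImportError:       # lets the sketch load even without NumPy installed
    np = None

def fmod_blas_sanity_check():
    """Return True if the fmod-then-eig sequence looks healthy, False if it
    fails, or None when NumPy is unavailable."""
    if np is None:
        return None
    a = np.arange(13 * 13, dtype=np.float64).reshape(13, 13)
    a = a % 17            # float64 % goes through fmod
    try:
        values, _vectors = np.linalg.eig(a)
    except np.linalg.LinAlgError:
        return False
    return bool(np.all(np.isfinite(values)))

result = fmod_blas_sanity_check()
print(result)
```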

@mattip, thank you for the snippet. But I will need some time to get access to a desktop where I will be able to run these kinds of tests. Right now I'm working most of the time on a laptop with a minimal programming environment where I can install almost nothing.

We have merged an update to OpenBLAS v0.3.12 and a local build using that version + windows update 2004 passes the test suite.

Leaving this open until Windows updates.

Have the pre-release wheels been built with the new OpenBLAS? Happy to do some further tests with downstream projects that were experiencing this bug.

The Windows 3.9 pre-release wheels are currently missing because there were test errors (now fixed). The fixed library will be used in 1.19.3 coming out today or tomorrow.

The /MT option enables static linking on Windows. It may be possible to statically link against libucrt.lib using the 1909 version of the Microsoft SDK. This could act as a workaround for the ucrtbase.dll bug in 2004 and 20H2. It would make the wheels bigger, which is a downside.

I have no idea if this /MT problem (https://stevedower.id.au/blog/building-for-python-3-5-part-two) is still valid, but it should be considered.

I think if NumPy is the only module that builds with MT then it might be OK. Of course, it could be the case that if NumPy is MT then any downstream would also need to be MT, and so this would be a problem.

Any recommended workarounds for this issue? My situation is that I'm on a machine updated to the 2004 update, and have desktop software that uses pip to install numpy/scipy/pandas etc. All of this software is affected by this problem caused by Microsoft's update.

Any recommendations would be appreciated, because using conda to install numpy isn't an option for the software I'm working on.

If you don't need docker you could pin 1.19.3. It passes all tests on my Windows systems.

Thanks. 1.19.3 works with pip on the issue.

The link in the bad BLAS detection is driving a lot of noisy posts on MS's site. I hope this doesn't end up blowing up.

I suppose another alternative is to use a 3rd party fmod on Win64.

I wonder what the response would be if NumPy had an unfixed bug this serious for four months after a release, especially if we did not provide clear instructions for users to downgrade to the previous version. Users can easily pin to 1.19.3, but that will not solve the issue, it will only move it to another package that uses the registers after they have been messed up: maybe scipy, maybe tensorflow.

Another idea for a workaround: see if there are any other functions in ucrt that use the FPU and leave its state in a good condition. If there is one, we could call it after fmod. This would have the advantage over the assembly workaround @mattip wrote that it wouldn't need any special files or build process.

I don't think we should invest more effort into working around this problem. Any additional work we do to mitigate the problem will take up valuable developer time and reduce the pressure on Microsoft to fix this. Also, as mentioned above, any code in other projects that calls fmod and/or uses the registers may hit this problem with no connection to NumPy.

I don't think we should invest more effort into working around this problem. Any additional work we do to mitigate the problem will take up valuable developer time and reduce the pressure on Microsoft to fix this. Also, as mentioned above, any code in other projects that calls fmod and/or uses the registers may hit this problem with no connection to NumPy.

Having seen the reaction to the error message, it is clearly not very helpful. It should, for example, suggest that Windows users who want to use the latest NumPy features use a distribution that ships with MKL rather than the pip wheel. It should also include a link to this thread.

While this is clearly MS's responsibility to fix, causing pain for users to point out someone else's fault is rarely a good way for any projects to maintain good will.

@bashtage Not quite sure what you mean about causing pain. We could remove the link and have users land here instead, but we should warn users that when they run on 2004 the results cannot be trusted, and if you cannot trust the results of a computation you should avoid computation on that platform.

Not quite sure what you mean about causing pain.

The import of NumPy fails spectacularly and there is no workaround provided. It would be most helpful for most users if the list of known workarounds was clearly visible:

(a) Use an MKL-based distribution such as conda or Enthought Deployment Manager, which avoids this bug in linear algebra code

(b) Revert to NumPy 1.19.3 which ships with a version of OpenBLAS that is protected from the bug. This option may not be suitable for users who are running in containers.

(c) Build from source. This option will have low-performing BLAS, but may be suitable in environments where third-party distribution is not permitted and either containers are used or a recent feature or bug fix in NumPy is required.

I don't think it is wrong to call attention to the fmod bug, but then it sends users to that forum as if there is some solution waiting for them.

We could remove the link and have users land here instead, but we should warn users that when they run on 2004 the results cannot be trusted, and if you cannot trust the results of a computation you should avoid computation on that platform.

This isn't an option. Users only need to avoid NumPy + OpenBLAS (ex 0.3.12). They don't need to avoid NumPy+Windows (2004/20H2 (there are now 2 released versions affected)).
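
As a rough illustration of how the two affected releases could be recognised from a version string, here is a small sketch. The build numbers are 19041 for 2004 (as stated in the original report) and 19042 for 20H2. The helper name is made up, and a build number alone says nothing about which servicing updates are installed, so this can only flag a release as potentially affected.

```python
AFFECTED_BUILDS = {19041: "2004", 19042: "20H2"}

def affected_windows_release(version):
    """Map a 'major.minor.build' Windows version string to the name of a
    potentially affected release, or None."""
    parts = version.split(".")
    if len(parts) < 3 or parts[0] != "10":
        return None
    try:
        build = int(parts[2])
    except ValueError:
        return None
    return AFFECTED_BUILDS.get(build)

print(affected_windows_release("10.0.19041"))
```

On Windows, `platform.version()` returns a string of this form, so `affected_windows_release(platform.version())` would be the natural call.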

MKL-based distribution

There was a report of problems with MKL as well.

Revert to NumPy 1.19.3

But that won't fix other potential problems resulting from the use of fmod. The problem is that there is no way to be sure that the results are correct.

Folks should not be using Python on 2004, maybe use WSL instead? I'd also be OK with including an older ucrt with the windows wheels if that was possible, but what about other projects? Is there an easy way to go back to earlier windows versions?

There was a report of problems with MKL as well.

NumPy doesn't have a test that fails on MKL. It seems difficult to presume there is a problem when there are no failures reported. The fmod bug does not manifest itself in MKL's BLAS (presumably because it doesn't use FPU).

But that won't fix other potential problems resulting from the use of fmod. The problem is that there is no way to be sure that the results are correct.

No, but it also won't fix Windows security problems either, or many other bugs. The issue here is very particular.

- fmod is fine in that it produces correct results

- You need to have code written in assembler to encounter this problem since the system compiler won't produce x87 code.

These two suggest to me that the problem is very challenging to reach for almost all code. Only the highest performance code like OpenBLAS, FFT libraries containing hand-written kernels, or MKL are likely to trigger #2.

I also think it is reasonable to release the fix since if OpenBLAS 0.3.12 worked as expected then NumPy would have released and this issue would never have been raised to users.

Folks should not be using Python on 2004, maybe use WSL instead? I'd also be OK with including an older ucrt with the windows wheels if that was possible, but what about other projects? Is there an easy way to go back to earlier windows versions?

I suspect this isn't really an option for many users: corporate users on enterprise desktops, novice students (who should use conda), or anyone who bought a laptop with 2004 or 20H2 and cannot downgrade.

Note that not only is conda's numpy package linked against MKL, it also already ships its own version of ucrtbase.dll that appears to be an older version (10.0.17134.12).

Largely agree with @bashtage here, a failing import with no reasonable recommendation to fix it is a bit hostile to the users (despite the main fault being with microsoft).

@jenshnielsen: Note that not only is conda's numpy package linked against MKL [...]

The builds packaged by conda-forge allow switching out the blas/lapack implementation (out of openblas/mkl/blis/netlib), it doesn't _have_ to be MKL.

Folks should not be using Python on 2004, maybe use WSL instead?

That isn't the default mode of operations for most users.

I'd also be OK with including an older ucrt with the windows wheels if that was possible, but what about other projects?

Other projects aren't our responsibility.

Is there an easy way to go back to earlier windows versions?

If you did a fresh install or cleaned up your disk space, it becomes almost impossible to do that.

Largely agree with @bashtage here, a failing import with no reasonable recommendation to fix it is a bit hostile to the users (despite the main fault being with microsoft).

I agree. We might end up losing a lot of users if we don't fix this.

I'd also be OK with including an older ucrt with the windows wheels if that was possible, but what about other projects?

@charris, this won't help on Windows 10. If you deploy a different ucrt alongside python or numpy it will never be loaded on Windows 10. This method only applies to older Windows versions (Windows 7, 8, 8.1).

@carlkl

The conda-packaged python actually interjects itself into the DLL search path resolution (which - unchanged - would presumably be the reason why you say it can never work on Windows 10), and this is AFAICT also the reason why conda _does_ provide a ucrtbase.dll, as @jenshnielsen writes.

@h-vetinari, Universal CRT deployment clearly states:

_There are two restrictions on local deployment to be aware of:

On Windows 10, the Universal CRT in the system directory is always used, even if an application includes an application-local copy of the Universal CRT. It's true even when the local copy is newer, because the Universal CRT is a core operating system component on Windows 10._

BTW: I tested it myself. No way to load another UCRT than the available UCRT deployed with Windows 10.

I also think it is reasonable to release the fix

by this I think you mean adding PR gh-17547?

Proof of @carlkl's point:

This bug, caused by MS itself, should be called a Heisenbug. It was costly and difficult to find the cause: Windows 2004 UCRT fmod leaves the FPU registers in a botched state under certain circumstances. This can lead to numerical calculation errors much later when using the FPU again. Calculation errors usually do not pop up in user codes if not rigorously tested. This can mean that significant numerical errors remain undetected for a long time. It could hardly be worse.

by this I think you mean adding PR gh-17547?

Sorry, I wrote the wrong thing.

The only change I'm suggesting is that NumPy provides more information in the exception raised on import that suggests ways that a user could get an environment on Windows 2004/H2 that would let them get on with their job/school/hobby.

- WSL

- conda/enthought

- 1.19.3

- Build from source

[This ordering is my preference as to the quality of the solution]

I think it would also make sense to link to this issue, or to an issue that is a bit cleaner that provides a deeper explanation. This second link could also be to some release notes in the docs, rather than a github issue.
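A minimal sketch of what such a message could look like. The helper name `build_import_error_message` and the placeholder link are hypothetical and are not part of NumPy; the workaround list simply follows the ordering given above.

```python
# Hypothetical helper, not part of NumPy. It only assembles the text of an
# error message from the workarounds listed in this thread.
WORKAROUNDS = [
    "Use WSL (Windows Subsystem for Linux)",
    "Use a conda or Enthought distribution",
    "Install NumPy 1.19.3 (pip install numpy==1.19.3)",
    "Build NumPy from source",
]


def build_import_error_message(issue_url):
    """Return a multi-line message listing workarounds in preference order."""
    lines = [
        "The current NumPy installation fails a sanity check because of a bug "
        "in the Windows runtime.",
        "Possible workarounds, in order of preference:",
    ]
    for position, workaround in enumerate(WORKAROUNDS, start=1):
        lines.append(f"  {position}. {workaround}")
    # The link is passed in so it can point at an issue or at release notes.
    lines.append(f"More details: {issue_url}")
    return "\n".join(lines)


if __name__ == "__main__":
    # Placeholder text; substitute the real issue or release-notes link.
    print(build_import_error_message("<link to issue or release notes>"))
```

Keeping the link as a parameter leaves open whether the message points at this issue or at a cleaner write-up in the docs.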

The builds packaged by conda-forge allow switching out the blas/lapack implementation (out of openblas/mkl/blis/netlib), it doesn't _have_ to be MKL.

@h-vetinari Do conda-forge + OpenBLAS builds pass tests on 2004/H2?

I just checked and conda-forge ships OpenBLAS 0.3.12. Does this crash containers then?

Resetting the FPU state with the EMMS instruction, as OpenBLAS-0.3.12 does, should of course help to get the numpy and scipy tests clean. But as @charris stated, CPython could call fmod(0,x) and FPU instructions could then be executed later in a package other than OpenBLAS. In that case the problem is only shifted elsewhere.

The best bet is indeed forcing MS to patch this buggy behaviour. Maybe Steve Dower can help?

This could also be an interesting story for Agner Fog or maybe Bruce Dawson. See, e.g., this related story in his blog:

Everything Old is New Again, and a Compiler Bug

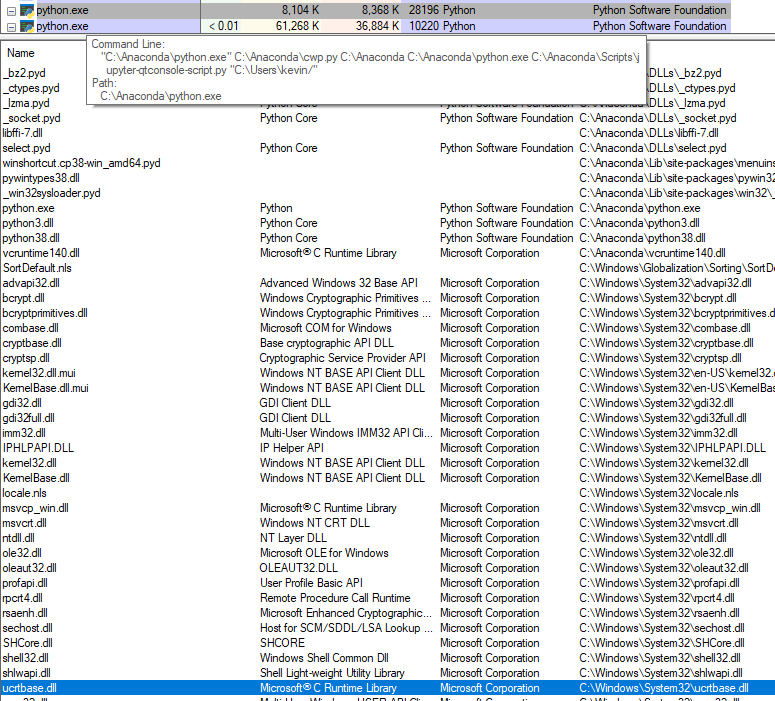

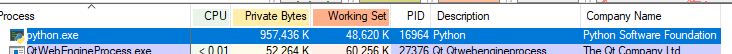

Probably the bug in OpenBlas 0.3.12. Note the Private Bytes column:

This probably defaults to 24 BLAS threads since I have 24 logical CPUs.

This is OpenBLAS 0.3.12 with NumPy 1.19.2 from conda-forge.

Setting $env:OPENBLAS_NUM_THREADS=1 makes for a dramatic reduction

And with $env:OPENBLAS_NUM_THREADS=4 threads:
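The same limit can be applied from inside Python rather than from PowerShell. This is only a sketch: `limit_openblas_threads` is a hypothetical helper, and it assumes the variable is set before numpy is imported for the first time, since OpenBLAS reads it when the library is loaded.

```python
import os


def limit_openblas_threads(count):
    """Set OPENBLAS_NUM_THREADS for this process and return the value set.

    Call this before the first `import numpy`; setting the variable after
    OpenBLAS has been loaded is assumed to have no effect.
    """
    if count < 1:
        raise ValueError("thread count must be at least 1")
    os.environ["OPENBLAS_NUM_THREADS"] = str(count)
    return os.environ["OPENBLAS_NUM_THREADS"]


if __name__ == "__main__":
    limit_openblas_threads(4)
    # Import numpy only after this point so OpenBLAS sees the limit.
    print(os.environ["OPENBLAS_NUM_THREADS"])
```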

@bashtage: could you continue discussing OpenBLAS 0.3.12 in OpenBLAS xianyi/OpenBLAS#2970?

@mattip I was trying to determine if conda + OpenBLAS was a credible alternative. I don't think it solves anything that 1.19.3 solves having seen these results.

@bashtage: Proof of @carlkl's point:

Since the conda-packaged python actively circumvents windows standard DLL resolution, I'm not sure how much of a proof a windows tool is going to be. I've encountered this issue for example with outdated libcrypto.dll in C:\Windows\System32, see e.g. https://github.com/conda-forge/staged-recipes/pull/11452. Long story short, the outdated system library was only picked up by a test failure in the cryptography test suite, and then - using CONDA_DLL_SEARCH_MODIFICATION_ENABLE - the use of the conda-provided openssl could be forced.

@bashtage: @h-vetinari Do conda-forge + OpenBLAS builds pass tests on 2004/H2?

The CI that's building the packages is presumably not on such a current version, and it took me a bit to build it myself. I'm running on a 2004 machine currently, and this is the - very positive - result**:

= 10487 passed, 492 skipped, 19 xfailed, 1 xpassed, 227 warnings in 529.08s (0:08:49) =

@bashtage: I just checked and conda-forge ships OpenBlas 0.3.12. Does this crash containers then?

conda-forge doesn't ship with any fixed openblas version - the blas version can even be "hotswapped" (e.g. between openblas, mkl, blis), so the version is not a biggie. Generally though, it will use the newest packaged version. I cannot verify if the crash reproduces or not within containers.

@bashtage: @mattip I was trying to determine if conda + OpenBLAS was a credible alternative. I don't think it solves anything that 1.19.3 solves having seen these results.

The memory increase was due to a change in openblas 0.3.12, as discussed further in xianyi/OpenBLAS#2970. So far, conda-forge seems a credible alternative to me - at least it's not affected directly by the error.

** I used the current master of https://github.com/conda-forge/numpy-feedstock, which is still on 1.19.2, coupled with openblas 0.3.12. I could also try https://github.com/conda-forge/numpy-feedstock/pull/210 + openblas 0.3.12, if people are interested.

In good news, MS got back on the VS forum and suggested that a fix could be out by end of Jan 2021. Given this update, I think the only question is whether there is any value in updating the error message that is raised when the buggy function is detected.

Since the conda-packaged python actively circumvents windows standard DLL resolution, I'm not sure how much of a proof a windows tool is going to be. I've encountered this issue for example with outdated libcrypto.dll in C:\Windows\System32, see e.g. conda-forge/staged-recipes#11452. Long story short, the outdated system library was only picked up by a test failure in the cryptography test suite, and then - using CONDA_DLL_SEARCH_MODIFICATION_ENABLE - the use of the conda-provided openssl could be forced.

conda create -n cf -c conda-forge python numpy pytest hypothesis blas=*=openblas openblas=0.3.9 -y

conda activate cf

python -c "import numpy as np;np.test('full')"

outputs

C:\Anaconda\envs\cf\lib\site-packages\numpy\linalg\linalg.py:94: LinAlgError

---------------------------------------------------------------------- Captured stdout call ----------------------------------------------------------------------

** On entry to DGEBAL parameter number 3 had an illegal value

** On entry to DGEHRD parameter number 2 had an illegal value

** On entry to DORGHR DORGQR parameter number 2 had an illegal value

** On entry to DHSEQR parameter number 4 had an illegal value

This shows that when the old OpenBLAS is used the buggy function from the current ucrt DLL is being used.

Only openblas=0.3.12 from conda-forge will pass tests, but this is the same version that shipped in NumPy 1.19.3.

What speaks against a new numpy release compiled with a disarmed OpenBLAS-0.3.12? That is, a reduced buffer size and maybe a reduced number of threads, set at compile time for the OpenBLAS used by numpy. This should reduce the memory consumption of OpenBLAS and should help not only in the Docker test case but also users with less well equipped windows boxes.

Here from the https://tinyurl.com/y3dm3h86 bug report from inside Python. First, thank you for providing a version that works on Windows for now (1.19.3).

I understand that 1.19.3 doesn't work on Linux and 1.19.4 doesn't work on Windows (while it has the bug).

Would it be possible to make the latest version on pypi 1.19.3 for Windows, and 1.19.4 for all other platforms? In other words, simply delete https://files.pythonhosted.org/packages/33/26/c448c5203823d744b7e71b81c2b6dcbcd4bff972897ce989b437ee836b2b/numpy-1.19.4-cp36-cp36m-win_amd64.whl (and the corresponding 3.7/3.8/3.9 amd64 versions) entirely for now?

@luciansmith As long as the source is available for 1.19.4 pip will try and use this version unless additional flags are passed. I think most users would only pass additional flags if they knew the issue, but then they could just pin 1.19.3.
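For users who do know about the issue, the per-platform pin can be written once with environment markers instead of extra pip flags. A minimal sketch, assuming a hypothetical helper `numpy_requirements`; the version numbers are the ones discussed in this thread and the output lines could go into a requirements file.

```python
def numpy_requirements(windows_pin="1.19.3", other_pin="1.19.4"):
    """Return requirement lines that pin NumPy differently per platform.

    The `platform_system` environment marker lets pip pick the line that
    matches the machine it is running on.
    """
    return [
        f'numpy=={windows_pin}; platform_system == "Windows"',
        f'numpy=={other_pin}; platform_system != "Windows"',
    ]


if __name__ == "__main__":
    # Print the lines so they can be pasted into a requirements file.
    for line in numpy_requirements():
        print(line)
```

This only helps projects that control their own requirements; a plain `pip install numpy` still resolves to the newest release.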

My preference would be to have a 1.19.5 that uses OpenBLAS 0.3.9 on Linux and 0.3.12 on Windows, but I don't know if this is possible.

Most helpful comment

We have merged an update to OpenBLAS v0.3.12 and a local build using that version + windows update 2004 passes the test suite.