Tensorflow: At Runtime : "Error while reading resource variable softmax/kernel from Container: localhost"

System information

- Have I written custom code (as opposed to using a stock example script provided in TensorFlow): yes, found here (https://github.com/viaboxxsystems/deeplearning-showcase/blob/tensorflow_2.0/flaskApp.py)

- OS Platform and Distribution (e.g., Linux Ubuntu 16.04): MAC OSX 10.14.4

- TensorFlow version (use command below): 2.0.0-alpha0

- Python version: Python 3.6.5

You can collect some of this information using our environment capture

python -c "import tensorflow as tf; print(tf.version.GIT_VERSION, tf.version.VERSION)"

v1.12.0-9492-g2c319fb415 2.0.0-alpha0

Describe the current behavior

When running "flaskApp.py", after loading the model and trying to classify an image using "predict", it fails with the error:

tensorflow.python.framework.errors_impl.FailedPreconditionError: Error while reading resource variable softmax/kernel from Container: localhost. This could mean that the variable was uninitialized. Not found: Resource localhost/softmax/kernel/N10tensorflow3VarE does not exist.

Describe the expected behavior

A result of image classification should be returned.

Code to reproduce the issue

Steps to reproduce:

- git clone https://github.com/viaboxxsystems/deeplearning-showcase.git

- git checkout tensorflow_2.0

- (if needed) pip3 install -r requirements.txt

- export FLASK_APP=flaskApp.py

- start the app with flask run

- using Postman or curl send any image of a dog or cat to the app

OR

curl -X POST \
  http://localhost:5000/net/MobileNet \
  -H 'Postman-Token: ea35b79b-b34d-4be1-a80c-505c104050ec' \
  -H 'cache-control: no-cache' \
  -H 'content-type: multipart/form-data; boundary=----WebKitFormBoundary7MA4YWxkTrZu0gW' \
  -F image=@/Users/haitham.b/Projects/ResearchProjects/CNNs/deeplearning-showcase/data/sample/valid/dogs/dog.1008.jpg

Other info / logs

E0430 13:36:10.374372 123145501933568 app.py:1761] Exception on /net/MobileNet [POST]

Traceback (most recent call last):

File "/Users/haitham.b/venv/tensorflow2.0alpha/lib/python3.6/site-packages/flask/app.py", line 2292, in wsgi_app

response = self.full_dispatch_request()

File "/Users/haitham.b/venv/tensorflow2.0alpha/lib/python3.6/site-packages/flask/app.py", line 1815, in full_dispatch_request

rv = self.handle_user_exception(e)

File "/Users/haitham.b/venv/tensorflow2.0alpha/lib/python3.6/site-packages/flask/app.py", line 1718, in handle_user_exception

reraise(exc_type, exc_value, tb)

File "/Users/haitham.b/venv/tensorflow2.0alpha/lib/python3.6/site-packages/flask/_compat.py", line 35, in reraise

raise value

File "/Users/haitham.b/venv/tensorflow2.0alpha/lib/python3.6/site-packages/flask/app.py", line 1813, in full_dispatch_request

rv = self.dispatch_request()

File "/Users/haitham.b/venv/tensorflow2.0alpha/lib/python3.6/site-packages/flask/app.py", line 1799, in dispatch_request

return self.view_functions[rule.endpoint](**req.view_args)

File "/Users/haitham.b/Projects/Virtualenvs/deeplearning-showcase/flaskApp.py", line 97, in use_net_to_classify_image

prediction, prob = predict(net_name, image)

File "/Users/haitham.b/Projects/Virtualenvs/deeplearning-showcase/flaskApp.py", line 59, in predict

output_probability = net_models[cnn_name].predict(post_processed_input_images)

File "/Users/haitham.b/venv/tensorflow2.0alpha/lib/python3.6/site-packages/tensorflow/python/keras/engine/training.py", line 1167, in predict

callbacks=callbacks)

File "/Users/haitham.b/venv/tensorflow2.0alpha/lib/python3.6/site-packages/tensorflow/python/keras/engine/training_arrays.py", line 352, in model_iteration

batch_outs = f(ins_batch)

File "/Users/haitham.b/venv/tensorflow2.0alpha/lib/python3.6/site-packages/tensorflow/python/keras/backend.py", line 3096, in __call__

run_metadata=self.run_metadata)

File "/Users/haitham.b/venv/tensorflow2.0alpha/lib/python3.6/site-packages/tensorflow/python/client/session.py", line 1440, in __call__

run_metadata_ptr)

File "/Users/haitham.b/venv/tensorflow2.0alpha/lib/python3.6/site-packages/tensorflow/python/framework/errors_impl.py", line 548, in __exit__

c_api.TF_GetCode(self.status.status))

tensorflow.python.framework.errors_impl.FailedPreconditionError: Error while reading resource variable softmax/kernel from Container: localhost. This could mean that the variable was uninitialized. Not found: Resource localhost/softmax/kernel/N10tensorflow3VarE does not exist.

[[{{node softmax/MatMul/ReadVariableOp}}]]

All 26 comments

Any updates on this?

This is not Build/Installation or Bug/Performance issue. Please post this kind of support questions at Stackoverflow. There is a big community to support and learn from your questions. GitHub is mainly for addressing bugs in installation and performance. Thanks!

I had the same issue in TensorFlow 1.13.1, which I resolved by keeping a reference to the session that is used for loading the models and then setting it as the Keras session in each request. I.e. I have done the following:

import tensorflow as tf
from tensorflow.python.keras.backend import set_session
from tensorflow.python.keras.models import load_model

tf_config = some_custom_config  # placeholder: your own session config, or leave it out
sess = tf.Session(config=tf_config)
graph = tf.get_default_graph()

# IMPORTANT: models have to be loaded AFTER SETTING THE SESSION for keras!
# Otherwise, their weights will be unavailable in the threads after the session there has been set
set_session(sess)
model = load_model(...)

and then in each request (i.e. in each thread):

global sess
global graph
with graph.as_default():
    set_session(sess)
    model.predict(...)

You are amazing!!!!! This is the best solution. Can you tell me why did it just work after adding session?

Thank you and you are very welcome :).

As far as I understand, the problem is that TensorFlow graphs and sessions are not thread safe. So by default a new session (which does not contain any previously loaded weights, models, and so on) is created for each thread, i.e. for each request. Saving the global session that contains all your models and setting it as the Keras session in each thread solves the problem.
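
The effect described above can be reproduced without TensorFlow. The sketch below is only an analogy, not TensorFlow's actual implementation: it uses Python's `threading.local` to stand in for a per-thread "current session" slot, and the names `_state`, `set_current`, and `get_current` are made up for illustration. A value bound in the main thread is invisible in a worker thread until the worker binds the shared object itself, which is the role `set_session(sess)` plays in each request.

```python
import threading

# Per-thread slot standing in for "the session Keras will use" (illustrative only).
_state = threading.local()


def set_current(session):
    """Bind a session for the calling thread only."""
    _state.session = session


def get_current():
    """Return the calling thread's session, or None if none was bound."""
    return getattr(_state, "session", None)


# Main thread: create the shared object once and bind it.
shared_session = object()
set_current(shared_session)

results = {}


def handle_request():
    # A new thread starts with an empty slot, like a fresh request thread.
    results["before"] = get_current()
    # Re-binding the shared object makes it visible in this thread.
    set_current(shared_session)
    results["after"] = get_current()


worker = threading.Thread(target=handle_request)
worker.start()
worker.join()

print(results["before"] is None)           # True
print(results["after"] is shared_session)  # True
```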

Closing this out since I understand it to be resolved, but please let me know if I'm mistaken. Thanks!

I have the same issue with TensorFlow version 1.13.1; the above solution works for me.

I am having the same issues and was wondering what the value of some_custom_config was?

In case you want to configure your session (which I had to do), you can pass the config in this parameter. Else just leave it out.
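
As an illustration of what such a config might look like, here is a minimal sketch. The options shown are assumptions chosen for the example, not the values used in the comment above, and `make_session` and `make_default_session` are hypothetical helper names. The `tf.compat.v1` spelling is used so that the same code also works under TF 2.x; on TF 1.x the same classes are available as `tf.ConfigProto` and `tf.Session`.

```python
def make_session(allow_growth=True):
    """Build a session with an explicit config (hypothetical helper)."""
    import tensorflow as tf  # imported lazily so this module loads without TF

    config = tf.compat.v1.ConfigProto(allow_soft_placement=True)
    # Assumption for this example: let GPU memory grow on demand instead of
    # reserving it all up front.
    config.gpu_options.allow_growth = allow_growth
    return tf.compat.v1.Session(config=config)


def make_default_session():
    """Equivalent of leaving the config parameter out entirely."""
    import tensorflow as tf

    return tf.compat.v1.Session()
```

Either session can then be passed to `set_session` before loading the models, as in the snippet earlier in the thread.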

Thank you so much! Everything is running perfectly now.

Thanks for providing the code. I ran into a similar error message while running BERT on Keras. I tried your solution but can't seem to get it to work. Any guidance is most appreciated!

```
FailedPreconditionError Traceback (most recent call last)
     11 validation_data=([test_input_ids, test_input_masks, test_segment_ids], test_labels),
     12 epochs=1,
---> 13 batch_size=32
     14 )

3 frames

/usr/local/lib/python3.6/dist-packages/tensorflow/python/client/session.py in __call__(self, *args, **kwargs)
   1456 ret = tf_session.TF_SessionRunCallable(self._session._session,
   1457 self._handle, args,
-> 1458 run_metadata_ptr)
   1459 if run_metadata:
   1460 proto_data = tf_session.TF_GetBuffer(run_metadata_ptr)

FailedPreconditionError: Error while reading resource variable bert_layer_9_module/bert/encoder/layer_3/output/LayerNorm/gamma from Container: localhost. This could mean that the variable was uninitialized. Not found: Resource localhost/bert_layer_9_module/bert/encoder/layer_3/output/LayerNorm/gamma/N10tensorflow3VarE does not exist.

[[{{node bert_layer_9/bert_layer_9_module_apply_tokens/bert/encoder/layer_3/output/LayerNorm/batchnorm/mul/ReadVariableOp}}]]
```

I have a similar error when using Elmo embeddings from tf-hub inside a custom keras layer.

tensorflow.python.framework.errors_impl.FailedPreconditionError: 2 root error(s) found.

(0) Failed precondition: Error while reading resource variable ElmoEmbeddingLayer_module/bilm/CNN_high_0/b_carry from Container: localhost. This could mean that the variable was uninitialized. Not found: Resource localhost/ElmoEmbeddingLayer_module/bilm/CNN_high_0/b_carry/class tensorflow::Var does not exist.

[[{{node ElmoEmbeddingLayer/ElmoEmbeddingLayer_module_apply_default/bilm/add/ReadVariableOp}}]]

(1) Failed precondition: Error while reading resource variable ElmoEmbeddingLayer_module/bilm/CNN_high_0/b_carry from Container: localhost. This could mean that the variable was uninitialized. Not found: Resource localhost/ElmoEmbeddingLayer_module/bilm/CNN_high_0/b_carry/class tensorflow::Var does not exist.

[[{{node ElmoEmbeddingLayer/ElmoEmbeddingLayer_module_apply_default/bilm/add/ReadVariableOp}}]]

[[metrics/acc/Identity/_199]]

0 successful operations.

0 derived errors ignored.

Thank you so much for this.

In my case I did it a bit differently, in case it helps anyone:

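# assumes `import tensorflow as tf` and that `k` is the Keras module in use
# (for example `from tensorflow import keras as k`; the import was not shown)

# on thread 1
session = tf.Session(graph=tf.Graph())
with session.graph.as_default():
    k.backend.set_session(session)
    model = k.models.load_model(filepath)

# on thread 2
with session.graph.as_default():
    k.backend.set_session(session)
    model.predict(x, **kwargs)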

The novelty here is allowing for multiple models to be loaded (once) and used in multiple threads.

By default, the "default" Session and the "default" Graph are used while loading a model.

But here you create new ones.

Also note the Graph is stored in the Session object, which is a bit more convenient.
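
One way to package this per-model approach is a small registry that loads each model once into its own graph and session and re-binds them on every call. The sketch below is an illustration under stated assumptions, not code from this thread: `ModelRegistry`, `load_keras_model`, and the injected `loader` and `set_session` callables are hypothetical names, and the TensorFlow calls use the `tf.compat.v1` spelling. On TF 2.x this probably also requires graph mode (for example `tf.compat.v1.disable_eager_execution()`), which has not been verified here.

```python
import threading


def load_keras_model(filepath):
    """Load one model into a fresh graph and session (TF1-style API)."""
    import tensorflow as tf  # lazy import; only needed when actually loading

    session = tf.compat.v1.Session(graph=tf.Graph())
    with session.graph.as_default():
        tf.compat.v1.keras.backend.set_session(session)
        model = tf.keras.models.load_model(filepath)
    return session, model


class ModelRegistry:
    """Loads each named model once and runs predictions from any thread."""

    def __init__(self, loader=load_keras_model, set_session=None):
        self._loader = loader            # returns (session, model) for a path
        self._set_session = set_session  # injectable so it can be faked in tests
        self._entries = {}
        self._lock = threading.Lock()

    def _bind(self, session):
        # Make `session` the Keras session for the calling thread.
        if self._set_session is not None:
            self._set_session(session)
        else:
            import tensorflow as tf

            tf.compat.v1.keras.backend.set_session(session)

    def load(self, name, filepath):
        # The lock ensures a model is loaded only once even under concurrency.
        with self._lock:
            if name not in self._entries:
                self._entries[name] = self._loader(filepath)

    def predict(self, name, x, **kwargs):
        session, model = self._entries[name]
        # Re-enter the model's own graph and session in the calling thread.
        with session.graph.as_default():
            self._bind(session)
            return model.predict(x, **kwargs)
```

In a Flask app the registry would be created and filled once at startup, and each request handler would only call `predict`.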

Thank you for this answer

I am also facing this problem when using flask and multithreading, @eliadl Your solution worked for me.

Thanks :smiley:

I am also facing this issue

tensorflow.python.framework.errors_impl.FailedPreconditionError: Error while reading resource variable dense_6/kernel from Container: localhost. This could mean that the variable was uninitialized. Not found: Resource localhost/dense_6/kernel/class tensorflow::Var does not exist.

[[{{node dense_6/MatMul/ReadVariableOp}}]]

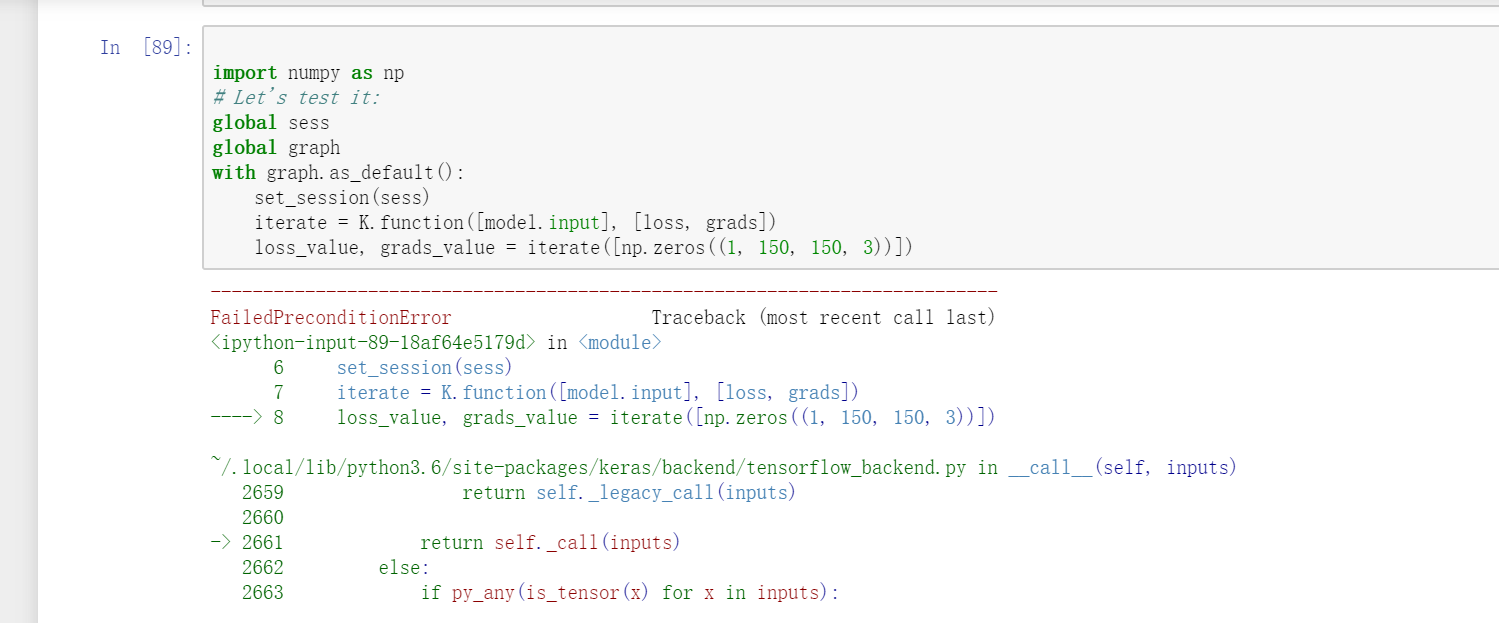

Sorry, I am also facing this issue, but your answer doesn't fix my problem; maybe my situation is different. In my case, it happens when I use VGG16 in Keras and want to call the iterate function:

loss_value, grads_value = iterate([np.zeros((1, 150, 150, 3))])

But a similar error occurred:

FailedPreconditionError: Error while reading resource variable block1_conv1_12/bias from Container: localhost. This could mean that the variable was uninitialized. Not found: Resource localhost/block1_conv1_12/bias/N10tensorflow3VarE does not exist.

[[{{node block1_conv1_12/BiasAdd/ReadVariableOp}}]]

In https://github.com/JarvisUSTC/deep-learning-with-python-notebooks/blob/master/5.4-visualizing-what-convnets-learn.ipynb, the problem did not occur.

# assumption: the next two imports were not shown in the original post
import tensorflow as tf
from tensorflow.python.keras import backend as K
from tensorflow.python.keras.backend import set_session
from tensorflow.python.keras.models import load_model
from tensorflow.python.keras.applications import VGG16

sess = tf.Session()
graph = tf.get_default_graph()
set_session(sess)

#model = load_model('vgg16_weights_tf_dim_ordering_tf_kernels_notop.h5')
model = VGG16(weights='imagenet',include_top=False)

layer_name = 'block3_conv1'
filter_index = 0

layer_output = model.get_layer(layer_name).output
loss = K.mean(layer_output[:, :, :, filter_index])
grads = K.gradients(loss, model.input)[0]
grads /= (K.sqrt(K.mean(K.square(grads))) + 1e-5)

import numpy as np

global sess
global graph
with graph.as_default():
    set_session(sess)
    iterate = K.function([model.input], [loss, grads])
    loss_value, grads_value = iterate([np.zeros((1, 150, 150, 3))])

This solution worked for me as well.. Thanks @eliadl

In TF 2.x, there is no Session(). How do I fix it? The same problem is happening in my TF 2.x code.

@SungmanHong instead of tf.Session try to use tf.compat.v1.Session.

To create a session in TF 2.x:

tf.compat.v1.Session()

To get the Keras backend session (keras.backend.get_session) in TF 2.x:

tf.compat.v1.keras.backend.get_session()
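
If the same code has to run under both TF 1.x and TF 2.x, the lookup can be wrapped in a small shim. This is a sketch under assumptions: `v1_api` is a hypothetical helper, and the `SimpleNamespace` objects below are stand-ins for the real `tensorflow` module so that the example runs without TensorFlow installed.

```python
from types import SimpleNamespace


def v1_api(tf_module):
    """Return the namespace that exposes TF1-style names such as Session."""
    compat = getattr(tf_module, "compat", None)
    v1 = getattr(compat, "v1", None)
    # Prefer tf.compat.v1 when it exists; otherwise fall back to the module itself.
    return v1 if v1 is not None else tf_module


# Stand-ins for the real module (illustrative only).
fake_tf1 = SimpleNamespace(Session="tf.Session")
fake_tf2 = SimpleNamespace(
    compat=SimpleNamespace(v1=SimpleNamespace(Session="tf.compat.v1.Session"))
)

print(v1_api(fake_tf1).Session)  # tf.Session
print(v1_api(fake_tf2).Session)  # tf.compat.v1.Session
```

With the real library this would be used as `tf1 = v1_api(tf)`, followed by `sess = tf1.Session()` and `tf1.keras.backend.set_session(sess)`.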

This worked for me, thanks.

Man, you are a genius and awesome. You just saved my project. Thank you so much!

I have used the given solution, but it still didn't work for me. I'm getting this error:

```
The graph tensor has name: anchors/Variable:0
/usr/local/lib/python3.6/dist-packages/keras/engine/training_generator.py:49: UserWarning: Using a generator with use_multiprocessing=True and multiple workers may duplicate your data. Please consider using the keras.utils.Sequence class.
  UserWarning('Using a generator with use_multiprocessing=True'
Epoch 1/4

FailedPreconditionError Traceback (most recent call last)
      4 learning_rate=config.LEARNING_RATE,
      5 epochs=4,
----> 6 layers='heads')

6 frames

/usr/local/lib/python3.6/dist-packages/mrcnn/model.py in train(self, train_dataset, val_dataset, learning_rate, epochs, layers, augmentation)
   2350 max_queue_size=100,
   2351 workers=workers,
-> 2352 use_multiprocessing=True,
   2353 )
   2354 self.epoch = max(self.epoch, epochs)

/usr/local/lib/python3.6/dist-packages/keras/legacy/interfaces.py in wrapper(*args, **kwargs)
     89 warnings.warn('Update your ' + object_name + ' call to the ' +
     90 'Keras 2 API: ' + signature, stacklevel=2)
---> 91 return func(*args, **kwargs)
     92 wrapper._original_function = func
     93 return wrapper

/usr/local/lib/python3.6/dist-packages/keras/engine/training.py in fit_generator(self, generator, steps_per_epoch, epochs, verbose, callbacks, validation_data, validation_steps, validation_freq, class_weight, max_queue_size, workers, use_multiprocessing, shuffle, initial_epoch)
   1730 use_multiprocessing=use_multiprocessing,
   1731 shuffle=shuffle,
-> 1732 initial_epoch=initial_epoch)
   1733
   1734 @interfaces.legacy_generator_methods_support

/usr/local/lib/python3.6/dist-packages/keras/engine/training_generator.py in fit_generator(model, generator, steps_per_epoch, epochs, verbose, callbacks, validation_data, validation_steps, validation_freq, class_weight, max_queue_size, workers, use_multiprocessing, shuffle, initial_epoch)
    218 sample_weight=sample_weight,
    219 class_weight=class_weight,
--> 220 reset_metrics=False)
    221
    222 outs = to_list(outs)

/usr/local/lib/python3.6/dist-packages/keras/engine/training.py in train_on_batch(self, x, y, sample_weight, class_weight, reset_metrics)
   1512 ins = x + y + sample_weights
   1513 self._make_train_function()
-> 1514 outputs = self.train_function(ins)
   1515
   1516 if reset_metrics:

/usr/local/lib/python3.6/dist-packages/tensorflow/python/keras/backend.py in __call__(self, inputs)
   3630
   3631 fetched = self._callable_fn(*array_vals,
-> 3632 run_metadata=self.run_metadata)
   3633 self._call_fetch_callbacks(fetched[-len(self._fetches):])
   3634 output_structure = nest.pack_sequence_as(

/usr/local/lib/python3.6/dist-packages/tensorflow/python/client/session.py in __call__(self, *args, **kwargs)
   1470 ret = tf_session.TF_SessionRunCallable(self._session._session,
   1471 self._handle, args,
-> 1472 run_metadata_ptr)
   1473 if run_metadata:
   1474 proto_data = tf_session.TF_GetBuffer(run_metadata_ptr)

FailedPreconditionError: Error while reading resource variable anchors/Variable from Container: localhost. This could mean that the variable was uninitialized. Not found: Resource localhost/anchors/Variable/N10tensorflow3VarE does not exist.

[[{{node ROI/ReadVariableOp}}]]
```

PLEASE HELP!!!

Please help, it's showing that some_custom_config is not defined.

Works for me, thanks!!
