PyTorch: Broken `Type Hints` in PyTorch 0.4.0, related to IDEs (e.g. PyCharm)

Issue description

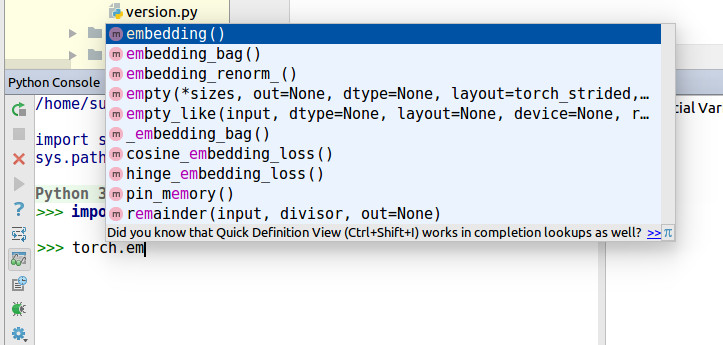

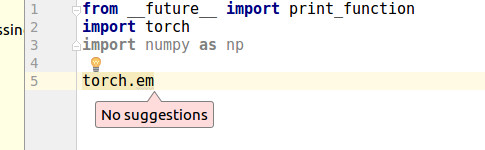

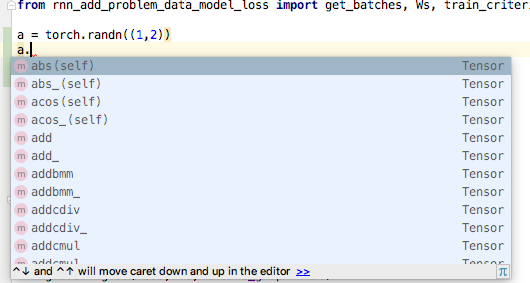

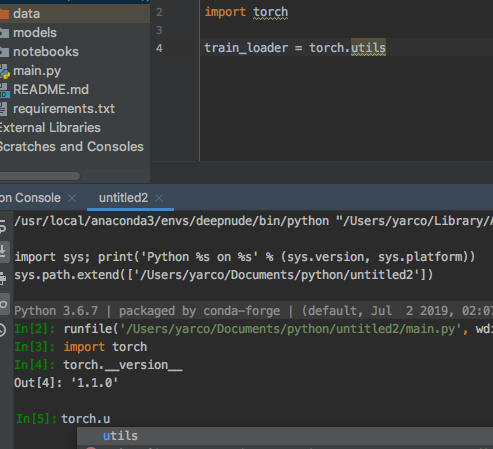

Recently, I noticed that PyCharm cannot auto-complete torch.zeros.

PyCharm says

Cannot find reference 'zeros' in '__init__.py'

After digging into it for a while, I found that the Type Hints are broken.

From these changes,

https://github.com/pytorch/pytorch/commit/30ec06c140b0428d591e2f5007bc8046d1bdf7c4

https://github.com/pytorch/pytorch/wiki/Breaking-Changes-from-Variable-and-Tensor-merge

Especially, https://github.com/pytorch/pytorch/commit/30ec06c140b0428d591e2f5007bc8046d1bdf7c4#diff-14258fce7c17ccb97b488e64373b0803R308 @colesbury

This line prevents many IDEs from resolving Type Hints.

Originally, torch.zeros was in torch/_C/__init__.py

but it has moved to torch/_C/_VariableFunctions

Code example

https://gist.github.com/kimdwkimdw/50c18b5cf72c69c2d01bb4146c8a2b5c

This is a proof of concept for this bug.

If you look at main.py:

import T_B as torch

torch.p2() # IDE can detect `p2`

torch.p1 # IDE cannot detect `p1`

System Info

- PyTorch or Caffe2:

- How you installed PyTorch (conda, pip, source): Any case for conda, pip, source.

- Build command you used (if compiling from source):

- OS: Any

- PyTorch version: 0.4.0

- Python version: 3.6.5

- CUDA/cuDNN version: .

- GPU models and configuration: .

- GCC version (if compiling from source): .

- CMake version: .

- Versions of any other relevant libraries:.

All 106 comments

Is this related to #4568?

The same problem, pycharm is basically useless when using pytorch0.4. Almost everything is underlined either because "cannot find reference" or "is not callable".

There's no auto-completion, quick definition & quick documentation for torch.tensor, torch.max and loss.backward.

- OS: Linux (64-bit Fedora 27 with Gnome).

- PyTorch version: 0.4.0.

- How you installed PyTorch (conda, pip, source): pip3.

- Python version: 3.6.5.

- I use CPU (No CUDA).

If any of you have suggestions for how to fix this, please let us know!

It sounds like PyCharm's searching for functions could be improved, but I'm not sure what can be done from the PyTorch side. Using torch.tensor as an example, help(torch.tensor) gets the documentation while dir(torch) shows "tensor" as a member.

@zou3519

I think the code below is not good style.

for name in dir(_C._VariableFunctions):
    globals()[name] = getattr(_C._VariableFunctions, name)

from https://github.com/pytorch/pytorch/commit/30ec06c140b0428d591e2f5007bc8046d1bdf7c4

Using globals()[name] is not Pythonic. It is a problem not only for PyCharm but also for Python code style in general.

I don't think _VariableFunctions needs to be a class.

I'm trying to make a PR for this issue. Would you review it? @zou3519
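To illustrate why names injected through globals() are invisible to tools that do not execute code, here is a minimal sketch. It uses a hypothetical stand-in class `_FakeFunctions` (not PyTorch's real `_VariableFunctions`), and the standard `ast` module serves only as a rough approximation of a static analyzer; PyCharm's real resolver is more elaborate.

```python
import ast

# Hypothetical module source that mimics the pattern discussed in this thread.
MODULE_SOURCE = '''
class _FakeFunctions:
    @staticmethod
    def zeros(n):
        return [0] * n

def ones(n):
    return [1] * n

for name in dir(_FakeFunctions):
    if not name.startswith("_"):
        globals()[name] = getattr(_FakeFunctions, name)
'''


def static_names(source):
    """Top-level names that can be found without executing the code."""
    names = set()
    for node in ast.parse(source).body:
        if isinstance(node, (ast.FunctionDef, ast.ClassDef)):
            names.add(node.name)
        elif isinstance(node, ast.Assign):
            for target in node.targets:
                if isinstance(target, ast.Name):
                    names.add(target.id)
    return names


def runtime_names(source):
    """Names that exist in the namespace after actually running the code."""
    namespace = {}
    exec(source, namespace)
    return {n for n in namespace if not n.startswith("__")}


print("zeros" in static_names(MODULE_SOURCE))   # False: only added dynamically
print("zeros" in runtime_names(MODULE_SOURCE))  # True: present after execution
```

The explicitly defined `ones` is visible both ways, while `zeros` only appears once the loop has run, which matches the difference between the editor and the console reported here.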

@kimdwkimdw I don't know what's going on with the global() there or what would make it better, but yes, please submit a PR and I will look at it :)

It sounds like PyCharm's searching for functions could be improved, but I'm not sure what can be done from the PyTorch side.

Just wanted to note that I have never run into this issue with any other package in PyCharm. That's not to say PyCharm shouldn't be doing things differently, but this issue does seem to be an uncommon case.

I'm mainly working on these files.

tools/autograd/gen_autograd.py

tools/autograd/templates/python_torch_functions.cpp

torch/lib/include/torch/csrc/Module.cpp

I'm trying to figure out what changed in how the C extensions are generated.

I'll make a PR soon.

Any updates?

I made a working example in my fork.

If anyone wants to use auto-complete right away, try the steps below.

1. git clone

git clone -b pytorch-interface https://github.com/kimdwkimdw/pytorch.git

2. Install PyTorch

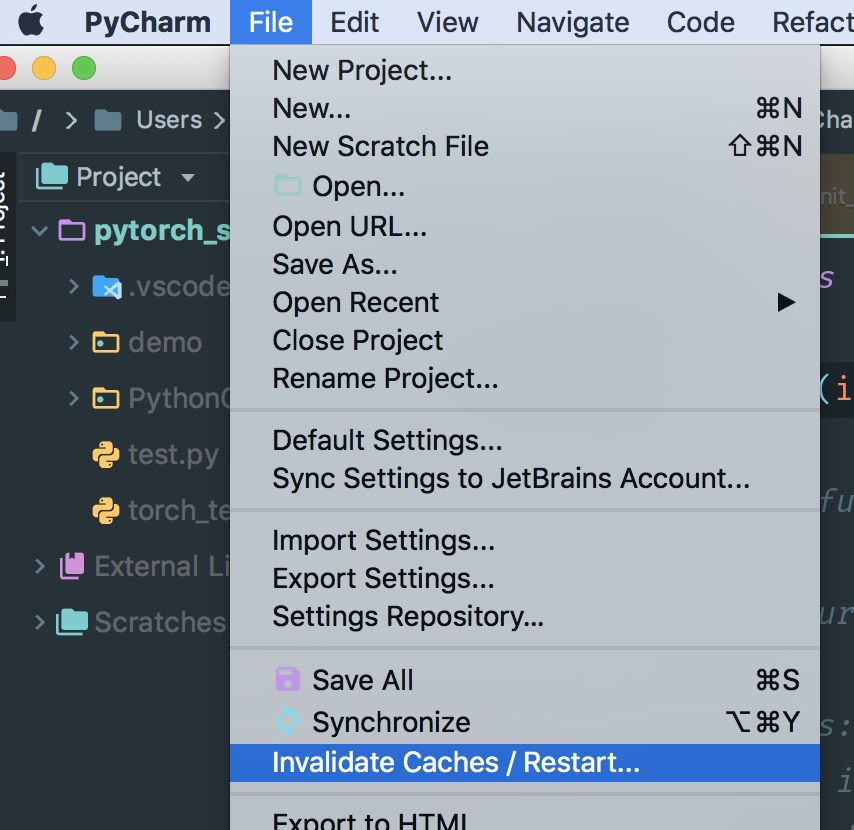

3. Clear cache in PyCharm

- Caches are usually located in /Users/USERNAME/Library/Caches/PyCharm*/python_stubs/

- Check your project interpreter in PyCharm

Before opening a pull request, I need to add more commits to organize the results.

The install failed when I followed the description above.

Is there a simpler way to install?

I'm having the same problem. I've always loved pytorch and pycharm. Such a shame they don't work along well : (

Looking forward to updates.

This is really strange: when I use the same code in the Python console in PyCharm, it works normally, but not in the editor. O__O

Going to bump the priority on this because a lot of users have been requesting this... we'll try our best to look into it more.

A lot of users are requesting.... I should make a pull request this weekend.

I merged my code with current master branch.

Type Hints are alive now.

MACOSX_DEPLOYMENT_TARGET=10.9 CC=clang CXX=clang++ python setup.py install

Check out the PR: https://github.com/pytorch/pytorch/pull/8845

cc. @zou3519

@kimdwkimdw Should I reinstall PyTorch from source? Is there an easy way to update it on Windows?

@541435721

Yes, you should. Check out this page: https://github.com/pytorch/pytorch#install-pytorch

I don't know of a simpler way to build it.

It doesn't seem to change anything for Pycharm (2018.1, with rebuilt caches).

You should check out @kimdwkimdw 's pull request, not pytorch master. The pull request has not been merged into pytorch master yet.

This is the PR: https://github.com/pytorch/pytorch/pull/8845.

Yes, this is what I did:

git remote add kimdwkimdw https://github.com/kimdwkimdw/pytorch.git

git fetch kimdwkimdw

git checkout --track kimdwkimdw/interface-0

git submodule update --init

CC=gcc-5 CXX=g++-5 CFLAGS="-march=native -O2" CXXFLAGS="-march=native -O2" python setup.py build

python setup.py install --optimize=1 --skip-build

@nlgranger You should delete the Python stubs manually. They cannot be deleted with 'Invalidate Caches'.

When you set up a project, PyCharm initializes its Python stub files.

After you delete the Python stub directory, restart PyCharm.

PyCharm will regenerate its Python stubs.

Doesn't seem to change anything, but it might be my setup. Maybe we can wait for someone else to try?

You should find the Python stub directory. When you click on torch, you can find the stub directory.

@kimdwkimdw I have reinstalled and deleted the cache, but no changes.

You cannot delete the PyCharm cache through 'Invalidate Caches'.

On macOS, you should manually delete the folders in ~/Library/Caches/PyCharm2018.1/python_stubs/.

There is another option. If you make a new project and change your interpreter setting, PyCharm will generate its Python stubs again.

@541435721 @nlgranger
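The manual cleanup described above could be scripted roughly as follows. This is only a sketch: the glob patterns are assumptions based on the paths quoted in this thread, they differ between PyCharm versions and operating systems, and the helper names `find_stub_dirs` and `clear_stub_dirs` are made up for illustration. PyCharm should be closed before deleting anything.

```python
import shutil
from pathlib import Path

# Patterns relative to the home directory, taken from paths mentioned in this
# thread. They are assumptions and will not match every PyCharm installation.
STUB_PATTERNS = (
    "Library/Caches/PyCharm*/python_stubs",  # macOS layout
    ".PyCharm*/system/python_stubs",         # Linux layout
)


def find_stub_dirs(home):
    """Return the python_stubs directories found under the given home directory."""
    home = Path(home)
    found = []
    for pattern in STUB_PATTERNS:
        found.extend(p for p in home.glob(pattern) if p.is_dir())
    return sorted(found)


def clear_stub_dirs(home, dry_run=True):
    """List the stub directories and delete them only when dry_run is False."""
    dirs = find_stub_dirs(home)
    if not dry_run:
        for directory in dirs:
            shutil.rmtree(directory)
    return dirs


if __name__ == "__main__":
    # Dry run by default: prints what would be removed without deleting it.
    for directory in clear_stub_dirs(Path.home(), dry_run=True):
        print("would remove", directory)
```

Running it once with the default dry run shows which directories would be affected before anything is removed.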

This is exactly what I have done (invalidate cache, stop pycharm, rm .PyCharm2018.1/system/python_stubs/* -rf).

BTW I'm using python 3 in case that matters (I see some python2 tests in the PR).

@kimdwkimdw

I followed the guidance on Windows 10, but I'm still facing this problem.

@kimdwkimdw I can do that! thank you!

As others have been having trouble, I just wanted to note that @kimdwkimdw 's fix is working for me. This is with Python 3 on macOS. Using:

source path/to/my/venv/bin/activate

git clone https://github.com/kimdwkimdw/pytorch.git

cd pytorch

git checkout interface-0

git submodule update --init

MACOSX_DEPLOYMENT_TARGET=10.13 CC=clang CXX=clang++ python setup.py install

I did delete my stubs manually, though I don't know if that was needed. In any case, it at least works on my system.

However, I've found that both torch.float32 (and other datatypes) and torch.backends.cudnn are still unresolved by PyCharm (at least in my case).

@shianiawhite Good point. Thanks. I think I should add more updates to Pull Requests.

@zou3519

Didn't work for me, even though I successfully built @kimdwkimdw 's PR and manually deleted python_stub in ~/.PyCharmCE2018.1/system.

Sorry to ask again but any news about this?

Would it help opening an issue here at jetbrains https://youtrack.jetbrains.com/issues/PY?q=pytorch ?

I'm having the same issue in Ubuntu 16.04 and PyCharm 2017.3

Strange thing is when I run in python console, it shows auto-complete properly.

But when I run the same in the editor it doesn't work.

I'm using Python 3.6.6 and PyTorch 0.4.1. I installed it using pip and CUDA 9.2.

I have the same issue on Windows 7 with PyCharm 2018, and on Windows 10 with PyCharm 2018, VS2017, and Spyder.

I tried both pytorch0.4.0 and pytorch0.4.1, and neither auto-completes.

Only IPython shows auto-complete properly.

Please help me.

I opened an issue in PyCharm youtrack. Maybe they can help too.

I have tried VS2017 and PyCharm2018.2 with pytorch0.4.1 on Windows 10 and autocompletion doesn't work for me.

PyTorch-0.4.1 installed by pip with PyCharm-2018.2, still have same problems.

It would be good if people could vote here, so the fix gets higher priority: https://youtrack.jetbrains.com/issue/PY-31259

Hello!

I'm the assignee of PY-31259.

PyCharm does not run any user code while doing static analysis (the exception is the console, where the environment can be inspected easily). So most dynamic ways of declaring attributes are not discoverable.

@sproshev thank you for your reply. Could you shed some light on why @kimdwkimdw 's pull request https://github.com/pytorch/pytorch/pull/8845 works?

For context, pytorch does some dynamic assigning of attributes when you import it. @kimdwkimdw's pull request keeps that, but does it in a different way that pycharm is able to follow.

I think the most reasonable way to fix this (at least for Python 3) is to generate a .pyi stub.

A quick test seems to indicate that would work well.

I'm not a PyCharm user myself, but if there are enough people who will be extremely happy, I could see if we can generate one from the native_functions.yaml and friends.

@t-vi I have no idea what you're talking about, but given that this has been just dragging on and on, please generate the .pyi! :D

So the main module seems easy enough:

For some reason or other, just having

class Tensor: ...

@overload

def randn(size: Tuple[int, ...], *, out: Optional[torch.Tensor], dtype: dtype=None, layout: layout=torch.strided, device: Union[device, str, None]=None, requires_grad: bool=False) -> Tensor: ...

in the __init__.pyi does not seem to allow PyCharm to infer that after a = randn((1,2)), a is of class torch.Tensor. :(

(I actually have annotations in the class, too, but they are only used if I spell out the annotation a : torch.Tensor = ...)

I posted the pyi here, if you want to try: https://gist.github.com/t-vi/0d0ae013072f96f50fa11fbc2287e33b

Again, if you have an idea why PyCharm doesn't appear to identify the return type, I would be most grateful if you shared it.

@t-vi The solution works for VS code as well. Thank you!

Thank you, ZongyueZhao, for your feedback. I didn't try with VS code yet, but if the pyi works including return types, I'll submit a patch for its generation.

Thanks, that __init__ worked for me! I'm using conda env called "main" so what I did was:

pushd /Users/yaroslavvb/anaconda3/envs/main/lib/python3.6/site-packages/torch

rm __init__.py

wget https://gist.githubusercontent.com/t-vi/0d0ae013072f96f50fa11fbc2287e33b/raw/e0e3878fa612c5a4557ec76c011fd5f9453ff0e8/__init__.py

popd

I didn't need to regenerate cache

Regarding why it doesn't recognize the Tensor return type, it has to do with syntax errors in the file. Open file in PyCharm, and it shows a few of them

Once I deleted lines with errors, I got autocompletion

Another thing I noticed is that Tensor class member hints return -> Tensor, which is a circular reference, so it's not recognized by PyCharm either. The solution is to do -> "Tensor" instead of -> Tensor for methods of Tensor class (https://www.python.org/dev/peps/pep-0484/#forward-references)
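The forward-reference point can be shown with a small sketch. The `Tensor` class below is a toy stand-in, not torch's class, and the example is a regular module rather than a .pyi stub. On the Python versions discussed in this thread, the class name is not yet bound while its own body is being executed, so the annotation is written as a string and resolved later.

```python
from typing import get_type_hints


class Tensor:
    """Toy stand-in for torch.Tensor, for illustration only."""

    # The quoted "Tensor" is a PEP 484 forward reference to the enclosing class.
    def exp(self) -> "Tensor":
        return Tensor()

    def clone(self) -> "Tensor":
        return Tensor()


def randn(*size: int) -> Tensor:
    # Outside the class body the name is already bound, so no quotes are needed.
    return Tensor()


# get_type_hints resolves the string annotation back to the real class.
print(get_type_hints(Tensor.exp)["return"] is Tensor)  # True
```

Tools that read annotations can then chain completions such as `randn(2).exp().clone()` without running any of the methods.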

Thanks @yaroslavvb, that was exactly what I had been missing!

Bump for visibility. It's actually a huge deal for PyCharm users. I was evaluating PyTorch as an alternative to our tf/keras codebase and this issue is a blocker for us.

I use pytorch 0.4.1.

Is there a concrete solution for this? I use pip to install pytorch. There are so many pytorch built-in functions torch.xxx that are unrecognizable in pycharm.

If it can't be fixed, can you suggest an alternative IDE that suggests all pytorch functions and helps with navigation within the framework (like Ctrl+B to jump to the definition of a pytorch function)?

Thank you.

Anyone care to do a PR (@t-vi)? I'm using PyTorch nightlies now and it's a bit of a drag to do this every time I upgrade...

I'm actively discussing this with the core devs to have a good solution of when and how to generate the PYI during the build process. The hard part is to merge the information from the python-defined bits with those from the _C bits. We're trying to figure out a good solution and I hope to have a PR soonish, but it will probably need a round of iteration.

If you are looking for a way to set my priorities, don't hesitate to contact me by mail.

Replacing __init__.py is not a good solution. In @t-vi 's gist, for example, when using

a = torch.cat([a,b], dim=-1)

PyCharm gives a warning that parameter "out" is missing, but the "out" parameter is optional.

In another case,

x = torch.empty(10, 2)

This line of code is correct because the method can accept (*sizes, ...), but PyCharm warns about invalid parameters as well. With so many strange warnings I finally choose to ignore "torch.*" in the code inspection settings...

@hitvoice Thank you for pointing these out.

The gist is only a very rough first stab at getting a proper pyi, so your feedback is very much appreciated! I hope you'll be able to turn the inspection back on with the next iteration.

I think the Optional[out] should be out : Optional[Tensor]=None, right? That is easy to fix.

For empty, it is a bit more complicated as it likely means that we need to split declarations with only one list argument to also accept varargs (I once stared at the C code parsing the python args for too long), but we're certainly committed to having great type hints.
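Both fixes mentioned here can be sketched with toy signatures. The functions below are hypothetical simplifications written as ordinary Python so they can be run; the real torch.cat and torch.empty take more keyword arguments, and the generated stub may look different.

```python
import inspect
from typing import Optional, Sequence, overload


class Tensor:
    """Toy stand-in for torch.Tensor that only records a shape."""

    def __init__(self, shape):
        self.shape = tuple(shape)


# Annotated as Optional but without a default: callers must still pass "out",
# which is what triggers the "parameter unfilled" warning.
def cat_required(tensors: Sequence[Tensor], dim: int = 0, *, out: Optional[Tensor]) -> Tensor: ...


# With "= None" the parameter really is optional.
def cat_optional(tensors: Sequence[Tensor], dim: int = 0, *, out: Optional[Tensor] = None) -> Tensor: ...


def is_required(func, name):
    """True if the named parameter has no default value."""
    return inspect.signature(func).parameters[name].default is inspect.Parameter.empty


# Two overloads describe the two accepted call styles for the size argument.
@overload
def empty(size: Sequence[int]) -> Tensor: ...
@overload
def empty(*size: int) -> Tensor: ...
def empty(*size):
    # Accept both empty((10, 2)) and empty(10, 2).
    if len(size) == 1 and not isinstance(size[0], int):
        size = tuple(size[0])
    return Tensor(size)


print(is_required(cat_required, "out"))  # True
print(is_required(cat_optional, "out"))  # False
print(empty(10, 2).shape == empty((10, 2)).shape)  # True
```

In a stub file only the `@overload` declarations would appear; the final implementation is included here so the sketch can be executed.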

Hi,

I updated the __init__.pyi and would appreciate if you could give it a spin.

- I'm much happier regarding the generation method, so even though that is behind the scenes, I think there is good progress towards having a PR.

- I also generate hints for some python-defined functions (btrifact, einsum), but I didn't add all of them.

- I think I fixed (in the generation code) the deficiencies that @yaroslavvb and @hitvoice pointed out.

If you think this roughly works, I'll look for ways to call the code (it needs to be after the build) and we have (almost) a PR.

@t-vi Looks good. So far it's working nicely for me.

Just curious, what is the reason for generating the python-defined function hints (btrifact, einsum)? I'm using the nightly build and those seem to be working for me without the hints.

@elliotwaite In my (short) experimentation, I didn't seem to get autocompletion on the results unless I add type hints on them, i.e. on x = einsum('ii', a); x. and now get the tensor methods for x. Also, I'm not sure how having a pyi affects how stuff not included in the pyi is treated. As far as I understand (which is not very good), that is a source of subtleties.

@t-vi Ah, I see. I was only testing between including the pyi file entirely vs not. You're right, if I include the pyi file and only comment out the einsum hint line, it breaks the autocomplete for me too. Thanks for explaining.

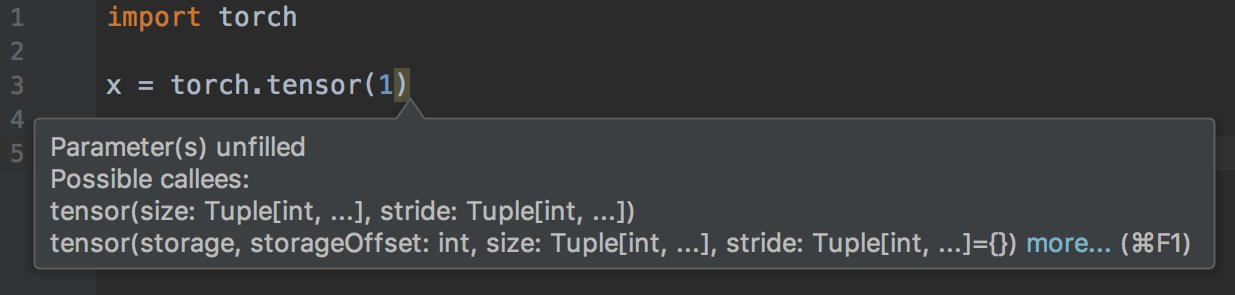

@t-vi I'm getting a "Parameter(s) unfilled" warning when only passing a single argument into torch.tensor().

@elliotwaite Thanks for noting!

tensor is too special, so I probably need to include the proper signature manually.

I'll update the PR. Will you find more issues when I also update the gist? (That's pretty manual and I'd do it if it helps you give it a spin.)

@t-vi I'm also getting an "Unexpected argument" warning for torch.Tensor.view():

torch.randn(2, 2).view(1, 1, 2, 2)

I also made a script that searched through all the docs and pulled out all the "tensor.*" strings. Then I tested them for autocompletion. Here's the list of the ones where autocompletion isn't working yet. I used the print() function to test and make sure that they are actually accessible when running the script.

https://github.com/elliotwaite/pytorch_typehints/blob/master/no_autocomplete.py
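A related check, sketched below, compares the names a module exposes at runtime with the names declared in a stub. This is a different technique from the docs-scraping script described above, and everything in it is hypothetical: `fake_torch` is a stand-in module built on the fly, and `STUB` is a made-up two-line stub rather than the real `__init__.pyi`.

```python
import ast
import types


def stub_names(stub_source):
    """Collect the top-level names declared in a stub's source text."""
    names = set()
    for node in ast.parse(stub_source).body:
        if isinstance(node, (ast.FunctionDef, ast.ClassDef)):
            names.add(node.name)
        elif isinstance(node, ast.AnnAssign) and isinstance(node.target, ast.Name):
            names.add(node.target.id)
    return names


def missing_from_stub(module, stub_source):
    """Public runtime attributes of the module that the stub does not declare."""
    public = {name for name in dir(module) if not name.startswith("_")}
    return sorted(public - stub_names(stub_source))


# Hypothetical stand-in for an installed package.
fake_torch = types.ModuleType("fake_torch")
fake_torch.zeros = lambda *size: None
fake_torch.as_tensor = lambda data: None

# Made-up stub that forgets to declare as_tensor.
STUB = '''
class Tensor: ...
def zeros(*size: int) -> Tensor: ...
'''

print(missing_from_stub(fake_torch, STUB))  # ['as_tensor']
```

Pointing the same function at a real module and its stub text would produce a list of candidates to report as missing identifiers.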

@elliotwaite Right, there is some varargs thing for tensors missing (I think I'm also failing to act on kwonly annotations in the yaml).

The idea of using doc(string)s for seeing if the annotations are good (or at least have a chance of being liberal enough) is great, I'll be looking to do this as part of the testing.

8845 is confirmed from PyCharm side. But in general it's better to have pyi stubs because they at least contains type information. +1 for them.

The problem exists in general with all of IDEs that use Jedi as backend for Python completion right? And your solution will be general too or specific for PyCharm and VS Code? I use emacs with Jedi as completion backend and I have the same problem. BUT, what is interesting, you can try completion in IPython console and it works! It sees all the functions, how is that possible?

Well, IPython uses dynamic introspection, and that always works (it only works after you have created the objects, so you'll be able to complete mytensor.a but not mytensor.abs().m, which you can with type hints).
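The difference can be sketched in a few lines. The `Tensor` class and the `complete` helper are toy constructs for illustration, and listing attributes with `dir()` is only a rough approximation of what an interactive completer does.

```python
from typing import get_type_hints


class Tensor:
    """Toy stand-in for torch.Tensor."""

    def abs(self) -> "Tensor":
        return Tensor()

    def mean(self) -> float:
        return 0.0


def complete(obj, prefix):
    """Introspection-style completion: list public attributes with a prefix."""
    return sorted(
        name for name in dir(obj)
        if name.startswith(prefix) and not name.startswith("_")
    )


mytensor = Tensor()

# Works on a live object: this is what completing "mytensor.a" relies on.
print(complete(mytensor, "a"))  # ['abs']

# Completing "mytensor.abs().m" without calling abs() needs the declared
# return type, which is exactly the information type hints provide.
return_type = get_type_hints(Tensor.abs)["return"]
print(complete(return_type, "m"))  # ['mean']
```

Without the annotation on `abs`, the second lookup would have nothing to inspect unless the method were actually executed.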

As far as I understand, both VSCode and Jupyter also use Jedi. Stubs (.pyi files) are the "standard" way of adding type information on top of existing modules, but they are not yet supported as widely as one might like (and there are still corner cases, e.g. you cannot express that you can pass an ellipsis ("...") to indexing functions). The right course of action is to hop over to Jedi and add type hint stub support (https://github.com/davidhalter/jedi/issues/839). Your chance to invest your time or money to make your toolchain better!

@t-vi Thanks for keeping track of this issue!

I understand that stubs are quite a good way to resolve this issue.

https://www.python.org/dev/peps/pep-0484/#stub-files

However, docstrings are not generated with stubs. How about adding docstrings?

@t-vi I don't understand what I should do. What do you mean by improving my toolchain? I currently use Jedi, but I don't really understand how to work with those stub files. Is this something I should write, or is it present in modules and I have to update Jedi? Is it like headers in C++?

The PEP documentation and the issue you've linked aren't really helpful for me on how I can start using them.

@kimdwkimdw Where do you put docstrings so everyone will find them? (I don't think you can put them in the PYI(?)). If you have an idea where to put them, I'll be sure to put them there...

@piojanu Jedi seems to have a typeshed branch, but I don't know whether that is about pyi's in general. Probably best to ask the Jedi author how you can help to make pyi support happen.

@t-vi I get it now, thanks.

Originally, in PyTorch 0.3.0 and with my PR (https://github.com/pytorch/pytorch/pull/8845), method signatures and types were inferred from the docstrings.

@t-vi

Docstrings can be added below each method in the PYI.

Most of them are located in _torch_docs.py

Regarding @sproshev 's comment, I think #8845 is a good approach not only for PyCharm but also for other IDEs, because it uses the standard way of importing modules. Therefore, PyCharm can generate its Python stubs automatically.

PyTorch's original approach in 0.3.0, or #8845, works in PyCharm as well as in IPython.

The type information can be included in gen_python_functions.py or elsewhere.

I agree that generating explicit pyi files is one way to resolve this issue, but perhaps not the cleanest way.

The same problem, pycharm is basically useless when using pytorch0.4. Almost everything is underlined either because "cannot find reference" or "is not callable".

@nimcho I also have this issue. Why is this?

I installed torch-nightly on my Mac and PyCharm could not offer any completion for it at all, although it was able to jump to the source when I Command-Clicked. After removing the cache directories in the following folders, the problem was fixed.

- /Users/USERNAME/Library/Caches/PyCharm**/python_stubs

- /Users/USERNAME/Library/Caches/PyCharm**/LocalHistory

- /Users/USERNAME/Library/Caches/PyCharm**/caches

- /Users/USERNAME/Library/Caches/PyCharm**/tmp

- /Users/USERNAME/Library/Caches/PyCharm**/userHistory

I still have this problem with pytorch 1.0.0. How can I solve this problem with solutions above?

This is embarrassing ... almost 8 months :-(

@ebagdasa I’d encourage us to keep this productive and not snarky. Who is the ‘you’ you are referring to? Production software doesn’t always have great editor support, in my experience.

to give context on why this has taken so long to fix:

- none of us core devs use PyCharm, so first it was hard to get visibility into the issue

- once we started looking into it, it was a problem that was cross-project, i.e. it was partly what part of the CPython types PyCharm supported for auto-complete, and partly what PyTorch could do to workaround PyCharm's limitations in this setting.

- this needed a combination of knowing PyCharm's internals and PyTorch's internals. I hope you understand the difficulty of this

- just as a counter-point, Microsoft VSCode + PyTorch works fine for auto-complete, and so does IPython auto-complete work fine. So @ebagdasa I dont think your comment is relevant.

Lastly, @kimdwkimdw and @t-vi have fixes, for example in https://github.com/pytorch/pytorch/pull/12500 by generating explicit type-hints, and once that PR gets in, we should hopefully fix the PyCharm issue.

And as always, this is an open-source project, so if any of you can help with this, please feel free to do so. For example @kimdwkimdw and @t-vi did it in their own free-time, and dont get paid for it, and dont need the hate.

@ebagdasa, you should ask for a refund

all right, that was a comment out of disappointment looking how my code is all highlighted by the editor. Sorry guys, I understand how it works. Keep going! Maybe time to try VSCode.

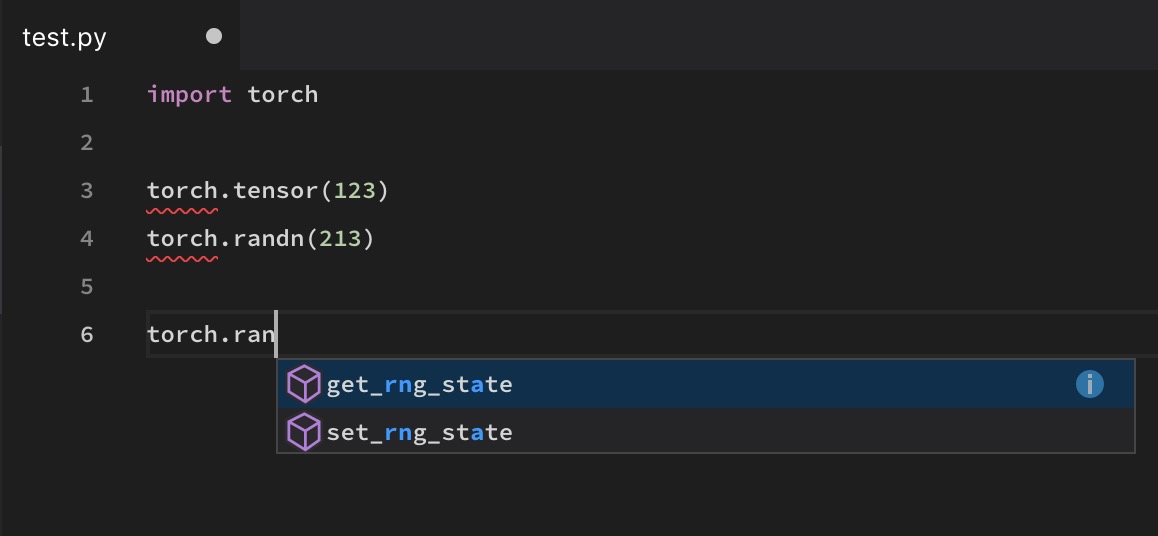

@soumith The autocomplete of VS Code is also broken on some functions such as torch.randn

And as always, this is an open-source project, so if any of you can help with this, please feel free to do so. For example @kimdwkimdw and @t-vi did it in their own free-time, and dont get paid for it, and dont need the hate.

Would be nice of course if they _did_ get paid for it... would it be possible for FAIR to use something like gitcoin to encourage participation by bounties? Of course the people at FAIR already get compensated handsomely for their contributions, and most people here (like me) probably have their hands full with their day jobs...

I've tried VS Code, Atom and PyCharm using either pip or conda, but none of them auto-complete properly on some functions like torch.max(). IPython and Jupyter don't have this problem, but they are not editors.

The same here. I tried PyCharm, VS Code, and Spyder. torch.cat is impossible to get, and the same goes for LongTensor.

Throughout the comments in this issue, this has continually been billed as a problem related to PyCharm, and it's been mentioned that it's a problem with how PyCharm's internals and PyTorch's internals work together. This is a mischaracterization of the problem, and viewing it as such probably won't lead to the correct solution. PyCharm is taking a fairly standard approach to resolving packages, and, as has been mentioned by several others, this problem is not unique to PyCharm. PyCharm just happens to be the most popular editor that runs into this issue with PyTorch. Any editor which does not dynamically resolve the package (for speed purposes) will have this problem. I think the fact that IPython/Jupyter/etc. can resolve it correctly is the exception rather than the rule. I just wanted to emphasize this so that not too much focus is put on "making it work with PyCharm" rather than "making it work in general".

That being said, the stubs being worked on in this PR are a good "standard" solution, and should probably be able to resolve the issue in general. For those of you looking for the quick temporary solution, copy that stub file into your dist-packages/site-packages as explained in the gist. Manually add any other missing parts, and report those missing parts to ensure they get handled.

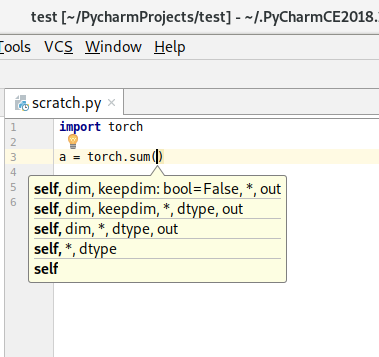

Hi everyone! @t-vi's patch has merged to master, so if you update you should get working autocomplete for torch on master. Additionally, we committed a hand-generated type stub for 1.0.1, so when that release happens, autocomplete will also work for people on that release.

The type stub is probably not good enough for actually typechecking your code with mypy. We're tracking this follow up work in #16574, please pipe up if it affects you there. Also, we only fixed autocomplete on torch; if you are encountering problems with autocomplete on other modules (or you think there are missing identifiers on torch), please let us know with a bug report.

Thanks for your patience!

just fyi, the new v1.0.1 release now has this solved, and it's shipped.

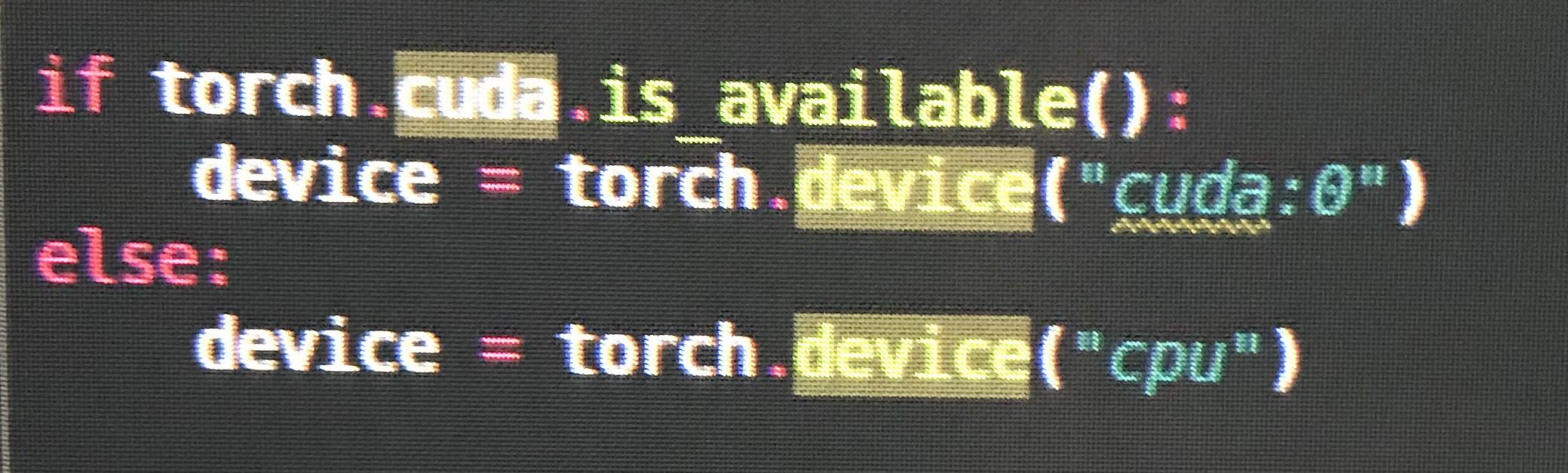

@ezyang torch.cuda is missing in the pyi, for example when using torch.cuda.is_available.

I am getting intellisense for VSCode to partially work on torch 1.0.1, but some functions are still missing, eg, torch.as_tensor

I was also getting errors and no autocomplete for some functions in PyCharm (it was missing when I needed it most), and I don't think this is completely solved yet.

I wrote my first PyTorch program last weekend so not sure if I made some mistake with the setup.

@vpj it was fixed in PyTorch v1.0.1. Anything lower still has the issue. check print(torch.__version__)

Version is 1.0.1post2

Some definitions are missing in the __init__.pyi file.

PyCharm also seems to be causing some problems because it fails to infer return types. For instance, it doesn't seem to infer that the return value of torch.exp is a Tensor (however the return type is inferred with Tensor.exp). Although the return type is defined in the torch.exp method definition.

Yes, I also upgraded to '1.0.1.post2'

and auto-completion does not seem to work.

so, apparently a few namespaces are still missing, though we fixed the larger problem.

I opened a new tracking task for this here: https://github.com/pytorch/pytorch/issues/16996

I'm not quite sure about this one? (Is this fixed?) Console works fine.

(But from torch.utils.data import DataLoader, Dataset works)

@yarcowang We've been steadily adding more and more namespaces. This should work quite well on 1.2.0. If there are still missing things please file bugs.

@ezyang OK. I see. It seems a long-term bug.

I am new to PyTorch. Thanks a lot, it works well for me.

Hello everyone, went through the entire thread. It's quite difficult to find whether the issue is resolved or not. I'm using torch1.4+cpu and torch.tensor still has warning issues. Can anyone help? Thanks

Also still experiencing this issue with torch.Tensor

Thanks for the comments. We've been fixing lots of small type hint bugs over the last few months; if you notice things that are not working on the latest release or master please open bugs for them and we'll look. Thanks!

Broken things that I have noticed in version 1.4.0:

torch.cuda.manual_seed # manual_seed not hinting

torch.cuda.manual_seed_all # manual_seed_all not hinting

torch.utils # cannot find utils

torch.backends # cannot find backends

torch.optim.lr_scheduler._LRScheduler.step() # argument "epoch" unfilled, while it's optional

torch.Tensor(4, 1) # unexpected arguments & argument "requires_grad" unfilled

torch.optim.Adadelta # cannot find Adadelta

torch.nn.TransformerEncoderLayer # cannot find TransformerEncoderLayer

torch.nn.TransformerEncoder # cannot find TransformerEncoder

@tjysdsg Thanks for reporting. Could you open a new issue with those cases for more visibility?

@tjysdsg Thanks for reporting. Could you open a new issue with those cases for more visibility?

@zou3519 Here it is #34699, I'll also update the issue if I find anything new
