Godot: Using the slowest data structure almost every time.

I was reviewing the code of godot, and i`ve found complete disregard for any kind of cpu side performance, down to the core data structures. Its using List everywhere, when a linked list is the slowest data structure you can use on modern PCs. Almost any other data structure would be faster, specially on small sized structures. Its specially egregious on things like the light list or reflection captures in renderables, where you are storing 3 pointers every time, instead of just giving it a stack array of 8 max lights (for example) + an extra for the cases where it would be more than that.

Same thing with RID_Owner being a tree where it could be a hash map, or a slotmap. Also the Octree implementation for culling has the exact same issue.

I want to ask about the design intention behind that total and absolute overuse of linked lists and dreadful performance pointer heavy data structures everywhere in the code. Is that for a specific reason? In most of the cases a "chunked" linked list, where you do a linked list of arrays would automatically bring performance gains on the same code.

The use of these data structures also prevents any kind of "easy" parallelism in a good chunk of the code and completely trashes the cache.

I am working on making a proof of concept implementation of refactoring some of the internals to use better data structures. At this moment i am working on a rewrite of the culling code which bypasses the current octree and just uses a flat array with optional parallelism. Ill use the tps-demo as benchmark and come back with results, which in fact i have benchmarked to go 25 levels deep on that octree...

On another more happy note, i am very impressed with the code style quality, everything is easy to follow and understand, and quite well commented.

All 61 comments

Who needs performance? :trollface:

Curious to see what you measure.

So this can explain why a simple Light2D node in Godot can burn your computer?

@Ranoller I don't think so. The bad lighting performance is most likely related to how Godot performs 2D lighting at the moment: Rendering every sprite n-times (with n being the number of lights that are affecting it).

Edit: see #23593

To clarify, this is about CPU side inefficiencies all over the code. This has nothing to do with godot features or godot GPU rendering itself.

@vblanco20-1 on a bit of a side-tangent, you and I had talked about the nodes being modeled as ECS entities instead. I wonder if the trick is to do a feature branch of godot with a new ent module that would gradually work side-by-side with the tree. like a get_tree() and get_registry(). the ent module would probably miss like 80% of the functionality of the tree/scene, but it could be useful for as a testbed, especially for stuff like composing large static levels with lots of objects (culling, streaming, batch rendering). Reduced functionality and flexibility but greater perfromance.

Before going full ECS (wich i might do) i want to work on some low hanging fruits as a experiment. I might try to go full data-oriented later down the line.

So, first updates:

update_dirty_instances : from 0.2-0.25 miliseconds to 0.1 milisecond

octree_cull (the main view): from 0.35 miliseconds to 0.1 milisecond

The fun part? the replacement for octree cull is not using any acceleration structure, it just iterates over a dumb array with all the AABBs.

The even funnier part? The new culling is 10 lines of code. If i wanted to have it parallel, it would be a single change to the line.

Ill continue with implementing my new culling with the lights, to see how much the speedup accumulates.

we should probably get a google benchmark directory going too on the master branch. That may help with validating so people don't have to argue about it.

There is the godotengine/godot-tests repository, but not many people are using it yet.

New update:

Ive implemented a fairly dumb spatial acceleration structure, with 2 levels. Im just generating it every frame Further improvement could make it better and update it dynamically instead of remaking it.

In the image, the different rows are different scopes, and each of the colored block is a task/chunk of code. In that capture, im doing both the octree cull and my cull side by side to have a direct comparaison

It demonstrates that recreating the entire acceleration structure is faster than a single old octree cull.

Also that further culls tend to be about half to one third of the current octree.

I havent found a single case where my dumb structure is slower than the current octree, other that the cost of the initial creation.

Another bonus is that its very multithread friendly, and can be scaled to whatever core count you want just with a parallel for.

It also checks the memory completely contigously, so it should be possible to make it do SIMD without much hassle.

Another important detail is that its vastly simpler than the current octree, with way less lines of code.

You are causing more hype that the end of game of thrones....

Modified the algos a bit so now they can use C++17 parallel algorythms, wich allows the programmer to just tell it to do a parallel for, or a parallel sort.

Speedup of about x2 in the main frustrum cull, but for the lights is about same speed as before.

My culler is now 10 times faster than godot octree for the main view.

If you want to look at the changes, the main important thing is here:

https://github.com/vblanco20-1/godot/blob/4ab733200faa20e0dadc9306e7cc93230ebc120a/servers/visual/visual_server_scene.cpp#L387

This is the new culling function. The acceleration structure is on the function right above it.

This should compile with VS2015? Console throws a bunch of errors about the files inside entt\

@Ranoller it's c++17 so make sure you're using newer compiler

Uh, I think Godot did not make the move to c++17 yet? At least I recall some discussions on the topic?

Godot is C++ 03. This move will be controversial. I recomend @vblanco20-1 to talk with Juan when he finish hollydays... this optimization will be great and we don't want an instantly "This Will Never Happend TM

@vblanco20-1

octree_cull (the main view): from 0.35 miliseconds to 0.1 milisecond

how many objects?

@nem0

@vblanco20-1

octree_cull (the main view): from 0.35 miliseconds to 0.1 milisecond

how many objects?

Around 2200. The godot octree performs better if the check is very small, because it early outs. The bigger the query, the slower godot octree is compared to my solution. If the godot octree cant early-out 90% of the scene, then its dreadfully slow, because its essentially iterating linked lists for every single object, while my system is structure of arrays where each cache line holds a good amount of AABBs, greatly reducing cache misses.

My system works by having 2 levels of AABBs, its an array of blocks, where every block can hold 128 instances.

The top level is mostly an array of AABBs + an array of Blocks. If the AABB check passes, then i iterate the block. The block is array of structure of AABBs, Mask, and a pointer to the instance. This way everything is faaaaast.

Right now, the generation of the top level data structure is done in a very dumb way. If it was better generated, its performance could be a lot bigger. Im performing some experiments with different block sizes other than 128.

Ive checked different numbers, and 128 per block seems to still be the sweetspot somehow.

By changing the algorythm to separate "big" objects from small objects, ive managed to get another 30% speed upgrade, mostly in the case where you are not looking into the center of the map. It works becouse that way the big objects dont bloat the block AABBs that also include small objects. I believe that 3 sizes would probably work the best.

Block generation is still not optimal at all. The big blocks only cull about 10 to 20% of the instances when looking at the center, and up to 50% when looking outside the map, so its performing a LOT of extra AABB checks than what it should need.

I think an improvement would probably be to reuse the current octree but "flatten" it.

There is now not a single case where my algorithm is equal or worse than the current octree, even without parallel execution.

@vblanco20-1 I assume you are doing your comparison with godot compiled in release mode (which uses -O3) and not the regular editor build in debug (which has no optimizations and is default) right?

Sorry if it's a silly question but I see no mention of that in the thread.

Nice work anyway :)

@Faless Its release_debug, wich does add some optimizations. I havent been able to test full "release" as i cant get it to open the game (game needs to be built too?)

Ive had an idea on how to improve the culling a lot more, removing the need to regenerate every frame, and creating better spatial optimization. Ill see what i can try. In theory such a thing could remove the regenerate, improve the perf of the main cull a bit, and have a specific mode for pointlights which would increase their cull performance by a huge amount, so much that they would have nearly 0 cost, because it will turn into a cheap O(1) to get the "objects in a radius".

Its inspired by how one of my experiments worked, where im doing 400.000 physics objects bouncing from each other.

New culling is implemented now. The block generation is now far smarter, resuling in a faster cull. The special case for lights is not implemented yet.

Ive commited it to my fork

I am curious if you can run that benchmark tool (the one from your screenshots) on a build from the current master branch, to give us a reference point for how much performance your implementation is giving over the current implementation. I'm a dummy, woops.

@LikeLakers2 You can see both the current implementation and his implementation in the screenshot.

He ran this on a master fork

On Wednesday, December 12, 2018, MichiRecRoom notifications@github.com

wrote:

@neikeq https://github.com/neikeq Explain? I thought the screenshots

that have been posted were only of his implementation, given how the posts

with screenshots have been worded thus far.—

You are receiving this because you commented.

Reply to this email directly, view it on GitHub

https://github.com/godotengine/godot/issues/23998#issuecomment-446831151,

or mute the thread

https://github.com/notifications/unsubscribe-auth/ADLZ9pRh9Lksse9KBfWV0z5GmHRXf5P2ks5u4crCgaJpZM4YziJp

.

Are there any news on this topic?

Are there any news on this topic?

I talked with reduz. Is never going to get merged couse it doesnt fit the project. I went to do other experiments instead. Maybe i upgrade the culling later for 4.0

That's a shame, considering it looked really promising. Hopefully these issues will be worked on in time.

Perhaps this should be given the 4.0 milestone? Since Reduz is planning to work on Vulkan for 4.0, and this topic, while about performance, is focused on rendering, or at least OP is.

@vblanco20-1 Did he say how it doesn't fit? I'm assuming because of the move to c++17?

There is an other related issue on the milestone though: #25013

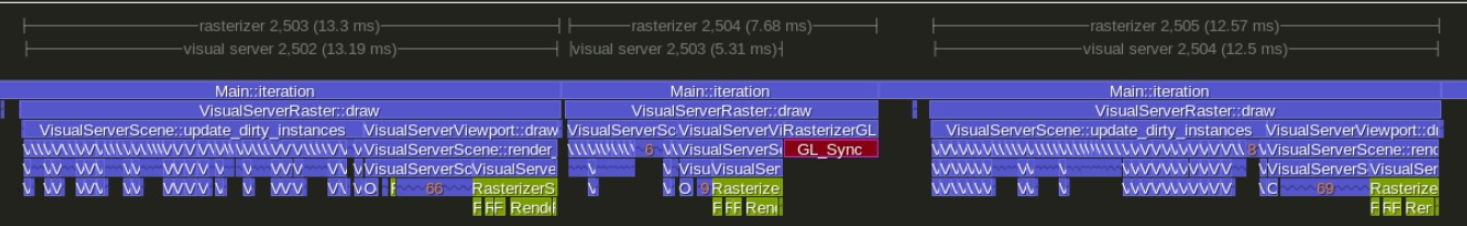

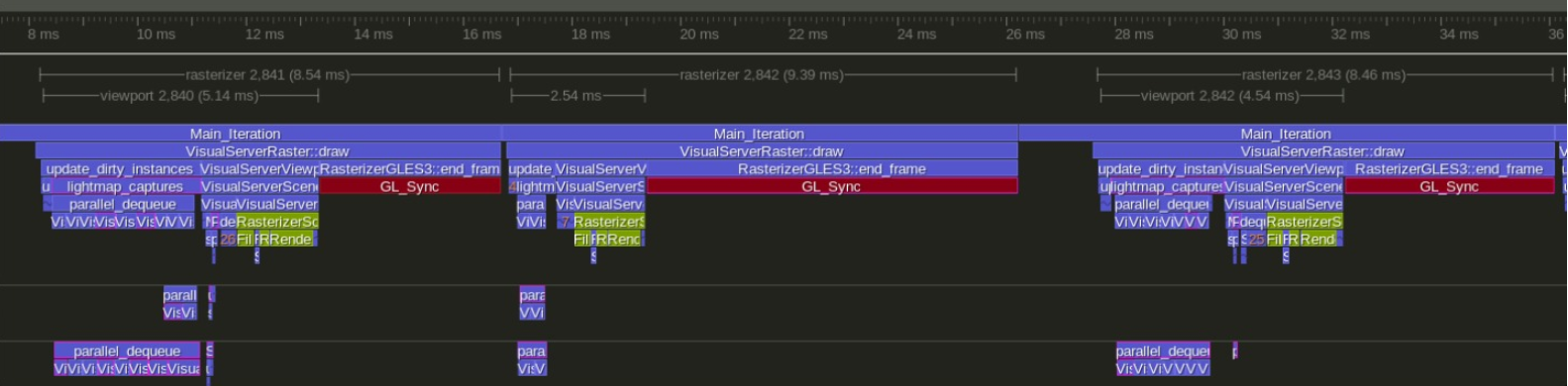

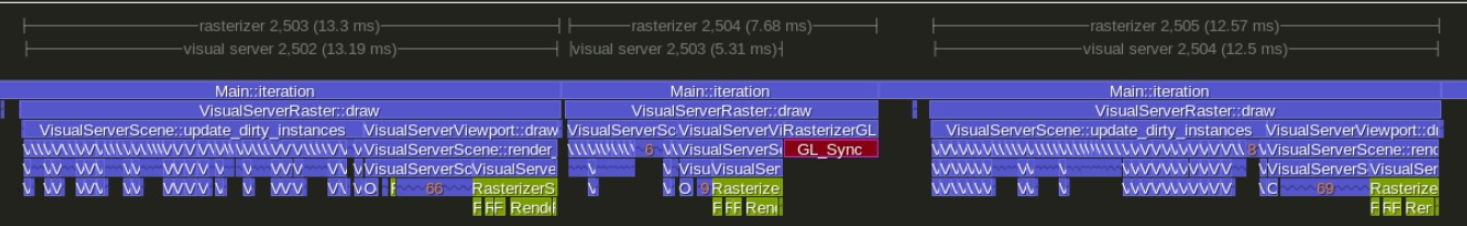

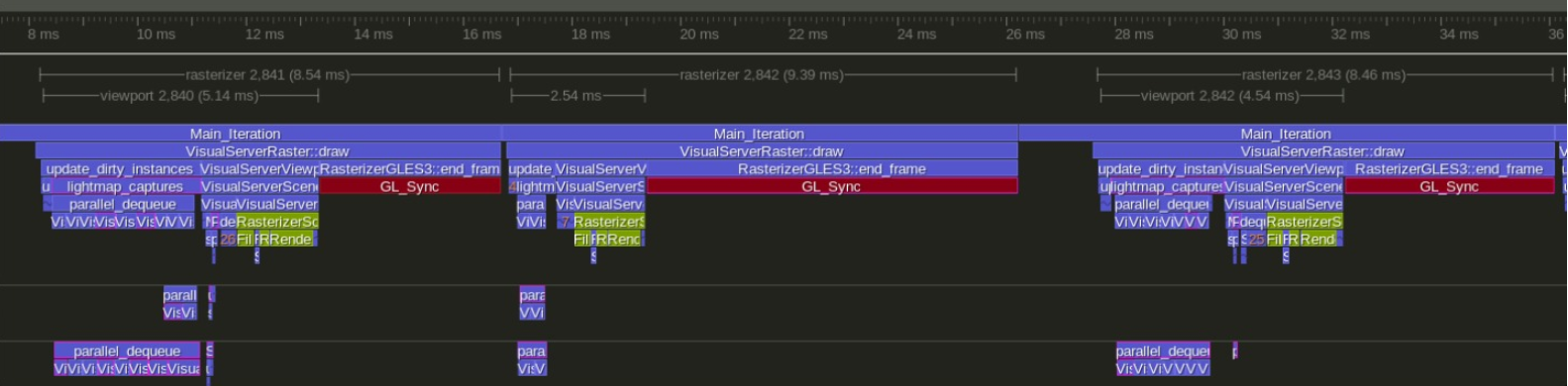

You might be interested. Ive been working on the fancy fork for quite a while. The current results are like this:

Captured on a big gaming laptop with an 4 core intel cpu and GTX 1070

Normal godot

My fork at https://github.com/vblanco20-1/godot/tree/ECS_Refactor

In both profiles, the Red is essentially "wait for GPU". Currently bottlenecked due to too many drawcalls on opengl. Cant really be solved without vulkan or rewriting the entire renderer.

On the CPU side, we go from 13 ms frames to 5 ms frames. "Fast" frames go from 5.5 ms to 2.5ms

On the "total frame" side, we go from 13 ms to 8-9 ms

~75 FPS to ~115 FPS

Less CPU time = more time you can spend on gameplay. The current godot is bottlenecked on the CPU, so more time spent into gameplay will mean lower FPS, while my fork is GPU bound, so you can add a lot of more gameplay logic with the FPS untouched as the CPU is just "free" for quite a while every frame.

A LOT of these improvements are mergeable into godot, IF godot supported modern C++ and had a multithreading system that allow very cheap "small" tasks.

The biggest gain is done by doing multithreaded shadows and multithreaded lightmap read on dynamic objects #25013. Both of those work in the same way. A lot of other parts of the renderer are multithreaded too.

Other gains are a Octree that is from 10 to 20 times faster than godot one, and improvements on some of the flow of rendering, such as recording both depth-prepass and normal-pass render list at once, instead of iterating the cull list 2 times, and a significant change to how light->mesh connecction works (no linked list!)

Im currently looking for more test maps other than the TPS demo so i can have more metrics in other types of maps. Im also going to write a series of articles explaining how all of this works in detail.

@vblanco20-1 this is totally awesome. Thanks for sharing!

I also agree that even though Vulkan is cool, having a decent GLES3 performance would be an absolute win for Godot. Full Vulkan support will likely not land anytime soon while patching GLES3 is something that guys here proved to be fully doable right now.

My huge +1 for bringing more and more attention to this. I'd be the happiest person on Earth if Reduz agreed to allow community to improve GLES3. We're doing a very serious 3D project in Godot for 1.5 years now, and we are very supportive towards Godot (including donations), but the lack of solid 60 FPS really demotivates us and puts all our efforts under a huge risk.

It's really a shame this is not getting enough credits. If we won't have 60 FPS within the coming months with our current (pretty simple in terms of 3D) project, we will fail to deliver a playable game and thus all the effort will be wasted. :/

@vblanco20-1 honestly, I'm even considering using your fork as a base of our game in production.

P. S. Some thoughts: as a last resort, I think it would totally be possible to even have, say, 2 GLES3 rasterizers - GLES3 & GLES3-community.

P. S. Some thoughts: as a last resort, I think it would totally be possible to even have, say, 2 GLES3 rasterizers - GLES3 & GLES3-community.

That doesn't make sense to me; it's probably a better idea to get fixes and performance improvements directly into the GLES3 and GLES2 renderers (as long as they don't break rendering in major ways in existing projects).

Other gains are a Octree that is from 10 to 20 times faster than godot one, and improvements on some of the flow of rendering, such as recording both depth-prepass and normal-pass render list at once, instead of iterating the cull list 2 times, and a significant change to how light->mesh connecction works (no linked list!)

@vblanco20-1 Would it be possible to optimize the depth prepass while staying on C++03?

@Calinou the depth prepass stuff is one of the things that do not need anything and is very easily mergeable. Its main downside is that it will require the renderer to use extra memory. As it will now need 2 RenderList instead of only one. Other than the extra memory, there is pretty much no downside. The cost of building the renderlist for 1 pass or 2 is almost the same thanks to cpu caching, so it pretty much completely removes the cost of prepass fill_list()

@vblanco20-1 honestly, I'm even considering using your fork as a base of our game in production.

@and3rson please dont. This fork is purely a research project, and its not even fully stable. In fact it wont even compile outside of some very specific compilers due to the usage of parallel STL. The fork is mostly a testbed of random optimization ideas and practises.

What tools you using to profile Godot? Did you need to add Profiler.Begin and Profiler.End flags to the godot source to generate these samples?

What tools you using to profile Godot? Did you need to add Profiler.Begin and Profiler.End flags to the godot source to generate these samples?

Its using Tracy profiler, it has different types of scope profile mark, which i use here. For example ZoneScopedNC("Fill List", 0x123abc) adds a profile mark with that name and the hex color you want.

@vblanco20-1 - Will we be seeing some of these performance improvements brought over to the godot main branch?

@vblanco20-1 - Will we be seeing some of these performance improvements brought over to the godot main branch?

Maybe when C++11 is supported. But reduz doesnt really want more work on the renderer before vulkan happens, so might be never. Some of the findings from the optimziation work could be useful for the 4.0 renderer.

@vblanco20-1 mind sharing the compiler and environment details for the branch, I think I can figure most of it out but just don't to waste time figuring out issues if I don't have to.

Also can't we add the AABB change to Godot main without much cost?

@vblanco20-1 mind sharing the compiler and environment details for the branch, I think I can figure most of it out but just don't to waste time figuring out issues if I don't have to.

Also can't we add the AABB change to Godot main without much cost?

@swarnimarun Visual Studio 17 or 19, one of the later updates, works fine. (windows)

The scons script got modified to add the cpp17 flag. Also, launching editor is broken right now. Im working on see if i can restore it.

Sadly the AABB change is one of the biggest things. Godot octree is intertwined with a LOT of things in the visual server. It not only keeps track of objects for culling, but it also connects objects to lights/reflection probes, with its own system for pairing them. Those pairs also depend on the linked list structure of the octree, so its almost impossible to adapt the current octree to be faster without changing quite a lot of the visual server. In fact i still havent been able to get rid of the normal godot octree in my fork for a couple objects types.

@vblanco20-1 Do you have a Patreon? I would put down some money towards a fork focused on performance and that doesn't restrict itself to ancient C++ and I'm probably not the only one. I would like to toy around with Godot for 3D projects but I'm losing hope it will become viable without a huge proof a concept that proves your approach to performance and usability to the core team.

@mixedCase I would be extremely hesitant to support any kind of fork of Godot. Forking and fragmentation is often the death of open source projects.

The better scenario, and more likely now that it has been demonstrated that newer C++ allows for significant optimizations, is for Godot to officially upgrade to a newer version of C++. I would expect this to happen in Godot 4.0, to minimize the risk of breakages in 3.2, and in time for the new C++ features to be utilized with Vulkan being added to Godot 4.0. I also don't expect any significant changes to be made to the GLES 3 renderer, as reduz wants to delete it.

(But I don't speak for the Godot devs, this is just my speculation)

@aaronfranke I agree, I think that a permanent fork would not be ideal. But from what I've gathered from what he's said (please correct me if I'm wrong) is that @reduz believes these newer features should not be used because they do not bring anything significant enough to be worth learning the concepts and because he perceives some of them as making the code "unreadable" (at least for developers used to the "augmented C" that C++ was almost 10 years ago).

I believe Juan to be a very pragmatic person, so it would probably take a big proof of concept that demonstrates the benefits of using modern abstractions and more efficient data structures to convince him that the engineering trade offs are probably worth it. @vblanco20-1 has done amazing work so far so I personally would not hesitate to put down some money every month towards him if he would like to go for something like that.

I am perpetually amazed how Godot devs act like they are allergic to good ideas, performance, and progress. It's a shame this was not pulled in.

This is a piece from a series of articles im working on about the optimizations, precisely about the data structure usage.

Godot bans the STL containers, and its C++03. This means it does not have move operators in its containers, and its containers tend to be worse than the STL containers themselves. Here i have an overview of the godot data structures, and the problems with them.

Preallocated C++ array. Very common around the entire engine. Its sizes tend to be set by some configuration option. This is very common in the renderer, and a proper dynamic array class would work great here.

Example of misuse: https://github.com/godotengine/godot/blob/master/servers/visual/visual_server_scene.h#L442

This arrays are wasting memory for no good reason, using a dynamic array would be a significantly better option here as it would make them only the size they need to be, instead of the maximum size by a compile constant. Each of the Instance* arrays using MAX_INSTANCE_CULL are using half a megabyte of memory

Vector ( vector.h) a std::vector dynamic array equivalent, but with a deep flaw.

The vector is atomically refcounted with a copy-on-write implementation. When you do

Vector a = build_vector();

Vector b = a;

the refcount will go to 2. B and A now point to the same location in memory, and will copy the array once an edit is made to it. If you now modify vector A or B, it will trigger a vector copy.

Every time you write into a vector it will need to check the atomic refcount, slowing down every single write operation in the vector. It is estimated godot Vector is 5 times slower than std::vector, at minimum. Another issue is that vector will relocate automatically when you remove items from it, causing terrible uncontrollable performance issues.

PoolVector (pool_vector.h). More or less the same as a vector, but as a pool instead. It has the same pitfalls as Vector, with copy on write for no reason and automatic downsizing.

List ( list.h) a std::list equivalent. The list has 2 pointers (first and last) + a element count variable. Every node in the list is double linked, plus an extra pointer to the list container itself. The memory overhead for every node of the list is 24 bytes. It is horribly overused in godot due to Vector being not very good in general. Horribly misused over the entire engine.

Examples of big misuse:

https://github.com/godotengine/godot/blob/master/servers/visual/visual_server_scene.h#L129

No reason whatsoever for this to be a list, should be a vector

https://github.com/godotengine/godot/blob/master/servers/visual/visual_server_scene.h#L242

Every single of this cases is wrong. again, it should be vector or similar. This one can actually cause performance issues.

The biggest mistake is using List in the octree used for culling. Ive already gone into detail about the godot octree in this thread.

SelfList(self_list.h) a intrusive list, similar to boost::intrusive_list. This one allways stores 32 bytes of overhead per node as it also points to self. Horribly misused over the entire engine.

Examples:

https://github.com/godotengine/godot/blob/master/servers/visual/visual_server_scene.h#L159

This one is specially bad. One of the worst in the entire engine. This is adding 8 pointers of fatness to every single renderable object in the engine for no reason whatsoever. There is no need for this to be a list. It, again, can be a slotmap or a vector, and that's what i’ve replaced it with.

Map(map.h) a red-black tree. Misused as a hashmap in pretty much all of its uses. Should be deleted from the engine in favor of OAHashmap or Hashmap, depending on usage.

Every use of Map i saw was wrong. I cant find an example of a Map being used where it makes sense.

Hashmap ( hash_map.h) roughly equivalent to std::unordered_map, a closed adressing hashmap based on linked list buckets. It can rehash when removing elements.

OAHashMap( oa_hash_map.h) newly written fast open-adressing hashmap. uses robinhood hashing. Unlike hashmap, it will not resize when removing elements. Really well implemented structure, better than std::unordered_map.

CommandQueueMT(command_queue_mt.h) the command queue used for to communicate to the different Servers, such as the visual server. It works by having a hardcoded 250 kb array to act as allocator pool, and allocates every command as an object with a virtual call() and post() function. It uses mutexes to protect the push/pop operations. Locking mutexes is very expensive, i recommend using the moodycamel queue instead, which should be an order of magnitude faster. This is likely to become a bottleneck for games that perform a lot of operations with the visual server, like lots of moving objects.

These are pretty much the core set of data structures in godot. There is no proper std::vector equivalent. If you want the “dynamic array” data structure, you are stuck with Vector, and its drawbacks with the copy on write and downsizing. I think a DynamicArray data structure is the thing godot needs the most right now.

For my fork i use STL and other containers from external libraries. I avoid godot containers as they are worse than the STL for performance, with the exception of the 2 hashmaps.

Problems ive found on the vulkan implementation in 4.0. I know its work in progress, so there is still time to fix it.

Usage of Map in the render API. https://github.com/godotengine/godot/blob/vulkan/drivers/vulkan/rendering_device_vulkan.h#L350

As i commented, there is no good use of Map and no reason for it to exist. Should just be hashmap in those cases.

Linked list overuse instead of just arrays or similar.

https://github.com/godotengine/godot/blob/vulkan/drivers/vulkan/rendering_device_vulkan.h#L680

Luckily this is likely not on the fast loops, but its still an example of using List where it shouldnt be used

Using PoolVector and Vector in the render API. https://github.com/godotengine/godot/blob/vulkan/drivers/vulkan/rendering_device_vulkan.h#L747

There is no real good reason to use those 2 flawed structures as part of the render API abstraction. By using them, users are forced to use those 2, with their drawbacks, instead of being able to use any other data structure. A recommendation is to use pointer + size on those cases, and still have a version of the function that takes a Vector if neccesary.

An actual example where this API will harm an user is on the vertex buffer create functions. In the GLTF format, the vertex buffers will come packed in a binary file. With this API, the user will have to load the GLTF binary buffer into memory, create this structures copying the data for each buffer, and then use the API.

If the API took pointer + size, or a Span<> struct, the user would be able to directly load the vertex data from the loaded binary buffer into the API, without having to perform a conversion.

This is specially important for people who deal with procedural vertex data, like a voxel engine. With this API, the developer is forced to use Vector for his vertex data, incurring a significant performance cost, or have to copy the data into a Vector, instead of just loading the vertex data directly from the internal structures that the developer uses.

If you are interested on knowing more about this topic, the best talk i know is this one from CppCon. https://www.youtube.com/watch?v=fHNmRkzxHWs

Other great talks is this one: https://www.youtube.com/watch?v=-8UZhDjgeZU , where it explains a few data structures such as the slotmap that would be super useful for godot needs.

Godot have a great problem of performance... you would be a good adition to godot team, please considerate that!

I'm closing this thread, because I don't think it's the right way to contribute or help.

@vblanco20-1 Again, I really appreciate that you have good intentions, but you don't understand any of the inner workings of the engine, or the philosophy behind it. Most of your comments are towards things you don't really understand how they are used, how critical they are to performance, or what their priorities are in general.

Your tone is also unnecessarily aggressive, too. Instead of asking what you don't understand, or why something is done in a certain way, you just go all out arrogant. This is not the right attitude for this community.

Most of the things you are mentioning regarding optimization are only a very small surface of the amount of items that I planned for optimization in Godot 4.0 (there are plenty more things in my list). I already told you this would get rewritten several times since several months ago. If you don't believe me, it's fine, but I feel you are wasting your own time with this and confusing a lot of people for no obvious reason.

I obviously welcome very much your feedback once I'm done with my work, but all you are doing now is beating a dead horse, so just chill for a while.

Again, when an alpha of Godot 4.0 is rolled out (hopefully before the end of this year), you are welcome to profile all the new code and give feedback on how further optimize it. I'm sure that we will all benefit. For now, there is not much of a point in discussing anything else here since existing code will go away in 4.0, and nothing will get merged in the 3.x branch, where at this point stability is more important than optimizations.

Since many may be curious about the technical details:

- All the spatial indexing code (what vblanco rewrote) will be replaced by a linear algorithm for culling with multiple threads, combined with an octree for hierarchial occlusion culling and a SAP for overlapping checks, which are most likely the best all rounder algorithms that warrant good performance on any kind of game. Allocation for these structures will be in the same vein as the new RID_Allocator, which is O(1) ). I discussed this with @vblanco20-1 before and explained that his approach does not scale well for all types of games, as it requires user to have a certain degree of expertise to tweak which commonly the typical Godot user is not expected to have. It was also not a good approach for adding occlusion culling.

- I won't use arrays when lists can be used because lists do small temporal allocations with zero risk of fragentation. In some cases, I prefer to allocate a sectioned array (aligned to pages, so they cause 0 fragmentation) that always grow and never shrink (like in RID_Allocator or the new CanvasItem in the 2D engine Vulkan branch, which now allows you to redraw items with a lot of commands very efficiently), but there has to be a performance reason for this. When lists are used in Godot it's because small allocations are preferred over performance (and actually they make the code intent more clear for others to read).

- PoolVector is intended for very large allocations with consecutive memory pages. Until Godot 2.1 it used a preallocated memory pool, but this was removed in 3.x and right now the current behavior is wrong. For 4.0 it will be replaced with virtual memory, it's on the list of things to do.

- Comparing Godot's Vector<> to std::vector is pointless because they have different use cases. We use it mostly to pass data around and we lock it for fast access (via ptr() or ptrw() methods). If we used std::vector, Godot would be much slower due to unnecessary copying. We also take a lot of advantage of the copy on write mechanic for many different uses.

- Map<> is just simpler and friendlier for large amount of elements and no need to worry about hastable growing/shinking creating fragmentation. When performance is required, HashMap is used instead (though it's true, should probably use OAHashMap more, but it's too new and never had time to do that). As a general philosophy, when performance is not priority, small allocations are always preferred over large ones because it's easier for the memory allocator from your operating system to find tiny holes to put them in (which is pretty much what it is designed to do), effectively consuming less heap.

Again, you can ask about plans and design anytime you want instead of going rogue and complain, this is not the best way to help the project.

I'm also sure that many reading this thread are wondering why the spatial indexer was slow to begin with. The reason is that, maybe you are new to Godot, but until very recently the 3D engine was more than 10 years old and extremely outdated. Work was done to modernize it in OpenGL ES 3.0, but we had to desist due to problems we found in OpenGL and the fact it was deprecated for Vulkan (and Apple abandoned it).

Added to this, Godot used to run on devices like the PSP not too long ago (which had only 24mb of memory available for both engine and game, so a lot of the core code is very conservative regarding memory allocation). As hardware is very different now, this is being changed for code that is more optimal and uses more memory, but when it's not needed doing this work is pointless and it's fine that you'll see lists used in a lot of places where performance does not matter.

Also, many optimizations we wanted to do (moving many of the mutex code to atomics for better performance) had to be put on hold until we could move Godot to C++11 (which has much better support for inlined atomics and it does not requiere you to include windows headers, which pollute the whole namespace), which was not something we could do in a stable branch. The move to C++11 will take place after Godot 3.2 is branched and feature freezed, otherwise keeping Vulkan an Master branches in sync would be a huge pain. There is not much of a hurry, as currently focus is now on Vulkan itself.

Sorry, things take time, but I prefer they are done properly rather than rushing them. It pays off better in the long term. Right now all the performance optimizations are being worked on and should be ready soon (if you tested the Vulkan branch, the 2D engine is way faster than it used to be already).

Hi reduz,

while I mostly see that your points are valid, I'd like to comment on two on which I disagree:

- I won't use arrays when lists can be used because lists do small temporal allocations with zero risk of fragmentation. In some cases, I prefer to allocate a sectioned array (aligned to pages, so they cause 0 fragmentation) that always grow and never shrink (like in RID_Allocator or the new CanvasItem in the 2D engine Vulkan branch, which now allows you to redraw items with a lot of commands very efficiently), but there has to be a performance reason for this. When lists are used in Godot it's because small allocations are preferred over performance (and actually they make the code intent more clear for others to read).

I highly doubt that a linked list will have better overall performance, let it be speed or memory efficiency, than a dynamic array with exponential growth. The latter is guaranteed to occupy at most twice as much space as it actually uses, while a List<some pointer> uses exactly three times as much storage (the actual content, the next and the prev pointer). For a sectioned array, things look even better.

When properly wrapped (and as much I can tell from what I've seen already of the godot code, they are), they look pretty much the same to the programmer, so I don't understand what you mean with "they [Lists] make the code intent more clear".

IMHO, Lists are valid exactly in two conditions:

- You need to frequently erase/insert elements at the middle of the container

- or you need constant-time (and not just amortized constant-time) insertion/deletion of elements. I.e., in real-time contexts where a time-consuming allocation of memory isn't possible, they're fine.

- Map<> is just simpler and friendlier for large amount of elements and no need to worry about hastable growing/shinking creating fragmentation. When performance is required, HashMap is used instead (though it's true, should probably use OAHashMap more, but it's too new and never had time to do that). As a general philosophy, when performance is not priority, small allocations are always preferred over large ones because it's easier for the memory allocator from your operating system to find tiny holes to put them in (which is pretty much what it is designed to do), effectively consuming less heap.

libc-allocators are usually quite smart, and since an OAHashMap (or a std::unordered_map) reallocate their storages from time to time (amortized constant-time), the allocator usually manages to keep its memory blocks compact. I firmly believe that an OAHashMap does not effectively consume more heap than a plain binary tree map like Map. Instead, I'm quite sure that the huge pointer overhead in each element of Map actually consumes more memory than any heap fragmentation of OAHashmap (or std::unordered_map).

After all, I think the best way to sort out these kinds of arguments is to benchmark it. Surely, this is of much greater use for Godot 4.0, since -- as you said -- lots of performance optimizations will happen there and there is not much use of improving code paths that may well be completely rewritten in 4.0 anyway.

But @reduz, what do you think of benchmarking all these changes that @vblanco20-1 suggested (maybe even now, in 3.1). If @vblanco20-1 (or anyone else) is willing to invest the time of writing such a benchmarking suite and to evaluate Godot3.1's performance (both in terms of speed and "heap consumption considering fragmentation") against vblanco's changes? It might yield valuable hints for the actual 4.0 changes.

I think that such a methodology fits your "[things] are done properly rather than rushing them" well.

@vblanco20-1: In fact, I appreciate your work. Would you be motivated to create such benchmarks, so we can actually measure whether your changes are actual performance improvements? I would be very interested.

@Windfisch I suggest you re-read my post above, as you have misread or misunderstood a few things. I'll clarify them for you.

- Lists are used exactly for the use case you describe, and I never claimed that they have better performance. They are more efficient than arrays for reusing heap simply because they are made-up of small allocations. On a scale (when you use them a lot for their intended use case), this really makes a difference. When performance is required, other containers which are faster, more packed or have better cache coherency are used already. For good or bad, Victor mostly focused in one of the older engine areas (if not the oldest actually) which was never optimized since the times of it being an in-house engine used to publish games for PSP. This had a rewrite pending since a long time, but there were other priorities. His main optimization was the CPU based voxel cone tracing I added recently, which to be honest, I did a bad job with that because it was too rushed near the 3.0 release, but the proper fix for this is a different algorithm entirely, and not adding parallel processing like he did.

- I never argued about the performance of @vblanco20-1's work and I frankly don't care about it (so you don't need to make him waste time doing benchmarks). The reasons for not merging his work is because 1) The algorithms he uses needs manual tweaking depending on average size of objects in the game, something most Godot users will need to do. I tend to favor algorithms that may be a bit slower but scale better, without the need for tweaks. 2) The algorithm he uses is not good for occlusion culling (simple octree is better due to the hierarchical nature). 3) The algorithm he uses is not good for pairing (SAP is often better). 4) He uses C++17 and libraries I'm not interested in supporting, or lambdas which I believe are unnecessary 5) I'm already working on optimizing this for 4.0, and the 3.x branch has stability as priority and we intend to release 3.2 as soon as possible, so this won't be modified or worked on there. Existing code may be slower, but it's very stable and tested. If this gets merged ant there are bug reports, regressions, etc. No one will have time to work on it or help Victor because we are already mostly busy with 4.0 branch. This is all explained above, so I suggest you re-read the post.

In any case, I promised Victor that indexing code can be pluggable, so eventually different algorithms for different types of games can be implemented, too.

Godot is open source and so is his fork. We are all open and sharing here, nothing should stop you from using his work if you need it.

Since that optimizations seems not to affect gdscript, render or the "stuttering" problems, and there are the things that people complains (me includes), maybe with acomplish that optimizations people will be happy (me includes)... doesn´t need lua jit velocity...

Works in "copy on write" was a very big performance optimization in a plugin of mine (from 25 seconds in a script parse to only 1 second in a script of 7000 lines)... i feel that this kind of optimizations are that we need, in gdscript, in render and accomplish the stuttering problem... that´s all.

Thanks @reduz for your clarification. It indeed made your points more clear than the previous postings.

It's good that the spatial indexing code will be pluggable, because indeed that one fell on my feet before when handling lots of objects at vastly different scales. Looking forward to 4.0.

I was also thinking about it and I think it may be a good idea to put some shared document with ideas on optimizing spatial indexing, so more contributors can learn about this and also throw ideas or do implementations. I have very good idea about what needs to be done, but I'm sure there is a lot of room here for doing further optimizations and coming up with interesting algorithms.

We can put very clear requirements that we know algorithms have to meet (as in, not requiring user tweaking if possible for average elements in the world size, not brute-forcing stuff with threads if possible -they are not free, other parts of the engine may need them too, like physics or animation and they consume more battery on mobile-, compatibility with re-projection based occlusion culling -so, some form of hierarchy may be desired, but should be tested against brute force too-, be smart about shadow buffer updates -don't update if nothing changed-, explore optimizations such as re-projection based occlusion culling for directional shadows, etc). We can also discuss creation of some benchmark tests (I don't think the TPS demo is a good benchmark because it doesn't have as many objects or occlusion). @vblanco20-1 if you are willing to follow our coding/language style and philosophy you are of course more than welcome to lend a hand.

The rest of the rendering code (actual rendering using RenderingDevice) is more or less straightforward and there's not many ways to do it, but indexing seems like a more interesting problem to solve for optimization.

@reduz for reference on the spatial indexing. The original tile based culling was removed and replaced by an Octree about halfway through this thread. The octree i got there is WIP (missing some re-fit functionality), but the results are quite good for the prototype. Its code is not that good from its prototype nature, so its only useful to check how this kind of octree would perform in a complex scene such as tps-demo.

It is inspired by how the unreal engine octree works, but with some modifications like the possibility of a flat iterate.

The main idea is that only the leafs on the octree hold objects, and those objects are held on an array of size 64 (compile time size, can be different). An octree leaf will only split once it "overflows" into 65 elements. When you remove objects, if each of the leafs of the parent node fit into the 64 size array, then we merge the leafs back into their parent node, which becomes a leaf.

By doing that, we can minimize the time testing on the nodes, as the octree wont end up being too deep.

Another good thing i was doing is that the leaf nodes are also stored in a flat array, which allows a parallel-for in the culling. This way the hierarchical cull can be used when doing cull for point shadows, or other "small" operations, and the flat parallel-for culling can be used for the main view. Of course could just use hierarchical for everything, but could be slower and cant be parallelized.

The block structure will waste a bit of memory, but even in worst case scenarios i dont think it will waste a lot of memory as the nodes will merge if they drop below an amount. It also allows using a pool allocator given that both the nodes and the leafs will have a constant size.

I also have multiple octrees in my fork, which is used to optimize some things. For example i have an octree only for shadowcaster objects, which allows skipping all the logic related to "can cast shadows" when culling for shadowmaps.

On my concerns for Vector and others on the render API, this issue explains what i was worrying about. https://github.com/godotengine/godot/issues/24731

On the library and C++17 stuff of the fork.. thats unnecesary. The fork overuses a lot of libraries because i needed some parts of them. The only thing thats really needed, and that i think godot needs, is a industry-strenght parallel queue, i used moodycamel queue for it.

On the lambdas, their usage is mainly for culling, and its used to save a significant amount of memory, as you only need to save the objects that pass your checks into the output array. The alternative is to do iterator objects (thats what unreal engine does with their octree), but it ends up being worse code and a lot harder to write.

My intention was not to "go rogue", but to answer frequent comments on "you are free to make a fork to demonstrate", which is exactly what ive done. The first message on the thread is a bit alarmist and not very correct. Sorry if i appeared rude to you, as my only goal is to help the most popular open source game engine.

@vblanco20-1 That sounds great, and the way you mention the octree works makes a lot of sense. I honestly don't mind implementing a parallel queue (seems simple enough for not needing an external dependency, and need C++11 to do it properly, so guess that will happen only on the Vulkan branch). If you have this commited, I will definitely want to take a look at it, so I can use it as a basis for the indexer rewrite in the Vulkan branch (I'm just rewriting most stuff there, so it needs to be rewritten anyway). Of course if you want to aid with the implementation (probably when there are more things in place with the new API), it's super welcome.

The use of a parallel version with flattened arrays is interesting, but my point of view on it is that it won't be that useful for occlusion culling, and it would be interesting to measure how much of an improvement over regular octree culling is, considering the extra amount of cores used. Keep in mind there are many other areas that may be using multiple threads that may make more efficient (less brute force) use of it (like physics), so if even if with this approach we get a relatively marginal improvement, it is not always in the best of interests, as it may starve other areas from using CPU. Maybe it could be optional.

I also find Bullet 3's implementation of dbvt pretty interesting, which does incremental self balancing and linear allocation, it's one of the things I wanted to research more (I've seen this approach implemented in proprietary engines I can't mention :P ), as algorithm wise, a balanced binary tree is by design just a lot less redundant than an octree, and may work better in both pairing and occlusion tests, so a pluggable broadphase/culling aproach may be actually a good idea, given we make proper useful benchmark scenes.

In any case, you are exceptionally smart and it would be great if we can work together on this, but I will just ask you to please understand and respect many of the existing design philosophies we have, even if they may not always suit your taste. The project is huge, with a lot of inertial force, and changing things for the sake of tastes is just not a good idea. User friendliness and "just works" is always first on the list regarding design, and simplicity and readability of code usually takes priority over efficiency (that is, when efficiency is not a goal because an area is not critical). Last time we discussed, you wanted to change pretty much the whole thing and did not want to listen to any reasoning or focus on actual problems, and that's not how we usually work with the community and contributors, which is why my advice to chill was well intended.

Who needs performance? :trollface:

Performance and source model is what keeps me with Godot.

Edit: Sorry, maybe I am some offtopic but I wanted to clarify the benefits of open source.

@mixedCase I would be extremely hesitant to support any kind of fork of Godot. Forking and fragmentation is often the death of open source projects.

I don't think so. The nature of open source is just that you can use and reuse the code freely as you want. So that will not be an end of some project but more options for the users.

What you say is a monopoly, and that is not what defend the open source. Don't get cheated by companies that pretend to show you that there is better to have full control of something. That is a lie, at least in the open source world, because if someone other have the code you too have the code. What they need to do is to take care of how to handle a community or how to be OK with that.

Anyway the original developers can just merge improvements from the fork. They are free to do it always. That is another nature of the open source world.

And in the worst of the cases the fork will be better than the original and a lot of contributer will go there. Nobody lost, all win. Oh, sorry, if a company is behind the original maybe they lost (or they can merge the improvements from the fork too).

Most helpful comment

You might be interested. Ive been working on the fancy fork for quite a while. The current results are like this:

Captured on a big gaming laptop with an 4 core intel cpu and GTX 1070

Normal godot

My fork at https://github.com/vblanco20-1/godot/tree/ECS_Refactor

In both profiles, the Red is essentially "wait for GPU". Currently bottlenecked due to too many drawcalls on opengl. Cant really be solved without vulkan or rewriting the entire renderer.

On the CPU side, we go from 13 ms frames to 5 ms frames. "Fast" frames go from 5.5 ms to 2.5ms

On the "total frame" side, we go from 13 ms to 8-9 ms

~75 FPS to ~115 FPS

Less CPU time = more time you can spend on gameplay. The current godot is bottlenecked on the CPU, so more time spent into gameplay will mean lower FPS, while my fork is GPU bound, so you can add a lot of more gameplay logic with the FPS untouched as the CPU is just "free" for quite a while every frame.

A LOT of these improvements are mergeable into godot, IF godot supported modern C++ and had a multithreading system that allow very cheap "small" tasks.

The biggest gain is done by doing multithreaded shadows and multithreaded lightmap read on dynamic objects #25013. Both of those work in the same way. A lot of other parts of the renderer are multithreaded too.

Other gains are a Octree that is from 10 to 20 times faster than godot one, and improvements on some of the flow of rendering, such as recording both depth-prepass and normal-pass render list at once, instead of iterating the cull list 2 times, and a significant change to how light->mesh connecction works (no linked list!)

Im currently looking for more test maps other than the TPS demo so i can have more metrics in other types of maps. Im also going to write a series of articles explaining how all of this works in detail.