TensorFlow: Can I export a GPU-trained model to a CPU-only machine for serving/evaluation?

I trained a model on a GPU machine.

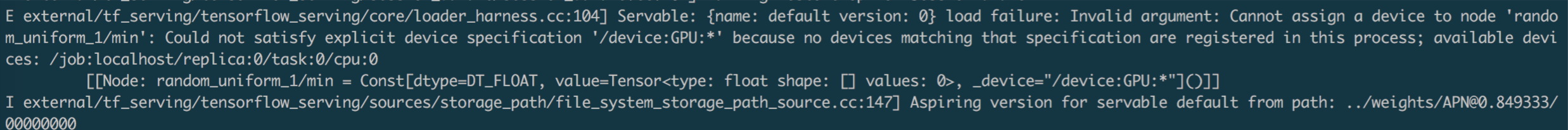

When I load such a model on a CPU-only machine, I get the error below.

Thank you for any suggestions!

@vrv Could you take a look at this please? Do we have such support now? Thanks.

Yup, you can pass `config=tf.ConfigProto(allow_soft_placement=True)` to `tf.Session` if you really want to ignore the device placement directives in the graph. I'm not a huge fan of this option (I'd rather the graph be rewritten to strip out the device fields explicitly), but it should work.
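
Below is a minimal sketch of both options mentioned above: soft placement, and explicitly stripping the device fields. It assumes a TensorFlow release that ships the 1.x-style `tf.compat.v1` API. The toy graph and the helper names (`build_gpu_pinned_graph`, `run_with_soft_placement`, `strip_devices`, `run_stripped`) are hypothetical stand-ins for your real trained model, not part of TensorFlow.

```python
# Minimal sketch, assuming the 1.x-style graph/session API is available
# through tensorflow.compat.v1. The graph below is a hypothetical stand-in
# for a model that was built on a GPU machine.
import tensorflow.compat.v1 as tf

tf.disable_eager_execution()


def build_gpu_pinned_graph():
    """Build a tiny graph whose ops explicitly request GPU:0."""
    graph = tf.Graph()
    with graph.as_default():
        with tf.device("/device:GPU:0"):
            a = tf.constant([1.0, 2.0], name="a")
            b = tf.constant([3.0, 4.0], name="b")
            tf.add(a, b, name="sum")
    return graph


def run_with_soft_placement(graph):
    """Option 1: keep the device fields, but let TensorFlow fall back to an
    available device (e.g. the CPU) when the requested one does not exist."""
    config = tf.ConfigProto(allow_soft_placement=True)
    with tf.Session(graph=graph, config=config) as sess:
        return sess.run("sum:0")


def strip_devices(graph_def):
    """Option 2: return a copy of the GraphDef with every device field cleared."""
    stripped = tf.GraphDef()
    stripped.CopyFrom(graph_def)
    for node in stripped.node:
        node.device = ""
    return stripped


def run_stripped(graph):
    """Re-import the device-free GraphDef and run it with default placement."""
    cpu_graph = tf.Graph()
    with cpu_graph.as_default():
        tf.import_graph_def(strip_devices(graph.as_graph_def()), name="")
    with tf.Session(graph=cpu_graph) as sess:
        return sess.run("sum:0")


if __name__ == "__main__":
    g = build_gpu_pinned_graph()
    print(run_with_soft_placement(g))
    print(run_stripped(g))
```

Stripping the devices makes the graph itself portable, so no session option is needed at load time. If the model was saved with a `.meta` file, `tf.train.import_meta_graph(..., clear_devices=True)` performs the same clearing as part of the import.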

Also, in the future, StackOverflow is the right place to ask these questions.
